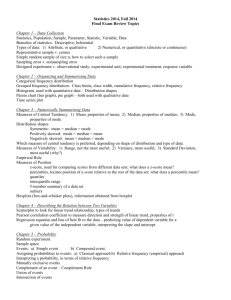

2002-09-03: Statistics Review I

Lecture 3: Statistics Review I

Date: 9/3/02

Distributions

Likelihood

Hypothesis tests

Sources of Variation

Definition: Sampling variation results because we only sample a fraction of the full population (e.g. the mapping population).

Definition: There is often substantial experimental error in the laboratory procedures used to make measurements.

Sometimes this error is systematic.

Parameters vs. Estimates

Definition: The population is the complete collection of all individuals or things you wish to make inferences about. Statistics calculated on populations are parameters.

Definition: The sample is a subset of the population on which you make measurements. Statistics calculated on samples are estimates.

Types of Data

Definition: Usually the data is discrete, meaning it can take on one of countably many different values.

Definition: Many complex and economically valuable traits are continuous. Such traits are quantitative and the random variables associated with them are continuous (QTL).

Random

We are concerned with the outcome of random experiments.

production of gametes

union of gametes (fertilization)

formation of chiasmata and recombination events

Set Theory I

Set theory underlies probability.

Definition: A set is a collection of objects.

Definition: An element is an object in a set.

Notation: $s \in S$ “s is an element in S”

Definition: If A and B are sets, then A is a subset of B if and only if $s \in A$ implies $s \in B$.

Notation: $A \subseteq B$ “A is a subset of B”

Set Theory II

Definition: Two sets A and B are equal if and only if $A \subseteq B$ and $B \subseteq A$. We write A = B.

Definition: The universal set is the superset of all other sets, i.e. all other sets are included within it. Often represented as $\Omega$.

Definition: The empty set contains no elements and is denoted as $\emptyset$.

Sample Space & Event

Definition: The sample space for a random experiment is the set $\Omega$ that includes all possible outcomes of the experiment.

Definition: An event is a set of possible outcomes of the experiment. An event E is said to happen if any one of the outcomes in E occurs.

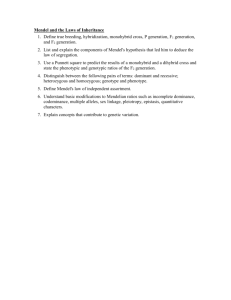

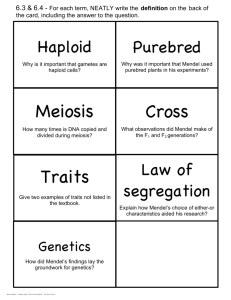

Example: Mendel I

Mendel took inbred lines of smooth AA and wrinkled BB peas and crossed them to make the F1 generation and again to make the F2 generation.

Smooth A is dominant to B.

The random experiment is the random production of gametes and fertilization to produce peas.

The sample space of genotypes for F2 is $\{AA, BB, AB\}$.

Random Variable

Definition: A function from set S to set T is a rule assigning to each $s \in S$ an element $t \in T$.

Definition: Given a random experiment on sample space $\Omega$, a function from $\Omega$ to T is a random variable. We often write X, Y, or Z.

If we were very careful, we’d write X(s).

Simply, X is a measurement of interest on the outcome of a random experiment.

Example: Mendel II

Let X be the number of A alleles in a randomly chosen genotype. X is a random variable.

Sample space is $\Omega = \{0, 1, 2\}$.

Discrete Probability Distribution

Suppose X is a random variable with possible outcomes $\{x_1, x_2, \ldots, x_m\}$. Define the discrete probability distribution for random variable X as

$$p_X(x_i) = P(X = x_i)$$

with

$$0 \le p_X(x_i) \le 1, \qquad \sum_{i=1}^{m} p_X(x_i) = 1$$

Example: Mendel III

$$p_X(x) = \begin{cases} 0.25 & x = 0 \\ 0.50 & x = 1 \\ 0.25 & x = 2 \\ 0 & \text{otherwise} \end{cases}$$
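The Mendel III probabilities (0.25, 0.50, 0.25) can be checked by enumerating the random experiment directly. The sketch below assumes each F1 parent has genotype AB and passes on A or B with probability 1/2; the names `gametes` and `p_X` are illustrative only.

```python
from fractions import Fraction
from itertools import product

# Assumption: each F1 parent (genotype AB) transmits A or B with probability 1/2.
gametes = {"A": Fraction(1, 2), "B": Fraction(1, 2)}

# Enumerate every (maternal, paternal) gamete pair and accumulate P(X = x),
# where X counts the A alleles in the resulting F2 genotype.
p_X = {}
for (g1, p1), (g2, p2) in product(gametes.items(), repeat=2):
    x = (g1 == "A") + (g2 == "A")
    p_X[x] = p_X.get(x, Fraction(0)) + p1 * p2

for x in sorted(p_X):
    print(f"P(X = {x}) = {p_X[x]}")
```

Exact fractions are used so that the check that the probabilities sum to 1 is not blurred by rounding.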

Cumulative Distribution

The discrete cumulative distribution function is defined as

$$F(x_i) = P(X \le x_i) = \sum_{x \le x_i} P(X = x)$$

The continuous cumulative distribution function is defined as

$$F(x) = P(X \le x) = \int_{-\infty}^{x} f(u)\, du$$

Continuous Probability Distribution

$$f(x) = F'(x) = \frac{dF(x)}{dx}$$

f(x) is the continuous probability distribution. As in the discrete case,

$$\int_{-\infty}^{\infty} f(u)\, du = 1$$

Expectation and Variance

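$$E(X) = \begin{cases} \sum_{i} x_i\, p(x_i) & \text{for discrete random variable} \\ \int_{-\infty}^{\infty} u\, f(u)\, du & \text{for continuous random variable} \end{cases}$$

$$\mathrm{Var}(X) = \begin{cases} \sum_{i} \left(x_i - E(X)\right)^2 p(x_i) & \text{for discrete random variable} \\ \int_{-\infty}^{\infty} \left(u - E(X)\right)^2 f(u)\, du & \text{for continuous random variable} \end{cases}$$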

Moments and MGF

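Definition: The r-th moment of X is $E(X^r)$.

Definition: The moment generating function is defined as $E(e^{tX})$.

$$\mathrm{mgf}(t) = E(e^{tX}) = \begin{cases} \sum_{i} e^{t x_i}\, p(x_i) & \text{for discrete random variable} \\ \int_{-\infty}^{\infty} e^{tu} f(u)\, du & \text{for continuous random variable} \end{cases}$$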

Example: Mendel IV

Define the random variable Z as follows:

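$$Z = \begin{cases} 0 & \text{if seed is smooth} \\ 1 & \text{if seed is wrinkled} \end{cases}$$

If we hypothesize that smooth dominates wrinkled in a single-locus model, then the corresponding probability model is given by:

$$P(Z = 0) = \frac{3}{4}, \qquad P(Z = 1) = \frac{1}{4}$$

Example: Mendel V

$$E(Z) = \frac{3}{4} \cdot 0 + \frac{1}{4} \cdot 1 = \frac{1}{4}$$

$$\mathrm{Var}(Z) = \left(0 - \frac{1}{4}\right)^2 \frac{3}{4} + \left(1 - \frac{1}{4}\right)^2 \frac{1}{4} = \frac{3}{16}$$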
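As a quick check of the Mendel V arithmetic, the discrete formulas for expectation and variance can be applied to P(Z = 0) = 3/4 and P(Z = 1) = 1/4. This is a minimal sketch; the helper names `expectation` and `variance` are illustrative.

```python
from fractions import Fraction

def expectation(pmf):
    """E(X) = sum of x * p(x) over a discrete pmf given as {value: probability}."""
    return sum(x * p for x, p in pmf.items())

def variance(pmf):
    """Var(X) = sum of (x - E(X))^2 * p(x) over a discrete pmf."""
    mu = expectation(pmf)
    return sum((x - mu) ** 2 * p for x, p in pmf.items())

# Probability model for Z: 0 if the seed is smooth, 1 if it is wrinkled.
p_Z = {0: Fraction(3, 4), 1: Fraction(1, 4)}

print("E(Z)   =", expectation(p_Z))
print("Var(Z) =", variance(p_Z))
```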

Joint and Marginal Cumulative Distributions

Definition: Let X and Y be two random variables. Then the joint cumulative distribution is

$$F(x, y) = P(X \le x, Y \le y)$$

Definition: The marginal cumulative distribution is

$$F_X(x) = \begin{cases} \sum_{y} P(X \le x, Y = y) & \text{for discrete random variables} \\ \int_{-\infty}^{x} \int_{-\infty}^{\infty} f(u, v)\, dv\, du & \text{for continuous random variables} \end{cases}$$

Joint Distribution

Definition: The joint distribution is

$$p(x, y) = P(X = x, Y = y)$$

As before, the sum or integral over the sample space equals 1.

Conditional Distribution

Definition: The conditional distribution of X given that Y = y is

$$p(x \mid y) = P(X = x \mid Y = y) = \frac{P(X = x, Y = y)}{P(Y = y)}$$

Lemma: If X and Y are independent, then p ( x | y )= p ( x ), p ( y | x )= p ( y ), and p ( x , y )= p ( x ) p ( y ).

Example: Mendel VI

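With Z = 0 denoting a smooth seed, as defined in Mendel IV:

$$P(\text{homozygous} \mid \text{smooth seed}) = P(X = 2 \mid Z = 0) = \frac{P(X = 2, Z = 0)}{P(Z = 0)} = \frac{1/4}{3/4} = \frac{1}{3}$$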
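The same conditional probability can be obtained by tabulating the joint distribution of (X, Z) over the F2 genotypes. The sketch below assumes the Mendel IV coding (Z = 0 for smooth, Z = 1 for wrinkled) and the genotype probabilities 1/4, 1/2, 1/4; `joint` and `conditional` are illustrative names.

```python
from fractions import Fraction

# Joint distribution P(X = x, Z = z) over F2 genotypes:
#   AA -> X = 2, smooth (Z = 0);  AB -> X = 1, smooth (Z = 0);  BB -> X = 0, wrinkled (Z = 1)
joint = {
    (2, 0): Fraction(1, 4),
    (1, 0): Fraction(1, 2),
    (0, 1): Fraction(1, 4),
}

def conditional(joint, x, z):
    """P(X = x | Z = z) = P(X = x, Z = z) / P(Z = z)."""
    p_z = sum(p for (_, zi), p in joint.items() if zi == z)
    return joint.get((x, z), Fraction(0)) / p_z

print("P(X = 2 | Z = 0) =", conditional(joint, 2, 0))
```

Because X and Z are not independent here, the conditional probability 1/3 differs from the marginal P(X = 2) = 1/4.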

Binomial Distribution

Suppose there is a random experiment with two possible outcomes, which we call “success” and “failure”. Suppose each experiment has a constant probability p of success and n experiments of this type are performed independently. Let X be the random variable that counts the total number of successes. Then $X \sim \mathrm{Bin}(n, p)$.

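Properties of Binomial Distribution

$$f(x; n, p) = P(X = x \mid n, p) = \binom{n}{x} p^x (1 - p)^{n - x}$$

$$E(X) = np$$

$$\mathrm{Var}(X) = np(1 - p)$$

$$\mathrm{mgf}(t) = \sum_{x=0}^{n} e^{tx} \binom{n}{x} p^x (1 - p)^{n - x} = \left(1 - p + p e^{t}\right)^n$$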
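The binomial properties can be verified numerically by summing over the probability distribution. This is a sketch with arbitrarily chosen values n = 10, p = 0.25, and t = 0.3; `binom_pmf` is an illustrative helper, not a library function.

```python
from math import comb, exp

def binom_pmf(x, n, p):
    """P(X = x | n, p) = C(n, x) p^x (1 - p)^(n - x)."""
    return comb(n, x) * p**x * (1 - p) ** (n - x)

n, p, t = 10, 0.25, 0.3  # arbitrary illustrative values
pmf = [binom_pmf(x, n, p) for x in range(n + 1)]

mean = sum(x * q for x, q in enumerate(pmf))
var = sum((x - mean) ** 2 * q for x, q in enumerate(pmf))
mgf_sum = sum(exp(t * x) * q for x, q in enumerate(pmf))
mgf_closed = (1 - p + p * exp(t)) ** n

print(f"total probability = {sum(pmf):.6f}")
print(f"mean = {mean:.6f}  (np = {n * p})")
print(f"var  = {var:.6f}  (np(1-p) = {n * p * (1 - p)})")
print(f"mgf  = {mgf_sum:.6f}  (closed form = {mgf_closed:.6f})")
```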

Examples: Binomial Distribution

recombinant fraction between two loci: count the number of recombinant gametes in n sampled.

phenotype in Mendel’s F2 cross: count the number of smooth peas in F2.

Multinomial Distribution

$$M(n, p_1, p_2, \ldots, p_m)$$

Suppose you consider genotype in Mendel’s F2 cross, or a 3-point cross.

Definition: Suppose there are m possible outcomes and the random variables $X_1, X_2, \ldots, X_m$ count the number of times each outcome is observed. Then,

$$P(X_1 = x_1, X_2 = x_2, \ldots, X_m = x_m) = \frac{n!}{x_1!\, x_2! \cdots x_m!}\; p_1^{x_1} p_2^{x_2} \cdots p_m^{x_m}$$
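To make the multinomial formula concrete, the sketch below evaluates it for F2 genotype counts, assuming genotype probabilities (1/4, 1/2, 1/4) for (AA, AB, BB). The counts (1, 2, 1) in a sample of four peas are hypothetical, and `multinomial_pmf` is an illustrative helper.

```python
from math import factorial

def multinomial_pmf(counts, probs):
    """P(X_1 = x_1, ..., X_m = x_m) = n! / (x_1! ... x_m!) * prod(p_i ^ x_i)."""
    n = sum(counts)
    coef = factorial(n)
    for x in counts:
        coef //= factorial(x)  # exact at every step: partial quotients are integers
    prob = float(coef)
    for x, p in zip(counts, probs):
        prob *= p**x
    return prob

# Hypothetical sample of n = 4 F2 peas: 1 AA, 2 AB, 1 BB.
value = multinomial_pmf((1, 2, 1), (0.25, 0.5, 0.25))
print(f"P(1 AA, 2 AB, 1 BB) = {value}")
```

With m = 2 the same function reduces to the binomial probability.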

Poisson Distribution

Consider the Binomial distribution when p is small and n is large, but $np = \lambda$ is constant.

Then,

$$\binom{n}{x} p^x (1 - p)^{n - x} \to \frac{e^{-\lambda} \lambda^x}{x!}$$

The distribution obtained is the Poisson Distribution.
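The limit can be observed numerically by holding np fixed and letting n grow. The sketch below uses an arbitrary value np = 2 and compares the two probability functions for x = 0, ..., 10; the helper names are illustrative.

```python
from math import comb, exp, factorial

def binom_pmf(x, n, p):
    return comb(n, x) * p**x * (1 - p) ** (n - x)

def poisson_pmf(x, lam):
    return exp(-lam) * lam**x / factorial(x)

def max_gap(n, lam, x_max=10):
    """Largest absolute difference between Bin(n, lam/n) and Poisson(lam) for x <= x_max."""
    p = lam / n
    return max(abs(binom_pmf(x, n, p) - poisson_pmf(x, lam)) for x in range(x_max + 1))

lam = 2.0  # arbitrary illustrative value of np
gaps = {n: max_gap(n, lam) for n in (10, 100, 1000, 10000)}
for n, gap in gaps.items():
    print(f"n = {n:>5}: max |binomial - Poisson| = {gap:.2e}")
```

The gap shrinks as n increases, which is the sense in which the binomial converges to the Poisson.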

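Properties of Poisson Distribution

$$f(x; \lambda) = \frac{e^{-\lambda} \lambda^x}{x!}$$

$$E(X) = \lambda$$

$$\mathrm{Var}(X) = \lambda$$

$$\mathrm{mgf}(t) = \sum_{x=0}^{\infty} e^{tx}\, \frac{e^{-\lambda} \lambda^x}{x!} = e^{\lambda (e^{t} - 1)}$$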

Normal Distribution

Confidence intervals for recombinant fraction can be estimated using the Normal distribution.

$$N(\mu, \sigma^2)$$

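Properties of Normal Distribution

$$f(x; \mu, \sigma^2) = \frac{1}{\sqrt{2 \pi \sigma^2}}\, e^{-\frac{(x - \mu)^2}{2 \sigma^2}}$$

$$E(X) = \mu$$

$$\mathrm{Var}(X) = \sigma^2$$

$$\mathrm{mgf}(t) = e^{\mu t + \frac{\sigma^2 t^2}{2}}$$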
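One common way to use the Normal distribution for a confidence interval on the recombinant fraction is the large-sample (Wald) interval: estimate ± z × standard error. The sketch below assumes that approach, which the slides do not spell out, and uses hypothetical counts (18 recombinants among 100 gametes); `wald_interval` is an illustrative name.

```python
from math import sqrt

def wald_interval(x, n, z=1.96):
    """Approximate 95% interval for a binomial proportion via the Normal distribution.

    Assumption: n is large enough for the estimate x/n to be roughly Normal
    with variance r(1 - r)/n.
    """
    r_hat = x / n
    se = sqrt(r_hat * (1 - r_hat) / n)
    return r_hat - z * se, r_hat + z * se

# Hypothetical data: 18 recombinant gametes out of 100 sampled.
lower, upper = wald_interval(18, 100)
print(f"estimate = {18 / 100:.3f}, approx. 95% CI = ({lower:.4f}, {upper:.4f})")
```

The approximation deteriorates when the estimate is close to 0 or when n is small.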

Chi-Square Distribution

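Many hypothesis tests in statistical genetics use the chi-square distribution.

$$f(x; k) = \frac{1}{2^{k/2}\, \Gamma(k/2)}\, x^{k/2 - 1} e^{-x/2}$$

$$E(X) = k$$

$$\mathrm{Var}(X) = 2k$$

$$\mathrm{mgf}(t) = \left(\frac{1}{1 - 2t}\right)^{k/2} \quad \text{for } t < 0.5$$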

Likelihood I

Likelihoods are used frequently with genetic data because they handle the complexities of genetic models well.

Let $\theta$ be a parameter or vector of parameters that affect the random variable X, e.g. $\theta = (\mu, \sigma)$ for the normal distribution.

Likelihood II

Then, we can write a likelihood

$$L(\theta) = L(\theta \mid x_1, \ldots, x_n) = \prod_{i=1}^{n} P(x_i \mid \theta)$$

where we have observed an independent sample of size n, namely $x_1, x_2, \ldots, x_n$, and conditioned on the parameter $\theta$.

Normally, $\theta$ is not known to us. To find the $\theta$ that best fits the data, we maximize $L(\theta)$ over all $\theta$.

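Example: Likelihood of Binomial

$$L(p) = P(X = x \mid n, p) = \binom{n}{x} p^x (1 - p)^{n - x}$$

$$l(p) = \log L(p) = \log \binom{n}{x} + x \log p + (n - x) \log (1 - p)$$

$$\frac{\partial l(p)}{\partial p} = \frac{x}{p} - \frac{n - x}{1 - p} = 0 \quad \Rightarrow \quad \hat{p} = \frac{x}{n}$$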
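Maximizing the binomial log likelihood numerically gives the same answer as the closed form x/n. The sketch below evaluates l(p) on a grid of candidate values; the counts (x = 18, n = 100) are hypothetical and the grid search stands in for any optimizer.

```python
from math import comb, log

def log_likelihood(p, x, n):
    """l(p) = log C(n, x) + x log p + (n - x) log(1 - p), for 0 < p < 1."""
    return log(comb(n, x)) + x * log(p) + (n - x) * log(1 - p)

# Hypothetical data: x = 18 successes in n = 100 trials.
x, n = 18, 100

# Evaluate l(p) on a grid over (0, 1) and keep the maximizer.
grid = [i / 1000 for i in range(1, 1000)]
p_hat_grid = max(grid, key=lambda p: log_likelihood(p, x, n))
p_hat_closed = x / n

print(f"grid maximizer = {p_hat_grid}, closed form x/n = {p_hat_closed}")
```

The grid resolution (0.001) limits the accuracy of the numerical maximizer; here x/n happens to lie on the grid.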

The Score

Definition: The first derivative of the log likelihood with respect to the parameter is the score .

For example, the score for the binomial parameter p is

$$\frac{\partial l(p)}{\partial p} = \frac{x}{p} - \frac{n - x}{1 - p}$$

Information Content

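Definition: The information content is

$$I(\theta) = E\left[\left(\frac{\partial l}{\partial \theta}\right)^2\right] = -E\left[\frac{\partial^2 l}{\partial \theta^2}\right]$$

If evaluated at the maximum likelihood estimate, then it is called expected information.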
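As a worked example, the information content for the binomial parameter p follows from differentiating the binomial score once more and taking the expectation with E(x) = np. This derivation is a sketch added for illustration and uses only the binomial log likelihood given earlier.

```latex
\begin{aligned}
\frac{\partial^2 l(p)}{\partial p^2}
  &= -\frac{x}{p^2} - \frac{n - x}{(1 - p)^2} \\
I(p) = -E\left[\frac{\partial^2 l(p)}{\partial p^2}\right]
  &= \frac{np}{p^2} + \frac{n - np}{(1 - p)^2}
   = \frac{n}{p} + \frac{n}{1 - p}
   = \frac{n}{p(1 - p)}
\end{aligned}
```

The information grows linearly with the sample size n, and its inverse, p(1 - p)/n, is the variance of the estimate x/n.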

Hypothesis Testing

Most experiments begin with a hypothesis.

This hypothesis must be converted into a statistical hypothesis.

Statistical hypotheses consist of a null hypothesis $H_0$ and an alternative hypothesis $H_A$.

Statistics are used to reject $H_0$ and accept $H_A$.

Sometimes we cannot reject $H_0$ and accept it instead.

Rejection Region I

Definition: Given a cumulative probability distribution function F(X) for the test statistic X, the critical region for a hypothesis test is the region of rejection, the area under the probability distribution where the observed test statistic X is unlikely to fall if $H_0$ is true.

The rejection region may or may not be symmetric.

Rejection Region II

[Figure: distribution under $H_0$, with a one-tailed rejection region of area $1 - F(x_c)$ and two-tailed rejection regions of area $F(x_l)$ or $1 - F(x_u)$.]

Acceptance Region

Region where $H_0$ cannot be rejected.

One-Tailed vs. Two-Tailed

Use a one-tailed test when $H_0$ is unidirectional, e.g. a one-sided inequality comparing $\theta$ with 0.5.

Use a two-tailed test when $H_0$ is bidirectional, e.g. $H_0: \theta = 0.5$.

Critical Values

Definition: Critical values are those values corresponding to the cut-off point between rejection and acceptance regions.

P-Value

Definition: The p-value is the probability of observing a sample outcome at least as extreme as the one observed, assuming $H_0$ is true.

$$p\text{-value} = 1 - F(X)$$

Reject $H_0$ when the p-value $< \alpha$.

The significance level of the test is $\alpha$.

Chi-Square Test: Goodness-of-Fit

$$\chi^2 = \sum_{i=1}^{a} \frac{(o_i - e_i)^2}{e_i}$$

Calculate $e_i$ under $H_0$.

$\chi^2$ is distributed as Chi-Square with $a - 1$ degrees of freedom. When expected values depend on k unknown parameters, then df $= a - 1 - k$.
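The goodness-of-fit statistic is simple to compute by hand or in code. The sketch below tests hypothetical F2 phenotype counts (80 smooth, 20 wrinkled) against the 3:1 ratio expected under $H_0$. For one degree of freedom the upper-tail chi-square probability equals `erfc(sqrt(x / 2))`, which avoids a statistics library; that shortcut holds for df = 1 only.

```python
from math import erfc, sqrt

def chi_square_gof(observed, expected):
    """Sum over classes of (o_i - e_i)^2 / e_i."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))

# Hypothetical counts: 80 smooth and 20 wrinkled peas.
observed = [80, 20]
n = sum(observed)
expected = [n * 0.75, n * 0.25]  # e_i under H0: 3:1 segregation

chi2 = chi_square_gof(observed, expected)
df = len(observed) - 1           # a - 1, no parameters estimated
p_value = erfc(sqrt(chi2 / 2))   # upper tail of chi-square, valid for df = 1 only

print(f"chi-square = {chi2:.4f}, df = {df}, p-value = {p_value:.4f}")
```

With these counts the p-value is well above 0.05, so the 3:1 hypothesis would not be rejected at that level.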

Chi-Square Test: Test of Independence

$$\chi^2 = \sum_{i=1}^{a} \sum_{j=1}^{b} \frac{(o_{ij} - e_{ij})^2}{e_{ij}}$$

$$e_{ij} = n\, p_{0i}\, p_{0j}$$

degrees of freedom = $(a - 1)(b - 1)$

Example: test for linkage
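A sketch of the independence test on a two-way table follows. It assumes the marginal proportions are estimated from the row and column totals, so that the expected count is (row total × column total) / n. The 2 × 2 counts are hypothetical, standing in for gamete classes at two loci.

```python
def chi_square_independence(table):
    """Chi-square statistic and degrees of freedom for an a x b table of counts."""
    n = sum(sum(row) for row in table)
    row_tot = [sum(row) for row in table]
    col_tot = [sum(col) for col in zip(*table)]
    stat = 0.0
    for i, row in enumerate(table):
        for j, o in enumerate(row):
            e = row_tot[i] * col_tot[j] / n  # expected count under independence
            stat += (o - e) ** 2 / e
    df = (len(table) - 1) * (len(table[0]) - 1)
    return stat, df

# Hypothetical counts: rows = allele at locus 1, columns = allele at locus 2.
table = [[40, 10],
         [10, 40]]
stat, df = chi_square_independence(table)
print(f"chi-square = {stat:.2f}, df = {df}")
```

A large statistic relative to the chi-square distribution with 1 degree of freedom indicates that the two loci do not assort independently.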

Likelihood Ratio Test

$$LR = \frac{L(\hat{\theta} \mid X)}{L(\theta_0 \mid X)}$$

$$G = 2 \log(LR)$$

$G \sim \chi^2$ with degrees of freedom equal to the difference in number of parameters.

LR: goodness-of-fit & independence test

goodness-of-fit

$$G = 2 \sum_{i=1}^{a} o_i \log \frac{o_i}{e_i}$$

independence test

$$G = 2 \sum_{i=1}^{a} \sum_{j=1}^{b} o_{ij} \log \frac{o_{ij}}{e_{ij}}$$
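The goodness-of-fit G statistic can be computed in the same way as the chi-square statistic. The sketch below uses hypothetical counts (80 smooth, 20 wrinkled) with expected values from a 3:1 ratio, and natural logarithms; classes with zero observed count are skipped because they contribute nothing to the sum.

```python
from math import log

def g_statistic(observed, expected):
    """G = 2 * sum of o_i * log(o_i / e_i), using natural logarithms."""
    return 2 * sum(o * log(o / e) for o, e in zip(observed, expected) if o > 0)

# Hypothetical counts and 3:1 expectations for n = 100 peas.
observed = [80, 20]
expected = [75.0, 25.0]

G = g_statistic(observed, expected)
print(f"G = {G:.4f}")
```

For the same counts the chi-square goodness-of-fit statistic is 4/3, so the two statistics are close, as expected when observed and expected counts do not differ greatly.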

Compare $\chi^2$ and Likelihood Ratio

Both give similar results.

LR is more powerful when there are unknown parameters involved.

LOD Score

LOD stands for log of odds .

It is commonly denoted by Z .

$$Z = \log_{10} \frac{L(\hat{\theta} \mid X)}{L(\theta_0 \mid X)}$$

The interpretation is that $H_A$ is $10^Z$ times more likely than $H_0$. The p-values obtained by the LR statistic for LOD score Z are approximately $10^{-Z}$.
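For a binomial count of recombinants, the LOD score compares the likelihood at the estimated recombinant fraction with the likelihood at a null value. The sketch below assumes a null value of 0.5 (no linkage) and hypothetical counts (18 recombinants among 100 gametes). The binomial coefficient cancels in the ratio, and the relation G = 2 ln(10) Z holds when G is computed with natural logarithms.

```python
from math import log, log10

def lod_score(x, n, theta0=0.5):
    """log10 of L(theta_hat) / L(theta0) for x recombinants in n gametes (0 < x < n)."""
    theta_hat = x / n

    def log10_lik(theta):
        # The binomial coefficient is omitted because it cancels in the ratio.
        return x * log10(theta) + (n - x) * log10(1 - theta)

    return log10_lik(theta_hat) - log10_lik(theta0)

# Hypothetical data: 18 recombinant gametes out of 100 sampled.
Z = lod_score(18, 100)
G = 2 * log(10) * Z  # likelihood ratio statistic on the natural-log scale

print(f"LOD score Z = {Z:.3f}, G = {G:.3f}")
```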

Nonparametric Hypothesis Testing

What do you do when the test statistic does not follow some standard probability distribution?

Use an empirical distribution. Assume $H_0$ and resample (bootstrap, jackknife, or permutation) to generate the empirical $\mathrm{CDF}(x) = P(X \le x)$.
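A permutation test is one way to build such an empirical distribution. The sketch below assumes a two-group comparison with the absolute difference in means as the test statistic; under $H_0$ the group labels are exchangeable, so shuffling them generates the null distribution. The data, the statistic, and the name `permutation_p_value` are all illustrative.

```python
import random

def permutation_p_value(a, b, n_perm=2000, seed=0):
    """Empirical p-value for |mean(a) - mean(b)| under random relabeling of groups."""
    rng = random.Random(seed)
    observed = abs(sum(a) / len(a) - sum(b) / len(b))
    pooled = list(a) + list(b)
    count = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        pa, pb = pooled[:len(a)], pooled[len(a):]
        if abs(sum(pa) / len(pa) - sum(pb) / len(pb)) >= observed:
            count += 1
    # Add one to numerator and denominator so the estimate is never exactly zero.
    return (count + 1) / (n_perm + 1)

# Hypothetical trait values for two genotype groups.
p_far = permutation_p_value([1, 2, 3, 4, 5], [101, 102, 103, 104, 105])
p_same = permutation_p_value([1, 2, 3, 4], [1, 2, 3, 4])
print(f"well-separated groups: p = {p_far:.4f}")
print(f"identical groups:      p = {p_same:.4f}")
```

The number of permutations bounds the smallest p-value that can be reported, so more permutations are needed when testing at stringent significance levels.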