PPT - Computer Science and Engineering


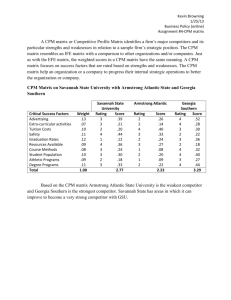

Sensitivity Analysis for Ungapped Markov Models of Evolution

David Fernández-Baca

Department of Computer Science

Iowa State University

(Joint work with Balaji Venkatachalam)

CPM '05

Motivation

• Alignment scoring schemes are often based on Markov models of evolution

• Optimum alignment depends on evolutionary distance

• Our goal: Understand how optimum alignments are affected by choice of evolutionary distance


Ungapped local alignments

An ungapped local alignment of sequences X and Y is a pair of equal-length substrings of X and Y

[Figure: sequences X and Y with the aligned substrings marked]

Only matches and mismatches; no gaps


Ungapped local alignments

A: 23 matches, 2 mismatches

B: 34 matches, 11 mismatches

P. Agarwal and D.J. States. Bayesian evolutionary distance. Journal of Computational Biology 3(1):1-17, 1996


Which alignment is better?

Score = α ∙ #matches + β ∙ #mismatches, with α > 0 and β < 0

[Figure: score(A) and score(B) plotted against β/α; the two lines cross at β/α = -11/9]

In practice, scoring schemes depend on

evolutionary distance
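The crossover value -11/9 can be checked directly from the match and mismatch counts of alignments A and B. The short derivation below writes the match weight as α and the mismatch weight as β; these symbol names are an assumption, since the slide only fixes their signs.

```latex
\begin{align*}
\mathrm{score}(A) &= 23\alpha + 2\beta, \qquad \mathrm{score}(B) = 34\alpha + 11\beta \\
\mathrm{score}(A) = \mathrm{score}(B)
  &\iff 11\alpha + 9\beta = 0
  \iff \frac{\beta}{\alpha} = -\frac{11}{9} \\
\mathrm{score}(B) > \mathrm{score}(A)
  &\iff \frac{\beta}{\alpha} > -\frac{11}{9} \qquad (\alpha > 0)
\end{align*}
```

So B wins when mismatches are penalized lightly relative to matches, and A wins when they are penalized heavily.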


Log-odds scoring

Let

qX = base frequency of nucleotide X

mXY(t) = Prob(X → Y mutation in t time units)

A be an alignment

X1 X2 X3 … Xn

Y1 Y2 Y3 … Yn

Then,

$$\text{Log-odds score of } A = \sum_{i=1}^{n} \log \frac{m_{X_i Y_i}(t)}{q_{X_i}\, q_{Y_i}}$$

Log-odds scoring

• Simplest model:

– mXX(t) = r(t) for all X

– mXY(t) = s(t) for all X ≠ Y

– qX = ¼ for all X

• Log-odds score of alignment:

α(t) ∙ #matches + β(t) ∙ #mismatches

where

α(t) = 4 + log r(t)

β(t) = 4 + log s(t)

(logarithms are base 2, so that log 16 = 4)
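The constant 4 follows from substituting the uniform base frequencies into the log-odds ratio. The derivation below is a sketch that assumes base-2 logarithms (the only base for which the constant is exactly 4) and uses α and β as names for the match and mismatch weights.

```latex
\begin{align*}
\alpha(t) &= \log_2 \frac{r(t)}{q_X\, q_X}
           = \log_2 \frac{r(t)}{\tfrac14 \cdot \tfrac14}
           = \log_2 16 + \log_2 r(t)
           = 4 + \log_2 r(t) \\
\beta(t)  &= \log_2 \frac{s(t)}{q_X\, q_Y}
           = 4 + \log_2 s(t) \\
\text{score}(A) &= \sum_{i:\,X_i = Y_i} \alpha(t) + \sum_{i:\,X_i \ne Y_i} \beta(t)
           = \alpha(t)\cdot\#\text{matches} + \beta(t)\cdot\#\text{mismatches}
\end{align*}
```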


Scores depend nonlinearly on

evolutionary distance


This talk

• An efficient algorithm to compute

optimum alignments for all evolutionary

distances

• Techniques

– Linearization

– Geometry

– Divide-and-conquer


Related Work

• Combinatorial/linear scoring schemes:

– Waterman, Eggert, and Lander 1992: Problem definition

– Gusfield, Balasubramanian, and Naor 1994: Bounds on number of optimality regions for pairwise alignment

– F-B, Seppäläinen, and Slutzki 2004: Generalization to multiple and phylogenetic alignment

• Sensitivity analysis for statistical models:

– P. Agarwal and D.J. States 1996

– L. Pachter and B. Sturmfels 2004a & b: connections between linear scoring and Markov models


A simple Markov model of evolution

• Sites evolve independently through mutation according to a Markov process

• For each site:

– Transition probability matrix:

M = [mij], i, j ∈ {A, C, T, G}

where

mij = Prob(i → j mutation in 1 time unit)

– Transition matrix for t time units is M(t)


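Jukes-Cantor transition probability matrix

$$M(t) = \begin{pmatrix} r(t) & s(t) & s(t) & s(t) \\ s(t) & r(t) & s(t) & s(t) \\ s(t) & s(t) & r(t) & s(t) \\ s(t) & s(t) & s(t) & r(t) \end{pmatrix}$$

where

$$r(t) = \tfrac{1}{4}\left(1 + 3e^{-4t}\right), \qquad s(t) = \tfrac{1}{4}\left(1 - e^{-4t}\right)$$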
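As a quick numerical check of the Jukes-Cantor model, the sketch below evaluates r(t) and s(t) and the corresponding log-odds weights. It assumes the forms r(t) = (1 + 3e^(-4t))/4 and s(t) = (1 - e^(-4t))/4 with the substitution rate normalized to 1, and base-2 logarithms; the function names `jc_probs` and `jc_scores` are illustrative only.

```python
import math


def jc_probs(t):
    """Return (r, s): probability of no change and of one specific change after time t.

    Assumes the rate-normalized Jukes-Cantor forms stated in the lead-in.
    """
    e = math.exp(-4.0 * t)
    return (1.0 + 3.0 * e) / 4.0, (1.0 - e) / 4.0


def jc_scores(t):
    """Return (alpha, beta) = (4 + log2 r(t), 4 + log2 s(t)).

    At t = 0 no mismatch is possible, so beta is minus infinity.
    """
    r, s = jc_probs(t)
    alpha = 4.0 + math.log2(r)
    beta = 4.0 + math.log2(s) if s > 0.0 else -math.inf
    return alpha, beta


if __name__ == "__main__":
    for t in (0.0, 0.05, 0.5, 5.0):
        r, s = jc_probs(t)
        alpha, beta = jc_scores(t)
        print(f"t={t:<5} r={r:.4f} s={s:.4f} row sum={r + 3 * s:.4f} "
              f"alpha={alpha:.3f} beta={beta:.3f}")
```

Each row of the matrix sums to r(t) + 3 s(t) = 1, and as t grows both probabilities approach 1/4, so both weights approach 2 and the ratio of mismatch weight to match weight approaches 1.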


α versus β

[Figure: the curve traced by α(t) = 4 + log r(t) and β(t) = 4 + log s(t), running from t = 0 to t = +∞]


Linearization

• Allow α and β to vary arbitrarily, ignoring that they

– are functions of t and

– must satisfy laws of probability

• Result is a linear parametric problem

Recall:

Score(A) = α ∙ #matches + β ∙ #mismatches


Theorem

Let n be the length of the shorter sequence. Then,

(i) The number of distinct optimal solutions over all values of α and β is O(n^{2/3}).

(ii) The parameter space decomposition looks like this:

[Figure: decomposition of the (α, β) parameter space into optimality regions]


Re-introducing distance

The α vs. β curve intersects every boundary line with slope in (-∞, +1]

The optimum solutions for t = 0 to +∞ are found by varying β/α from -∞ to 1

Non-linear problem in t reduces to a linear one-parameter problem in β/α
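To make the one-parameter reduction concrete: when the match weight α is positive, maximizing α ∙ #matches + β ∙ #mismatches is the same as maximizing #matches + ρ ∙ #mismatches with ρ = β/α. The sketch below is a brute-force illustration, not the talk's algorithm: it takes an explicit list of candidate alignments and reports which one is optimal on each interval of ρ in (-∞, 1]. Alignments A and B are the ones from the earlier example; candidate C and the function name `optimality_intervals` are hypothetical.

```python
from fractions import Fraction


def optimality_intervals(cands, hi=Fraction(1)):
    """Split (-inf, hi] into intervals of rho = beta/alpha with a single winner.

    cands: list of (name, matches, mismatches).
    Returns a list of (left, right, name); left is None for minus infinity.
    Brute force: tries every pairwise crossing point, so it is quadratic.
    """
    # Ratios at which two candidates have equal score: m1 + rho*x1 = m2 + rho*x2.
    points = set()
    for i, (_, m1, x1) in enumerate(cands):
        for _, m2, x2 in cands[i + 1:]:
            if x1 != x2:
                rho = Fraction(m2 - m1, x1 - x2)
                if rho < hi:
                    points.add(rho)

    bounds = [None] + sorted(points) + [hi]
    intervals = []
    for left, right in zip(bounds, bounds[1:]):
        # Evaluate every candidate at a point strictly inside the interval.
        sample = right - 1 if left is None else (left + right) / 2
        name = max(cands, key=lambda c: c[1] + sample * c[2])[0]
        if intervals and intervals[-1][2] == name:
            # Same winner as the previous interval: the crossing was not on the envelope.
            intervals[-1] = (intervals[-1][0], right, name)
        else:
            intervals.append((left, right, name))
    return intervals


if __name__ == "__main__":
    candidates = [("A", 23, 2), ("B", 34, 11), ("C", 10, 0)]  # C is hypothetical
    for left, right, name in optimality_intervals(candidates):
        lo = "-inf" if left is None else str(left)
        print(f"{name} is optimal for rho in ({lo}, {right})")
```

With these three candidates the winners are C, then A, then B as ρ increases, with A and B switching at ρ = -11/9.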


An algorithm

1. Start with a simple, but highly parallel, algorithm for fixed-parameter problem

2. Lift the fixed-parameter algorithm

• Lifted algorithm runs simultaneously for all parameter values in linearized problem

• Output: A decomposition of parameter space into optimality regions

3. Construct solution to original problem by finding the optimality regions intersected by the (α(t), β(t)) curve


A naïve dynamic programming algorithm

• Let C be the matrix where Cij = score of opt alignment ending at Xi and Yj

• Subdiagonals correspond to alignments

• Diagonals are independent of each other

– Process each diagonal separately

– Pick best answer over all diagonals

• Total time: O(nm)

[Figure: the matrix C, with sequences X (aattcaattcaatc . . .) and Y (caatttgtcacttttt . . .) along its axes]
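The fixed-parameter algorithm described above can be sketched as follows. The code assumes fixed numeric weights `alpha` (match) and `beta` (mismatch), allows the empty alignment with score 0, and walks each of the m + n - 1 diagonals once, keeping the best score of an alignment ending at the current cell. The function name `best_ungapped_local` is illustrative.

```python
def best_ungapped_local(x, y, alpha, beta):
    """Best ungapped local alignment score of strings x and y, in O(len(x) * len(y)).

    C[i][j] = max(C[i-1][j-1], 0) + w(x[i], y[j]) depends only on the previous
    cell of the same diagonal, so each diagonal is processed independently.
    """
    n, m = len(x), len(y)
    best = 0  # score of the empty alignment
    for d in range(-(n - 1), m):  # d = j - i identifies a diagonal
        i = max(0, -d)
        j = i + d
        ending_here = 0
        while i < n and j < m:
            w = alpha if x[i] == y[j] else beta
            ending_here = max(ending_here, 0) + w
            best = max(best, ending_here)
            i += 1
            j += 1
    return best


if __name__ == "__main__":
    print(best_ungapped_local("aattc", "caatt", alpha=1, beta=-1))
```

Only one running value per diagonal is stored, so the full matrix C never needs to be materialized.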

Divide and conquer for diagonals

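Split diagonal in half, solve each side recursively, and combine answers. E.g.:

[Figure: X and Y split into halves X(1), X(2) and Y(1), Y(2); sub-alignments shown within the first halves, within the second halves, and across both halves]

T(N) = 2 T(N/2) + O(1), where N = length of diagonal

T(N) = O(N), where T(N) = #subproblems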
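One standard way to get a constant-time combine step, which is an assumption here since the slide only shows a picture, is for each half to report four numbers: its total score, its best prefix, its best suffix, and its best segment. The sketch below applies this to the list of per-position weights along one diagonal (match weight where the characters agree, mismatch weight otherwise); the function name `summarize` is illustrative.

```python
def summarize(w, lo, hi):
    """Summarize w[lo:hi] as (total, best_prefix, best_suffix, best_segment).

    Empty prefixes, suffixes, and segments are allowed and score 0.
    Recurrence: T(N) = 2 T(N/2) + O(1), so T(N) = O(N).
    """
    if hi - lo == 1:
        v = w[lo]
        return v, max(v, 0), max(v, 0), max(v, 0)
    mid = (lo + hi) // 2
    lt, lp, ls, lb = summarize(w, lo, mid)
    rt, rp, rs, rb = summarize(w, mid, hi)
    return (
        lt + rt,               # total of both halves
        max(lp, lt + rp),      # prefix stays in the left half or extends into the right
        max(rs, rt + ls),      # suffix stays in the right half or extends into the left
        max(lb, rb, ls + rp),  # best segment: left only, right only, or across the split
    )


if __name__ == "__main__":
    diagonal = [1, 1, -1, 1, 1, 1, -1]  # +1 for a match, -1 for a mismatch
    print(summarize(diagonal, 0, len(diagonal)))
```

The three cases in the last component mirror the three situations in the figure: the best alignment lies in the first halves, in the second halves, or spans both.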


Lifting

• Run naïve DP algorithm for all parameter values by manipulating piecewise linear functions instead of numbers:

– “+” → “+” for piecewise linear functions

– “max” → “max” of piecewise linear functions


Adding piecewise linear functions

[Figure: piecewise linear functions f and g and their sum f+g]

Time = O(total number of segments)


Computing the maximum of piecewise linear functions

[Figure: piecewise linear functions f and g and their pointwise maximum max(f, g)]

Time = O(total number of segments)
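The two lifted operations can be illustrated on a simple representation: a piecewise linear function on a common interval is stored as a list of (x, y) vertices sorted by x. This is a minimal sketch under that assumed representation, with illustrative names `evaluate`, `pl_add`, and `pl_max`. For clarity it sorts the breakpoints and looks values up by bisection, so it runs in O(k log k) for k segments rather than the linear-time merge quoted on the slides.

```python
from bisect import bisect_right


def evaluate(f, x):
    """Value at x of the piecewise linear function with vertex list f."""
    xs = [p[0] for p in f]
    i = min(max(bisect_right(xs, x) - 1, 0), len(f) - 2)
    (x0, y0), (x1, y1) = f[i], f[i + 1]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def pl_add(f, g):
    """Vertices of f + g: the union of both breakpoint sets."""
    xs = sorted({x for x, _ in f} | {x for x, _ in g})
    return [(x, evaluate(f, x) + evaluate(g, x)) for x in xs]


def pl_max(f, g):
    """Vertices of max(f, g): both breakpoint sets plus the crossing points."""
    xs = sorted({x for x, _ in f} | {x for x, _ in g})
    out = []
    for a, b in zip(xs, xs[1:]):
        fa, ga = evaluate(f, a), evaluate(g, a)
        fb, gb = evaluate(f, b), evaluate(g, b)
        out.append((a, max(fa, ga)))
        da, db = fa - ga, fb - gb
        if da * db < 0:  # f and g cross strictly inside (a, b)
            x = a + (b - a) * da / (da - db)
            out.append((x, evaluate(f, x)))
    last = xs[-1]
    out.append((last, max(evaluate(f, last), evaluate(g, last))))
    return out


if __name__ == "__main__":
    f = [(0, 0), (2, 2)]  # y = x on [0, 2]
    g = [(0, 1), (2, 1)]  # y = 1 on [0, 2]
    print(pl_add(f, g))
    print(pl_max(f, g))
```

Both functions are linear between consecutive merged breakpoints, so they can cross at most once there, which is why a single sign test per interval suffices.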


Analysis

• Processing a diagonal:

– T(n) = 2 T(n/2) + O(n^{2/3}) ⇒ T(n) = O(n), where O(n^{2/3}) = #(optimum solutions for diagonal)

• Merging score functions for diagonals:

– O(n^{2/3}) line segments per function, m+n-1 diagonals

– Total time: O(mn + m n^{2/3} lg m)
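The linear bound for a single diagonal follows from unrolling the recurrence: the number of subproblems doubles at each level faster than the combine cost shrinks, so the leaves dominate. A short derivation, with c standing for the constant hidden in the O(n^{2/3}) combine cost:

```latex
\begin{align*}
T(n) &= 2\,T(n/2) + c\,n^{2/3} \\
     &\le \sum_{k=0}^{\log_2 n} 2^{k}\, c \left(\frac{n}{2^{k}}\right)^{2/3} + O(n)
      = c\,n^{2/3} \sum_{k=0}^{\log_2 n} 2^{k/3} + O(n) \\
     &= O\!\left(n^{2/3} \cdot n^{1/3}\right) + O(n) = O(n)
\end{align*}
```

The geometric sum is dominated by its last term, 2^{(\log_2 n)/3} = n^{1/3}, which gives the O(n) total.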


Further Results (1): Parametric ancestral reconstruction

• Given a phylogeny, find most likely ancestors

• Sensitive to edge lengths

• Result: O(n) algorithm for uniform model (all edge lengths equal)

[Figure: example phylogeny with nodes labeled AAC, AAT, ACT, AAT, AGC]

Further Results (2)

• Bounds on number of regions for gapped alignment (indels are allowed)

– Lead to algorithms, but not as efficient as ungapped case


Open Problems

• Tight bounds on size of parameter space decomposition

• Evolutionary trees with different branch lengths

• Efficient sensitivity analysis for gapped models

• Evaluation of sensitivity to changes in structure and parameters

– Useful in branch-swapping


Thanks to

• National Science Foundation

– CCR-9988348

– EF-0334832
