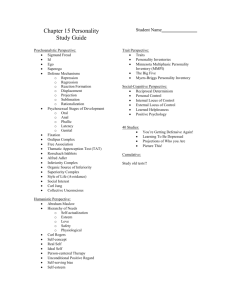

Lecture 1
