Chapters 3 and 4 Notes

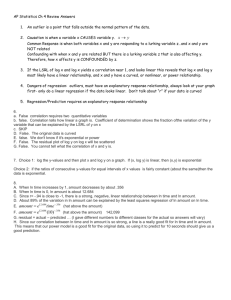

AP Statistics Chapters 3 & 4
Measuring Relationships Between 2 Variables

Basic Terms
– Response variable: measures an outcome of a study.
– Explanatory variable: helps explain or influences changes in a response variable.
– Scatterplot: shows the relationship between two quantitative variables measured on the same individuals (one variable on each axis).

We are examining relationships and associations. DO NOT ASSUME that the explanatory variable causes a change in the response variable.

Interpreting a Scatterplot
Just like with univariate data, we are looking for an overall pattern and for deviations from that pattern.
Overall pattern
– Direction: negative or positive association
– Form: curved or linear? Are there clusters?
– Strength: how closely do the points follow a clear form?
Deviations
– Outliers: individual values that fall outside of the overall pattern.

Correlation
Correlation (r) measures the direction and strength of the linear relationship between two quantitative variables.
– Does not distinguish between explanatory and response variables (i.e., r would stay the same if you switched the x and y axes).
– r has no units of measurement (correlation will not change if you change the units for either of the two variables).
– Positive r indicates a positive association: as one variable increases, so does the other.
– Negative r indicates a negative association: as one variable increases, the other decreases.
– r is always between -1 and 1.
  – If r is close to zero, then the linear relationship is weak.
  – If r is close to 1 or -1, then the linear relationship is strong.

CORRELATION DOES NOT IMPLY CAUSATION!!!

\[ r = \frac{1}{n-1}\sum z_x z_y = \frac{1}{n-1}\sum \left(\frac{x_i - \bar{x}}{s_x}\right)\left(\frac{y_i - \bar{y}}{s_y}\right) \]

Regression line
A line that describes how a response variable, y, changes as an explanatory variable, x, changes. It is often used to predict y given x.
– y = a + bx
– b is the slope: the amount by which y changes on average when x increases by one unit.
– a is the y-intercept: the predicted value of y when x = 0.

Making predictions with the regression line
– Interpolation: estimating predicted values between known values. (Good)
– Extrapolation: predicting values outside the range of values used to make the regression line. (Bad)

Least-Squares Regression Line
The line that makes the sum of the squared vertical distances between the data points and the line as small as possible.
– \(\hat{y} = a + bx\) (ŷ is read "y-hat")
– Slope: \(b = r\,\frac{s_y}{s_x}\)
– Passes through the point \((\bar{x}, \bar{y})\), so \(a = \bar{y} - b\bar{x}\)

Example
An SRS of 50 families has provided the following statistics:
– Number of children in the family: mean 2.1, standard deviation 1.4
– Annual gross income: mean $34,250, standard deviation $10,540
– r = 0.75
Write the equation for the least-squares regression line that can be used to predict gross income based on number of children.
– Be sure to define your variables.

Residuals
Residual: the difference between an observed value of the response variable and the value predicted by the regression line.
– Residual = observed y - predicted y = y - ŷ
Standard deviation of the residuals:

\[ s = \sqrt{\frac{\sum \text{residuals}^2}{n-2}} \]

How well does the line fit the data?
To answer this question, you must look at two things.
1. Residual plot: a scatterplot of the regression residuals plotted against (usually) the explanatory variable.
– If the regression line represents the pattern of the data well, then:
  – The residual plot will show no pattern.
  – The residuals will be relatively small.
2. Coefficient of determination: \(r^2\)
– The fraction (%) of the variation in the values of y that is explained by the least-squares regression line of y on x.
– Template: "(\(r^2\) as a percent)% of the variation in (y-variable) is explained by the least-squares regression line with (x-variable)."

Other Considerations
Outlier: an observation that lies outside the overall pattern (may or may not have a large residual).
Influential point: an observation which, if removed, would greatly change the result of a statistical calculation (for example, the slope of the regression line or the correlation).
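
The slope and intercept formulas above, and the idea of an influential point, can be sketched in Python. This is a minimal illustration, not part of the original notes: the function names `correlation`, `lsrl_from_summary`, and `lsrl` are made up for this sketch, and the small data set in the second half is hypothetical. Only the family summary statistics come from the example in the notes.

```python
import statistics


def correlation(xs, ys):
    """r = (1 / (n - 1)) * sum of z_x * z_y, using sample standard deviations."""
    n = len(xs)
    x_bar, y_bar = statistics.mean(xs), statistics.mean(ys)
    s_x, s_y = statistics.stdev(xs), statistics.stdev(ys)
    return sum(((x - x_bar) / s_x) * ((y - y_bar) / s_y)
               for x, y in zip(xs, ys)) / (n - 1)


def lsrl_from_summary(x_bar, s_x, y_bar, s_y, r):
    """Return (a, b) for y-hat = a + b*x from summary statistics."""
    b = r * s_y / s_x        # slope: b = r * (s_y / s_x)
    a = y_bar - b * x_bar    # the line passes through (x-bar, y-bar)
    return a, b


def lsrl(xs, ys):
    """Return (a, b) for the least-squares line fitted to raw data."""
    return lsrl_from_summary(statistics.mean(xs), statistics.stdev(xs),
                             statistics.mean(ys), statistics.stdev(ys),
                             correlation(xs, ys))


# Family example from the notes: x = number of children, y = annual gross income.
a_income, b_income = lsrl_from_summary(2.1, 1.4, 34250, 10540, 0.75)
print(f"income-hat = {a_income:.2f} + {b_income:.2f} * children")

# Hypothetical data (not from the notes): the last point sits far from the rest.
xs = [1, 2, 3, 4, 5, 10]
ys = [2.1, 3.9, 6.2, 8.1, 9.8, 2.0]

a_all, b_all = lsrl(xs, ys)
a_trim, b_trim = lsrl(xs[:-1], ys[:-1])
print(f"with the point:    y-hat = {a_all:.2f} + {b_all:.2f}x")
print(f"without the point: y-hat = {a_trim:.2f} + {b_trim:.2f}x")
```

For the family example this gives ŷ = 22392.50 + 5646.43x, where x is the number of children and ŷ is the predicted annual gross income in dollars. In the hypothetical data, dropping the last point changes the slope from negative to positive, which is the behavior the definition of an influential point describes.
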
Lurking variable: an additional variable that may influence the relationship between the explanatory and response variables.

Correlation v. Causation
The goal of a study or experiment is often to establish causation: a direct cause-and-effect link.
– Lurking variables make establishing causation difficult.
Common response: the observed association between two variables, x and y, is explained by a lurking variable, z. Both x and y change in response to changes in z.
Confounding: occurs when the effects of two or more variables on a response variable cannot be distinguished from each other (often occurs in an observational study).

Establishing Causation w/o an Experiment
The case for causation is stronger when the following hold:
1. The association is strong.
2. The association is consistent.
3. Larger values of the explanatory variable are associated with stronger responses.
4. The alleged cause precedes the effect in time.
5. The alleged cause is plausible.

Non-linear relationships
If data follow a non-linear form, we can sometimes transform the data to become linear. We can then perform the same analyses that we do for linear data (regression line, correlation, \(r^2\), residual plot).
What are the most common non-linear models for bivariate data?

Transforming non-linear data
Exponential model
– \(y = ab^x\)
– For each unit increase in x, y is multiplied by a constant, b.
– To transform to linearity, plot log y against x on the coordinate plane. Then perform a linear regression.
– log y = a + bx OR ln y = a + bx (here a and b are the intercept and slope of the fitted line; taking logs of \(y = ab^x\) gives \(\log y = \log a + (\log b)x\), so they estimate \(\log a\) and \(\log b\) of the model)

Power model
– \(y = ax^b\)
– Often used when trying to use a one-dimensional variable (e.g. length) to predict a multi-dimensional variable (e.g. area, volume, weight).
– To transform to linearity, plot log y against log x on the coordinate plane. Then perform a linear regression.
– log y = a + b(log x) OR ln y = a + b(ln x) (taking logs of \(y = ax^b\) gives \(\log y = \log a + b\log x\), so the fitted slope estimates the exponent b and the fitted intercept estimates \(\log a\))

Analyzing the relationship between categorical variables
A two-way table is used to compare categorical variables.
Marginal distribution: the distribution of one of the variables by itself, read from the row or column totals of the table.
Conditional distribution: the distribution of the response variable for each value of the explanatory variable.
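
The two definitions above can be illustrated with a small two-way table. The counts, category names, and variable names below are hypothetical and chosen only for this sketch; rows are taken as the explanatory variable and columns as the response variable.

```python
# Hypothetical counts (not from the notes): rows are the explanatory variable
# (grade level), columns are the response variable (owns a car?).
table = {
    "Freshman": {"Yes": 10, "No": 40},
    "Senior": {"Yes": 30, "No": 20},
}
responses = ["Yes", "No"]

grand_total = sum(sum(row.values()) for row in table.values())

# Marginal distribution of the response: column totals divided by the grand total.
marginal = {
    resp: sum(row[resp] for row in table.values()) / grand_total
    for resp in responses
}

# Conditional distribution of the response within each value of the
# explanatory variable: each row's counts divided by that row's total.
conditional = {
    group: {resp: row[resp] / sum(row.values()) for resp in responses}
    for group, row in table.items()
}

print("marginal:", marginal)
print("conditional:", conditional)
```

Comparing the conditional distributions across rows (here, the proportion answering "Yes" among freshmen versus seniors) is what reveals an association; the marginal distribution alone says nothing about the relationship between the two variables.
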