Weighted Finite-State Transducer


Synchronous Grammars and

Tree Automata

David Chiang and Kevin Knight

USC/Information Sciences Institute

Viterbi School of Engineering

University of Southern California

Why Worry About

Formal Language Theory?

• They already figured out most of the key things

back in the 1960s & 1970s

• Lucky us!

– Helps clarify our thinking about applications
– Helps modeling & algorithm development
– Helps promote code re-use
– Opportunity to develop novel, efficient algorithms and data structures that bring ancient theorems to life

• Formal grammar and automata theory are the

daughters of natural language processing – let’s

keep in touch

[Chomsky 57]

• Distinguish grammatical English from

ungrammatical English:

– John thinks Sara hit the boy
– * The hit thinks Sara John boy
– John thinks the boy was hit by Sara
– Who does John think Sara hit?
– John thinks Sara hit the boy and the girl
– * Who does John think Sara hit the boy and?
– John thinks Sara hit the boy with the bat
– What does John think Sara hit the boy with?
– Colorless green ideas sleep furiously.
– * Green sleep furiously ideas colorless.

This Research Program has

Contributed Powerful Ideas

Context-free grammar

Formal

language

hierarchy

Compiler

technology

This Research Program has

NLP Applications

Alternative speech recognition or translation outputs:

Green = how grammatical

Blue = how sensible

Pick this one!

This Research Program

Has Had Wide Reach

• What makes a legal

RNA sequence?

• What is the structure of a

given RNA sequence?

Yasubumi Sakakibara, Michael Brown, Richard Hughey, I. Saira Mian, Kimmen Sjolander, Rebecca C. Underwood and David Haussler. Stochastic Context-Free Grammars for tRNA Modeling. Nucleic Acids Research, 22(23):5112-5120, 1994.

This Research Program is

Really Unfinished!

Type in your English sentence here:

Is this grammatical?

Is this sensible?

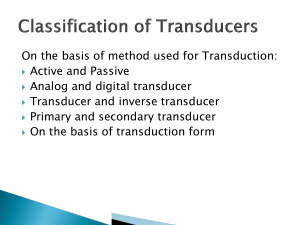

Acceptors and Transformers

• Chomsky’s program is about which utterances are

acceptable

• Other research programs are aimed at

transforming utterances

– Translate an English sentence into Japanese…
– Transform a speech waveform into transcribed words…
– Compress a sentence, summarize a text…
– Transform a syntactic analysis into a semantic analysis…
– Generate a text from a semantic representation…

Strings and Trees

• Early on, trees were realized to be a useful tool in describing

what is grammatical

– A sentence is a noun phrase (NP) followed by a verb phrase (VP)

– A noun phrase is a determiner (DT) followed by a noun (NN)

– A noun phrase is a noun phrase (NP) followed by a prepositional

phrase (PP)

– A PP is a preposition (IN) followed by an NP

[Figure: tree S(NP(NP, PP(IN, NP)), VP)]

• A string is acceptable if it has an acceptable tree …

• Transformations may take place at the tree level …

Natural Language Processing

• 1980s: Many tree-based grammatical formalisms

• 1990s: Regression to string-based formalisms

– Hidden Markov Models (HMM), Finite-State Acceptors (FSAs) and

Transducers (FSTs)

– N-gram models for accepting sentences [eg, Jelinek 90]

– Taggers and other statistical transformations [eg, Church 88]

– Machine translation [eg, Brown et al 93]

– Software toolkits implementing generic weighted FST operations

[eg, Mohri, Pereira, Riley 00]

[Figure: cascade WFSA → w → WFST → e → WFST → j → WFST → k]

“backwards application of string k through a composition of a transducer

cascade, intersected with a weighted FSA language model”

Natural Language Processing

• 2000s: Emerging interest in tree-based probabilistic

models

– Machine translation

• [Wu 97, Yamada & Knight 02, Melamed 03, Chiang 05, …]

– Summarization

• [Knight & Marcu 00, …]

– Paraphrasing

• [Pang et al 03, …]

– Question answering

• [Echihabi & Marcu 03, …]

– Natural language generation

• [Bangalore & Rambow 00, …]

What are the conceptual tools to

help us get a grip on encoding and

exploiting knowledge about

linguistic tree transformations?

Goal of this tutorial

Part 1: Introduction

Part 2: Synchronous Grammars

< break >

Part 3: Tree Automata

Part 4: Conclusion


Tree Automata

• David talked about synchronous grammars

Output tree 1

Output tree 2

synchronized

• I’ll talk about tree automata

Input tree

Output tree

Steps to Get There

               Strings                              Trees
Grammars       Regular grammar                      ?
Acceptors      Finite-state acceptor (FSA), PDA     ?
               String Pairs (input → output)        Tree Pairs (input → output)
Transducers    Finite-state transducer (FST)        ?

Context-Free Grammar

• Example:
– S → NP VP [p=1.0]
– NP → DET N [p=0.7]
– NP → NP PP [p=0.3]
– PP → P NP [p=1.0]
– VP → V NP [p=0.4]
– DET → the [p=1.0]
– N → boy [p=1.0]
– V → saw [p=1.0]
– P → with [p=1.0]
• Defines a set of strings
• Language described by CFG can also be described by a push-down acceptor
Generative Process: S

Generative Process, one expansion step per slide:
– S
– S(NP, VP)
– S(NP(NP, PP), VP)
– S(NP(NP(DET(the), N(boy)), PP), VP)
– S(NP(NP(DET(the), N(boy)), PP(P, NP)), VP) …
– S(NP(NP(DET(the), N(boy)), PP(P(with), NP(DET(the), N(boy)))), VP(V(saw), NP(DET(the), N(boy))))
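A minimal sketch of how the rule probabilities combine: the probability of one derivation tree is taken as the product of the probabilities of the rules it uses. The nested-tuple tree encoding and the names `RULE_PROB`, `derivation_prob` and `leaves` are illustrative assumptions, not part of the slides.

```python
from math import prod

# Rule probabilities copied from the example grammar on the slide.
RULE_PROB = {
    ("S", ("NP", "VP")): 1.0,
    ("NP", ("DET", "N")): 0.7,
    ("NP", ("NP", "PP")): 0.3,
    ("PP", ("P", "NP")): 1.0,
    ("VP", ("V", "NP")): 0.4,
    ("DET", ("the",)): 1.0,
    ("N", ("boy",)): 1.0,
    ("V", ("saw",)): 1.0,
    ("P", ("with",)): 1.0,
}


def label(node):
    """Label of a node: a bare string is a word, a tuple is (label, child, ...)."""
    return node if isinstance(node, str) else node[0]


def derivation_prob(tree):
    """Product of the probabilities of every rule used in the tree."""
    if isinstance(tree, str):  # a word: no rule is applied at a leaf
        return 1.0
    head, children = tree[0], tree[1:]
    rhs = tuple(label(child) for child in children)
    p = RULE_PROB.get((head, rhs), 0.0)  # unknown rule => probability 0
    return p * prod(derivation_prob(child) for child in children)


def leaves(tree):
    """Left-to-right yield of the tree."""
    if isinstance(tree, str):
        return [tree]
    return [word for child in tree[1:] for word in leaves(child)]


THE_BOY = ("NP", ("DET", "the"), ("N", "boy"))
TREE = (
    "S",
    ("NP", THE_BOY, ("PP", ("P", "with"), THE_BOY)),
    ("VP", ("V", "saw"), THE_BOY),
)

print(" ".join(leaves(TREE)))
print(derivation_prob(TREE))
```

The tree is the final one from the generative process above; the five non-unit rules it uses are NP → NP PP once, NP → DET N three times, and VP → V NP once.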

Context-Free Grammar

• Is this what lies behind modern parsers like

Collins and Charniak?

• No… they do not have a finite list of productions,

but an essentially infinite list.

– “Markovized” grammar

• Generative process is head-out:

S

VP

Ph(VP | S)

– Pleft(NP | VP, S) adds NP to the left of the head: S(NP VP)
– Pleft(PP | VP, S) adds PP further to the left: S(PP NP VP)
– Pleft(STOP | VP, S) ends the left side: S(STOP PP NP VP)
– ADVP is added to the right of the head: S(STOP PP NP VP ADVP)
– STOP ends the right side: S(STOP PP NP VP ADVP STOP)
– The VP is then expanded the same way: VBD, then VBD NP, then … VBD NP NP …

ECFG [Thatcher 67]

• Example:

– S → ADVP* NP VP PP*
– VP → VBD NP [NP] PP*
• Defines a set of strings
• Can model Charniak and Collins:
– VP → (ADVP | PP)* VBD (NP | PP)*
• Can even model probabilistic version:
[Figure: weighted finite-state automaton for the right-hand side of VP, with arcs PP : Pleft(PP|VP), ADVP : Pleft(ADVP|VP), *e* : Pleft(STOP|VP), *e* : Phead(VBD|VP), VBD : 1.0, PP, NP, *e* : Pright(STOP|VP)]
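Because the right-hand side of an ECFG rule is a regular expression over child labels, checking whether a node's children are licensed reduces to ordinary regular-expression matching. The sketch below assumes child labels are encoded as a space-terminated string; `VP_RHS` and `licensed` are hypothetical names, and only the unweighted rule is modeled.

```python
import re

# Right-hand side of VP → (ADVP | PP)* VBD (NP | PP)*,
# written over child labels that are each followed by one space.
VP_RHS = re.compile(r"(?:(?:ADVP|PP) )*VBD (?:(?:NP|PP) )*")


def licensed(children):
    """True if the sequence of child labels matches the VP right-hand side."""
    encoded = "".join(child + " " for child in children)
    return VP_RHS.fullmatch(encoded) is not None


print(licensed(["ADVP", "VBD", "NP", "NP", "PP"]))
print(licensed(["NP", "VBD"]))
```

A plain CFG would need one production per child sequence, which is why the slides call the production list of such a grammar essentially infinite.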

ECFG [Thatcher 67]

• Is ECFG more powerful than CFG?

• It is possible to build a CFG that generates the

same set of strings as your ECFG

– As long as right-hand side of every rule is regular

– Or even context-free! (see footnote, [Thatcher 67])

• BUT:

– the CFG won’t generate the same derivation trees

as the ECFG

ECFG [Thatcher 67]

• For example:

– ECFG can (of course) accept the string language a*

– Therefore, we can build a CFG that also accepts a*

– But ECFG can do it via this set of derivation trees, if so

desired:

{ S(a), S(a a), S(a a a), S(a a a a), S(a a a a a), … }

Tree Generating Systems

• Sometimes the trees are important

– Parsing, RNA structure prediction

– Tree transformation

• We can view CFG or ECFG as a tree-generating

system, if we squint hard …

• Or, we can look at tree-generating systems

– Regular tree grammar (RTG) is standard in automata

literature

• Slightly more powerful than tree substitution grammar (TSG)

• Less powerful than tree-adjoining grammar (TAG)

– Top-down tree acceptors (TDTA) are also standard

What We Want

• Device or grammar D for compactly representing

possibly-infinite set S of trees (possibly with

weights)

• Want to support operations like:

– Membership testing: Is tree X in the set S?

– Equality testing: Do sets described by D1 and D2

contain exactly the same trees?

– Intersection: Compute (possibly-infinite) set of trees

described by both D1 and D2

– Weighted tree-set intersection, e.g.:

• D1 describes a set of candidate English translations of some

Chinese sentence, including disfluent ones

• D2 describes a general model of fluent English

Regular Tree Grammar (RTG)

• Example:
– q → S(qnp, VP(V(run))) [p=1.0]
– qnp → NP(qdet, qn) [p=0.7]
– qnp → NP(qnp, qpp) [p=0.3]
– qpp → PP(qprep, qnp) [p=1.0]
– qdet → DET(the) [p=1.0]
– qprep → PREP(of) [p=1.0]
– qn → N(sons) [p=0.5]
– qn → N(daughters) [p=0.5]
• Defines a set of trees
Generative Process: q

Generative Process, one rewriting step per slide:
– q
– S(qnp, VP(V(run)))
– S(NP(qnp, qpp), VP(V(run)))
– S(NP(NP(qdet, qn), qpp), VP(V(run)))
– S(NP(NP(DET(the), qn), qpp), VP(V(run)))
– S(NP(NP(DET(the), N(sons)), qpp), VP(V(run)))
– S(NP(NP(DET(the), N(sons)), PP(PREP(of), NP(DET(the), N(daughters)))), VP(V(run)))
P(t) = 1.0 x 0.3 x 0.7 x 0.5 x 0.7 x 0.5
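The product P(t) can be recomputed mechanically. The sketch below assumes trees and rule right-hand sides are nested tuples in which a bare string is either a state or a terminal; `RULES`, `weight` and `match` are illustrative names. It sums over all rules of a state, so it also handles grammars where a tree has more than one derivation.

```python
# state -> list of (right-hand side, probability), copied from the slide.
RULES = {
    "q": [(("S", "qnp", ("VP", ("V", "run"))), 1.0)],
    "qnp": [(("NP", "qdet", "qn"), 0.7), (("NP", "qnp", "qpp"), 0.3)],
    "qpp": [(("PP", "qprep", "qnp"), 1.0)],
    "qdet": [(("DET", "the"), 1.0)],
    "qprep": [(("PREP", "of"), 1.0)],
    "qn": [(("N", "sons"), 0.5), (("N", "daughters"), 0.5)],
}
STATES = set(RULES)


def weight(state, tree):
    """Total weight of all derivations of `tree` starting from `state`."""
    return sum(p * match(rhs, tree) for rhs, p in RULES[state])


def match(pattern, tree):
    """Weight with which `pattern` (which may contain states) covers `tree`."""
    if isinstance(pattern, str):
        if pattern in STATES:  # a state: recurse into the grammar
            return weight(pattern, tree)
        return 1.0 if pattern == tree else 0.0  # a terminal symbol
    if isinstance(tree, str) or pattern[0] != tree[0] or len(pattern) != len(tree):
        return 0.0
    result = 1.0
    for sub_pattern, sub_tree in zip(pattern[1:], tree[1:]):
        result *= match(sub_pattern, sub_tree)
    return result


TREE = (
    "S",
    ("NP",
     ("NP", ("DET", "the"), ("N", "sons")),
     ("PP", ("PREP", "of"), ("NP", ("DET", "the"), ("N", "daughters")))),
    ("VP", ("V", "run")),
)

print(weight("q", TREE))
```

The recursion terminates because every rule in this grammar produces at least one tree node; a grammar with chain rules such as q → q2 would need extra care.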

Relation Between RTG and CFG

• For every CFG, there is an RTG that directly

generates its derivation trees

– TRUE (just convert the notation)

• For every RTG, there is a CFG that generates the

same trees in its derivations

– FALSE

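Accept the tree set { NP(NN(killer), NN(clown)), NP(NN(clown), NN(killer)) } but reject “clown clown”
RTG:
q → NP(NN(clown), NN(killer))
q → NP(NN(killer), NN(clown))
No CFG possible: a CFG with these derivation trees needs NP → NN NN, NN → clown and NN → killer, and those productions also derive “clown clown”.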

Relation Between RTG and TSG

• For every TSG, there is an RTG that directly

generates its trees

– TRUE (just convert the notation)

• For every RTG, there is a TSG that generates the same trees

– FALSE

• Using states, an RTG can accept all trees (over symbols

a and b) that contain exactly one a

Relation of RTG to Johnson [98]
Syntax Model
• Plain tree: TOP(S(NP(PRO), VP(VB, NP(PRO))))
• Parent-annotated tree: TOP(S:TOP(NP:S(PRO:NP), VP:S(VB:VP, NP:VP(PRO:NP))))
– P(PRO | NP:S) = 0.21
– P(PRO | NP:VP) = 0.03
• RTG:
– qstart → S(q.np:s, q.vp:s)
– q.np:s → NP(q.pro:np)
– q.np:vp → NP(q.pro:np)
– q.pro:np → PRO(q.pro)
– q.pro:np → he
– q.pro:np → she
– q.pro:np → him
– q.pro:np → her

Relation of RTG to

Lexicalized Syntax Models

• Collins’ model assigns P(t) to every tree

• Can weighted RTG (wRTG) implement P(t) for

language modeling?

– Just like a WFSA can implement smoothed n-gram

language model…

• Something like yes.

– States can encode relevant context that is passed up and

down the tree.

– Technical problems:

• “Markovized” grammar (Extended RTG?)

• Some models require keeping head-word information, and if we

back off to a state that forgets the head-word, we can’t get it back.

Something an RTG Can’t Do

{ b, a(b, b), a(a(b, b), a(b, b)), a(a(a(b, b), a(b, b)), a(a(b, b), a(b, b))), … }
Note also that the yield language is {b^(2^n) : n ≥ 1}, which is not context-free.

Language Classes in

String World and Tree World

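[Figure: String World contains Regular Languages, CFL, indexed languages, …; Tree World contains RTL, CFTL, …; a "yield" arrow links RTL to CFL and another links CFTL to indexed languages]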

Picture So Far

               Strings                              Trees
Grammars       Regular grammar, CFG                 DONE (RTG)
Acceptors      Finite-state acceptor (FSA), PDA     ?
               String Pairs (input → output)        Tree Pairs (input → output)
Transducers    Finite-state transducer (FST)        ?

FSA Analog: Top-Down Tree Acceptor

[Rabin, 1969; Doner, 1970]

RTG:
q → NP(NN(clown), NN(killer))
q → NP(NN(killer), NN(clown))
TDTA:
q NP → q2 q3
q NP → q3 q2
q2 NN → q2
q2 clown → accept
q3 NN → q3
q3 killer → accept
Run on NP(NN(clown), NN(killer)):
q NP(NN(clown), NN(killer))
⇒ NP(q2 NN(clown), q3 NN(killer))
⇒ NP(NN(q2 clown), NN(q3 killer))
⇒ NP(NN(clown), NN(killer)), with accept at both leaves

For any RTG, there is a TDTA that accepts the same trees.

For any TDTA, there is an RTG that accepts the same trees.
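The TDTA above can be run with a few lines of code. This is a sketch under the assumption that trees are nested tuples with bare strings as leaves; the table `RULES` and the function `accepts` are illustrative names, and the empty tuple stands for "accept" at a leaf.

```python
# (state, symbol) -> alternatives; each alternative lists the states
# sent down to the children. The empty tuple () means "accept" at a leaf.
RULES = {
    ("q", "NP"): [("q2", "q3"), ("q3", "q2")],
    ("q2", "NN"): [("q2",)],
    ("q3", "NN"): [("q3",)],
    ("q2", "clown"): [()],
    ("q3", "killer"): [()],
}


def accepts(state, tree):
    """Nondeterministic top-down run: try every rule for (state, symbol)."""
    if isinstance(tree, str):
        symbol, children = tree, ()
    else:
        symbol, children = tree[0], tree[1:]
    for child_states in RULES.get((state, symbol), []):
        if len(child_states) == len(children) and all(
            accepts(s, c) for s, c in zip(child_states, children)
        ):
            return True
    return False


print(accepts("q", ("NP", ("NN", "clown"), ("NN", "killer"))))
print(accepts("q", ("NP", ("NN", "clown"), ("NN", "clown"))))
```

The two alternatives for (q, NP) are what let the automaton tie the choice of the first noun to the choice of the second, which a CFG with the same tree shapes cannot do.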

FSA Analog: Bottom-Up Tree Acceptor

[Thatcher & Wright 68; Doner 70]

• A similar story for bottom-up acceptors…

• To summarize tree acceptor automata and RTGs:

– Tree acceptors are the analogs of string FSAs
– They are often used in proofs
– The visual appeal is not as great as FSAs
– People often prefer to read and write RTGs

Properties of Language Classes

                             String Sets                     Tree Sets
                             RSL            CFL              RTL
                             (FSA, rexp)    (PDA, CFG)       (TDTA, RTG)
Closed under union           YES            YES              YES
Closed under intersection    YES            NO               YES
Closed under complement      YES            NO               YES
Membership testing           O(n)           O(n^3)           O(n)
Emptiness decidable?         YES            YES              YES
Equality decidable?          YES            NO               YES

(references: Hopcroft & Ullman 79, Gécseg & Steinby 84)
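As one illustration of the "Emptiness decidable?" row for RTL, emptiness of an RTG can be checked by a fixpoint over productive states, i.e. states that can derive at least one finite tree. The representation (a dict from state to right-hand sides, every state having an entry) and the names `states_in` and `is_empty` are assumptions made for this sketch.

```python
def states_in(rhs, states):
    """Set of states that occur anywhere inside a right-hand side."""
    if isinstance(rhs, str):
        return {rhs} if rhs in states else set()
    found = set()
    for child in rhs[1:]:
        found |= states_in(child, states)
    return found


def is_empty(rules, start):
    """True if `start` derives no tree at all.

    Assumes every state appears as a key of `rules`; any other bare
    string inside a right-hand side is treated as a terminal symbol.
    """
    states = set(rules)
    productive = set()
    changed = True
    while changed:
        changed = False
        for state, alternatives in rules.items():
            if state in productive:
                continue
            if any(states_in(rhs, states) <= productive for rhs in alternatives):
                productive.add(state)
                changed = True
    return start not in productive


SLIDE_RTG = {
    "q": [("S", "qnp", ("VP", ("V", "run")))],
    "qnp": [("NP", "qdet", "qn"), ("NP", "qnp", "qpp")],
    "qpp": [("PP", "qprep", "qnp")],
    "qdet": [("DET", "the")],
    "qprep": [("PREP", "of")],
    "qn": [("N", "sons"), ("N", "daughters")],
}
LOOPING_RTG = {"q": [("S", "q")]}  # can never finish a tree

print(is_empty(SLIDE_RTG, "q"), is_empty(LOOPING_RTG, "q"))
```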

Picture So Far

               Strings                              Trees
Grammars       Regular grammar, CFG                 DONE (RTG)
Acceptors      Finite-state acceptor (FSA), PDA     DONE (TDTA)
               String Pairs (input → output)        Tree Pairs (input → output)
Transducers    Finite-state transducer (FST)        ?

Transducers

String Transducer
– FST compactly represents a possibly-infinite set of string pairs
– Probabilistic FST assigns P(s2 | s1) to every string pair
– Can ask: What’s the best transformation of input string s1?
Tree Transducer
– Tree transducers compactly represent a possibly-infinite set of tree pairs
– Probabilistic tree transducer assigns P(t2 | t1) to every tree pair
– Can ask: What’s the best transformation of tree t1?

Example 1: Machine Translation [Yamada & Knight 01]

[Figure: Parse Tree(E) for "he adores listening to music" passes through four steps in turn: Reorder, Insert, Translate, Take Leaves]
Sentence(J): Kare ha ongaku wo kiku no ga daisuki desu

Example 2: Sentence Compression

[Knight & Marcu 00]

[Figure: parse tree of "he adores listening to good music at home" and the compressed parse tree of "he adores listening to music"]

What We Want

• Device T for compactly representing a possibly-infinite set of tree pairs (possibly with weights)

• Want to support operations like:

– Forward application: Send input tree X through T, get all

possible output trees, perhaps represented as an RTG.

– Backward application: What input trees, when sent through T,

would output tree X?

– Composition: Given a T1 and T2, can we build a transducer T3

that does the work of both in sequence?

– Equality testing: Do T1 and T2 contain exactly the same tree

pairs?

– Training: How to set weights for T given a training corpus of

input/output tree pairs?

Finite-State Transducer (FST)

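Original input: k n i g h t
FST rules (state, input symbol → next state, output symbol):
q k → q2 ε
q i → q AY
q g → q3 ε
q2 n → q N
q3 h → q4 ε
q4 t → qfinal T
[Figure: the same FST drawn as a graph over states q, q2, q3, q4, qfinal with arcs k:ε, n:N, i:AY, g:ε, h:ε, t:T]
Transformation: q k n i g h t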

Transformation, one step per slide:
– q2 n i g h t
– N q i g h t
– N AY q g h t
– N AY q3 h t
– N AY q4 t
– N AY T qfinal
Final output: N AY T
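The step-by-step run can be reproduced with a small table-driven simulator. This sketch assumes the FST is deterministic, as the six rules on the slide are; `RULES` and `transduce` are illustrative names, and an empty output list stands for ε.

```python
# (state, input symbol) -> (next state, output symbols); [] plays the role of ε.
RULES = {
    ("q", "k"): ("q2", []),
    ("q", "i"): ("q", ["AY"]),
    ("q", "g"): ("q3", []),
    ("q2", "n"): ("q", ["N"]),
    ("q3", "h"): ("q4", []),
    ("q4", "t"): ("qfinal", ["T"]),
}


def transduce(symbols, start="q", final="qfinal"):
    """Run the FST over `symbols`; return the output list, or None on failure."""
    state, output = start, []
    for symbol in symbols:
        if (state, symbol) not in RULES:
            return None  # no applicable rule: input rejected
        state, emitted = RULES[(state, symbol)]
        output.extend(emitted)
    return output if state == final else None


print(transduce("knight"))
```

A general weighted FST would allow several rules per (state, symbol) pair and would return a set of weighted outputs instead of a single list.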

Trees

[Figure: the English input tree for "he enjoys listening to music" is rewritten top-down, with states such as qS, q.np and q.vbz moving from the root toward the leaves and ending in qfinal, into a Japanese output tree with leaves "kare wa ongaku o kiku no ga daisuki desu"]

Top-Down Tree Transducer

• Introduced by Rounds (1970) & Thatcher (1970)

“Recent developments in the theory of automata have pointed to

an extension of the domain of definition of automata from

strings to trees … parts of mathematical linguistics can be

formalized easily in a tree-automaton setting … Our results

should clarify the nature of syntax-directed translations and

transformational grammars …” (Mappings on Grammars and

Trees, Math. Systems Theory 4(3), Rounds 1970)

• “FST for trees”

• Large literature

– e.g., Gécseg & Steinby (1984), Comon et al (1997)

• Many classes of transducers

– Top-down (R), bottom-up (F), copying, deleting, lookahead…

Top-Down Tree Transducer

R (“root to frontier”) transducer
Original input: S(PRO(he), VP(VB(likes), NP(spinach)))
Target output: X(VP(VB(likes), NP(spinach)), PRO(he), AUX(does))
Rule, in computer-speak:
q S(x0, x1) → X(q x1, q x0, AUX(does))
Transformation:
q S(PRO(he), VP(VB(likes), NP(spinach)))
⇒ X(q VP(VB(likes), NP(spinach)), q PRO(he), AUX(does))
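A small sketch of this rule as code, writing the state q as a recursive function over nested-tuple trees. The slide gives only the rule for S; the fallback that copies every other node unchanged is an assumption added here so that the example runs to completion.

```python
def q(tree):
    """State q of an R transducer, written as a recursive function."""
    if isinstance(tree, str):  # a word at the frontier is copied as-is
        return tree
    label, children = tree[0], tree[1:]
    if label == "S" and len(children) == 2:
        x0, x1 = children
        # Slide rule: q S(x0, x1) → X(q x1, q x0, AUX(does))
        return ("X", q(x1), q(x0), ("AUX", "does"))
    # Assumed default (not on the slide): copy the node, process children in q.
    return (label,) + tuple(q(child) for child in children)


SOURCE = ("S", ("PRO", "he"), ("VP", ("VB", "likes"), ("NP", "spinach")))
print(q(SOURCE))
```

The function looks only at the root label and the number of children before recursing, which is exactly the one-level left-hand side that defines the R class.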

Relation of Tree Transducers to

(String) FSTs

• Tree transducers

generalize FSTs

(strings are

“monadic trees”)

FST transition              Equivalent tree transducer rule
q –A/B→ r                   q A(x0) → B(r x0)
q –A/*e*→ r                 q A(x0) → r x0
q –*e*/B→ r                 q x → B(r x)

Tree Transducer Simulating FST

R (“root to frontier”) transducer
Rules:
q k(x0) → q2 x0
q2 n(x0) → N(q x0)
Original input: k(n(i(g(h(t)))))
Transformation:
q k(n(i(g(h(t)))))
⇒ q2 n(i(g(h(t))))
⇒ N(q i(g(h(t))))
(and so on …)

Top-Down Transducers can Copy

and Delete

• R transducer that deletes (sentence compression):
– Rule: q VP(x0, x1) → VP(q x0)
– q VP(VB, PP) ⇒ VP(q VB)
• R transducer that copies (calculus: d sin(y) = cos(y) · d y):
– Rule: d sin(x0) → MULT(cos(i x0), d x0)
– d sin(y) ⇒ MULT(cos(i y), d y)
– Example from Rounds (70)

Complex Re-Ordering

Original input: S(PRO, VP(VB, NP))
Target output: S(VB, PRO, NP)
R transducer:
q S(x0, x1) → S(qleft x1, q x0, qright x1)
qleft VP(x0, x1) → q x0
qright VP(x0, x1) → q x1
q PRO → PRO
q VB → VB
q NP → NP
Transformation, one step per slide:
q S(PRO, VP(VB, NP))
⇒ S(qleft VP(VB, NP), q PRO, qright VP(VB, NP))
⇒ S(q VB, q PRO, qright VP(VB, NP))
⇒ S(VB, q PRO, qright VP(VB, NP))
⇒ S(VB, PRO, qright VP(VB, NP))
⇒ S(VB, PRO, q NP)
⇒ S(VB, PRO, NP)
Final output: S(VB, PRO, NP)
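The re-ordering can be checked with a tiny rule interpreter. In this sketch a rule maps (state, root label) to an output template, and the marker ("$", state, i) is an invented convention meaning "process child i of the matched node in the given state". The names `RULES`, `run` and `build` are assumptions for illustration.

```python
# (state, input root label) -> output template.
# ("$", state, i) means: process input child number i in `state`.
RULES = {
    ("q", "S"): ("S", ("$", "qleft", 1), ("$", "q", 0), ("$", "qright", 1)),
    ("qleft", "VP"): ("$", "q", 0),
    ("qright", "VP"): ("$", "q", 1),
    ("q", "PRO"): "PRO",
    ("q", "VB"): "VB",
    ("q", "NP"): "NP",
}


def run(state, tree):
    """Apply the rule for (state, root label of tree) and build its output."""
    label = tree if isinstance(tree, str) else tree[0]
    children = () if isinstance(tree, str) else tree[1:]
    return build(RULES[(state, label)], children)


def build(template, children):
    """Instantiate an output template against the matched node's children."""
    if isinstance(template, str):
        return template
    if template[0] == "$":
        _, state, index = template
        return run(state, children[index])
    return (template[0],) + tuple(build(part, children) for part in template[1:])


SOURCE = ("S", "PRO", ("VP", "VB", "NP"))
print(run("q", SOURCE))
```

The rule for (q, S) refers to child 1 twice, once in qleft and once in qright. That copy is what lets a one-level R transducer pull VB out of the VP while leaving NP behind.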

Extended Left-Hand Side

Transducer: xR

Original input: S(PRO, VP(VB, NP))
xR transducer:
q S(x0:PRO, VP(x1:VB, x2:NP)) → S(q x1, q x0, q x2)
q PRO → PRO
q VB → VB
q NP → NP
Final output: S(VB, PRO, NP)
Mentioned already in Section 4, Rounds 1970.
Not defined or used in proofs there.
See [Knight & Graehl 05].

Tree-to-String Transducers: xRS

Syntax-directed translation for compilers [Aho & Ullman 71]

Original input: parse tree of "he enjoys listening to music"
xRS transducer rule:
q S(x0:NP, VP(x1:VBZ, x2:NP)) → q x0 , q x2 , q x1
Transformation:
q S(…)
⇒ q.np NP(PRO(he)) , q.np NP(SBAR(…)) , q.vbz VBZ(enjoys)
⇒ kare , wa , q.np NP(SBAR(…)) , q.vbz VBZ(enjoys)
⇒ …
Final output: kare , wa , ongaku , o , kiku , no , ga , daisuki , desu
Used in a practical MT system [Galley et al 04].
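A sketch of the tree-to-string rule in code. Only the re-ordering rule comes from the slide. The shape of the input tree and the lexical table `LEXICON`, which maps the English yield of a subtree to Japanese words, are assumptions made so that the example reproduces the final output shown above.

```python
def leaves(tree):
    """Left-to-right yield of a nested-tuple tree."""
    if isinstance(tree, str):
        return [tree]
    return [word for child in tree[1:] for word in leaves(child)]


def has_label(tree, label):
    return isinstance(tree, tuple) and tree[0] == label


def match_s_rule(tree):
    """Match the extended LHS S(x0:NP, VP(x1:VBZ, x2:NP)); return bindings or None."""
    if has_label(tree, "S") and len(tree) == 3:
        x0, vp = tree[1], tree[2]
        if has_label(x0, "NP") and has_label(vp, "VP") and len(vp) == 3:
            x1, x2 = vp[1], vp[2]
            if has_label(x1, "VBZ") and has_label(x2, "NP"):
                return x0, x1, x2
    return None


# Hypothetical lexical rules (not given in this form on the slides).
LEXICON = {
    "he": ["kare", "wa"],
    "enjoys": ["ga", "daisuki", "desu"],
    "listening to music": ["ongaku", "o", "kiku", "no"],
}


def q(tree):
    """State q: returns a list of output words (a string, not a tree)."""
    bound = match_s_rule(tree)
    if bound is not None:
        x0, x1, x2 = bound
        return q(x0) + q(x2) + q(x1)  # slide rule: q x0 , q x2 , q x1
    return list(LEXICON[" ".join(leaves(tree))])


SOURCE = (
    "S",
    ("NP", ("PRO", "he")),
    ("VP",
     ("VBZ", "enjoys"),
     ("NP", ("SBAR", ("VBG", "listening"),
             ("VP", ("P", "to"), ("NP", "music"))))),
)
print(" ".join(q(SOURCE)))
```

The left-hand side inspects two levels of the input at once (S and the VP below it), which is the "x" in xRS; the right-hand side is a flat word sequence, which is the "S".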

Are Tree Transducers Expressive Enough

for Natural Language?

• Can published tree-based probabilistic models

be cast in this framework?

– This was previously done for string-based models,

e.g.

• [Knight & Al-Onaizan 98] – word-based MT as FST

• [Kumar & Byrne 03] – phrase-based MT as FST

• Can published tree-based probabilistic models

be naturally extended in this framework?

Are Tree Transducers Expressive Enough

for Natural Language?

[Figure: Parse (E) for "he adores listening to music" passes through Reorder, Insert, Translate and Take Leaves] [Yamada & Knight 01]
Sentence (J): Kare ha ongaku wo kiku no ga daisuki desu

The [Yamada & Knight 01] model (Reorder, Insert, Translate, Take Leaves) can be cast as a single 4-state tree transducer.
See [Graehl & Knight 04]

Extensions Possible within the

Transducer Framework

Phrasal Translation
VP(VBZ(is), VBG(singing)) → está, cantando
Non-constituent Phrases
S(PRO(there), VP(VB(are), x0:NP)) → hay, x0
Non-contiguous Phrases
VP(VB(put), x0:NP, PRT(on)) → poner, x0
Context-Sensitive Word Insertion
NPB(DT(the), x0:NNS) → x0
Multilevel Re-Ordering
S(x0:NP, VP(x1:VB, x2:NP2)) → x1, x0, x2
Lexicalized Re-Ordering
NP(x0:NP, PP(P(of), x1:NP)) → x1, , x0

Practical MT system [Galley et al 04, 06; Marcu et al 06] uses 40m such automatically collected rules

Limitations of the

Top-Down Transducer Model

Who does John think Mary believes I saw?

John thinks Mary believes I saw who?

[Figure: the input tree for "Who does John think Mary believes I saw?" is processed from state qS; a state qwho is passed down through the embedded S and VP nodes, one level per slide, until WhNP(who) is placed after "saw" in the output tree for "John thinks Mary believes I saw who?"]

Limitations of the

Top-Down Transducer Model

Whose blue dog does John think Mary believes I saw?

John thinks Mary believes I saw whose blue dog?

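[Figure: the same pair of trees, now with the fronted phrase WhNP(DT ADJ N) “Whose blue dog”, which would have to reappear as the object of “saw” in the output tree. Slide annotation: Can’t do this.]

• A top-down transducer has finitely many states, so a state can remember a single word such as “who”, but not an arbitrary fronted phrase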

Tree Transducers:

A Digression

[Figure, built up over five slides: a diagram of tree transducer classes, with arrows labeled by the capability each step adds.]

• Classes shown: WFST, RLN | FLN, RN, RTD, RL, FLD, RLR | FL, R, F, RD, RT, xRLN, xRL, xR, RR, R2, F2, PDTT

• Arrow labels: + branching LHS rules, + delete, + complex re-order, + copy (before process), + process (before copy), + non-determinism, + finite-check before delete, + reg-check before delete

• Regions: copying / non-copying, deleting / non-deleting

• Bold circles = Closed under Composition

Top Down Tree Transducers are Not

Closed Under Composition [Rounds 70]

• Input: a monadic tree X | Y | X | … | a

• An R that changes X’s and Y’s to X’s and Y’s, nondeterministically: No problem! One possible output is Y | X | X | … | a

• An R that duplicates its input tree under new label Z: No problem! The output is Z(Y | X | X | … | a, Y | X | X | … | a), two identical copies

• An R that changes X’s and Y’s to X’s and Y’s, nondeterministically, then duplicates the resulting tree under new label Z: Impossible for R

– A top-down transducer must copy before it processes, so the two copies would be relabeled independently and need not be identical

NOTE: Deterministic R-transducers are composable.
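The following Python sketch illustrates the gap on a tiny example. It is only a simulation under simplifying assumptions: a monadic tree is written as a tuple of labels from root to leaf, and the function names are invented for this illustration. It compares the composition of the two steps with what a single top-down pass that copies first and then relabels each copy independently would produce.

```python
import itertools

def relabel_all(chain):
    """All outputs of the nondeterministic relabeling: every X or Y
    may independently become X or Y; other labels are unchanged."""
    options = [("X", "Y") if s in ("X", "Y") else (s,) for s in chain]
    return {tuple(choice) for choice in itertools.product(*options)}

def duplicate(chain):
    """Put two copies of the input tree under a new root Z."""
    return ("Z", chain, chain)

def relabel_then_duplicate(chain):
    """Composition of the two transducers: both copies are identical."""
    return {duplicate(c) for c in relabel_all(chain)}

def copy_then_relabel(chain):
    """A single top-down pass: copy first, then relabel each copy on
    its own, so the two copies may disagree."""
    outs = relabel_all(chain)
    return {("Z", left, right) for left in outs for right in outs}

chain = ("X", "Y", "a")
composed = relabel_then_duplicate(chain)
single_pass = copy_then_relabel(chain)
```

Here `composed` contains only trees whose two subtrees are equal, while `single_pass` also contains mismatched pairs, so the single pass over-generates relative to the composition.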

Properties of Transducer Classes

String Transducers: FST. Tree Transducers: R, RL, RLN=FLN, F, FL.

Property                            FST      R        RL       RLN=FLN  F        FL
Closed under composition            YES      NO       NO       YES      NO       YES
Closed under intersection           NO       NO       NO       NO       NO       NO
Image of tree (or finite forest) is RSL      RTL      RTL      RTL      Not RTL  RTL
Image of RTL is                     RSL      Not RTL  RTL      RTL      Not RTL  RTL
Inverse image of tree is            RSL      RTL      RTL      RTL      RTL      RTL
Inverse image of RTL is             RSL      RTL      RTL      RTL      RTL      RTL
Efficiently trainable               YES      YES      YES      YES

(references: Hopcroft & Ullman 79, Gécseg & Steinby 84, Baum et al 70, Graehl & Knight 04)

K-best Trees & Determinization

• String sets (FSA)

– [Dijkstra 59] gives best path through FSA: O(e + n log n)

– [Eppstein 98] gives k-best paths through FSA, including cycles: O(e + n log n + k log k) /* to print k-best lengths */

– [Mohri & Riley 02] give k-best unique strings (determinization followed by k-best paths)

• Tree sets (RTG)

– [Knuth 77] gives best derivation tree from CFG (easily adapted to RTG): O(t + n log n) running time

– [Huang & Chiang 05] give k-best derivations in RTG

– [May & Knight 05] give k-best unique trees (determinization followed by k-best derivations)
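To make the 1-best case for tree sets concrete, here is a small Python sketch in the spirit of Knuth's generalization of Dijkstra's algorithm. It is not taken from the slides: the rule encoding `(lhs, children, cost)`, the function name `best_costs`, and the toy grammar are all assumptions for illustration. Costs are non-negative and additive (for example negative log probabilities), and the sketch returns only the best cost per nonterminal, not the tree itself.

```python
import heapq

def best_costs(rules):
    """rules: list of (lhs, children, cost) with non-negative costs.
    Returns {nonterminal: cost of its cheapest derivation}."""
    best = {}        # finalized nonterminals
    waiting = []     # per rule: number of distinct children not yet finalized
    uses = {}        # child nonterminal -> indices of rules that mention it
    heap = []
    for idx, (lhs, children, cost) in enumerate(rules):
        distinct = set(children)
        waiting.append(len(distinct))
        for child in distinct:
            uses.setdefault(child, []).append(idx)
        if not distinct:                      # rule with no nonterminal children
            heapq.heappush(heap, (cost, lhs))
    while heap:
        cost, nt = heapq.heappop(heap)
        if nt in best:                        # already finalized more cheaply
            continue
        best[nt] = cost
        for idx in uses.get(nt, []):
            waiting[idx] -= 1
            if waiting[idx] == 0:             # all children of this rule are known
                lhs, children, rule_cost = rules[idx]
                if lhs not in best:
                    total = rule_cost + sum(best[c] for c in children)
                    heapq.heappush(heap, (total, lhs))
    return best

# Hypothetical toy grammar: two ways to build NP, one each for VP and S.
RULES = [
    ("NP", (), 1.0),
    ("NP", (), 3.0),
    ("VP", ("NP",), 2.0),
    ("S", ("NP", "VP"), 0.5),
]
costs = best_costs(RULES)
```

As with Dijkstra's algorithm, each nonterminal is finalized the first time it is popped from the priority queue, which is what relies on the costs being non-negative.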

The Training Problem

[Graehl & Knight 04]

• Given:

– an xR transducer with a set of non-deterministic rules (r1…rm)

– a training corpus of input-tree/output-tree pairs

• Produce:

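– conditional probabilities p1…pm that maximize

\[
P(\text{output trees} \mid \text{input trees})
  = \prod_{\langle i, o \rangle} P(o \mid i)
  = \prod_{\langle i, o \rangle} \sum_{d} P(d, o \mid i)
  = \prod_{\langle i, o \rangle} \sum_{d} \prod_{r \in d} p_r
\]

where ⟨i, o⟩ ranges over the input/output tree pairs, d over the derivations mapping i to o, and r over the rules used in derivation d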

Derivations in R

[Figure: a small weighted R transducer, an input tree, an output tree, the two derivations that map the input to the output, and the packed derivation forest. The recoverable details follow.]

R Transducer rules (weights):

rule1 1.0, rule2 1.0, rule3 0.6, rule4 0.4

rule5: q F → V 0.9
rule6: q F → W 0.1
rule7: q G → V 0.5
rule8: q G → W 0.5

Derivations:

rule1(rule2, rule3(rule5, rule8)) (total weight = 0.27)
rule1(rule2, rule4(rule6, rule7)) (total weight = 0.02)

Packed Derivations:

qstart → rule1(q.1.12, q.2.11) 1.0
q.1.12 → rule2 1.0
q.2.11 → rule3(q.21.111, q.22.112) 0.6
q.2.11 → rule4(q.21.112, q.22.111) 0.4
q.21.111 → rule5 0.9
q.22.112 → rule8 0.5
q.21.112 → rule6 0.1
q.22.111 → rule7 0.5
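The packed derivations on this slide can be read as a small weighted grammar over rule names. The Python sketch below encodes them as a dictionary (the encoding and the function names `derivations` and `inside` are assumptions for this illustration), enumerates the derivations with their weights, and computes their sum without enumeration.

```python
from math import prod, isclose

# nonterminal -> list of (rule name, child nonterminals, rule weight)
FOREST = {
    "qstart":   [("rule1", ("q.1.12", "q.2.11"), 1.0)],
    "q.1.12":   [("rule2", (), 1.0)],
    "q.2.11":   [("rule3", ("q.21.111", "q.22.112"), 0.6),
                 ("rule4", ("q.21.112", "q.22.111"), 0.4)],
    "q.21.111": [("rule5", (), 0.9)],
    "q.22.112": [("rule8", (), 0.5)],
    "q.21.112": [("rule6", (), 0.1)],
    "q.22.111": [("rule7", (), 0.5)],
}

def derivations(nt):
    """Yield (derivation tree, weight) for every derivation rooted at nt."""
    for rule, children, weight in FOREST[nt]:
        partial = [((), weight)]
        for child in children:
            # extend every partial derivation with every choice for this child
            partial = [(subs + (d,), w * dw)
                       for subs, w in partial
                       for d, dw in derivations(child)]
        for subs, w in partial:
            yield (rule,) + subs, w

def inside(nt):
    """Total weight of all derivations rooted at nt, without enumerating them."""
    return sum(weight * prod(inside(c) for c in children)
               for _, children, weight in FOREST[nt])

all_derivations = dict(derivations("qstart"))
total = inside("qstart")
```

The two enumerated weights match the totals shown on the slide (0.27 and 0.02), and `inside("qstart")` gives their sum, which is P(o | i) for this training pair.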


Naïve EM Algorithm for xR

Initialize uniform probabilities
Until tired do:
    Make zero rule count table
    For each training case <i, o>
        Compute P(o | i) by summing over derivations d
        For each derivation d for <i, o>    /* exponential! */
            Compute P(d | i, o) = P(d, o | i) / P(o | i)
            For each rule r used in d
                count(r) += P(d | i, o)
    Normalize counts to probabilities
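One iteration of the naive procedure can be sketched in Python as follows. This is an illustration under assumptions, not the authors' implementation: each training case is given directly as an explicit list of derivations (lists of rule names), and the `group` map says which rules are normalized together. The grouping of rule3 with rule4 is inferred from their weights summing to 1 in the slide example and should be treated as an assumption.

```python
from collections import defaultdict
from math import prod, isclose

def naive_em_step(cases, prob, group):
    """cases: list of training cases, each a list of derivations;
    a derivation is a list of rule names.
    prob: rule -> current probability.
    group: rule -> normalization group (rules that compete)."""
    count = defaultdict(float)
    for derivs in cases:
        weights = [prod(prob[r] for r in d) for d in derivs]   # P(d, o | i)
        total = sum(weights)                                    # P(o | i)
        for d, w in zip(derivs, weights):
            posterior = w / total                               # P(d | i, o)
            for r in d:
                count[r] += posterior
    group_total = defaultdict(float)
    for r in prob:
        group_total[group[r]] += count[r]
    # Normalize counts to probabilities; unseen groups keep their old values.
    return {r: count[r] / group_total[group[r]] if group_total[group[r]] > 0
            else prob[r]
            for r in prob}

# The two derivations from the "Derivations in R" example.
D1 = ["rule1", "rule2", "rule3", "rule5", "rule8"]
D2 = ["rule1", "rule2", "rule4", "rule6", "rule7"]
PROB = {"rule1": 1.0, "rule2": 1.0, "rule3": 0.6, "rule4": 0.4,
        "rule5": 0.9, "rule6": 0.1, "rule7": 0.5, "rule8": 0.5}
GROUP = {"rule1": "g1", "rule2": "g2", "rule3": "g34", "rule4": "g34",
         "rule5": "qF", "rule6": "qF", "rule7": "qG", "rule8": "qG"}

new_prob = naive_em_step([[D1, D2]], PROB, GROUP)
```

With a single training pair, the re-estimated probability of rule3 is just the posterior of the first derivation, 0.27 / 0.29. The cost of listing every derivation explicitly is what the packed forest avoids.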

Efficient EM for xR

Initialize uniform probabilities
Until tired do:
    Make zero rule count table
    For each training case <i, o>
        Build packed derivation forest                         O(q n^2 r) time/space
        Use inside-outside algorithm to collect rule counts
            Inside pass                                        O(q n^2 r) time/space
            Outside pass                                       O(q n^2 r) time/space
            Count collection pass                              O(q n^2 r) time/space
    Normalize counts to probabilities (joint or conditional)

Per-example training complexity is O(n^2) x “transducer constant”, the same as forward-backward training for string transducers [Baum & Welch 71].

Variation for xRS runs in O(q n^4 r) time (if |RHS| = 2).
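The three passes can be sketched in Python on the packed forest from the "Derivations in R" example. The dictionary encoding, the hand-written topological order, and the function name `inside_outside` are assumptions for this illustration; a real implementation would build the forest and its ordering from the transducer and the tree pair.

```python
from collections import defaultdict
from math import prod, isclose

# nonterminal -> list of (rule name, child nonterminals, rule weight)
FOREST = {
    "qstart":   [("rule1", ("q.1.12", "q.2.11"), 1.0)],
    "q.1.12":   [("rule2", (), 1.0)],
    "q.2.11":   [("rule3", ("q.21.111", "q.22.112"), 0.6),
                 ("rule4", ("q.21.112", "q.22.111"), 0.4)],
    "q.21.111": [("rule5", (), 0.9)],
    "q.22.112": [("rule8", (), 0.5)],
    "q.21.112": [("rule6", (), 0.1)],
    "q.22.111": [("rule7", (), 0.5)],
}
# Parents before children (assumed to be supplied with the forest).
ORDER = ["qstart", "q.1.12", "q.2.11",
         "q.21.111", "q.22.112", "q.21.112", "q.22.111"]

def inside_outside(forest, order, root):
    # Inside pass: children before parents.
    beta = {}
    for nt in reversed(order):
        beta[nt] = sum(w * prod(beta[c] for c in kids)
                       for _, kids, w in forest[nt])
    # Outside pass: parents before children.
    alpha = defaultdict(float)
    alpha[root] = 1.0
    for nt in order:
        for _, kids, w in forest[nt]:
            for k, child in enumerate(kids):
                siblings = prod(beta[s] for j, s in enumerate(kids) if j != k)
                alpha[child] += alpha[nt] * w * siblings
    # Count collection pass: expected number of uses of each rule.
    counts = defaultdict(float)
    for nt in order:
        for rule, kids, w in forest[nt]:
            counts[rule] += alpha[nt] * w * prod(beta[c] for c in kids) / beta[root]
    return beta, dict(alpha), dict(counts)

beta, alpha, counts = inside_outside(FOREST, ORDER, "qstart")
```

Each pass visits every forest production once, and the resulting counts equal the posterior-weighted counts that the naive algorithm obtains by listing derivations one at a time.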

Training: Related Work

• Generalizes specific MT model training algorithm in the appendix of [Yamada/Knight 01]

– xR training not tied to particular MT model, or to MT at all

• Generalizes forward-backward HMM training [Baum et al 70]

– Write strings vertically, and use xR training

• Generalizes inside-outside PCFG training [Lari/Young 90]

– Attach fixed input tree to each “output” string, and use xRS training

• Generalizes synchronous tree-substitution grammar (STSG) training [Eisner 03]

– xR and xRS allow copying of subtrees
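The remark about writing strings vertically can be pictured with a short Python sketch: a string becomes a monadic (non-branching) tree, so that tree training applies to string data. The tuple encoding, the function name, and the `END` leaf symbol are assumptions made for this illustration.

```python
def string_to_vertical_tree(tokens, end="END"):
    """Turn a token sequence into a monadic tree: each token is a node
    whose single child is the rest of the string."""
    tree = end
    for token in reversed(tokens):
        tree = (token, tree)
    return tree

vertical = string_to_vertical_tree(["a", "b", "c"])
```

The first token ends up at the root, so a top-down transducer reads the string left to right as it descends.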

Picture So Far

              Strings                             Trees
Grammars      Regular grammar, CFG                DONE (RTG)
Acceptors     Finite-state acceptor (FSA), PDA    DONE (TDTA)

              String Pairs (input → output)       Tree Pairs (input → output)
Transducers   Finite-state transducer (FST)       DONE

If You Want to Play Around …

• Tiburon: A Weighted Tree Automata Toolkit

– www.isi.edu/licensed-sw/tiburon

– Developed by Jonathan May at USC/ISI

– Portable, written in Java

• Implements operations on tree grammars and tree transducers

– k-best trees, determinization, application, training, …

• Tutorial primer comes with explanations, sample automata,

and exercises

– Hands-on follow-up to a lot of what we covered today

• Beta version released June 2006

Part 1: Introduction

Part 2: Synchronous Grammars

< break >

Part 3: Tree Automata

Part 4: Conclusion

Why Worry About

Formal Languages?

• They already figured out most of the key

things back in the 1960s & 1970s

• Lucky us!

Relation of Synchronous Grammars

with Tree Transducers

• Developed largely independently

– Synchronous grammars

• [Aho & Ullman 69, Lewis & Stearns 68] inspired by compilers

• [Shieber and Schabes 90] inspired by syntax/semantics mapping

– Tree Transducers

• [Rounds 70] inspired by transformational grammar

• Recent work is starting to relate the two

– [Shieber 04, 06] explains both in terms of abstract

bimorphisms

– [Graehl, Hopkins, Knight in prep] relates STSG to xRLN &

gives composition closure results

Wait, Maybe They Didn’t Figure Out

Everything in the 1960s and 1970s …

• Is there a formal tree transformation model that fits natural

language problems and is closed under composition?

• Is there a “markovized” version of R-style transducers, with

horizontal Thatcher-like processing of children?

• Are there transducers that can move material across unbounded

distance in a tree? Are they trainable?

• Are there automata models that can work with string/string data?

• Can we build large, accurate, probabilistic syntax-based language

models of English using RTGs?

• What about root-less transformations like

– JJ(big) NN(cheese) → jefe

• Can we build probabilistic tree toolkits as powerful and

ubiquitous as string toolkits?

More To Do on

Synchronous Grammars

• Connections between synchronous grammars and tree

transducers and transfer results between them

• Degree of formal power needed in theory and practice for

translation and paraphrase [Wellington et al 06]

• New kinds of synchronous grammars that are more

powerful but not more expensive, or not much more

• Efficient and optimal algorithms for minimizing the rank

of a synchronous CFG [Gildea, Satta, Zhang 06]

• Faster strategies for translation with an n-gram language

model [Zollmann & Venugopal 06; Chiang, to appear?]

Further Readings

• Synchronous grammar

– Attached notes by David (with references)

• Tree automata

– [Knight and Graehl 05] overview and relationship to

natural language processing

– Tree Automata textbook [Gécseg & Steinby 84]

– TATA textbook on the Internet [Comon et al 97]

References on These Slides

[Aho & Ullman 69]            ACM STOC
[Aho & Ullman 71]            Information and Control, 19
[Bangalore & Rambow 00]      COLING
[Baum et al 70]              Ann. Math. Stat. 41
[Chiang 05]                  ACL
[Chomsky 57]                 Syntactic Structures
[Church 77]                  ANLP
[Comon et al 97]             www.grappa.univ-lille3.fr/tata/
[Dijkstra 59]                Numer. Math. 1
[Doner 70]                   J. Computer & Sys. Sci. 4
[Echihabi & Marcu 03]        ACL
[Eisner 03]                  HLT
[Eppstein 98]                SIAM J. Computing 28
[Galley et al 04, 06]        HLT, ACL-COLING
[Gécseg & Steinby 84]        textbook
[Gildea, Satta, Zhang 06]    COLING-ACL
[Graehl & Knight 04]         HLT
[Jelinek 90]                 Readings in Speech Recog.
[Knight & Al-Onaizan 98]     AMTA
[Knight and Graehl 05]       CICLing
[Knight & Marcu 00]          AAAI
[Knuth 77]                   Info. Proc. Letters 6
[Kumar & Byrne 03]           HLT
[Lewis & Stearns 68]         JACM
[Lari & Young 90]            Comp. Speech and Lang. 4
[Marcu et al 06]             EMNLP
[May & Knight 05]            HLT
[Melamed 03]                 HLT
[Mohri & Riley 02]           ICSLP
[Mohri, Pereira, Riley 00]   Theo. Comp. Sci.
[Pang et al 03]              HLT
[Rabin 69]                   Trans. Am. Math. Soc. 141
[Rounds 70]                  Math. Syst. Theory 4
[Shieber & Schabes 90]       COLING
[Shieber 04, 06]             TAG+, EACL
[Thatcher 67]                J. Computer & Sys. Sci. 1
[Thatcher 70]                J. Computer & Sys. Sci. 4
[Thatcher & Wright 68]       Math. Syst. Theory 2
[Wellington et al 06]        ACL-COLING
[Wu 97]                      Comp. Linguistics
[Yamada & Knight 01, 02]     ACL
[Zollmann & Venugopal 06]    HLT

Thank you