Capacity Planning - SharePoint Practice

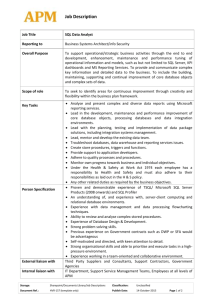
