
Chapter 8

Dynamic Models

• 8.1 Introduction

• 8.2 Serial correlation models

• 8.3 Cross-sectional correlations and time-series cross-section models

• 8.4 Time-varying coefficients

• 8.5 Kalman filter approach

8.1 Introduction

• When is it important to consider dynamic, that is, temporal, aspects of a problem?

– For forecasting problems, the dynamic aspect is critical.

– For other problems that are focused on understanding relations among variables, the dynamic aspects are less critical.

– Still, understanding the mean and correlation structure is important for achieving efficient parameter estimators.

• How does the sample size influence our choice of statistical methods?

– For many panel data problems, the number of cross-sections ( n ) is large compared to the number of observations per subject ( T ). This suggests the use of regression analysis techniques.

– For other problems, T is large relative to n . This suggests borrowing from other statistical methodologies, such as multivariate time series.

Introduction – continued

• How does the sample size influence the properties of our estimators?

– For panel data sets where n is large compared to T , this suggests the use of asymptotic approximations where T is bounded and n tends to infinity.

– In contrast, for data sets where $T$ is large relative to $n$, we may achieve more reliable approximations by considering instances where

• $n$ and $T$ approach infinity together or

• $n$ is bounded and $T$ tends to infinity.

Alternative approaches

• There are several approaches for incorporating dynamic aspects into a panel data model.

• Perhaps the easiest way is to let one of the explanatory variables be a proxy for time.

– For example, we might use $x_{ij,t} = t$, for a linear trend in time model.

• Another strategy is to analyze the differences, either through linear or proportional changes of a response.

– This technique is easy to use and is natural in some areas of application. To illustrate, when examining stock prices, because of financial economics theory, we always look at proportional changes in prices, which are simply returns.

– In general, one must be wary of this approach because you lose $n$ (initial) observations when differencing.

Additional strategies

• Serial Correlations

– Section 8.2 expands on the discussion of modeling dynamics through serial correlations, introduced in Section 2.5.1.

• Because of the assumption of bounded $T$, one need not assume stationarity of errors.

• Time-varying parameters

– Section 8.4 discusses problems where model parameters are allowed to vary with time.

• The classic example of this is the two-way error components model, introduced in Section 3.3.2.

Additional strategies

• The classic econometric method of handling dynamic aspects of a model is to include a lagged endogenous variable on the right hand side of the model.

– Chapter 6 described this approach, thinking of it as a type of Markov model.

• Finally, Section 8.5 shows how to adapt the Kalman filter technique to panel data analysis.

– This is a flexible technique that allows analysts to incorporate time-varying parameters and broad patterns of serial correlation structures into the model.

– Further, we will show how to use this technique to simultaneously model temporal and spatial patterns.

• Cross-sectional correlations – Section 8.3

– When T is large relative to n , we have more opportunities to model cross-sectional correlations.

8.2 Serial correlation models

• As $T$ becomes larger, we have more opportunities to specify $R = \mathrm{Var}\,\varepsilon$, the $T \times T$ temporal variance-covariance matrix.

• Section 2.5.1 introduced four specifications of R : (i) no correlation, (ii) compound symmetry, (iii) autoregressive of order one and (iv) unstructured.

• Moving average models suggest the “Toeplitz” specification of $R$:

– $R_{rs} = \sigma_{|r-s|}$. This defines elements of a Toeplitz matrix.

– $R_{rs} = \sigma_{|r-s|}$ for $|r-s| < \mathit{band}$ and $R_{rs} = 0$ for $|r-s| \ge \mathit{band}$. This is the banded Toeplitz matrix.

• Factor analysis suggests the form $R = \Lambda\Lambda' + \Psi$,

– where $\Lambda$ is a matrix of unknown factor loadings and $\Psi$ is an unknown diagonal matrix.

– Useful for specifying a positive definite matrix.
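The Toeplitz and banded Toeplitz specifications above can be illustrated with a small NumPy sketch. The function name `toeplitz_cov` and the example values of $\sigma_0, \sigma_1, \ldots$ are hypothetical, chosen only for illustration; the sketch simply fills $R_{rs} = \sigma_{|r-s|}$ and zeroes entries outside the band.

```python
import numpy as np

def toeplitz_cov(sigmas, band=None):
    """Build R with R[r, s] = sigmas[|r - s|].

    sigmas : sequence (sigma_0, ..., sigma_{T-1}); hypothetical inputs.
    band   : if given, entries with |r - s| >= band are set to zero
             (banded Toeplitz matrix).
    """
    sigmas = np.asarray(sigmas, dtype=float)
    T = len(sigmas)
    # lag[r, s] = |r - s|
    lag = np.abs(np.subtract.outer(np.arange(T), np.arange(T)))
    R = sigmas[lag]
    if band is not None:
        R = np.where(lag < band, R, 0.0)
    return R

R_full = toeplitz_cov([1.0, 0.5, 0.2, 0.1])
R_band = toeplitz_cov([1.0, 0.5, 0.2, 0.1], band=2)
```

Not every choice of $\sigma_0, \sigma_1, \ldots$ gives a positive definite matrix, so in practice one might check the eigenvalues of the result (for example with `np.linalg.eigvalsh`).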

Nonstationary covariance structures

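• With bounded $T$, we need not fit a stationary model to $R$.

• A stationary AR(1) structure, $\varepsilon_{it} = \rho\,\varepsilon_{i,t-1} + \eta_{it}$, yields

$$R_{AR}(\rho) = \begin{pmatrix} 1 & \rho & \rho^2 & \cdots & \rho^{T-1} \\ \rho & 1 & \rho & \cdots & \rho^{T-2} \\ \rho^2 & \rho & 1 & \cdots & \rho^{T-3} \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ \rho^{T-1} & \rho^{T-2} & \rho^{T-3} & \cdots & 1 \end{pmatrix}$$

• A (nonstationary) random walk model, $\varepsilon_{it} = \varepsilon_{i,t-1} + \eta_{it}$

– With $\varepsilon_{i0} = 0$, we have $\mathrm{Var}\,\varepsilon_{it} = t\,\sigma_\eta^2$ (with $\sigma_\eta^2 = \mathrm{Var}\,\eta_{it}$), nonstationary

$$\mathrm{Var}\,\varepsilon_i = \sigma_\eta^2 R_{RW} = \sigma_\eta^2 \begin{pmatrix} 1 & 1 & 1 & \cdots & 1 \\ 1 & 2 & 2 & \cdots & 2 \\ 1 & 2 & 3 & \cdots & 3 \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ 1 & 2 & 3 & \cdots & T \end{pmatrix}$$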

Nonstationary covariance structures

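• However, $R_{RW}$ is easy to invert (Exercise 4.6) and thus implement.

$$R_{RW}^{-1} = \begin{pmatrix} 2 & -1 & 0 & \cdots & 0 & 0 \\ -1 & 2 & -1 & \cdots & 0 & 0 \\ 0 & -1 & 2 & \cdots & 0 & 0 \\ \vdots & \vdots & \vdots & \ddots & \vdots & \vdots \\ 0 & 0 & 0 & \cdots & 2 & -1 \\ 0 & 0 & 0 & \cdots & -1 & 1 \end{pmatrix}$$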

• One can easily extend this to nonstationary AR(1) models that do not require $|\rho| < 1$

– Use this to test for a “unit-root”

– Has desirable root-$n$ rate of asymptotics

– There is a small literature on “unit-root” tests that test for stationarity as $T$ becomes large; this is much trickier
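As a numerical check of the structures on these slides, the following NumPy sketch builds $R_{AR}(\rho)$, $R_{RW}$ and the tridiagonal inverse of $R_{RW}$, and confirms that the product of $R_{RW}$ and its claimed inverse is the identity. The function names are hypothetical and are not taken from the text.

```python
import numpy as np

def r_ar(rho, T):
    """AR(1) correlation matrix with (r, s) element rho ** |r - s|."""
    lag = np.abs(np.subtract.outer(np.arange(T), np.arange(T)))
    return rho ** lag

def r_rw(T):
    """Random walk matrix with (r, s) element min(r, s), r, s = 1..T."""
    idx = np.arange(1, T + 1)
    return np.minimum.outer(idx, idx).astype(float)

def r_rw_inverse(T):
    """Tridiagonal inverse of r_rw(T): 2 on the diagonal (1 in the last
    position) and -1 on the first off-diagonals."""
    inv = 2.0 * np.eye(T) - np.eye(T, k=1) - np.eye(T, k=-1)
    inv[-1, -1] = 1.0
    return inv

T = 6
identity_check = r_rw(T) @ r_rw_inverse(T)   # should equal the identity
```

Because the inverse is tridiagonal, GLS computations under the random walk model need not invert a dense $T \times T$ matrix.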

Continuous time correlation models

• When data are not equally spaced in time

– consider subjects drawn from a population, yet with responses as realizations of a continuous-time stochastic process.

– For each subject $i$, the response is $\{ y_i(t),\ t \in \mathbb{R} \}$.

– Observations of the $i$th subject are taken at times $t_{ij}$, so that $y_{ij} = y_i(t_{ij})$ denotes the $j$th response of the $i$th subject.

• Particularly for unequally spaced data, a parametric formulation for the correlation structure is useful.

– Use $R_{rs} = \mathrm{Cov}(\varepsilon_{ir}, \varepsilon_{is}) = \sigma^2 \rho(|t_{ir} - t_{is}|)$, where $\rho$ is the correlation function of $\{\varepsilon_i(t)\}$.

– Consider the exponential correlation model $\rho(u) = \exp(-\phi u)$, for $\phi > 0$

– Or the Gaussian correlation model $\rho(u) = \exp(-\phi u^2)$, for $\phi > 0$.
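For unequally spaced observation times, the two correlation models above translate directly into a correlation matrix for one subject. The sketch below is a minimal illustration; the function name `corr_matrix`, the parameter name `phi` and the example times are assumptions made here, not part of the original notes.

```python
import numpy as np

def corr_matrix(times, phi, kind="exponential"):
    """Correlation matrix with (r, s) element rho(|t_r - t_s|).

    kind="exponential": rho(u) = exp(-phi * u)
    kind="gaussian":    rho(u) = exp(-phi * u**2)
    """
    if phi <= 0:
        raise ValueError("phi must be positive")
    t = np.asarray(times, dtype=float)
    u = np.abs(np.subtract.outer(t, t))      # pairwise time gaps
    if kind == "exponential":
        return np.exp(-phi * u)
    if kind == "gaussian":
        return np.exp(-phi * u ** 2)
    raise ValueError("kind must be 'exponential' or 'gaussian'")

# Hypothetical, unequally spaced observation times for one subject
times = [0.0, 0.5, 2.0, 2.25]
R_exp = corr_matrix(times, phi=0.8)
R_gau = corr_matrix(times, phi=0.8, kind="gaussian")
```

Multiplying either matrix by $\sigma^2$ gives the covariance $R$ used for that subject. When the times are equally spaced, the exponential model reduces to the AR(1) pattern with $\rho = \exp(-\phi \Delta)$ for spacing $\Delta$.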

Spatially correlated models

• Data may also be clustered spatially .

– If there is no time element, this is straightforward.

– Let $d_{ij}$ be some measure of spatial or geographical location of the $j$th observation of the $i$th subject.

– Then, $|d_{ij} - d_{ik}|$ is the distance between the $j$th and $k$th observations of the $i$th subject.

– Use the correlation functions.

• Could also ignore the spatial correlation for regression estimates, but use robust standard errors to account for spatial correlations.

Spatially correlated models

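• To account for both spatial and temporal correlation, here is a two-way model

$$y_{it} = \alpha_i + \lambda_t + x_{it}'\beta + \varepsilon_{it}$$

• Stacking over $i$, we have

$$\begin{pmatrix} y_{1t} \\ y_{2t} \\ \vdots \\ y_{nt} \end{pmatrix} = \begin{pmatrix} \alpha_1 \\ \alpha_2 \\ \vdots \\ \alpha_n \end{pmatrix} + 1_n \lambda_t + \begin{pmatrix} x_{1t}' \\ x_{2t}' \\ \vdots \\ x_{nt}' \end{pmatrix}\beta + \begin{pmatrix} \varepsilon_{1t} \\ \varepsilon_{2t} \\ \vdots \\ \varepsilon_{nt} \end{pmatrix}$$

where $1_n$ is an $n \times 1$ vector of ones. We re-write this as $y_t = \alpha + 1_n\lambda_t + X_t\beta + \varepsilon_t$.

• Define $H = \mathrm{Var}\,\varepsilon_t$ to be the spatial variance matrix, with $H_{ij} = \mathrm{Cov}(\varepsilon_{it}, \varepsilon_{jt}) = \sigma^2\rho(|d_i - d_j|)$.

• Assuming that $\{\lambda_t\}$ is i.i.d. with variance $\sigma_\lambda^2$, we have

$$\mathrm{Var}\,y_t = \mathrm{Var}\,\alpha + \sigma_\lambda^2 1_n 1_n' + \mathrm{Var}\,\varepsilon_t = \sigma_\alpha^2 I_n + \sigma_\lambda^2 J_n + H = \sigma_\alpha^2 I_n + V_H,$$

so that $V_H = \sigma_\lambda^2 J_n + H$.

• Because $\mathrm{Cov}(y_r, y_s) = \sigma_\alpha^2 I_n$ for $r \ne s$, we have

$$V = \mathrm{Var}\,y = \sigma_\alpha^2 I_n \otimes J_T + V_H \otimes I_T.$$

• Use GLS from here.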

8.3 Cross-sectional correlations and time-series cross-section models

• When $T$ is large relative to $n$, the data are sometimes referred to as time-series cross-section (TSCS) data.

• Consider a TSCS model of the form $y_i = X_i\beta + \varepsilon_i$,

– we allow for correlation across different subjects through the notation $\mathrm{Cov}(\varepsilon_i, \varepsilon_j) = V_{ij}$.

• Four basic specifications of cross-sectional covariances are:

– The traditional model set-up in which OLS is efficient.

$$V_{ij} = \begin{cases} \sigma^2 I & i = j \\ 0 & i \ne j \end{cases}$$

– Heterogeneity across subjects.

$$V_{ij} = \begin{cases} \sigma_i^2 I & i = j \\ 0 & i \ne j \end{cases}$$

– Cross-sectional correlations across subjects. However, observations from different time points are uncorrelated.

$$\mathrm{Cov}(\varepsilon_{it}, \varepsilon_{js}) = \begin{cases} \sigma_{ij} & t = s \\ 0 & t \ne s \end{cases}$$

Time-series cross-section models

• The fourth specification is (Parks, 1967):

– $\mathrm{Cov}(\varepsilon_{it}, \varepsilon_{js}) = \sigma_{ij}$ for $t = s$ and $\varepsilon_{i,t} = \rho_i\,\varepsilon_{i,t-1} + \eta_{it}$.

– This specification permits contemporaneous cross-correlations as well as intra-subject serial correlation through an AR(1) model.

– The model has an easy to interpret cross-lag correlation function of the form, for $s < t$,

$$\mathrm{Cov}(\varepsilon_{it}, \varepsilon_{js}) = \sigma_{ij}\,\rho_j^{\,t-s}$$

• The drawback, particularly with specifications 3 and 4, is the number of parameters that need to be estimated in the specification of $V_{ij}$.

Panel-corrected standard errors

• Use OLS estimators of regression coefficients.

• To account for the cross-sectional correlations, use robust standard errors.

• However, now we reverse the roles of i and t .

– In this context, the robust standard errors are known as panel-corrected standard errors.

• Procedure for computing panel-corrected standard errors .

– Calculate OLS estimators of $\beta$, $b_{OLS}$, and the corresponding residuals, $e_{it} = y_{it} - x_{it}' b_{OLS}$.

– Define the estimator of the $(ij)$th cross-sectional covariance to be

$$\hat\sigma_{ij} = T^{-1}\sum_{t=1}^{T} e_{it} e_{jt}$$

– Estimate the variance of $b_{OLS}$ using

$$\Big(\sum_{i=1}^{n} X_i' X_i\Big)^{-1} \Big(\sum_{i=1}^{n}\sum_{j=1}^{n} \hat\sigma_{ij}\, X_i' X_j\Big) \Big(\sum_{i=1}^{n} X_i' X_i\Big)^{-1}$$
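The three-step procedure above can be sketched in NumPy as follows. This is a minimal illustration assuming a balanced panel stored as arrays `y` of shape `(n, T)` and `X` of shape `(n, T, K)`; the function name and array layout are assumptions made here, not part of the original notes.

```python
import numpy as np

def panel_corrected_se(y, X):
    """OLS estimates with panel-corrected standard errors (balanced panel).

    y : array (n, T), responses by subject and time.
    X : array (n, T, K), explanatory variables.
    Returns (b_ols, pcse, cov_b).
    """
    n, T, K = X.shape
    X_stack = X.reshape(n * T, K)
    y_stack = y.reshape(n * T)

    # Step 1: OLS coefficients and residuals e_it = y_it - x_it' b_OLS
    XtX_inv = np.linalg.inv(X_stack.T @ X_stack)   # (sum_i X_i'X_i)^{-1}
    b_ols = XtX_inv @ (X_stack.T @ y_stack)
    e = y - X @ b_ols                              # shape (n, T)

    # Step 2: sigma_hat[i, j] = T^{-1} sum_t e_it e_jt
    sigma_hat = e @ e.T / T

    # Step 3: sandwich estimator; middle = sum_i sum_j sigma_hat[i, j] X_i'X_j
    middle = np.einsum("ij,itk,jtl->kl", sigma_hat, X, X)
    cov_b = XtX_inv @ middle @ XtX_inv
    return b_ols, np.sqrt(np.diag(cov_b)), cov_b
```

With unbalanced data the sums in steps 2 and 3 would have to run over the time points that subjects $i$ and $j$ share; that case is not handled in this sketch.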

8.4 Time-varying coefficients

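• The model is

$$y_{it} = z_{\alpha,it}'\alpha_i + z_{\lambda,it}'\lambda_t + x_{it}'\beta + \varepsilon_{it}$$

• A matrix form is $y_i = Z_{\alpha,i}\alpha_i + Z_{\lambda,i}\lambda + X_i\beta + \varepsilon_i$.

– Use $R_i = \mathrm{Var}\,\varepsilon_i$, $D = \mathrm{Var}\,\alpha_i$ and $V_i = Z_{\alpha,i} D Z_{\alpha,i}' + R_i$

• Example 1: Basic two-way model

$$y_{it} = \alpha_i + \lambda_t + x_{it}'\beta + \varepsilon_{it}$$

• Example 2: Time varying coefficients model

– Let $z_{\lambda,it} = x_{it}$ and $\lambda_t = \beta_t - \beta$. Then

$$y_{it} = x_{it}'\beta_t + \varepsilon_{it}$$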

Forecasting

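• We wish to predict, or forecast,

$$y_{i,T_i+L} = z_{\alpha,i,T_i+L}'\alpha_i + z_{\lambda,i,T_i+L}'\lambda_{T_i+L} + x_{i,T_i+L}'\beta + \varepsilon_{i,T_i+L}$$

• The BLUP forecast turns out to be

$$\hat y_{i,T_i+L} = x_{i,T_i+L}' b_{GLS} + z_{\alpha,i,T_i+L}' a_{i,BLUP} + z_{\lambda,i,T_i+L}'\,\mathrm{Cov}(\lambda_{T_i+L}, \lambda)\,(\sigma^2\Sigma_\lambda)^{-1}\lambda_{BLUP} + \mathrm{Cov}(\varepsilon_{i,T_i+L}, \varepsilon_i)\,(\sigma^2 R_i)^{-1} e_{i,BLUP}$$

Forecasting - Special Cases

• No Time-Specific Components

$$\hat y_{i,T_i+L} = x_{i,T_i+L}' b_{GLS} + z_{\alpha,i,T_i+L}' a_{i,BLUP} + \mathrm{Cov}(\varepsilon_{i,T_i+L}, \varepsilon_i)\,(\sigma^2 R_i)^{-1} e_{i,BLUP}$$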

• Basic Two-Way Error Components

– Baltagi (1988) and Koning (1988) (balanced) y

ˆ i , T i

L

x i

, T i

L b

GLS

y i

x i

b

GLS

2 n

1 n

1

2

2

y

x

b

GLS

• Random Walk model

ˆ i , T i

L

x

i , T i

L b

GLS

t s

1

λ t , BLUP

z

α

, i , T i

L

α i , BLUP

Cov(

i , T i

L

, ε i

)

2

R i

1 e i , BLUP

Lottery Sales Model Selection

• In-sample results show that

– The one-way error components model dominates pooled cross-sectional models.

– An AR(1) error specification significantly improves the fit.

– The best model is probably the two-way error components model with an AR(1) error specification.

8.5 Kalman filter approach

• The Kalman filter is a technique used in multivariate time series for estimating parameters from complex, recursively specified, systems.

• Specifically, consider the observation equation $y_t = W_t\delta_t + \varepsilon_t$ and the transition equation $\delta_t = T_t\delta_{t-1} + \eta_t$.

• The approach is to consider conditional normality of $y_t$ given $y_{t-1}, \ldots, y_0$, and use likelihood estimation.

• The basic approach is described in Appendix D. We extend this by considering fixed and random effects, as well as allowing for spatial correlations.

Kalman filter and longitudinal data

• Begin with the observation equation,

$$y_{it} = z_{\alpha,i,t}'\alpha_i + z_{\lambda,i,t}'\lambda_t + x_{it}'\beta + \varepsilon_{it}.$$

• The time-specific quantities are updated recursively through the transition equation,

$$\lambda_t = \Phi_{1t}\lambda_{t-1} + \eta_{1t}.$$

• Here, $\{\eta_{1t}\}$ are i.i.d. mean zero random vectors.

• As another way of incorporating dynamics, we also assume an AR($p$) structure for the disturbances

– autoregressive of order $p$ (AR($p$)) model

$$\varepsilon_{i,t} = \phi_1\varepsilon_{i,t-1} + \phi_2\varepsilon_{i,t-2} + \cdots + \phi_p\varepsilon_{i,t-p} + \zeta_{i,t}.$$

– Here, $\{\zeta_{i,t}\}$ are i.i.d. mean zero random variables.

Transition equations

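• We now summarize the dynamic behavior of $\varepsilon$ into a single recursive equation.

• Define the $p \times 1$ vector $\xi_{i,t} = (\varepsilon_{i,t}, \varepsilon_{i,t-1}, \ldots, \varepsilon_{i,t-p+1})'$ so that we may write

$$\xi_{i,t} = \begin{pmatrix} \phi_1 & \phi_2 & \cdots & \phi_{p-1} & \phi_p \\ 1 & 0 & \cdots & 0 & 0 \\ 0 & 1 & \cdots & 0 & 0 \\ \vdots & \vdots & \ddots & \vdots & \vdots \\ 0 & 0 & \cdots & 1 & 0 \end{pmatrix}\xi_{i,t-1} + \begin{pmatrix} \zeta_{i,t} \\ 0 \\ \vdots \\ 0 \end{pmatrix} = \Phi_2\,\xi_{i,t-1} + \eta_{2i,t}$$

• Stacking this over $i = 1, \ldots, n$ yields

$$\xi_t = \begin{pmatrix} \xi_{1,t} \\ \vdots \\ \xi_{n,t} \end{pmatrix} = \begin{pmatrix} \Phi_2\,\xi_{1,t-1} \\ \vdots \\ \Phi_2\,\xi_{n,t-1} \end{pmatrix} + \begin{pmatrix} \eta_{21,t} \\ \vdots \\ \eta_{2n,t} \end{pmatrix} = (I_n \otimes \Phi_2)\,\xi_{t-1} + \eta_{2t}$$

• Here, $\xi_t$ is an $np \times 1$ vector, $I_n$ is an $n \times n$ identity matrix and $\otimes$ is a Kronecker (direct) product (see Appendix A.6).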
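The companion-matrix form of the AR($p$) recursion and its stacked version $I_n \otimes \Phi_2$ can be built in a few lines of NumPy. The sketch below uses hypothetical function names and example coefficients; `np.kron` is NumPy's Kronecker product.

```python
import numpy as np

def companion(phis):
    """p x p matrix Phi_2: AR coefficients in the first row and an
    identity block below that shifts lagged disturbances down."""
    phis = np.asarray(phis, dtype=float)
    p = len(phis)
    Phi2 = np.zeros((p, p))
    Phi2[0, :] = phis
    Phi2[1:, :-1] = np.eye(p - 1)
    return Phi2

def stacked_transition(phis, n):
    """(np x np) transition matrix I_n (Kronecker) Phi_2 for n subjects."""
    return np.kron(np.eye(n), companion(phis))

# Hypothetical AR(2) coefficients and n = 3 subjects
Phi2 = companion([0.5, 0.3])
big = stacked_transition([0.5, 0.3], n=3)

# One step of the recursion without the innovation:
# (eps_{t-1}, eps_{t-2}) -> (phi_1 eps_{t-1} + phi_2 eps_{t-2}, eps_{t-1})
xi_prev = np.array([2.0, 1.0])
xi_next_mean = Phi2 @ xi_prev
```

The block-diagonal structure of `big` reflects that each subject's disturbance vector is updated with the same $\Phi_2$ and does not feed into other subjects' vectors.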

Spatial correlation

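• The spatial correlation matrix is defined as $H_n = \mathrm{Var}(\zeta_{1,t}, \ldots, \zeta_{n,t})/\sigma^2$, for all $t$.

• We assume no cross-temporal spatial correlation so that $\mathrm{Cov}(\zeta_{i,s}, \zeta_{j,t}) = 0$ for $s \ne t$.

• Thus,

$$\mathrm{Var}\,\eta_{2t} = H_n \otimes \begin{pmatrix} \sigma^2 & 0 \\ 0 & 0_{p-1} \end{pmatrix}$$

• Recall that $\varepsilon_{i,t} = \phi_1\varepsilon_{i,t-1} + \cdots + \phi_p\varepsilon_{i,t-p} + \zeta_{i,t}$ and

$$\xi_{i,t} = \begin{pmatrix} \phi_1 & \phi_2 & \cdots & \phi_{p-1} & \phi_p \\ 1 & 0 & \cdots & 0 & 0 \\ 0 & 1 & \cdots & 0 & 0 \\ \vdots & \vdots & \ddots & \vdots & \vdots \\ 0 & 0 & \cdots & 1 & 0 \end{pmatrix}\xi_{i,t-1} + \begin{pmatrix} \zeta_{i,t} \\ 0 \\ \vdots \\ 0 \end{pmatrix} = \Phi_2\,\xi_{i,t-1} + \eta_{2i,t}$$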

Two sources of dynamic behavior

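• We now collect the two sources of dynamic behavior, $\varepsilon$ and $\lambda$, into a single transition equation.

$$\delta_t = \begin{pmatrix} \lambda_t \\ \xi_t \end{pmatrix} = \begin{pmatrix} \Phi_{1,t}\,\lambda_{t-1} \\ (I_n \otimes \Phi_2)\,\xi_{t-1} \end{pmatrix} + \begin{pmatrix} \eta_{1t} \\ \eta_{2t} \end{pmatrix} = \begin{pmatrix} \Phi_{1,t} & 0 \\ 0 & I_n \otimes \Phi_2 \end{pmatrix}\begin{pmatrix} \lambda_{t-1} \\ \xi_{t-1} \end{pmatrix} + \eta_t = T_t\,\delta_{t-1} + \eta_t$$

• Assuming independence, we have

$$Q_t = \mathrm{Var}\,\eta_t = \begin{pmatrix} \mathrm{Var}\,\eta_{1t} & 0 \\ 0 & \mathrm{Var}\,\eta_{2t} \end{pmatrix} = \sigma^2\begin{pmatrix} Q_{1t} & 0 \\ 0 & H_n \otimes \begin{pmatrix} 1 & 0 \\ 0 & 0_{p-1} \end{pmatrix} \end{pmatrix} = \sigma^2 Q_t^*$$

• To initialize the recursion, we assume that $\delta_0$ is a vector of parameters to be estimated.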

Measurement equations

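• For the $t$th time period, with observed subjects $i_1, \ldots, i_{n_t}$, we have

$$y_t = \begin{pmatrix} y_{i_1,t} \\ y_{i_2,t} \\ \vdots \\ y_{i_{n_t},t} \end{pmatrix} = \begin{pmatrix} x_{i_1,t}' \\ x_{i_2,t}' \\ \vdots \\ x_{i_{n_t},t}' \end{pmatrix}\beta + \begin{pmatrix} z_{\alpha,i_1,t}' & 0 & \cdots & 0 \\ 0 & z_{\alpha,i_2,t}' & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & z_{\alpha,i_{n_t},t}' \end{pmatrix}\begin{pmatrix} \alpha_{i_1} \\ \alpha_{i_2} \\ \vdots \\ \alpha_{i_{n_t}} \end{pmatrix} + \begin{pmatrix} z_{\lambda,i_1,t}' \\ z_{\lambda,i_2,t}' \\ \vdots \\ z_{\lambda,i_{n_t},t}' \end{pmatrix}\lambda_t + \begin{pmatrix} \varepsilon_{i_1,t} \\ \varepsilon_{i_2,t} \\ \vdots \\ \varepsilon_{i_{n_t},t} \end{pmatrix}$$

• That we express as

$$y_t = X_t\beta + Z_{\alpha,t}\alpha + Z_{\lambda,t}\lambda_t + W_{1t}\xi_t = X_t\beta + Z_{\alpha,t}\alpha + W_t\delta_t$$

• With

$$X_t = \begin{pmatrix} x_{i_1,t}' \\ x_{i_2,t}' \\ \vdots \\ x_{i_{n_t},t}' \end{pmatrix}, \quad Z_{\alpha,t} = \begin{pmatrix} z_{\alpha,i_1,t}' & 0 & \cdots & 0 \\ 0 & z_{\alpha,i_2,t}' & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & z_{\alpha,i_{n_t},t}' \end{pmatrix}(M_t \otimes I_q), \quad Z_{\lambda,t} = \begin{pmatrix} z_{\lambda,i_1,t}' \\ z_{\lambda,i_2,t}' \\ \vdots \\ z_{\lambda,i_{n_t},t}' \end{pmatrix}$$

$$W_t = \begin{pmatrix} Z_{\lambda,t} & W_{1t} \end{pmatrix}, \quad \delta_t = \begin{pmatrix} \lambda_t \\ \xi_t \end{pmatrix}, \quad \alpha = \begin{pmatrix} \alpha_1 \\ \alpha_2 \\ \vdots \\ \alpha_n \end{pmatrix}$$

Here $M_t$ selects, from all $n$ subjects, the $n_t$ subjects observed at time $t$, and $W_{1t}\xi_t$ returns the current disturbances $(\varepsilon_{i_1,t}, \ldots, \varepsilon_{i_{n_t},t})'$.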

• That is, fixed and random effects, with a disturbance term that is updated recursively.

Capital asset pricing model

• We use the equation $y_{it} = \beta_{0i} + \beta_{1i}\,x_{mt} + \varepsilon_{it}$,

• where

– $y$ is the security return in excess of the risk-free rate,

– $x_m$ is the market return in excess of the risk-free rate.

• We consider $n = 90$ firms from the insurance carriers that were listed on the CRSP files as of December 31, 1999.

• The “insurance carriers” group consists of those firms with standard industrial classification (SIC) codes ranging from 6310 through 6331, inclusive.

• For each firm, we used sixty months of data ranging from January 1995 through December 1999.

Table 8.2. Summary Statistics for Market Index and Risk Free Security

Based on sixty monthly observations, January 1995 to December 1999.

| Variable | Mean | Median | Minimum | Maximum | Standard deviation |
|---|---|---|---|---|---|
| VWRETD (Value weighted index) | 2.091 | 2.946 | -15.677 | 8.305 | 4.133 |
| RISKFREE (Risk free) | 0.408 | 0.415 | 0.296 | 0.483 | 0.035 |
| VWFREE (Value weighted in excess of risk free) | 1.684 | 2.517 | -16.068 | 7.880 | 4.134 |

Table 8.3. Summary Statistics for Individual Security Returns

Based on 5,400 monthly observations, January 1995 to December 1999, taken from 90 firms.

| Variable | Mean | Median | Minimum | Maximum | Standard deviation |
|---|---|---|---|---|---|
| RET (Individual security return) | 1.052 | 0.745 | -66.197 | 102.500 | 10.038 |
| RETFREE (Individual security return in excess of risk free) | 0.645 | 0.340 | -66.579 | 102.085 | 10.036 |

Table 8.4. Fixed effects models

| Summary measure | Homogeneous model | Variable intercepts model | Variable slopes model | Variable intercepts and slopes model | Variable slopes model with AR(1) term |
|---|---|---|---|---|---|
| Residual std deviation ($s$) | 9.59 | 9.62 | 9.53 | 9.54 | 9.53 |
| -2 ln Likelihood | 39,751.2 | 39,488.6 | 39,646.5 | 39,350.6 | 39,610.9 |
| AIC | 39,753.2 | 39,490.6 | 39,648.5 | 39,352.6 | 39,614.9 |
| AR(1) corr ($\rho$) | | | | | -0.08426 |
| $t$-statistic for $\rho$ | | | | | -5.98 |

Time-varying coefficients models

• We investigate models of the form: $y_{it} = \beta_0 + \beta_{1,i,t}\,x_{m,t} + \varepsilon_{it}$,

• where $\varepsilon_{it} = \rho_\varepsilon\,\varepsilon_{i,t-1} + \eta_{1,it}$,

• and $\beta_{1,i,t} - \beta_{1,i} = \rho_\beta\,(\beta_{1,i,t-1} - \beta_{1,i}) + \eta_{2,it}$.

• We assume that $\{\varepsilon_{it}\}$ and $\{\beta_{1,i,t}\}$ are stationary AR(1) processes.

• The slope coefficient, $\beta_{1,i,t}$, is allowed to vary by both firm $i$ and time $t$.

• We assume that each firm has its own stationary mean $\beta_{1,i}$ and variance $\mathrm{Var}\,\beta_{1,i,t}$.

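Expressing CAPM in terms of the Kalman Filter

• First define $j_{n,i}$ to be an $n \times 1$ vector, with a “one” in the $i$th row and zeroes elsewhere.

• Further define

$$x_{it} = \begin{pmatrix} 1 \\ j_{n,i}\,x_{mt} \end{pmatrix}, \quad \beta = \begin{pmatrix} \beta_0 \\ \beta_{1,1} \\ \vdots \\ \beta_{1,n} \end{pmatrix}, \quad z_{\lambda,it} = j_{n,i}\,x_{mt}, \quad \lambda_t = \begin{pmatrix} \beta_{1,1t} - \beta_{1,1} \\ \vdots \\ \beta_{1,nt} - \beta_{1,n} \end{pmatrix}$$

• Thus, with this notation, we have

$$y_{it} = \beta_0 + \beta_{1,i,t}\,x_{mt} + \varepsilon_{it} = z_{\lambda,i,t}'\lambda_t + x_{it}'\beta + \varepsilon_{it}.$$

• no random effects….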

Kalman filter expressions

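• For the updating matrix for time-varying coefficients we use $\Phi_{1t} = I_n\,\rho_\beta$.

• For the AR(1) error structure, we have that $p = 1$ and $\Phi_2 = \rho_\varepsilon$.

• Thus, we have

$$\delta_t = \begin{pmatrix} \lambda_t \\ \xi_t \end{pmatrix} = \begin{pmatrix} \beta_{1,1t} - \beta_{1,1} \\ \vdots \\ \beta_{1,nt} - \beta_{1,n} \\ \varepsilon_{1t} \\ \vdots \\ \varepsilon_{nt} \end{pmatrix}, \quad T_t = \begin{pmatrix} I_n\,\rho_\beta & 0 \\ 0 & I_n\,\rho_\varepsilon \end{pmatrix} = \begin{pmatrix} \rho_\beta & 0 \\ 0 & \rho_\varepsilon \end{pmatrix} \otimes I_n$$

• and

$$Q_t = \begin{pmatrix} \mathrm{Var}\,\eta_{1t} & 0 \\ 0 & \mathrm{Var}\,\eta_{2t} \end{pmatrix} = \begin{pmatrix} (1 - \rho_\beta^2)\,\sigma_\beta^2\,I_n & 0 \\ 0 & (1 - \rho_\varepsilon^2)\,\sigma_\varepsilon^2\,I_n \end{pmatrix}$$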

Table 8.5 Time-varying CAPM models

| Parameter | Model fit with $\rho_\varepsilon$ parameter: Estimate | Standard Error | Model fit without $\rho_\varepsilon$ parameter: Estimate | Standard Error |
|---|---|---|---|---|
| $\sigma$ | 9.527 | 0.141 | 9.527 | 0.141 |
| $\rho_\varepsilon$ | -0.084 | 0.019 | | |
| $\rho_\beta$ | -0.186 | 0.140 | -0.265 | 0.116 |
| $\sigma_\beta$ | 0.864 | 0.069 | 0.903 | 0.068 |

• The model with both time series parameters provided the best fit.

• The model without the $\rho_\varepsilon$ parameter yielded a statistically significant estimate of the $\rho_\beta$ parameter, the primary quantity of interest.

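BLUPs of $\beta_{1,it}$

• Pleasant calculations show that the BLUP of $\beta_{1,i,t}$ is

$$b_{1,i,t,BLUP} = b_{1,i,t,GLS} + \sigma_\beta^2\begin{pmatrix} \rho_\beta^{|t-1|}\,x_{m,1} & \cdots & \rho_\beta^{|t-T_i|}\,x_{m,T_i} \end{pmatrix}(\mathrm{Var}\,y_i)^{-1}\big(y_i - b_{0,GLS}\,1_i - b_{1,i,t,GLS}\,x_m\big)$$

• where

$$x_m = (x_{m,1}, \ldots, x_{m,T_i})', \quad \mathrm{Var}\,y_i = \sigma_\beta^2\,X_m R_{AR}(\rho_\beta) X_m + \sigma_\varepsilon^2\,R_{AR}(\rho_\varepsilon), \quad X_m = \mathrm{diag}(x_{m,1}, \ldots, x_{m,T_i})$$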
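The variance expression $\mathrm{Var}\,y_i = \sigma_\beta^2 X_m R_{AR}(\rho_\beta) X_m + \sigma_\varepsilon^2 R_{AR}(\rho_\varepsilon)$ is straightforward to assemble numerically. The sketch below is illustrative only: the function names are hypothetical, the market excess returns `x_m` are made-up values, and the parameter values are the "with $\rho_\varepsilon$" estimates from Table 8.5, taking the table's $\sigma$ as $\sigma_\varepsilon$.

```python
import numpy as np

def r_ar(rho, T):
    """AR(1) correlation matrix with (r, s) element rho ** |r - s|."""
    lag = np.abs(np.subtract.outer(np.arange(T), np.arange(T)))
    return rho ** lag

def var_y_i(x_m, rho_beta, sigma_beta, rho_eps, sigma_eps):
    """Var y_i = sigma_beta^2 X_m R_AR(rho_beta) X_m + sigma_eps^2 R_AR(rho_eps),
    with X_m = diag(x_m)."""
    x_m = np.asarray(x_m, dtype=float)
    T = len(x_m)
    X_m = np.diag(x_m)
    slope_part = sigma_beta ** 2 * X_m @ r_ar(rho_beta, T) @ X_m
    error_part = sigma_eps ** 2 * r_ar(rho_eps, T)
    return slope_part + error_part

# Hypothetical market excess returns for four months
x_m = [1.5, -2.0, 3.1, 0.4]
V_i = var_y_i(x_m, rho_beta=-0.186, sigma_beta=0.864,
              rho_eps=-0.084, sigma_eps=9.527)
```

Element $(r, s)$ of the result equals $\sigma_\beta^2\,x_{m,r}\,x_{m,s}\,\rho_\beta^{|r-s|} + \sigma_\varepsilon^2\,\rho_\varepsilon^{|r-s|}$, so months with large market movements contribute more slope-driven variance.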

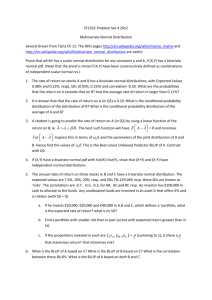

BLUP predictors

• Time series plot of BLUP predictors of the slope associated with the market returns and returns for the Lincoln National Corporation. The upper panel shows the BLUP predictors of the slopes. The lower panel shows the monthly returns.

[Figure panels: upper, BLUP versus Year; lower, retLinc versus Year; the horizontal axes run from 1995 to 2000.]

Appendix D. State Space Model and the Kalman Filter

• Basic State Space Model

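– Recall the observation equation $y_t = W_t\delta_t + \varepsilon_t$

– and the transition equation $\delta_t = T_t\delta_{t-1} + \eta_t$.

• Define

– $\mathrm{Var}_{t-1}\,\varepsilon_t = H_t$ and $\mathrm{Var}_{t-1}\,\eta_t = Q_t$.

– $d_0 = E\,\delta_0$, $P_0 = \mathrm{Var}\,\delta_0$ and $P_t = \mathrm{Var}_t\,\delta_t$.

• Assume that $\{\varepsilon_t\}$ and $\{\eta_t\}$ are mutually independent.

• Stacking, we have

$$y = \begin{pmatrix} y_1 \\ y_2 \\ \vdots \\ y_T \end{pmatrix} = \begin{pmatrix} W_1\delta_1 + \varepsilon_1 \\ W_2\delta_2 + \varepsilon_2 \\ \vdots \\ W_T\delta_T + \varepsilon_T \end{pmatrix} = \begin{pmatrix} W_1 & 0 & \cdots & 0 \\ 0 & W_2 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & W_T \end{pmatrix}\begin{pmatrix} \delta_1 \\ \delta_2 \\ \vdots \\ \delta_T \end{pmatrix} + \begin{pmatrix} \varepsilon_1 \\ \varepsilon_2 \\ \vdots \\ \varepsilon_T \end{pmatrix} = W\delta + \varepsilon$$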

Kalman Filter Algorithm

• Taking a conditional expectation and variance of the transition equation yields the “prediction equations”

$$d_{t/t-1} = E_{t-1}\,\delta_t = T_t\,d_{t-1}$$

– and

$$P_{t/t-1} = \mathrm{Var}_{t-1}\,\delta_t = T_t P_{t-1} T_t' + Q_t.$$

• Taking a conditional expectation and variance of the measurement equation yields

$$E_{t-1}\,y_t = W_t\,d_{t/t-1}$$

– and

$$F_t = \mathrm{Var}_{t-1}\,y_t = W_t P_{t/t-1} W_t' + H_t.$$

• The updating equations are

$$d_t = d_{t/t-1} + P_{t/t-1} W_t' F_t^{-1}\,(y_t - W_t\,d_{t/t-1})$$

– and

$$P_t = P_{t/t-1} - P_{t/t-1} W_t' F_t^{-1} W_t P_{t/t-1}.$$

• The updating equations are motivated by joint normality of $\delta_t$ and $y_t$.

Likelihood Equations

• The updating equations allow one to recursively compute $E_{t-1}\,y_t$ and $F_t = \mathrm{Var}_{t-1}\,y_t$

• The log-likelihood of $\{y_1, \ldots, y_T\}$ may be expressed as

$$L = \ln f(y_1, \ldots, y_T) = \ln\Big( f(y_1)\prod_{t=2}^{T} f(y_t \mid y_1, \ldots, y_{t-1}) \Big) = -\frac{1}{2}\Big[ N\ln 2\pi + \sum_{t=1}^{T}\ln\det(F_t) + \sum_{t=1}^{T}(y_t - E_{t-1}y_t)' F_t^{-1}(y_t - E_{t-1}y_t) \Big]$$

• This is much simpler to evaluate (and maximize) than the full likelihood expression.

• From the Kalman filter algorithm, we see that $E_{t-1}\,y_t$ is a linear combination of $\{y_1, \ldots, y_{t-1}\}$. Thus, we may write

$$Ly = L\begin{pmatrix} y_1 \\ y_2 \\ \vdots \\ y_T \end{pmatrix} = \begin{pmatrix} y_1 - E_0\,y_1 \\ y_2 - E_1\,y_2 \\ \vdots \\ y_T - E_{T-1}\,y_T \end{pmatrix}$$

• where $L$ is an $N \times N$ lower triangular matrix with ones on the diagonal.

– Elements of the matrix $L$ do not depend on the random variables.

– Components of $Ly$ are mean zero and are mutually uncorrelated.

– That is, conditional on $\{y_1, \ldots, y_{t-1}\}$, the $t$th component of $Ly$, $v_t$, has variance $F_t$.
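The prediction, updating and likelihood recursions of this appendix can be combined in one short routine. The following NumPy sketch is a minimal illustration under simplifying assumptions made here, not in the notes: the system matrices $W$, $T$, $H$ and $Q$ are taken to be constant over time, every $y_t$ has the same length, and the function name and argument names are hypothetical.

```python
import numpy as np

def kalman_loglik(y, W, T_mat, H, Q, d0, P0):
    """Gaussian log-likelihood via the prediction error decomposition.

    y     : array (n_periods, m), one row per time period.
    W     : (m, k) observation matrix; T_mat : (k, k) transition matrix.
    H, Q  : (m, m) and (k, k) variances of the observation and
            transition disturbances (assumed time-invariant here).
    d0, P0: mean and variance of the initial state delta_0.
    Returns (loglik, d_T, P_T).
    """
    d, P = np.array(d0, dtype=float), np.array(P0, dtype=float)
    loglik = 0.0
    for y_t in y:
        # Prediction equations
        d_pred = T_mat @ d
        P_pred = T_mat @ P @ T_mat.T + Q
        # One-step forecast error v_t and its variance F_t
        v = y_t - W @ d_pred
        F = W @ P_pred @ W.T + H
        F_inv = np.linalg.inv(F)
        _, logdet = np.linalg.slogdet(F)
        loglik += -0.5 * (len(y_t) * np.log(2 * np.pi) + logdet + v @ F_inv @ v)
        # Updating equations
        gain = P_pred @ W.T @ F_inv
        d = d_pred + gain @ v
        P = P_pred - gain @ W @ P_pred
    return loglik, d, P
```

For a scalar state with AR(1) dynamics observed with noise, the value returned by this routine agrees with the log-density of the stacked vector $y$ computed directly from its full covariance matrix, which is one way to check an implementation on small examples.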

Extensions

• Appendix D provides extensions to the mixed linear model

– The linearity of the transform turns out to be important

• Section 8.5 shows how to extend this to the longitudinal data case.

• We can estimate initial values as parameters

• Can incorporate many different dynamic patterns for both $\varepsilon$ and $\lambda$

• Can also incorporate spatial relations