

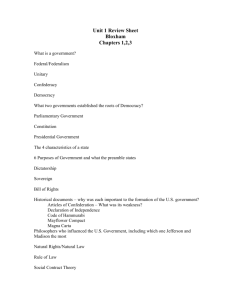

Ellen Mickiewicz

The Impact of Democracy and Press Freedom Ratings of Countries Worldwide: Dilemmas of quantitative and cultural perspectives

RATING COUNTRIES ON THE DEMOCRACY SCALE

Today I am going to talk about the extremely influential practice of rating countries all over the globe in terms of their closeness to or distance from democracy. In the process, I shall also examine the pitfalls in an influential poll about censorship, a poll whose flaws of structure and interpretation are, unfortunately, far from rare. Finally, I shall discuss the commonly used and virtually uninterpretable practice of asking respondents in large-scale mass public opinion polls to diagnose themselves: to identify the cause of their own behavior.

DEMOCRACY: A DEFINITION

The fundamental question in any attempt to rate degrees of democracy among different countries is what to use as a definition of democracy. Most scholars in this field rely on the work of Robert Dahl, who defines democracy (polyarchy) along two dimensions: contestation and participation. Each has subcategories subject to evaluation. It should be obvious that the theoretical foundation of democracy should thus be the source of the ratings or indicators of democracy or press freedom, and that the categories to be rated should be derived from that foundation in order to have meaning and structure. How else, without a guiding theory, could one arrive at a coherent system of the elements of democracy?

We should, I think, be interested in, and possibly concerned about, the process of rating countries as more or less democratic. These ratings influence the allocation of funding for democratization assistance by the United Nations, the International Monetary Fund, the World Bank, country-level donors such as the United States Agency for International Development and the United States Department of State, and NGOs such as IREX (the International Research and Exchanges Board) and Reporters Without Borders. A recent entry into the ratings arena is The Economist, which intends to provide ratings based more heavily on qualitative, i.e. subjective, mass public opinion surveys. In the allocation of resources on a global scale, ratings are taken seriously.

Where do the ratings come from?

I want to divide these remarks into two spatial sectors, as it were: ratings as they anchor scholarly research, and ratings as they drive the policy of governments and international organizations. The dimensions of the research dilemma are, to my mind, enormous. There are some very fine scholars working on these issues, most notably Michael Coppedge, along with Kenneth Bollen, Gerardo Munck, Ted Robert Gurr, Mark Gasiorowski, Zehra Arat, and Axel Hadenius. Many of the concerns I raise in these remarks are drawn from this literature and from my own experience with cross-national research. These scholars and others similarly engaged are attempting to make the ranking process more reliable, accurate, and theoretically sound. My concern, in the weaknesses I analyze below, is not with them but with the very large research literature in the US, Europe, and Latin America that aims to discover what causes democracy or what democracy causes.
Put differently, in political science terms: what might be the associations between variations in democracy and variations in other variables? For example, democracy can be a dependent variable, should one wish to look at measures of association between the independent, “explanatory” variables and the outcome, democracy. On the other hand, as an independent variable, level of democracy might be one of the variables explaining, for example, economic growth. This research is quantitative and fairly technical, pervasive in the academy and influential in the social science disciplines. When you see global issues of democracy treated in scholarly research, it is highly likely that country ratings are crucial data in the study.

In the world of policy and assistance, where large amounts of money are at stake, one organization has had unparalleled success and a near-monopoly. Freedom House has been the longtime leader in the provision of country-level indicators in the global context. Unfortunately, in my view, the scholarly research world also uses its ratings, which calls into question that research and its findings.

The tremendous asset of time series data covering much of the globe

Freedom House has a very impressive and unique time series. This is akin to an enormous investment in the bank, understood as a databank: the entire time series runs from 1973 on. If one wishes to study the relationship to democracy of such variables as economic wealth per capita, indices of inequality, literacy, urbanization, or comparisons of attitudes, a time series of this size is unequalled. The grading and rating methods have, however, changed over time. Freedom House asserts on its web page and elsewhere that the results are unaffected by this change. Yet in a report on its own methodology, Freedom House’s Joseph E. Ryan notes that “(In the Surveys completed from 1989-90 through 1992-93, the methodology allowed for a less nuanced range of 0 to 2 raw points per question.
Taking note of this modification, scholars should consider the 1993-94 scores the statistical benchmark.)” [1]

With respect to the selection of democracy and rights indicators, the clearest description of the organizing principle may be found in the most credible source, Raymond Gastil himself. As he put it, “With little or no staff support the author has carried out most of the research and ratings [for Freedom House, 1978-1989]…by working alone the author has not had to integrate the judgments of a variety of people….” [2]

Although I stressed above the importance of theory-based generation of ratings, that approach was rejected by Gastil from the start of his work. His rejection of theory left him and Freedom House without the “anchor” that theory provides, for it is theory that gives meaning to ratings. Put another way, high construct validity (measuring what you intend to measure, or believe you are measuring) depends first on choosing the correct indicators and then on combining them correctly. Gastil’s approach, rather, has been that theory is actually an obstacle to his enterprise. Arguably, then, the most important problem with the comparative world indices of Freedom House is that they are not only atheoretical but antitheoretical. Theories of democracy are considered obstacles. Freedom House prefers observations by journalists.
Gastil produced his own checklist using reference books, and “in effect, the author developed rough models in his mind as to what to expect of a country at each rating level, reexamining his ratings only when current information no longer supported this model.” [3] What is important to note here is that (1) a single individual, sometimes with interns and/or assistants, determined the checklist of indicators on the basis of journalists’ accounts and other “reference” sources, deliberately divorced from theory, and (2) that individual early on had in his mind an evaluative picture of each country and decided that the picture would prevail over time, absent some highly visible events that the press itself would bring to light. It is hardly surprising that any measure of association among the ratings over the years would tend to be extremely high. It attests to the “sticky” judgment of a single individual rather than to a collective re-examination of the “picture” itself. In any case, results would therefore likely be skewed toward the more sensational, by which I mean the “newsworthy”: what is unusual rather than usual.

Usually, to overcome the likely subjectivity or bias in producing a rating, several individuals trained in the “rules” of what to grade and how to recognize differences in performance (that is, the coding procedure relies on a codebook of rules) will independently code the same piece of information. Their scores, or ratings, are then compared, and if differences emerge, the process must be improved and tested again. In the case of the Freedom House data, and of data from other non-scholarly sources as well, we do not know the coding rules, and there appears to be no inter-coder reliability information (the degree of agreement or disagreement on each factor). It appears, further, that there are no coders of the sort used in the typical scholarly research project, as noted above.
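
To make the independent-coder test just described concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the two coders, the eight cases, and the codes are invented, and Cohen's kappa is simply one widely used agreement statistic, not a procedure attributed to Freedom House or to any of the scholars named here.

```python
from collections import Counter

def cohens_kappa(coder_a, coder_b):
    """Chance-corrected agreement between two coders rating the same items."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must rate the same non-empty set of items")
    n = len(coder_a)
    # Observed agreement: share of items given the same code by both coders.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Expected agreement if each coder assigned codes independently,
    # each at his or her own base rates.
    freq_a = Counter(coder_a)
    freq_b = Counter(coder_b)
    expected = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used one identical code throughout
    return (observed - expected) / (1 - expected)

# Hypothetical codes on a three-category scale for eight invented cases.
coder_a = [1, 1, 2, 2, 3, 3, 1, 2]
coder_b = [1, 1, 2, 3, 3, 3, 1, 1]

kappa = cohens_kappa(coder_a, coder_b)
print(f"kappa = {kappa:.2f}")  # prints "kappa = 0.63"
```

The two invented coders agree on six of eight cases, but part of that agreement would be expected by chance, so kappa is lower than the raw 75 percent. A consensus discussion yields no comparable figure, because only one set of codes ever exists.
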
Instead of independent coding, collective discussion leading to consensus is the norm, which is exactly the opposite of the reliability test of independent coders. This means, importantly, that replicability, an essential element of any project such as this, is not possible. The team producing the survey is very small (the degree of its expertise on the very large number of countries it evaluates can be gauged by careful analysis of the small team of judges), and the ratings of the indicators it devises are simply added, not weighted, as they would have been had the team proceeded from theory and then gone down the vertical of indicators. Because the indices are based on the judgments of very few people, there is a distinct problem of representativeness, not only of the world but also within the rated country. Gastil noted the important “question of balance of positive and negative activities….. if we are to use quantitative measures, we must develop means of measuring both demonstrations that occur and demonstrations suppressed, public criticism not suppressed along with public criticism suppressed” [4] Given the reliance on journalists, and the newsworthiness that is part of their professional culture, it would be difficult to assure adequate attention to the non-newsworthy, but important, everyday behavior in a country.

Weighting

It is obvious that some individual factors, variables, or indicators are more directly and powerfully related to democracy than others, and some far more so. This means that the most meaningful set of indicators should receive the greatest weight, while features that are less central and critical deserve less. It is impossible to devise a system of weighting unless that system is directly related to the theory of democracy guiding the entire project. Freedom House does not weight anything on its checklist.
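
A small sketch in Python may show why the choice between adding and weighting matters. The two countries, the three indicators, their scores, and above all the weights are hypothetical; in a real project the weights would have to be derived from the guiding theory of democracy, which is precisely what this sketch cannot supply.

```python
# Hypothetical checklist scores (0-4) for two invented countries.
scores = {
    "Country A": {"competitive_elections": 1, "freedom_of_assembly": 2, "broadcast_licensing": 4},
    "Country B": {"competitive_elections": 3, "freedom_of_assembly": 2, "broadcast_licensing": 1},
}

# Hypothetical weights: an assumption that elections matter most and
# licensing rules least. A different theory would give different weights.
weights = {"competitive_elections": 3, "freedom_of_assembly": 2, "broadcast_licensing": 1}

def additive_index(indicators):
    """Unweighted index: every indicator counts the same."""
    return sum(indicators.values())

def weighted_index(indicators, weights):
    """Weighted index: each indicator is multiplied by its weight before summing."""
    return sum(weights[name] * value for name, value in indicators.items())

for country, indicators in scores.items():
    print(country, additive_index(indicators), weighted_index(indicators, weights))
```

With these invented numbers, simple addition places Country A ahead (7 against 6), while the weighted index places Country B ahead (14 against 11). The ordering of countries can therefore depend entirely on a weighting decision that an unweighted checklist makes silently, by treating every item as equal.
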
All factors believed to relate to democracy are put in the pot, so to speak, and in the end are simply added up.

Multicollinearity

“Gastil…compiles separate indexes for political rights and civil liberties, even though they are very highly correlated.” [5] There is likely ample multicollinearity among most of the indicators in the indices. It will therefore remain obscure which independent indicators are really in play. Several or even many could well be one and the same basic factor, posed in varied questions that measure the same concept or behavior.

REPLICABILITY

To summarize the importance of this idiosyncratic method, we return to the continuing centrality of replication and the inability of others to perform the same research to test the results.

1. We do not know the coding rules for each coder; there appears to be no inter-coder reliability information at all (if there are coders in the usual sense of the term).
2. The prevailing method, collective discussion leading to consensus, is exactly the opposite of the reliability test of independent coders.
3. Thus the process leading to the widely used conclusions is impossible to replicate.

The indicators, such as they are, are simply added, not weighted, as they would have been had the raters proceeded from theory and then gone down a transitive vertical of indicators.

SCALES

The scale itself is deeply problematic: the raters attach a subjectively derived number to an indicator. That produces an ordinal scale, in which more is relative to less. It does not produce an interval scale, in which, as on a thermometer, the distance between, say, 4 and 6 is exactly the same as the distance between 7 and 9. Only an interval scale provides for what are continuous variables. Assigning numbers to items on an ordinal scale does not by itself turn an ordinal scale into an interval scale.
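
The difference between ordinal and interval scales can be illustrated with a short sketch in Python. The ratings and the two groups of countries are invented, and the second set of numeric labels is an arbitrary assumption chosen only because it preserves the order of the seven categories while changing the spacing between them.

```python
from statistics import mean, median

# Two numeric labelings of the same seven ordered categories. Both keep the
# order intact; they differ only in the (arbitrary) spacing between categories.
even_spacing = {1: 1, 2: 2, 3: 3, 4: 4, 5: 5, 6: 6, 7: 7}
uneven_spacing = {1: 1, 2: 2, 3: 3, 4: 4, 5: 5, 6: 6, 7: 20}

# Hypothetical ratings for two invented groups of three countries each.
group_x = [1, 2, 7]
group_y = [4, 4, 4]

def summarize(ratings, labels):
    """Return (mean, median) of the ratings after applying a numeric labeling."""
    values = [labels[rating] for rating in ratings]
    return mean(values), median(values)

for name, labels in [("even", even_spacing), ("uneven", uneven_spacing)]:
    mean_x, median_x = summarize(group_x, labels)
    mean_y, median_y = summarize(group_y, labels)
    print(name, "| mean X > mean Y:", mean_x > mean_y,
          "| median X > median Y:", median_x > median_y)
```

Under the evenly spaced labels, group Y has the higher mean; under the unevenly spaced labels, group X does. The comparison of medians, which uses only order, is the same in both cases. Any statistic that relies on distances between scale points, as means and regression coefficients do, inherits this arbitrariness when the underlying scale is only ordinal.
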
The rating enterprise with which we are concerned here does not appear to engage in complex statistical analysis, yet many scholars whose analyses do employ techniques such as logistic regression often use the numbers Freedom House has produced on its ordinal scales.

THE BIAS TOWARD CAPITALISM

Privileging Capitalism

In the IREX ratings, roughly a quarter of the items related to freedom of the press refer to the workings of an unfettered market and to the importance of advertising revenues as an economic model for viability and autonomy. In the Freedom House ratings, there is nothing on labor movements, rights of workers, protection of workers, or similar practices that challenge the heavily pro-capitalist thrust of the project as a whole.

Future of greater stratification in terms of political knowledge?

Unfortunately, what lies ahead is the strong possibility of political self-segregation: the ability of the public to seek only that with which it agrees. Such an outcome, if it happens, may well produce an even more pronounced stratification of knowledge and participation, leaving a narrower layer of elites alongside a larger group of citizens who self-select their sources precisely to exclude diversity. The nascent field of research on such matters suggests that one’s personal, face-to-face circle of friends tends to be the least diverse, with individuals seeking as friends others with whom they agree. The highest degree of diversity was recorded in the heyday of broadcast television in the United States, when only three national networks competed for the entire national population of television watchers. Each network, to remain economically viable, was constrained to satisfy the greatest range of preferences and therefore to have “something for everybody”: classical opera excerpts, for example, coexisted with sports, and comedians with composers. News was virtually the same on all three, and editorializing was forbidden.
Cable introduced much greater choice and the option to customize one’s viewing according to one’s preferences, political preferences among them. With the advent of the Internet, the choice available to users, and their capacity to customize and to exclude or include diversity, has exploded. There is less self-segregation on the Internet than among real-life circles of friends and for certain television events, but that is not a final judgment. Microblogging, particularly since the advent of Twitter, may be an extremely efficient and low-cost instrument for creating “flash crowds”, and these in turn, as we have seen in North Africa and the Middle East, can have revolutionary consequences. The long-term political staying power of the mainly young participants themselves, however, may be limited by competition from older, more politically experienced, and more organized groupings. Nonetheless, it is important to keep in mind that observations such as these are likely to be very different across cultures, as my own research has found.

THE POLITICAL PHILOSOPHICAL BASIS FOR THE SEPARATION OF POLITICAL AND ECONOMIC RIGHTS

The ideological basis for separating civil and economic rights

It has become customary to separate political/civil rights from economic rights. On this view, valuing political rights puts the respondent in the democratic camp, while a preference for economic rights denotes an aversion to democracy. I would argue that the so-called opposition of these two answers is a vestige of the Cold War that has given us little analytic purchase. Throughout the Cold War the position of the United States was that only political/civil rights were the true democratic human rights and that a commitment primarily to social/economic rights would mean a highly intrusive State overwhelming the individual. The Soviet Union countered that economic rights (whether or not they were met) were more important.
Each attacked the other for the absence or violation of fundamental human rights. The West held up the Soviet Union as a violator of human rights, understood as civil liberties, the most basic foundation of democracy, and argued that social and economic rights undermined political freedoms. The Soviet Union countered that without the meeting of basic economic needs, without guaranteed work, housing, and food, the human being is denied life and the exercise of any rights. These two orders of rights unfortunately still occupy distinctly different spaces in the contest over the true meaning of democracy. This asymmetry continues: merging economic and civil rights in order to approximate the real world more nearly has not been achieved. It is evident, on a more flexible understanding, that multiple rights are held simultaneously: starvation, immobilizing illness, and childbearing in an insalubrious environment may nullify the ability to advocate, say, freedom of the press or freedom of assembly. In other words, we should not be looking at classes of rights, only one of which signals democratic attitudes and tendencies, but at a complex interactivity in which basic political and basic economic rights (and others as they proliferate) are intertwined and interdependent. The United Nations, in large part because of the work of Amartya Sen, has adopted a new standard of living index based on capabilities (related to the work Martha Nussbaum has done on Aristotle). [footnote 1] It is obvious that we gain little by devising surveys in which two complementary and interdependent sets of attitudes are pitted against one another and one is deemed democratic while the other is its opposite.

Footnote 1. Amartya Sen writes in Development as Freedom: “It is sometimes argued that in a poor country it would be a mistake to worry too much about the unacceptability of coercion – a luxury that only the rich countries can ‘afford’ – and that poor people are not really bothered by coercion. It is not at all clear on what evidence this argument is based….while arguments are often presented to suggest that people who are very poor do not value freedom, in general and reproductive freedom in particular, the evidence insofar as it exists, is certainly to the contrary. People do, of course, value – and have reason to value – other things as well, including well-being and security, but that does not make them indifferent to their political, civil, or reproductive rights.”

One of the most respected polling agencies in the world is the Pew Research Center. Its Survey of Global Attitudes includes 47 countries and consistently uses the same protocol, and it has a very impressive time series of data. Here are two of its questions intended to distinguish democratic from non-democratic attitudes, with the results for Russia:

Q.50: “If you had to choose between a good democracy or a strong economy, which would you say is more important?” Russia: good democracy 15%, strong economy 74%, DK 11%.

Q.51: “Some feel that we should rely on a democratic form of government to solve our country’s problems. Others feel that we should rely on a leader with a strong hand to solve our country’s problems. Which comes closer to your opinions?” Russia: democratic form of government 27%, strong leader 63%, DK 11%. [6]

In my view it is impossible to interpret what respondents have in mind. Because the categories are far from mutually exclusive, it is not illogical or undemocratic to hope for both economic well-being and personal freedoms. As for the strong leader, it is not unusual to hear readers ask: “Should we elect a weak leader?” Pew is considered the gold standard, and this 47-country survey is an enormously important undertaking, meriting the headlines its results are given in The New York Times.

THE INTERPRETATION OF CENSORSHIP

Attempts to find out what Russians “really” think of censorship are extraordinarily difficult and nuanced. Among our focus group participants, the subject came up when they were talking about Soviet-era television.
The problem is what they mean by censorship. In one focus group, for example, a participant used the word three times in the space of two sentences, each time with a different meaning. One meaning describes the elimination of differing political views in a straitjacket of government control. The second meaning is quite different: it describes a reasonable regulatory system that seeks to protect children by moving obscenity and excessive violence to later hours, when small children are no longer watching. For Russian respondents, perhaps because the experiment with democracy did not last long enough, the notion of regulation is still carried by the word “censorship”, and it is this meaning of regulation that accounts for by far the largest number of survey responses when national opinion surveys ask about censorship in a methodologically correct way. The third meaning appears idiosyncratic to Russian society, but it is very widespread there: Russians also use the word when protesting the decline of the national language in the media. Subpar surveys that fail to differentiate among these meanings produce findings such as the claim that almost three quarters of Russians surveyed are open to the return of censorship. When this annual finding was released, a prominent New York Times article reported the dismaying result, which also figured in an article in Foreign Affairs. But the poll itself, with its deeply flawed methodology, had been an utterly useless and opaque exercise, and the journalistic uptake an unfortunate by-product.

Self-Diagnosis

Finally, there is a question almost always used in mass opinion surveys and considered especially important: “What influenced you most to vote for X in the last election?” or “What influenced you most in your decision to leave the country?” Self-diagnosis is pernicious for two main reasons. First, respondents usually answer in terms of the source they consulted most recently (“it was because of television that I decided for whom to vote”), and the pervasiveness of the media usually puts the diagnosis squarely on the media. In most countries where television is widely available, a majority of the population still uses it as the main source of news and information. The second reason to doubt a respondent’s self-diagnosis should be especially vivid to those fortunate enough to live in Vienna, which is, among its many other features, the birthplace of psychoanalysis. Survey respondents cannot plumb their own unconscious to separate and measure the influence of, for example, patterns of upbringing, the authoritarianism or permissiveness of parents, information and emotion stored in long-term memory, job-related issues, physical problems, or what happened “today.” This is but a short catalogue of the many sources of influence of which most of us are unaware. Whether one can detach a particular piece from this complex space and say that it alone, the media, is causal is being studied, but we are far from a significant answer. The respondent most certainly cannot do so and should not be asked to venture a guess.

* * * * *

To sum up: the differences in political and cultural values, the different forms of discourse, and the inability or unwillingness to take these accepted practices apart and subject them to serious analysis do have perverse effects on democratization policy, to be sure, out of which decisions about eligibility for assistance and other linkages emerge.
But at least equally, if not more seriously, results of dubious value have become essential variables in much scholarly research on globalization.

ENDNOTES

1. Joseph E. Ryan, “Survey Methodology,” Freedom Review, Jan/Feb 1995, vol. 26, issue 1.
2. Raymond Duncan Gastil, “The Comparative Survey of Freedom: Experiences and Suggestions,” On Measuring Democracy: Its Consequences and Concomitants, ed. Alex Inkeles, New Brunswick, New Jersey, Transaction Publishers, 1991, p. 22.
3. Gastil, p. 22.
4. Gastil, p. 31.
5. Michael Coppedge and Wolfgang Reinicke, “Measuring Polyarchy,” On Measuring Democracy, ed. Alex Inkeles, New Brunswick, NJ, 1991, p. 52.
6. “World Publics Welcome Global Trade - But Not Immigration,” The Pew Global Attitudes Project, October 4, 2007, pp. 133-134.