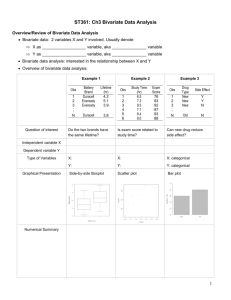

The Boxplot on Minitab

The Boxplot

Example: Consider psychological profile scores for 30 convicted felons. For this sample we know that m = 196.5, Q1 = 191 and Q3 = 204, so IQR = Q3 - Q1 = 13.

Inner fences (1.5 × IQR beyond the quartiles):

    LIF = Q1 - 1.5(IQR) = 191 - 19.5 = 171.5
    HIF = Q3 + 1.5(IQR) = 204 + 19.5 = 223.5

Outer fences (3 × IQR beyond the quartiles):

    LOF = Q1 - 3(IQR) = 191 - 39 = 152
    HOF = Q3 + 3(IQR) = 204 + 39 = 243

Sketch: [boxplot sketch]

The Boxplot on Minitab

    MTB > gstd
    MTB > boxp c1      [draws a boxplot for the sample in c1]

Note:

1. On the boxplot scale, one space (sp), that is, one "-" character, is given by sp = (the distance between successive tick marks [+]) / 10.
2. Values read on the boxplot scale are approximate.

Example: Consider the psychological profile score data.

    MTB > boxp c1

[Character boxplot of C1: the box is drawn as I + I with dashed whiskers, outliers are marked with * and O, and the axis has tick marks at 140, 160, 180, 200, 220 and 240.]

Question: From the boxplot scale, determine the (approximate) values of the sample median, the first and third quartiles, and the possible and probable outliers.

    sp = ____    m = ____    Q1 = ____    Q3 = ____
    Possible outliers: ____
    Probable outliers: ____

Identifying Outliers in a Stem-and-Leaf Plot

When we use the STEM command, Minitab gives us an option to identify the same outliers that the boxplot identifies. Using the STEM command with the subcommand TRIM, one can form a stem-and-leaf plot with the outliers trimmed away and shown on special lines labeled LO and HI. In this way one can examine the shape of the sample with the outliers removed.

Example: Scientists use pH (power of hydrogen) as a numerical measure of acidity, with pH = 7.00 being neutral. A scientist suspects that a pH meter is defective. He takes a random sample of 45 measurements with this meter on a neutral substance. The data have been entered into C1.

    8.32   6.13   5.89   6.45   7.14   9.00   7.21   6.02   5.96
    6.19  10.28   7.05   6.56   5.90   5.99   6.99   6.98   8.26
    4.61   6.86   9.05   5.88   5.79   6.58   3.46   6.21   6.07
    5.49   6.09   5.82   5.38   6.60   6.49   6.62   7.22   3.76
    6.76   8.35   7.22   8.85   5.95   7.24   3.26   6.72  11.17

    MTB > stem c1;
    SUBC> trim.
    Stem-and-leaf of PHlevel   N = 45
    Leaf Unit = 0.10

      LO  32, 34, 37             Low outliers (values below the LIF)

       4    4  6
       6    5  34
      14    5  78889999
      22    6  00011244
      (9)   6  555677899
      14    7  012222
       8    7
       8    8  233
       5    8  8
       4    9  00

      HI  102, 111               High outliers (values above the HIF)

The outliers are the values beyond the inner fences. We can show this using the approximate sample values read from the plot.

To show that the "HI" values are outliers, we need to calculate the high inner fence:

    AP(Q1) = (25/100)(45) = 11.25, so Pos(Q1) = 12 and Q1 = 5.9
    AP(Q3) = (75/100)(45) = 33.75, so Pos(Q3) = 34 and Q3 = 7.2
    IQR = Q3 - Q1 = 7.2 - 5.9 = 1.3
    HIF = Q3 + 1.5(IQR) = 7.2 + 1.95 = 9.15

The values 10.2 and 11.1 are both bigger than 9.15 and are plotted as "HI" outliers in the stem-and-leaf plot.

Similarly, LIF = Q1 - 1.5(IQR) = 5.9 - 1.95 = 3.95. The values 3.2, 3.4 and 3.7 are all smaller than the LIF and are plotted as "LO" outliers in the stem-and-leaf plot.

Bivariate Data

All the data sets we have encountered up to now deal with measurements of one variable on each of several "individuals" (e.g. incomes of individuals, test scores). Data of this type are called univariate data.

In many situations it is of interest to take measurements of two variables on each of several individuals. For example, we may be interested in both the income of an individual and the number of hours of television that individual watches per week. In such a case each observation consists of a pair of measurements: (income, number of hours watched). Data of this type are called bivariate data and are often denoted in the form (x, y), the "x-observation" and the "y-observation". A bivariate sample of n observations is then written as

$$(x_1, y_1),\ (x_2, y_2),\ \dots,\ (x_n, y_n)$$

The reason we take observations on two variables is that we are interested in exploring the relationship between the variables, for example, "Do poorer people tend to watch more TV?" The simplest possible relationship between two variables x and y is that of a straight line.
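The inner-fence check for the pH sample in the stem-and-leaf example can be reproduced with a short script. This is only a sketch in Python (not Minitab). The helper name `position` is made up for illustration, and it covers only the case shown in the notes, where a fractional AP is rounded up. How the course treats a whole-number AP is not stated, so that case is not handled.

```python
import math

# The 45 pH readings from the example (column C1).
ph = [
    8.32, 6.13, 5.89, 6.45, 7.14, 9.00, 7.21, 6.02, 5.96,
    6.19, 10.28, 7.05, 6.56, 5.90, 5.99, 6.99, 6.98, 8.26,
    4.61, 6.86, 9.05, 5.88, 5.79, 6.58, 3.46, 6.21, 6.07,
    5.49, 6.09, 5.82, 5.38, 6.60, 6.49, 6.62, 7.22, 3.76,
    6.76, 8.35, 7.22, 8.85, 5.95, 7.24, 3.26, 6.72, 11.17,
]

# Truncate each reading to the leaf unit (0.10), as the plot does, and keep
# the result in integer tenths (5.95 -> 59) to avoid floating-point surprises.
tenths = sorted(int(round(x * 100)) // 10 for x in ph)
n = len(tenths)


def position(percent, n):
    """AP = (percent/100) * n, rounded up to the next whole position.

    Assumption: only the fractional-AP case from the notes is covered.
    """
    return math.ceil(percent / 100 * n)


q1 = tenths[position(25, n) - 1]   # 12th smallest value, in tenths
q3 = tenths[position(75, n) - 1]   # 34th smallest value, in tenths
iqr = q3 - q1

lif = (q1 - 1.5 * iqr) / 10        # low inner fence
hif = (q3 + 1.5 * iqr) / 10        # high inner fence

low = [t / 10 for t in tenths if t < q1 - 1.5 * iqr]
high = [t / 10 for t in tenths if t > q3 + 1.5 * iqr]

print(f"Q1 = {q1 / 10}, Q3 = {q3 / 10}, LIF = {lif}, HIF = {hif}")
print("LO outliers:", low)
print("HI outliers:", high)
```

The high inner fence should come out as the 9.15 quoted in the notes, and the two lists should match the LO and HI lines of the trimmed stem-and-leaf plot.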
Measuring Association Between Variables

Here are some typical examples of association:

[Scatter plot: positive linear relationship between x and y]
[Scatter plot: curvilinear relationship between x and y]
[Scatter plot: negative linear relationship between x and y]

There are many measures of association in statistics, and the term CORRELATION may be applied to such measures. We will study a specific type of correlation called PEARSON'S SAMPLE CORRELATION COEFFICIENT, which measures the strength of the linear relationship between two numerical variables.

Pearson's Sample Correlation Coefficient r

Consider the following bivariate data on two class quizzes:

    x   1  1  2  4  5  5  2  4
    y   5  4  4  2  1  2  2  4

$$s_{xy} = \frac{\sum (x_i - \bar{x})(y_i - \bar{y})}{n - 1} = \frac{-12}{7} = -1.714$$

$s_{xy}$ is called the sample covariance and is a measure of the linear relationship between x and y. To make this value independent of the units of measurement, we divide by $s_x = 1.690$ and $s_y = 1.414$.

The scatter plot of this sample data is as follows:

[Scatter plot of the quiz data]

Pearson's sample correlation coefficient r is

$$r = \frac{s_{xy}}{s_x s_y} = \frac{-1.714}{(1.690)(1.414)} = -0.717$$

Note: r is always between -1 and +1. The nearer r is to -1, the closer the plotted points are to a straight line with negative slope. The nearer r is to +1, the closer the plotted points are to a straight line with positive slope. (If r is equal to -1 or +1, then all the points are on a straight line.)

Note: Pearson's sample correlation coefficient r is a measure of the linear relationship between two variables X and Y. Other relationships may exist which are not detected by r.

Example: Consider the sample of pairs (-1, 1), (0, 0), (1, 1). We use Minitab to calculate Pearson's sample correlation coefficient r.

    C1:  -1   0   1
    C2:   1   0   1

    MTB > corr c1 c2

    Correlations (Pearson)
    Correlation of X and Y = 0.000

The correlation r is 0. Thus there is no linear component to the relationship between X and Y. Note, however, that for each pair (x, y) we have $y = x^2$, so there is another type of relationship between X and Y (a quadratic relationship).
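Both correlation results above can be checked by coding the definition directly. The sketch below uses plain Python rather than Minitab. The function name `pearson_r` is chosen here for illustration, and it divides by n - 1 to agree with the values of $s_x$ and $s_y$ quoted for the quiz data.

```python
import math


def pearson_r(xs, ys):
    """Pearson's sample correlation: r = s_xy / (s_x * s_y)."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    # Sample covariance and sample standard deviations (divisor n - 1).
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / (n - 1)
    s_x = math.sqrt(sum((x - x_bar) ** 2 for x in xs) / (n - 1))
    s_y = math.sqrt(sum((y - y_bar) ** 2 for y in ys) / (n - 1))
    return s_xy / (s_x * s_y)


# Scores on the two class quizzes.
quiz_x = [1, 1, 2, 4, 5, 5, 2, 4]
quiz_y = [5, 4, 4, 2, 1, 2, 2, 4]
r_quiz = pearson_r(quiz_x, quiz_y)

# The three points lying on y = x^2.
r_quad = pearson_r([-1, 0, 1], [1, 0, 1])

print(f"quiz data: r = {r_quiz:.3f}")
print(f"quadratic: r = {r_quad:.3f}")
```

The quiz data should give r close to -0.717. The three-point sample should give r = 0 even though y is completely determined by x, which is the point of the example.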
Correlation and Causality

A value of r close to 1 (or -1) does not by itself mean that x "causes" y. A strong (negative or positive) correlation does not necessarily imply a cause-and-effect relationship between X and Y. Often there is a third, hidden variable which creates an apparent relationship between X and Y. Consider the example below.

Example: A sample of students from a grade school were given a vocabulary test. A high positive correlation was found between X = "student's height" and Y = "student's score on the test". Should one infer that growing taller will increase one's vocabulary? Explain. Also indicate a third variable which could offer a plausible explanation for this apparent relationship.

Note: A cause-and-effect relationship is best established by an experiment in which other variables that influence X and Y are controlled.

Question: In the example above, how might a more controlled experiment be performed?

Fitting a Straight Line: The Least Squares (Regression) Line

A set of points (bivariate observations) may or may not have a linear relationship. If we want to draw a straight line which "fits" these points, which line fits best? Our choice depends on what we use to define a "good" fit.

Given a sample of bivariate data $(x_1, y_1), (x_2, y_2), \dots, (x_n, y_n)$, the least squares line L is the line fitted to the points in such a way that

$$d_1^2 + d_2^2 + \dots + d_n^2$$

is as small as possible, where $d_i$ is the vertical distance from the point $(x_i, y_i)$ to the line.

The equation of the least squares line L is given by

$$y = b_0 + b_1 x, \qquad \text{where } b_1 = r\,\frac{s_y}{s_x} \text{ and } b_0 = \bar{y} - b_1 \bar{x}$$

Note:

1. The sign of the slope ($b_1$) is the same as the sign of the sample correlation coefficient.
2. The least squares line always passes through the point $(\bar{x}, \bar{y})$.

Example: Consider the previous example of scores on two class quizzes. The sample data are (1,5), (1,4), (2,4), (4,2), (5,1), (5,2), (2,2), (4,4).
In our calculation of r = -0.717, we also found that $\bar{x} = 3$, $\bar{y} = 3$, $s_x = 1.690$ and $s_y = 1.414$. Thus,

$$b_1 = r\,\frac{s_y}{s_x} = (-0.717)\,\frac{1.414}{1.690} = -0.600$$

$$b_0 = \bar{y} - b_1 \bar{x} = 3 - (-0.600)(3) = 4.800$$

$$y = b_0 + b_1 x = 4.8 - 0.6x$$

Sketch: [sketch of the points and the fitted line]

Note:

1. The least squares line only fits the points used in its calculation. Do not be tempted to extend it below the smallest x-value or above the largest x-value. New observations with x-values outside this range may not fall anywhere close to the fitted line. So if a situation requires you to extend the line, do so with caution.
2. In the language of regression, $r^2$ is known as the COEFFICIENT OF DETERMINATION. It is used in regression to measure how well the least squares line fits the points. It satisfies the inequality $0 \le r^2 \le 1$.

Pearson's Sample Correlation Coefficient and the Least Squares Line on Minitab

Example: The data below give the cost estimates and actual costs (in millions of dollars) of a random sample of 10 construction projects at a large industrial facility.

    Estimate (X)   44.277   2.737   7.004  22.444  18.843  46.514   3.165  21.327  42.337   7.737
    Actual (Y)     51.174   9.683  14.827  22.159  26.537  50.281  15.550  23.896  50.144  13.567

To find Pearson's sample correlation coefficient r for this data and the equation of the least squares line using Minitab, proceed as follows. Name C1 as EST(X) and C2 as ACT(Y), then enter the x-data into C1 and the corresponding y-data into C2. Then click Stat > Regression > Fitted Line Plot. Type C2 in the Response (Y) box and C1 in the Predictor (X) box. Now click OK.

    Regression Plot
    ACT(Y) = 7.49228 + 0.937658 EST(X)
    S = 3.49312    R-Sq = 96.0 %    R-Sq(adj) = 95.5 %

[Fitted line plot of ACT(Y) against EST(X)]

From the output we find that the coefficient of determination is $r^2 = 0.960$ and the equation of the least squares line is y = 7.49228 + 0.937658x.

Questions:

(1) What is the value of the sample correlation coefficient? Interpret it.
(2) Predict the actual cost of a project whose estimated cost is 15 million dollars.
(3) Is it safe to use this data to predict the actual cost of a project estimated to cost 80 million dollars? Explain.
(4) Estimate the average change in the actual cost of a project when the estimated cost increases by 10 million.
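As a cross-check on the Minitab output, the least squares line can also be computed from the slope formula $b_1 = r\,s_y/s_x$ together with the standard intercept formula $b_0 = \bar{y} - b_1\bar{x}$. The following is a sketch in plain Python, not the Minitab procedure. The names `least_squares` and `predict` are hypothetical helpers, and the x-value 30 in the last lines is an arbitrary illustration, not part of the exercise.

```python
import math


def least_squares(xs, ys):
    """Return (b0, b1, r), using b1 = r * s_y / s_x and b0 = y_bar - b1 * x_bar."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    s_x = math.sqrt(sum((x - x_bar) ** 2 for x in xs) / (n - 1))
    s_y = math.sqrt(sum((y - y_bar) ** 2 for y in ys) / (n - 1))
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / (n - 1)
    r = s_xy / (s_x * s_y)
    b1 = r * s_y / s_x
    b0 = y_bar - b1 * x_bar
    return b0, b1, r


# Quiz data from the earlier example.
quiz_b0, quiz_b1, quiz_r = least_squares(
    [1, 1, 2, 4, 5, 5, 2, 4], [5, 4, 4, 2, 1, 2, 2, 4]
)
print(f"quiz line: y = {quiz_b0:.3f} + ({quiz_b1:.3f})x")

# Construction cost data, in millions of dollars.
est = [44.277, 2.737, 7.004, 22.444, 18.843, 46.514, 3.165, 21.327, 42.337, 7.737]
act = [51.174, 9.683, 14.827, 22.159, 26.537, 50.281, 15.550, 23.896, 50.144, 13.567]
b0, b1, r = least_squares(est, act)
print(f"ACT(Y) = {b0:.3f} + {b1:.4f} EST(X),  R-Sq = {100 * r * r:.1f} %")


def predict(x):
    """Fitted value at x, plus a flag telling whether x lies inside the observed range."""
    inside = min(est) <= x <= max(est)
    return b0 + b1 * x, inside


value, inside = predict(30)   # arbitrary illustrative x-value
print(f"fitted value at x = 30: {value:.2f} (inside observed range: {inside})")
```

The fitted coefficients should agree with the Minitab output up to rounding. The `inside` flag is one simple way to act on the caution about extending the line beyond the smallest and largest x-values.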