Computer Notes - 2009

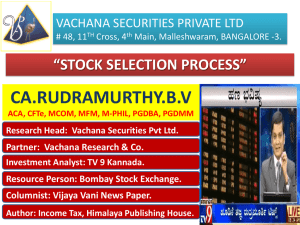

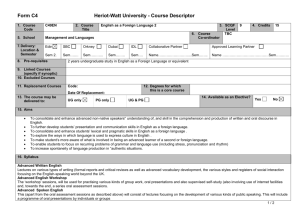

advertisement