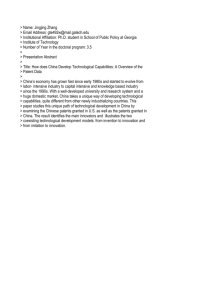

In recent years a fundamental solution to the 'Schumpeterian
