Paper

advertisement

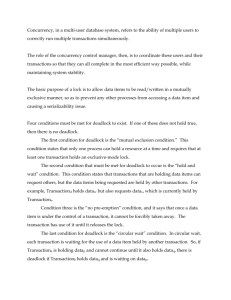

School of Science and Engineering Master of Science in Computer Networks Advanced Database Systems and Data Warehouses CSC 5301 SPRING 2003 By : Benmammass ElMehdi Supervised by : Doctor Hachim Haddouti INTEGRITY Achieving integrity in a database system : The performance of a database system depends on many features, among them accuracy, correctness and validity of the data provided by the database. These features introduce the integrity of a database. Integrity constraints guard against accidental damage to the database, by ensuring that authorized changes to the database do not result in a loss of data consistency. We can guarantee the integrity of a database in different ways. We will see now that a DBMS has an integrity subsystem component and how this component can achieve data integrity. Integrity subsystem : The role of an integrity subsystem is : Monitoring transactions and detecting integrity violation. Take appropriate actions given a violation. The integrity subsystem is provided with a set of rules that define the following : the errors to check for; when to check for these errors; what to do if an error occurs. This is an example of an integrity rule called RULE#1 that forces the value of the sold quantity to be greater than 0. RULE#1 : AFTER UPDATING sales.quantity : sales.quantity > 0 ELSE DO ; set return code to “RULE#1 violated” ; REJECT ; END ; In general, an integrity rule is defined with the following structure : Trigger condition (after updating, inserting…) Constraint (sales.quantity >0) Violation response (else do…) The integrity rules are compiled and stored in the system dictionary by the integrity rule compiler (which is a component of the integrity subsystem). At each time we add a new rule, the integrity subsystem checks if this new rule violates any of the old rules in order to accept it, or reject it. Using the integrity subsystem, all the integrity rules and stored in the same location (system dictionary). We can query them using the system query language, and their role is more efficient. The integrity rules can be divided into three main parts : The domain integrity rules; The relation integrity rules; The fansets integrity constraints. Domain integrity rules : Integrity constraints guard against accidental damage to the database, by ensuring that authorized changes to the database do not result in a loss of data consistency. The domain constraint is the most elementary form of integrity constraint. It specifies the set of possible values that may be associated with an attribute. Such constraint may also prohibit the use of null values for particular attributes. The domain integrity rules test values inserted in the database, and test queries to ensure that the comparisons make sense. New domains can be created from existing data types or “base domains”. This is an example of some domain integrity rules definitions. DCL S# PRIMARY DOMAIN CHARACTER (5) SUBSTR (S#,1,1) = ‘S’ AND IS_NUMERIC (SUBSTR (S#,2,4)) ELSE DO; Set return code to “S# domain rule violated” ; REJECT ; END ; DCL SNAME DOMAIN CHARACRTER (20) VARYING ; DCL STATUS DOMAIN FIXED (3,0) STATUS > 0 ; DCL LOCATION DOMAIN CHARACTER (15) VARYING LOACTION IN (‘LONDON’,’PARIS’,’ROME’); DCL P# PRIMARY DOMAIN CHARACTER (6) SUBSTR (P#,1,1) = ‘P’ AND IS_NUMERIC (SUBSTR (P#,2,5)) DCL PNAME DOMAIN CHARACRTER (20) VARYING ; DCL COLOR DOMAIN CHARACRTER (6) COLOR IN (‘RED’,’GREEN’,’BLUE’,’YELLOW’); DCL WEIGHT DOMAIN FIXED (5,1) WEIGHT > 0 AND WEIGHT < 2000 ; DCL QUANTITY DOMAIN FIXED (5) QUANTITY >= 0 ; Let’s see definition by definition the meaning of the syntax : S# is a string of 5 characters. Te first character is an S and the last 4 characters are numeric. SNAME is a string of 20 characters maximum. STATUS is a integer of three digits. It can be negative. LOCATION is a string. It can take the values defined (Paris, London…) P# is a string of 6 characters. Te first character is a P and the last 5 characters are numeric. PNAME is a string of 20 characters maximum. COLOR is a string. It can take the values defined (green, blue, yellow…) WEIGHT is a number of 5 digits that takes one decimal. Its value is always between 0 and 2000. QUANTITY is an integer of 5 digits. It is always greater or equal to zero. We can define other domain integrity rules like : Composite domains : a domain DATE which is composed of three domains DAY, MONTH and YEAR User-Written Procedures. Interdomain Procedures : some conversion rules (procedures) may help for example to compare two values from two distinct domains (distance expressed in kms and miles). Relation integrity rules : The RULE#1 defined in the introduction of this paper is an example of Relation Integrity Rules. Relation integrity rules promote integrity within a relation, allowing the comparison of multiple attributes in a given table. For example: 044 and 045 area codes in phone number imply that the city of residence Marrakech. Can potentially act as a substitute for normalization in situations where creating 3NF tables seems overkill (e.g., ZIPCODE → CITY) This is the set of different constraints or rules that we can define as Relation integrity rules : Immediate record state constraints After updating or inserting sales.quantity, verify : sales.quantity > 0 Immediate record transition constraints Before updating an attribute, restrict the new value to be greater than the old one : New_date > sales.date Immediate set state constraints This class includes two important cases that are : defining a key uniqueness and enforcing non-null values of the key (Entity Integrity Rule), imposing referential integrity (Foreign Key Integrity Rule) , and other functional dependencies. Immediate set transition constraints This constraint is the same as the immediate set state constraint, but there is a difference between them: the constraint in the first case is applied after updating, inserting or deleting while in the second case, it is applied before. Deferred record state constraints This rule is useless for a record state checking. It doubles the processing time of transactions, because the constraint must be applied twice. Deferred record transition constraints This type of constraints can require to access the log file to get the old value of the updated record. This is, the implementation is expensive and the processing is much higher. Deferred set state constraints The deferred set state constraint is applied at the end of the transaction (WHEN COMMITING). In some cases, we need this kind of constraints because sometimes the set of updates in a transaction violates temporarily the rule. Deferred set transition constraints This constraints are difficult to implement and expensive because they need to access the entire set of a transaction before and after updating it. Fansets integrity constraints : The fanset constraints are used in network databases. They prevent integrity violations by providing referential integrity. Triggered Procedures : We have seen that integrity rules are in fact a special case of triggered procedures. We can define a set of actions to be executed When insert, update, delete, Before insert, update, delete, After insert, update, delete. Triggered procedures are used to achieve the following tasks : Prevent the user that deleting a client will delete all its sales. Prevent that a record cannot be changed in this table, but must be changed in another table (when a set of records is duplicated in two tables) . Compute fields instead of having a field in the database that does that operation. Access security. Performance measurement of the database. Program debugging. Controlling stored record (compressing and decompressing data when storing and retrieving data). Exception reporting (expiry date for medicaments) CONCURRENCY Before going deeply in the explanation of concurrency control solutions, let ‘s review some important definitions : Contention occurs when two or more users try to access simultaneously the same record. Concurrency occurs when multiple users have the ability to access the same resource and each user has access to the resource in isolation. Concurrency is high when there is no apparent wait time for a user to get its request. Concurrency is low when wait times are evident Consistency occurs when a user access a shared resource and the resource exhibits the same characteristics and satisfies all the constraints among all operations. The problem of concurrency is major in a database system because when several transactions execute concurrently in the database, the consistency of data may no longer be preserved. It is necessary for the system to control the interaction among the concurrent transactions, and this control is achieved through one of a variety of mechanisms call concurrency-control schemes. Concurrency control achieves preventing loss of data integrity caused by interference between multiple users in a multi-user environment. When several transactions are processed concurrently, the result must be the same as if the transactions are processed in a serial order. There are several mechanisms which are used to accomplish concurrency control and that will be presented later, but now let’s take a look at this example : We have two bank accounts, and their balances are A and B. Let’s assume A = 100 DH and B = 100 DH. We have also two transactions, T1 transfers 100DH from B to A, and T2 adds 5% interest to both accounts : T1 : start, A=A+100, B=B-100, COMMIT T2 : start, A=A*1.05, B=B*1.05, COMMIT We can have the following sequences of execution : A=A+100, B=B-100, COMMIT A=A*1.05, B=B*1.05, COMMIT or Time A=A+100 A=A*1.05, B=B*1.05, COMMIT B=B-100, COMMIT The two sequences give different results (A=210 and B=0 for the first case, A=210 and B=5 for the second case). Our concurrency control mechanisms must ensure that the second sequence does never happen. In this part of the report, I will describe the most common concurrency control mechanisms and give examples to understand how they implement concurrency. 1. Lock-Based Protocols : An important component in the DBMS is the Lock Manager. This component can help us to accomplish two types of lock protocols : exclusive (X) mode: data can be both read as well as written. X-lock is requested using lock-X instruction. shared (S) mode: data can only be read. S-lock is requested using lock-S instruction. Transaction A Shared Lock Exclusive Lock Exclusive Lock Accounts Table Row 1 Row 2 Row 3 Row 4 Row 5 Row 6 Row 7 Row 8 Transaction B Shared Lock Exclusive Lock Exclusive Lock In this figure, transactions A and B can both read simultaneously from the table (shared lock authorizes the read-only , but can only update specific records to each transaction. The table below shows if a transaction can require a lock for a given object (row or table) knowing that it is already locked by another transaction. We see that if a row (or table) is already exclusively locked by a transaction, the system cannot allow a new shared/exclusive lock for a new transaction. Shared lock Exclusive lock Shared lock True False Exclusive lock False False When a transaction asks for a row lock, it waits until the concerned row is not locked by any of the other transactions. A transaction can proceed only if its locks requests are granted. PX Protocol : In this protocol, if a transaction needs to update a record, it asks for an exclusive lock. The following example shows how the concurrency control manager handles locks. We define two transactions that ask for either shared lock (lock-S) or exclusive lock (lock-X): Transaction 1: Transaction 2 : lock-X(B) lock-S(A) read(B) read(A) B = B -50; unlock(A) write(B); lock-S(B) unlock(B); read(B); lock-X(A); unlock(B); read(A); display(A+B); A = A + 50; write(A); unlock(A); The sequence of execution of these transactions is as follows : Transaction 1 Transaction 2 Concurrency control manager lock-X(B) grant-X(B, T1) Read(B) B = B -50; write(B); unlock(B); lock-S(A) grant-S(A, T2) read(A) unlock(A) lock-S(B) grant-S(B, T2) read(B); unlock(B); display(A+B); lock-X(A); grant-X(A, T2) read(A); A = A + 50; write(A); unlock(A); Here comes the notion of serializability. The goal is to find an interleaved execution sequence of a set of transactions that will obtain the same results as if the transactions are processed serially. For example, if we have two transactions, we have to look at the lock requests of each transaction and to find an order to execute them without any interference between then. The resulting sequence, if there is one, implies that the two transactions are serializable. Using the lock-based mechanism, deadlock and starvation can occur. The table below shows how deadlock occurs : Transaction A Shared Lock Already X-Locked Asks for an X-Lock Accounts Table Row 1 Row 2 Row 3 Row 4 Row 5 Row 6 Row 7 Row 8 Transaction B Shared Lock Asks for an X-Lock Already X-Locked Neither transaction A nor transaction B can proceed. We can avoid this deadlock situation by releasing the locks earlier. I will discuss deadlock avoidance more in details later on in this report. PS Protocol : In this protocol, any transaction that updates a record must firstly ask for a shared lock of that record. And during the transaction, before the update command comes a request of changing the lock-S to lock-X. Some problems due o deadlocks persist in this protocol. PU Protocol : This protocol uses a third lock state : the update lock. Any transaction that intends to update a record is required to ask for lock-U of that record. A U-lock is compatible with an Slock but not with another U-lock. We use this compatibility matrix. X S U X False False False S False True True U False True False This protocol is more efficient than the previous ones, because limits considerably deadlock. The Two-Phase Locking Protocol This is a protocol which ensures conflict-serializable schedules. As it is named, TPLP includes two phases : Growing phase : transaction may obtain locks and may not release locks, Shrinking phase : transaction may release locks and may not obtain locks. The protocol assures serializability. It can be proved that the transactions can be serialized in the order of their lock points (i.e. the point where a transaction acquired its final lock). Transaction 1 Lock-X(A) Read(A) Lock-S(B) Read(B) Write(A) Unlock(A) Transaction 2 Transaction 3 Lock-X(A) Read(A) Write(A) Unlock(A) Lock-S(A) Read(A) Unlock(A) This example shows the serializability using 2PL. There are many protocol that are derive from 2PL : Strict two-phase locking. Here a transaction must hold all its exclusive locks till it commits/aborts. Rigorous two-phase locking is even stricter: here all locks (shared and exclusive) are held till commit/abort. In this protocol transactions can be serialized in the order in which they commit. Another alternative for 2PL is the graph-based protocol. In this protocol, we fix an order of accessing data. If a transaction has to update d2 and read d1, it has to access these data in a predefined order. The tree-protocol is a kind of graph-based protocols. Deadlock Avoidance : A system is deadlocked if there is a set of transactions such that every transaction in the set is waiting for another transaction in the set. Deadlock prevention protocols ensure that the system will never enter into a deadlock state. It can be achieved using different strategies : Require that each transaction locks all its needed items before it begins execution (predeclaration). Impose partial ordering of all data items and require that a transaction can lock needed items only in the order specified by the partial order (graph-based protocol). The following schemes use transaction timestamps, that I will present in the next part, for achieving only deadlock prevention : wait-die scheme : older transaction may wait for younger one to release data item. Younger transactions never wait for older ones; they are rolled back instead. And a transaction may die several times before acquiring needed data item wound-wait : older transaction forces rollback of younger transaction instead of waiting for it. Younger transactions may wait for older ones. There are fewer rollbacks than wait-die scheme. Both in wait-die and in wound-wait schemes, a rolled back transaction is restarted with its original timestamp. Older transactions have precedence over newer ones in these schemes, and starvation is hence avoided. Timeout-Based Schemes : a transaction waits for a lock only for a specified amount of time. After that, the wait times out and the transaction is rolled back. In this case, deadlocks are not possible. This scheme is simple to implement; but starvation is possible. Also difficult to determine good value of the timeout interval. Deadlocks can be described as a wait-for graph, which consists of a pair G = (V,E), V is a set of vertices (all the transactions in the system) E is a set of edges; each element is an ordered pair Ti Tj. If Ti Tj is in E, then there is a directed edge from Ti to Tj, implying that Ti is waiting for Tj to release a data item. When Ti requests a data item currently being held by Tj, then the edge from Ti to Tj is inserted in the wait-for graph. This edge is removed only when Tj is no longer holding a data item needed by Ti. The system is in a deadlock state if and only if the wait-for graph has a cycle. The system must invoke a deadlock-detection algorithm periodically to look for cycles. What to do when a deadlock is detected ? Some transaction will have to roll back to break deadlock. Select that transaction as victim that will incur minimum cost. We have to determine how far to roll back the transaction. We can either carry out : Total rollback: Abort the transaction and then restart it. Partial rollback: it is more effective to roll back transaction only as far as necessary to break deadlock. Locking Granularity: Another possibility of lock-based protocols is that allow data items to be of various sizes and define a hierarchy of data granularities, where the small granularities are nested within larger ones. Then, we can represent them graphically as a tree. When a transaction locks a node in the tree explicitly, it implicitly locks all the node's children in the same mode. Granularity of locking (level in tree where locking is done): - fine granularity (lower in tree): high concurrency, high locking overhead - coarse granularity (higher in tree): low locking overhead, low concurrency The highest level in the example hierarchy is the entire database. The levels below are of type area, file and finally record. In addition to S and X lock modes, there are three additional lock modes with multiple granularity: intention-shared (IS): indicates explicit locking at a lower level of the tree but only with shared locks. intention-exclusive (IX): indicates explicit locking at a lower level with exclusive or shared locks shared and intention-exclusive (SIX): the subtree rooted by that node is locked explicitly in shared mode and explicit locking is being done at a lower level with exclusive-mode locks. Here comes the notion of intent locking : Intention locks allow a higher level node to be locked in S or X mode without having to check all descendent nodes. This is the compatibility matrix for an intent locking protocol with 5 states. IS ( IX ( S ( S IX X S IX IS ( ( S IX ( X ( ( ( ( ( ( ( ( ( ( ( ( ( ( ( ( ( ( Timestamp Technique In the timestamp protocol, each transaction is issued a timestamp when it enters the system. If an old transaction Ti has time-stamp TS(Ti), a new transaction Tj is assigned time-stamp TS(Tj) such that TS(Ti) <TS(Tj). The protocol manages concurrent execution such that the time-stamps determine the serializability order. In order to assure this behavior, the protocol maintains for each data Q two timestamp values: W-timestamp(Q) is the largest time-stamp of any transaction that executed write(Q) successfully. R-timestamp(Q) is the largest time-stamp of any transaction that executed read(Q) successfully. The timestamp ordering protocol ensures that any conflicting read and write operations are executed in timestamp order. The timestamp-ordering protocol guarantees serializability. Thus, there will be no cycles. Timestamp protocol ensures also freedom from deadlock as no transaction ever waits. But the schedule may not be cascade-free, and may not even be recoverable. Optimistic Concurrency Control : This kind of concurrency control is a validation-based protocol. Execution of or example transaction Ti is done in three phases. 1. Read and execution phase: Transaction Ti writes only to temporary local variables 2. Validation phase: Transaction Ti performs a ``validation test'' to determine if local variables can be written without violating serializability. 3. Write phase: If Ti is validated, the updates are applied to the database; otherwise, Ti is rolled back. The three phases of concurrently executing transactions can be interleaved, but each transaction must go through the three phases in that order. Optimistic means that each transaction executes fully hoping that all will go well during the validation. Each transaction Ti has 3 timestamps Start(Ti) : the time when Ti started its execution Validation(Ti): the time when Ti entered its validation phase Finish(Ti) : the time when Ti finished its write phase Serializability order is determined by timestamp given at validation time, to increase concurrency. Thus TS(Ti) is given the value of Validation(Ti). This protocol is useful and gives greater degree of concurrency if probability of conflicts is low. That is because the serializability order is not pre-decided and relatively less transactions will have to be rolled back.