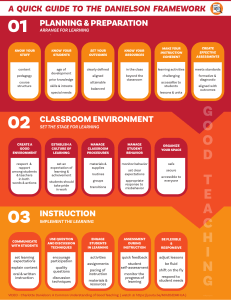



Pilot Model Comparisons (+ / - / ?)

North Mason (Danielson Framework) / North Thurston (Danielson Framework)
- Clearly written
- Multiple sources of information for evidence
- Stair step for implementation
- PD aligned to stair-step
- Possible differentiation for specialists
- Very thorough; elements are detailed out
- Exactly what came out of the Danielson book
- Developed some forms
- Clearly defined
- Self-reflection piece for individual/team
- Liked the complete cycle of the evaluation process
- A little unwieldy – 22 pieces
- Creating a portfolio
- Some evidence is only observable
- In some cases, there is a reliance on students
- Summative evaluation: “it is my judgment” (subjective)
- Line blurred between “volunteer” and “professional”
- Discipline and evaluation are two different issues
- Need to develop a system for reviewing evidence
- Process to refute? [Need PD to develop skills for crucial conversations]
- How to roll out the new system with confidence – ways to “take out the scary” [PD]
- Tool for district office administrators (directors, TOSAs)?
- Directors – could select appropriate criteria and do a

Snohomish (Danielson Framework)
- Liked how the pages were formatted: started with exemplary, then moved right to unsatisfactory
- Aligned the Danielson domains to the state criteria
- No long-term PD
- District resources may be divided – evaluation PD vs. content PD
- No forms for specialists
- Level 1 indicator language is “weird” or negative
- Wondered if “not observed” should be on there
- Not observed vs. not applicable
- Too simplified
- Will the state exempt those who have no specific form?
- Remember – NA or Comment
- Should reverse the order to 4-3-2-1
- Some items are difficult to measure
- School success is a key indicator in principal evaluation

ESD 101 Consortium (Danielson Framework)
- District has worked on a 4-tier evaluation system for 8 years
- Emphasized depth vs. breadth
- Seems more doable; more condensed
- More specific about what part of teacher evaluation is classroom observation (what’s seen)
- More opportunity to use with certificated specialists (librarians, counselors)
- Not punitive or tied to high-stakes testing
- Seems tailored to their size (primarily small districts)
- Felt like it was more about the “feel of it” vs. hard evidence
- Consistent and laid-out process; teacher protocol order with tools to support
- Manageable
- Emphasis on self-assessment
- Evidence is applicable to specialists
- Online components support the process and add “meat” to it, e.g., self-assessment, conversations, holistic rating
- Principal (criterion 6): who is going to determine whether it is proficient or emerging? They both say the same thing
- Principal: missing language or gaps in areas of the rubrics
- Wonder about the difference in language between “emerging” and “basic”
- Questioning how “equipment management” pertains to teacher evaluation – open to interpretation
- No summative report yet
- State hot button – “value added”
- Concern about indicator 3b, which says “all students”
- Important components are left out of the formal observation form (rubric)
- Unsure how evidence from the observation process is transferred to the final evaluation; what does “preponderance” mean?
- Unclear which of the six scoring models they have chosen
- Scoring is specifically designed to avoid averaging scores
- Website is not complete – still
- Need clarification of observation language, i.e., formal and informal
- Why is the numbering/lettering done that way? It confuses the reader

Wenatchee (Marzano Framework)
- Teacher self-reflection has all 22 Danielson pieces
- Attempts to allow for collaboration and reflection
- Provides a criteria comparison between the old evaluation and the new one; takes the focus off knowledge and puts it into “action” words
- Holistic process
- The meeting design for the “changing process” and the future is really valuable (addresses non-traditional roles)
- Format/layout of principal rubric
- Evidence clear on the page; point system clear
- Sub-categories (elements) give clear, specific criteria to help teacher and administrator
- Very detailed PD plan; focused on the individual in the pilot process
- Very clear how you earn the “final” score
- Includes a % of merit (multiple measures, such as student growth)
- Targeted to what to do within the day; does not require work outside the contract
- Focuses on student growth in some unpublished content
- Self-assessment for students is different for special education students
- Concern about 4d: indicates that a teacher would be evaluated on “work having to be done outside contract” to be rated a “4”
- A few areas are difficult to tie to individuals; subjective – peer perception (1.1, 11.2)
- From a SpEd point of view, the language does not honor differences in student needs
- Specialists/SpEd – “pacing” and “adopted curriculum” do not apply
- Some things are very specific to the WSD
- 33 pages on how to be successful on the criteria, though the artifacts do not include many examples
- Self-reflection has not been developed
- Could “seem” massive; a lot to evaluate 4 times/year
- Indicates that measures/evidence and process steps are “to be developed” – when?
- How does it all fit together?
- A lot to evaluate 4 times/year
- Why did they specifically reference K-8 Make Your Day as the behavior program?
- What is meant by “looser” vs. “stricter” summative evaluation forms?
- For implementation, are they only looking at 3 criteria (goal areas?) or everything?
- Did pilot districts understand the impact across the state or just focus at the district level?
Anacortes (UW Center for Educational Leadership’s Five Dimensions of Teaching and Learning)
- Observables in the frameworks
- 3.9 supplemental material – student selects (pg. 8)
- Observables had several options – student and teacher
- Student engagement is very important
- Easy to read
- Glossary is nice to have, but could be a problem if the wording is incorrect
- Grades teacher performance – mostly what happens in the classroom
- Unclear how it all “fits together”; not clearly laid out
- Harder to be proficient than distinguished (2.1, 1.2, 2.2)
- Some criteria were difficult to distinguish from 1 to 4
- 48 pages – lots of words
- And/or follow state or district curriculum
- District policy on “blogging”?