nov-1st-2012-2-Gen-Admin

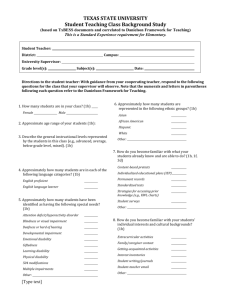

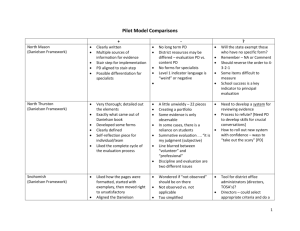

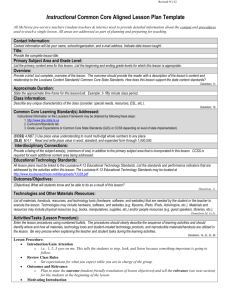

TEACHER EVALUATION TRAINING

November 1st, 2012, General Admin Meeting

By Glenn Maleyko, Ph.D., Director of Human Resources

John McKelvey, Teachscape

Some of the slides are also based on the Danielson iobservation training (Pam Rosa) from WCRESA on April 3rd and 4th.

MCL Section 380.1249 3. C (i)

1. If the school administrator conducts teacher performance evaluations, the school administrator's training and proficiency in using the evaluation tool for teachers described in subsection (2)(d), including a random sampling of his or her teacher performance evaluations to assess the quality of the school administrator's input in the teacher performance evaluation system.

Michigan Training Grant

MCL 388.1695, Sec. 95. (1) From the funds appropriated in section 11, there is allocated an amount not to exceed $1,750,000.00 for 2012-2013 for grants to districts to support professional development for principals and assistant principals in a department-approved training program for implementing educator evaluations as required under section 1249 of the revised school code, MCL 380.1249.

Grant Stipulations

- Districts can apply for $350 per building administrator.
- The training must be an approved MDE training module.
- (a) Contain instructional content on methods of evaluating teachers consistently across multiple grades and subjects.
- (b) Include training on evaluation observation that is focused on reliability and bias awareness and that instills skills needed for consistent, evidence-based observations.
- (c) Incorporate the use of videos of actual lessons for applying rubrics and consistent scoring.
- (d) Align with recommendations of the governor's council on educator effectiveness.
- (e) Provide ongoing support to maintain inter-rater reliability.

As used in this subdivision, "inter-rater reliability" means a consistency of measurement from different evaluators independently applying the same evaluation criteria to the same classroom observation.
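The definition of inter-rater reliability above can be made concrete with a small calculation. The sketch below is illustrative only: it is not part of the statute or of the Teachscape training, and the function names and sample ratings are hypothetical. It assumes two evaluators have independently scored the same observed lessons on the four Danielson levels, and it computes simple percent agreement along with Cohen's kappa, a common statistic that corrects agreement for chance.

```python
from collections import Counter


def percent_agreement(rater_a, rater_b):
    """Fraction of items on which the two raters gave the same rating."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must score the same non-empty set of items")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(rater_a)
    observed = percent_agreement(rater_a, rater_b)
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    labels = set(counts_a) | set(counts_b)
    # Chance agreement: probability both raters pick the same label by chance,
    # based on how often each rater used each label.
    expected = sum((counts_a[label] / n) * (counts_b[label] / n) for label in labels)
    if expected == 1.0:
        # Both raters used a single identical label for every item.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical ratings of the same four lesson videos by two administrators.
admin_1 = ["basic", "proficient", "proficient", "distinguished"]
admin_2 = ["basic", "proficient", "basic", "distinguished"]

agreement = percent_agreement(admin_1, admin_2)
kappa = cohens_kappa(admin_1, admin_2)
print(f"Percent agreement: {agreement:.2f}")
print(f"Cohen's kappa: {kappa:.2f}")
```

Percent agreement is easy to explain in a group setting, while kappa is more conservative because raters who both favor the same one or two levels will agree fairly often by chance alone.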
The Danielson Teachscape Model

- Highest validity and reliability, based on a state study of current evaluation models.
- The state is currently piloting four different models.
- Link: http://www.teachscape.com/products/danielson-proficiency-system

Plan

- We had a review committee.
- Brainstorm ideas.
- We will be able to receive training via the following methods:
  - Gen Admin large group discussion
  - Secondary Admin or Elementary Forum
  - Small group (for example, instructional rounds)
  - As individuals, online from any location
- All individuals will take an exam at the end to receive certification.
- Increase rater reliability and credibility.
- Working on a letter of agreement with ADSA for a comp day.

Framework

Dearborn Standards:

1. Classroom Environment
2. Preparation and Planning
3. Instruction
4. Assessment
5. Communication and Professional Responsibilities

Danielson Domains:

1. Planning and Preparation
2. Classroom Environment
3. Instruction
4. Professional Responsibilities

Danielson I-observation technique

1. Verbatim scripting of teacher or student comments
2. Non-evaluative statements of observed teacher and student behavior
3. Quantitative data: time on task, assessment, etc.
4. Environmental observations

Other Dearborn to Danielson Framework correlations

- Dearborn 4 levels: ineffective, minimally effective, effective, highly effective
- Danielson: unsatisfactory, basic, proficient, distinguished
- Elements are in both models, but Danielson has attributes.

Observations

- What are the strengths?
- What are the weaknesses?
- What do you still wonder after viewing a snapshot of the lesson? What questions?
- What recommendations might you have?