

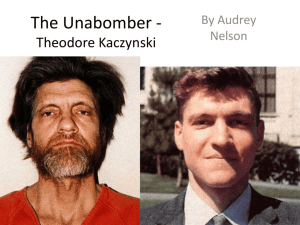

Foundational Position on AI and the Human Mind.

Selmer Bringsjord, PhD
Professor of Logic, Philosophy, Cognitive Science, and Computer Science
Rensselaer AI & Reasoning (RAIR) Laboratory (Director)
Department of Cognitive Science (Chair)
Department of Computer Science
Rensselaer Polytechnic Institute (RPI)
Troy NY 12180 USA
selmer@rpi.edu

“Strong” Artificial Intelligence (AI), according to which people are at bottom computing machines and AI will before long produce artificial persons, is something I take to be refuted, in no small part through my own work. My refutations include a number of papers and books; e.g., the book What Robots Can and Can’t Be (Kluwer, 1992). (This book is somewhat technical. A non-technical overview of my rejection of “Strong” AI can be found in my “Chess is Too Easy,” Technology Review 101.2: 23-28. This paper is available from both TR and my web site.)

“Weak” AI, however, according to which human cognition can be simulated, is, I believe, provably true. For example, in a recent book, AI and Literary Creativity, I argue that no computer program can be genuinely creative, but that we can nonetheless build systems (such as the BRUTUS storytelling system) that appear to be creative. In particular, we can strive to build computer systems that can pass what I’ve called the Short Short Story Game (S3G, for short), but not what I call the Lovelace Test. In order to pass S3G, a computer must craft a story of no more than 500 words in reaction to a “premise” given to it in the form of a sentence. The catch is that the computer’s story must be at least as good as a story written by a human given the same sentence as input. To pass the Lovelace Test (described in “Creativity, the Turing Test, and the (Better) Lovelace Test,” Minds and Machines 11: 3-27), a computer must generate artifacts (e.g., stories) that even those who know the inner workings of the computer find inexplicably good. (Knowing how BRUTUS works, I’m not impressed by its output.)

At bottom, I believe that human persons are what I call “superminds,” creatures capable of cognition that is beyond standard computation. A rather hefty book from Kluwer, Superminds: People Harness Hypercomputation, and More (due out in March, and now available), explains and defends this view.

What about technology to “improve” humanity? Am I working on anything like that, or am I just pondering the foundations of AI? Actually, I’m doing lots of applied work intended to be helpful. Readers are free to visit the web site for my department (www.cogsci.rpi.edu, and from there follow the link to my RAIR Lab). Below, you can find a picture that indicates some of the R&D I’m overseeing in this lab. Here, very briefly, are some projects in my RAIR Lab devoted to making concrete contributions. I end with a comment about AI’s “Dark Side.”

Artificial Teachers. Along with a number of collaborators, I’m working on building artificial agents capable of autonomously teaching humans (in the areas of logic, mathematics, and robotics). This field is known as Intelligent Tutoring Systems. Our R&D here is based on a new theory of human reasoning, mental metalogic, the co-creator of which is Yingrui Yang.

Synthetic Characters (in Interactive Enter(Edu)tainment and Military Simulations). I’m working with others in my lab on building robust synthetic characters that cannot be distinguished from humans in virtual environments.

Artificial Co-Writers. My work on computational creativity has led to work on intelligent systems that can co-author documents with humans. These documents are of many types, ranging from contracts and proposals to tests, screenplays, and novels.

Tracking Terrorists. With support from various funding agencies, my lab is designing systems that can anticipate the behavior of terrorists, so that they can be thwarted.

AI-Augmented Reasoning.
One thing the psychology of reasoning has without a doubt revealed is that untrained humans are pitiful at context-independent reasoning. One possible remedy is to give everybody two or more courses in symbolic logic, but this probably isn’t realistic. Another possible remedy is to somehow augment the human cognitive system with systems that can kick in whenever cognizers are faced with problems calling for context-independent reasoning. One can imagine implants, visual displays that pop up in one's field of vision, nanotechnology to change the brain so that better reasoning power is available, etc.

The “Dark Side” of AI. To conclude, one quick comment on Bill Joy’s “Why the Future Doesn’t Need Us” from Wired. Joy affirms one of the central arguments in Kaczynski’s Unabomber Manifesto (which was published jointly, under duress, by The New York Times and The Washington Post in an attempt to bring his murderous campaign to an end). The argument in question begins:

“First let us postulate that the computer scientists succeed in developing intelligent machines that can do all things better than human beings can do them. In that case presumably all work will be done by vast, highly organized systems of machines and no human effort will be necessary. Either of two cases might occur. The machines might be permitted to make all of their own decisions without human oversight, or else human control over the machines might be retained…”

Joy’s presupposition here is Kaczynski’s: viz., that AIs in the future will have autonomy or “free will.” They will not; they cannot. (I have explained why in my writings. In short, as Lady Lovelace long ago realized, machines originate nothing; they simply do what they’re programmed to do.) In light of this, if the “intelligent” machines screw up, we will be to blame!
I cannot speak about the other technologies Joy worries about, but at least when it comes to AI, I see no evil artificial masterminds in the future, despite movies such as The Terminator and The Matrix. There will be evil masterminds, for sure, but they will not be artificial. They will be human, like Kaczynski himself.