Research on Data Cleaning in Data Acquisition

Zhang Jin

(Nanjing Audit University, Nanjing 210029, Jiangsu, China)

Abstract: Based on the present condition of electronic data collected for IT audit, this paper analyzes the importance of data cleaning in electronic data acquisition. After explaining the principle of data cleaning, the paper investigates solutions to common problems in electronic data acquisition and illustrates them with an example of applying data cleaning in data acquisition.

Key words: IT audit, data acquisition, data cleaning

1. Introduction

To implement audit supervision effectively in a networked environment, the techniques of data acquisition and processing must be studied, and electronic data acquisition is an essential one of them. IT audit data acquisition and transfer have the following features [1]:

(1) Auditees' data take many forms: databases, text files, and web data.

(2) It is impossible to acquire all of an auditee's data during an audit, so the data must be classified and cleaned. Either true original data or integrated data may be needed.

(3) Because time is limited, auditees' data cannot be acquired and analyzed in detail on the spot, so it is uncertain which data are important and which are not. The usual practice is to determine a scope first and then acquire all the data within it for later processing and reconciliation.

(4) Given the volume of data and the risks involved in data acquisition, it is common practice to acquire more data than strictly needed, which usually leads to duplicated and very large data sets.

(5) Because the values of some data attributes are uncertain, they often cannot be obtained, which leads to incomplete data.

It can thus be concluded that data cleaning plays an important role in making acquired data meet the demands of audit analysis. Therefore, after explaining the principle of data cleaning, this paper investigates solutions to common problems in electronic data acquisition.

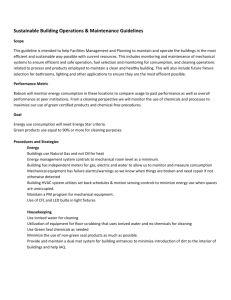

2. The principle of data cleaning

Fig.1 The Principle of Data Cleaning (dirty data are turned into higher-quality data by data cleaning, which combines business knowledge, cleaning by user, cleaning algorithms, automatic cleaning, and cleaning rules)

Data cleaning is also called data cleansing or scrubbing. Simply put, it means removing errors and inconsistencies, i.e., detecting and correcting wrong, incomplete, and duplicated data by means of statistics, data mining, or predefined cleaning rules, so as to improve data quality. The principle of data cleaning is illustrated in Figure 1 [2].
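Figure 1 shows cleaning rules being applied to dirty data to produce higher-quality data. The following is a minimal Python sketch of that idea, assuming each cleaning rule is a function that takes a record (a dict) and returns either a cleaned record or `None` to discard it. The rule names `strip_whitespace` and `drop_empty` are hypothetical illustrations, not rules from the paper's system.

```python
from typing import Callable, Optional

Record = dict[str, object]
Rule = Callable[[Record], Optional[Record]]

def strip_whitespace(rec: Record) -> Optional[Record]:
    """Hypothetical rule: trim surrounding spaces from string fields."""
    return {k: v.strip() if isinstance(v, str) else v for k, v in rec.items()}

def drop_empty(rec: Record) -> Optional[Record]:
    """Hypothetical rule: discard records whose fields are all empty."""
    if all(v in (None, "") for v in rec.values()):
        return None
    return rec

def clean(records: list[Record], rules: list[Rule]) -> list[Record]:
    """Apply every rule in order; a rule returning None removes the record."""
    cleaned = []
    for rec in records:
        current: Optional[Record] = rec
        for rule in rules:
            current = rule(current)
            if current is None:
                break
        if current is not None:
            cleaned.append(current)
    return cleaned

dirty = [{"name": " Li Wei ", "city": "Nanjing"}, {"name": "", "city": None}]
print(clean(dirty, [strip_whitespace, drop_empty]))
# [{'name': 'Li Wei', 'city': 'Nanjing'}]
```

Keeping rules as plain functions mirrors the "cleaning rules" box of Figure 1: new rules can be added to the list without changing the driver.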

3. Data Cleaning Methods

Data cleaning covers many tasks. Based on the practical needs of electronic data acquisition, this paper focuses on cleaning approximately duplicated records, cleaning incomplete data, and data standardization.

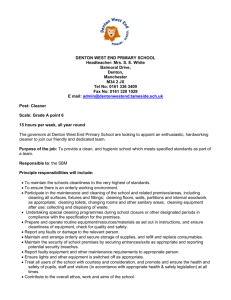

3.1 Approximately Duplicated Records Cleaning

3.1.1 The Principle of Approximately Duplicated Records Cleaning

To reduce the redundant information produced by electronic data acquisition, it is important to clean approximately duplicated records. Approximately duplicated records are records that refer to the same real-world entity but that the database system cannot recognize as duplicates because of differences in formatting or spelling. Figure 2 shows the principle of approximately duplicated records cleaning.

Fig.2 The Principle of Approximately Duplicate Records Cleaning (dirty data with duplicate records → sorting the database → records approximation detecting, repeated M times → approximately duplicate records detected → if the duplicate records satisfy some merge/purge rule, automatic merge/purge; otherwise, merged/purged by user → clean data)

The process of approximately duplicated records cleaning can be described as follows:

First, the data to be cleaned are input into the system and cleaning begins. The database-sorting module calls a sorting algorithm from the algorithm base to sort the database. After sorting, the records-approximation-detecting module calls an approximation detecting algorithm from the algorithm base and performs approximation detection within a neighboring range in order to 1) calculate the approximation (similarity) of records and 2) determine whether the records are approximately duplicated. Sorting the database only once is not enough to detect most approximately duplicated records, so multiple rounds of sorting and comparison are performed, each round with a different sort key, and the approximately duplicated records detected in all rounds are then integrated. This completes the detection of approximately duplicated records. Finally, each detected group of approximately duplicated records is processed according to the predefined purge/merge rules.
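The multi-round process above resembles the multi-pass sorted-neighborhood method. The sketch below is one possible reading, not the paper's implementation: records are dicts, each sort key is a caller-supplied function, `window` is the size of the neighboring range, and `is_similar` stands in for the record approximation test of Section 3.1.3. How detected pairs are integrated across rounds is not specified in the paper; here, as an assumption, they are merged into groups with a union-find structure.

```python
from typing import Callable

Record = dict[str, str]

def multi_pass_snm(records: list[Record],
                   keys: list[Callable[[Record], str]],
                   window: int,
                   is_similar: Callable[[Record, Record], bool]) -> list[list[int]]:
    """Return groups (lists of record indices) of approximately duplicated records."""
    parent = list(range(len(records)))

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps trees shallow
            i = parent[i]
        return i

    def union(i: int, j: int) -> None:
        parent[find(i)] = find(j)

    for key in keys:  # one sorting round per key
        order = sorted(range(len(records)), key=lambda i: key(records[i]))
        for pos, i in enumerate(order):
            # Compare only with the preceding window - 1 records in sorted order.
            for j in order[max(0, pos - window + 1):pos]:
                if is_similar(records[i], records[j]):
                    union(i, j)

    # Integrate the pairs found in all rounds into duplicate groups.
    groups: dict[int, list[int]] = {}
    for i in range(len(records)):
        groups.setdefault(find(i), []).append(i)
    return [g for g in groups.values() if len(g) > 1]

recs = [{"name": "Zhang Jin", "city": "Nanjing"},
        {"name": "Zhang  Jin", "city": "Nanjing"},
        {"name": "Li Wei", "city": "Beijing"}]
same_name = lambda a, b: a["name"].replace(" ", "") == b["name"].replace(" ", "")
print(multi_pass_snm(recs, [lambda r: r["name"], lambda r: r["city"]], 2, same_name))
# [[0, 1]]
```

Grouping by union-find makes the integration transitive: if A matches B in one round and B matches C in another, all three end up in one group for the purge/merge step.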

3.1.2 The Key Steps of Approximately Duplicated Records Cleaning

From Figure 2, the key steps of approximately duplicated records cleaning can be summarized as sorting the database, records approximation detecting, and purge/merge of approximately duplicated records. Their functions are as follows:

(1) Sorting the database

To locate all duplicated records in a data source, every possible pair of records would have to be compared, which makes the detection of approximately duplicated records a costly operation. When the amount of acquired electronic data grows very large, this approach becomes impractical. To reduce the number of record comparisons and improve detection efficiency, the usual approach is to compare records only within a limited range, i.e., to sort the database first and then compare records within a neighboring range.
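To make the saving concrete: comparing every pair of n records needs n(n − 1)/2 comparisons, while comparing each record only with the w − 1 records before it in sorted order needs (w − 1)(n − w/2) comparisons when n ≥ w − 1. The window size w = 10 below is an arbitrary assumption for illustration.

```python
def all_pairs(n: int) -> int:
    """Comparisons needed when every record pair is contrasted."""
    return n * (n - 1) // 2

def windowed(n: int, w: int) -> int:
    """Comparisons when each record is contrasted only with the w - 1 records before it."""
    return sum(min(pos, w - 1) for pos in range(n))

for n in (1_000, 100_000):
    print(n, all_pairs(n), windowed(n, 10))
# 1000 499500 8955
# 100000 4999950000 899955
```

The windowed count grows linearly in n, whereas the all-pairs count grows quadratically, which is why sorting first makes detection practical on large acquired data sets.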

(2) Records approximation detecting

Detecting the approximation of records is an essential step in cleaning approximately duplicated records: it is how we determine whether two records are approximately duplicated.

(3) Approximately duplicated record purge/merge

After the approximately duplicated records have been detected, they must be processed. For a group of approximately duplicated records, two methods can be applied:

Method 1: treat one record in the group as correct and the rest as erroneous. The task is then to delete the redundant records from the database. In this situation, the following rules can be applied:

Manual rules

Manual rules: a person selects the most accurate record in a group of approximately duplicated records to keep and deletes all the rest from the database. This is the simplest rule.

Random rules

Random rules: one record in a group of approximately duplicated records is selected at random to keep, and all the rest are deleted from the database.

The latest rules

In many cases, the latest record best represents a group of approximately duplicated records, because more up-to-date information is likely to be more accurate. For example, the address on an account in daily use is more reliable than the address on a retired account. The latest rule therefore keeps the most recent record in a group of approximately duplicated records and deletes the others.

Integrated rules

Integrated rules: the most complete record in a group of approximately duplicated records is kept and the others are deleted.

Practical rules

The more often a piece of information is repeated, the more likely it is to be accurate. For instance, if two of three records give the same supplier phone number, that repeated number is most likely accurate and reliable. Accordingly, the practical rule keeps the record with the largest number of matching values and deletes the other duplicated records.
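The automatic rules of Method 1 can be sketched in Python as functions that pick the record to keep from one group; the manual rule needs a person and is not shown. This is a sketch under assumptions: records are dicts, the timestamp lives in a hypothetical field `updated` holding ISO dates (YYYY-MM-DD), and "matching values" for the practical rule is interpreted as non-empty field values that also appear in the same field of other records in the group.

```python
import random
from typing import Optional

Record = dict[str, Optional[str]]

def random_rule(group: list[Record], rng: random.Random) -> Record:
    """Random rule: keep any one record."""
    return rng.choice(group)

def latest_rule(group: list[Record], date_field: str = "updated") -> Record:
    """Latest rule: keep the most recent record (ISO dates compare as strings)."""
    return max(group, key=lambda r: r.get(date_field) or "")

def integrated_rule(group: list[Record]) -> Record:
    """Integrated rule: keep the record with the most non-empty fields."""
    return max(group, key=lambda r: sum(v not in (None, "") for v in r.values()))

def practical_rule(group: list[Record]) -> Record:
    """Practical rule: keep the record whose values are repeated most in the others."""
    def matches(r: Record) -> int:
        return sum(1 for other in group if other is not r
                   for k, v in r.items()
                   if v not in (None, "") and other.get(k) == v)
    return max(group, key=matches)

group = [
    {"name": "Acme Ltd", "phone": "025-1111", "updated": "2004-01-05"},
    {"name": "Acme Ltd.", "phone": "025-1111", "updated": "2004-06-30"},
    {"name": "Acme Ltd", "phone": "025-2222", "updated": None},
]
keep = practical_rule(group)  # the first record: its name and phone both recur
```

Every other record in the group would then be deleted. Ties are resolved by `max`, which returns the first record with the highest score.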


Method 2: treat each approximately duplicated record as a partial source of information about the same entity, and integrate the group into a new, more complete record. This is generally done manually.
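Although the paper notes that Method 2 is usually performed by hand, a simple automatic variant can be sketched. The merge policy here is an assumption, not the paper's: for each field, keep the most frequent non-empty value across the group, with ties going to the value seen first (the documented ordering of `collections.Counter.most_common` for equal counts).

```python
from collections import Counter
from typing import Optional

Record = dict[str, Optional[str]]

def merge_group(group: list[Record]) -> Record:
    """Merge approximately duplicated records into one record, field by field."""
    fields: list[str] = []
    for rec in group:                 # collect field names in first-seen order
        for k in rec:
            if k not in fields:
                fields.append(k)
    merged: Record = {}
    for f in fields:
        values = [r.get(f) for r in group if r.get(f) not in (None, "")]
        # Most frequent non-empty value; None if the field is empty everywhere.
        merged[f] = Counter(values).most_common(1)[0][0] if values else None
    return merged

group = [
    {"name": "Acme Ltd", "phone": None, "addr": "1 Road"},
    {"name": "Acme Ltd", "phone": "025-1111", "addr": None},
    {"name": "Acme Ltd.", "phone": "025-1111", "addr": ""},
]
print(merge_group(group))
# {'name': 'Acme Ltd', 'phone': '025-1111', 'addr': '1 Road'}
```

Unlike the Method 1 rules, the merged record can contain values that no single input record had together.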

3.1.3 Improved Efficiency of Approximately Duplicated Records Detection

Completing data cleaning quickly is very important, so the efficiency of approximately duplicated records detection must be improved. The preceding analysis shows that approximation detection between records remains the major problem in approximately duplicated records detection. The key step is approximation detection between individual fields, whose efficiency determines the efficiency of the whole algorithm. Edit distance [3,4] is generally used for this. Since computing the edit distance between strings of lengths m and n takes O(m × n) time, detection can take too long on very large data unless an effective filter eliminates unnecessary edit distance computations. Therefore, to increase the efficiency of approximately duplicated records detection, the length-filtering method can be adopted to avoid unnecessary edit distance computations. The length-filtering method is based on the following theorem:

Theorem 1. Given any two strings x and y with lengths |x| and |y|, if the edit distance between them is at most k, then the difference between their lengths cannot exceed k, i.e.,

$$\big||x| - |y|\big| \le k.$$
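The paper does not spell out why Theorem 1 holds. A short justification, assuming the standard unit-cost edit operations (insertion, deletion, substitution): let an optimal edit script transform $x$ into $y$ using $I$ insertions, $D$ deletions, and $S$ substitutions, so that $d(x,y) = I + D + S$. Insertions add one character, deletions remove one, and substitutions leave the length unchanged, hence

$$
|y| = |x| + I - D
\quad\Longrightarrow\quad
\big||x| - |y|\big| = |I - D| \le I + D \le d(x,y) \le k.
$$

Read in the other direction, whenever $\big||x| - |y|\big| > k$ the edit distance must exceed $k$, so it need not be computed at all.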

Theorem 1 is the basis of length filtering. The pseudocode of the approximation detecting algorithm optimized by length filtering is as follows [5]:

Input: two records R1 and R2; δ1, the threshold for a pair of fields; and δ2, the threshold for a pair of records (the two thresholds determine whether the two records are approximately duplicated).

Output: True/False

    Rdist = 0;
    n = GetFieldNum(R1);
    m = n;
    For i = 1 to n
    {
        If R1.Field[i] == NULL OR R2.Field[i] == NULL Then
            m = m - 1;              // NULL fields are skipped and not counted
            Continue;
        End If;
        //********** Done with length-filtering method **********
        s_int = length(R1.Field[i]);
        t_int = length(R2.Field[i]);
        If abs(s_int - t_int) > δ1 Then
            Return False;           // by Theorem 1, the edit distance exceeds δ1
        Else
            Dist = d(R1.Field[i], R2.Field[i]);
        End If;
        //********** Done with length-filtering method **********
        If Dist > δ1 Then
            Return False;
        Else
            Rdist = Rdist + Dist;
        End If;
    }
    If m == 0 Then                  // no comparable fields: avoid division by zero
        Return False;
    End If;
    Rdist = Rdist / m;
    If Rdist < δ2 Then
        Return True;
    Else
        Return False;
    End If;

Here, the function d(R1.Field[i], R2.Field[i]) calculates the edit distance between fields R1.Field[i] and R2.Field[i].
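A runnable Python sketch of the pseudocode above follows. Assumptions: records are equal-length sequences of field values with `None` for NULL, and d() is taken to be the standard Levenshtein distance computed by dynamic programming (the paper cites edit distance [3,4] but does not fix a variant). A record pair with no comparable fields is treated as not approximate.

```python
from typing import Optional, Sequence

def edit_distance(s: str, t: str) -> int:
    """Levenshtein distance by dynamic programming, O(len(s) * len(t)) time."""
    prev = list(range(len(t) + 1))
    for i, cs in enumerate(s, start=1):
        curr = [i]
        for j, ct in enumerate(t, start=1):
            curr.append(min(prev[j] + 1,                # delete cs
                            curr[j - 1] + 1,            # insert ct
                            prev[j - 1] + (cs != ct)))  # substitute (or match)
        prev = curr
    return prev[-1]

def records_approximate(r1: Sequence[Optional[str]], r2: Sequence[Optional[str]],
                        delta1: int, delta2: float) -> bool:
    """Field-by-field approximation test optimized with length filtering."""
    rdist = 0
    m = len(r1)
    for f1, f2 in zip(r1, r2):
        if f1 is None or f2 is None:            # skip NULL fields
            m -= 1
            continue
        if abs(len(f1) - len(f2)) > delta1:     # length filter (Theorem 1)
            return False
        dist = edit_distance(f1, f2)
        if dist > delta1:
            return False
        rdist += dist
    if m == 0:                                  # no comparable fields
        return False
    return rdist / m < delta2

print(records_approximate(("Zhang Jin", "Nanjing", None),
                          ("Zhang Jing", "Nanjin", "210029"), 2, 1.5))  # True
print(records_approximate(("Zhang Jin",), ("Li Wei",), 2, 1.5))         # False
```

In the second call the lengths differ by 3 > δ1, so the pair is rejected before any edit distance is computed, which is exactly the saving that length filtering provides.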

The study shows that the length-filtering method can effectively reduce unnecessary edit distance calculations, shorten detection time, and improve the efficiency of approximately duplicated records detection.

3.2 The Cleaning of Incomplete Data

Because the values of some data attributes cannot be obtained during data collection, some data are incomplete. To meet the demands of audit analysis, the incomplete data in the data source must be cleaned. The principle is illustrated in Figure 3:

Fig.3 The Principle of Incompleteness Data Cleaning (dirty data with incomplete data → detecting incomplete data → is the data incomplete? If no, clean data; if yes, determining data usability → is the data usable? If no, deleting the record; if yes, inferring missing attribute values → clean data)

The main steps of incomplete data cleaning are as follows:

(1) Detecting incomplete data

The first step is to detect which data are incomplete, as the basis for further cleaning.

(2) Determining data usability

This is an important step in incomplete data cleaning. When many attribute values of a record are missing, it is not worth filling in all such records. It is therefore essential to determine the usability of records in order to decide how to handle the incompleteness. Determining usability means deciding whether each record should be kept or deleted, based on its degree of incompleteness and other factors.

The degree of incompleteness is evaluated first, that is, the percentage of missing attribute values in each record is calculated. Other factors are then considered, for instance, whether key information still exists among the remaining attribute values, before deciding whether to accept or reject the record. If an attribute of a record holds a default value, the value is treated as missing. Data incompleteness is evaluated as follows:

Let $R = \{a_1, a_2, \ldots, a_n\}$, where $a_1, a_2, \ldots, a_n$ denote the $n$ attributes of record $R$. Let $m$ denote the number of missing attribute values in record $R$ (including fields whose values are default values), $AMR$ the percentage of missing attribute values in record $R$, and $\alpha$ the threshold of the percentage of missing attributes in record $R$. If

$$AMR = \frac{m}{n} \le \alpha, \qquad \alpha \in [0,1],$$

then the record should be retained; otherwise, the record should be discarded.

When cleaning incomplete data, the value of the threshold α is determined by experts through analysis of the data source and saved in the system for later use. The default attribute values are also defined in the rules base so that m can be calculated.

Besides the degree of incompleteness, the presence of key attributes must also be taken into account. Key attributes are determined by domain experts based on their analysis of the specific data source. A record should be retained even if its AMR exceeds the threshold α, provided its key attributes are present.
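The usability decision can be sketched in Python: compute AMR = m / n, retain the record if AMR does not exceed the threshold (written α here), and otherwise retain it only if every key attribute is present. Assumptions: records are dicts, the set `DEFAULTS` stands in for the default values that the paper keeps in its rules base, and the example values α = 0.5 and key field "Customer Name" are borrowed from the practical example in Section 4.

```python
from typing import Optional

Record = dict[str, Optional[str]]
DEFAULTS = {"", "N/A"}  # hypothetical default values from the rules base

def is_missing(value: Optional[str]) -> bool:
    """A value counts as missing if it is NULL or a default value."""
    return value is None or value in DEFAULTS

def amr(record: Record) -> float:
    """Fraction of missing attribute values, AMR = m / n."""
    n = len(record)
    m = sum(is_missing(v) for v in record.values())
    return m / n if n else 1.0

def is_usable(record: Record, alpha: float, key_fields: list[str]) -> bool:
    """Retain if AMR <= alpha, or if all (non-empty list of) key attributes exist."""
    if amr(record) <= alpha:
        return True
    return bool(key_fields) and all(not is_missing(record.get(k)) for k in key_fields)

rec = {"Customer Name": "Acme Ltd", "Phone": None, "Address": "N/A", "Contact": ""}
print(amr(rec), is_usable(rec, 0.5, ["Customer Name"]))  # 0.75 True
```

The `bool(key_fields)` guard keeps an empty key list from retaining every record, since `all()` of an empty sequence is true.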

(3) Incomplete data processing

After data usability has been determined, the missing attribute values in the retained records are filled in using appropriate techniques. Some methods are as follows [6]:

Manual method: often used for important data, or when the amount of incomplete data is small.

Constant substitute method: all missing values are filled in with the same constant, such as “Unknown” or “Missing Value”. This method is simple, but because every missing value receives the same value, it may lead to wrong analysis results.

Average substitute method: the average of an attribute is used to fill in all missing values of that attribute.

Regular substitute method: the most frequent value of an attribute is used to fill in all missing values of that attribute.

Estimated attribute method: this is the most complex but most principled method. Algorithms such as regression and decision trees are used to predict the likely values of the missing attributes, and the predicted values then replace the missing ones.

The methods above are common approaches to handling missing attribute values in records. Which method to adopt should be decided according to the specific data source.
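The three substitution methods can be sketched in Python on a single attribute column, with `None` marking a missing value. This is illustrative only: the constant "Unknown" is taken from the text above, the average method assumes a numeric attribute, and the estimated attribute method is omitted because it would require training a predictive model.

```python
from collections import Counter
from statistics import mean
from typing import Optional, Union

Value = Union[str, float, None]

def fill_constant(column: list[Value], constant: Value = "Unknown") -> list[Value]:
    """Constant substitute method: every missing value gets the same constant."""
    return [constant if v is None else v for v in column]

def fill_average(column: list[Optional[float]]) -> list[Optional[float]]:
    """Average substitute method (numeric attributes only)."""
    known = [v for v in column if v is not None]
    avg = mean(known) if known else None
    return [avg if v is None else v for v in column]

def fill_most_frequent(column: list[Value]) -> list[Value]:
    """Regular substitute method: use the most frequent known value."""
    known = [v for v in column if v is not None]
    mode = Counter(known).most_common(1)[0][0] if known else None
    return [mode if v is None else v for v in column]

print(fill_average([100.0, None, 200.0]))                        # [100.0, 150.0, 200.0]
print(fill_most_frequent(["Nanjing", None, "Beijing", "Nanjing"]))
# ['Nanjing', 'Nanjing', 'Beijing', 'Nanjing']
```

Each function leaves known values untouched and only replaces the `None` entries, so the methods can be swapped per attribute as the data source requires.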


3.3 Data Standardization

Since the acquired data may come in various formats, the different formats must be standardized into a unified format to facilitate audit analysis. Two methods can be used to achieve standardization:

1) For dates and similar data types, the system's built-in functions are used.

2) IF-THEN rules are used to standardize field values.

For example:

    IF Ri(Gender) = "F" THEN
        Ri(Gender) = 0
    ELSE IF Ri(Gender) = "M" THEN
        Ri(Gender) = 1
    END IF

Here, Ri(Gender) denotes the value of the field “Gender” in record Ri. In this way, gender values recorded as either “F/M” or “0/1” are converted into a uniform “0/1” encoding, and values that are already 0 or 1 are left unchanged.
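Both standardization methods can be sketched in Python. The gender rule becomes a lookup table that maps “F/M” and “0/1” to 0/1 and returns `None` for unrecognized codes so they can be reviewed. For dates, Python's `datetime.strptime` stands in for the system's internal functions; the list of source formats is an assumption and would in practice come from analysis of the acquired data.

```python
from datetime import datetime
from typing import Optional

GENDER_MAP = {"F": 0, "M": 1, "0": 0, "1": 1}  # F/M and 0/1 both map to 0/1

def standardize_gender(value: str) -> Optional[int]:
    """IF-THEN rule expressed as a lookup; unknown codes return None."""
    return GENDER_MAP.get(value.strip().upper())

DATE_FORMATS = ["%Y-%m-%d", "%Y/%m/%d", "%d.%m.%Y", "%Y%m%d"]  # assumed source formats

def standardize_date(value: str) -> Optional[str]:
    """Convert a date in any assumed source format to ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue  # try the next candidate format
    return None

print(standardize_gender("f"), standardize_date("30.06.2004"))  # 0 2004-06-30
```

A table-driven rule is easier to extend than nested IF-THEN statements: supporting another source encoding only means adding entries to the map.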

4. A Practical Example

The cleaning methods described in Section 3 can be implemented with JBuilder 10. The windows of the approximately duplicated records cleaning and incomplete data cleaning subsystems are shown in Figures 4 and 5.

Taking acquired ERP (Enterprise Resource Planning) data as an example, the key steps of approximately duplicated records cleaning and incomplete data cleaning are as follows:

(1) The cleaning of approximately duplicated records

First of all, each parameter’s value should be confirmed. According to the analysis of the data table of

“Customer Information”, the value of each parameter is 1 2 , 1 2 .

Fig.4 The Interface of Approximately Duplicated Records Cleaning

Then, the approximately duplicated records detection process is run in the window shown in Figure 4.

Finally, the approximately duplicated records detected in “Customer Information” are deleted using the “integrated rules”, i.e., the most complete record in each group of approximately duplicated records is kept and the others are deleted.


Thus, we can complete the approximately duplicated records cleaning effectively.

(2) The cleaning of incomplete data

The value of each parameter should first be confirmed and defined in the rules database. After analysis of the “Clients database”, the threshold of the percentage of missing attribute values (α) is set to 0.5 and “Customer Name” is made the key field.

Then, the incomplete data detection process is run in the window shown in Figure 5.

Finally, the incomplete data are detected. The incomplete data detected in the “Clients Database” are processed manually, because the data are very important and their amount is small.

Thus, the incomplete data cleaning is completed effectively.

Fig.5 The Interface of Incompleteness Data Cleaning

5. Conclusion

As auditing becomes more challenging and auditees become more diverse, it is worthwhile to research how advanced data cleaning techniques can be applied in practice, so that the collected electronic data meet the increasing demands of audit analysis. Besides structured data, semi-structured XML (Extensible Markup Language) data may also appear in the future and deserve attention.

References:

[1] Computer Technology Center of the National Audit Office (审计署计算机技术中心). Overall Design Report on Computer Audit Data Acquisition and Processing Technology (计算机审计数据采集与处理技术的总体设计报告) [R]. 2004.

[2] Lee M L, Ling T W, Low W L. IntelliClean: a knowledge-based intelligent data cleaner [A]. In: Proceedings of the 6th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining [C]. Boston: ACM Press, 2000: 290-294.

[3] Chen Wei, Ding Qiu-lin. Edit distance application in data cleaning and realization with Java [J]. Computer and Information Technology, 2003, 11(6): 33-35.

[4] Bunke H, Jiang X Y, Abegglen K, et al. On the weighted mean of a pair of strings [J]. Pattern Analysis & Applications, 2002, 5(5): 23-30.

[5] Chen Wei, Ding Qiu-lin, Xie Qiang. Interactive data migration system and its approximately-detecting efficiency optimization [J]. Journal of South China University of Technology (Natural Science Edition), 2004, 22(2): 148-153.

[6] Batista G E A P A, Monard M C. An analysis of four missing data treatment methods for supervised learning [J]. Applied Artificial Intelligence, 2003, 17(5-6): 519-533.