Connecting Practice, Data and Research

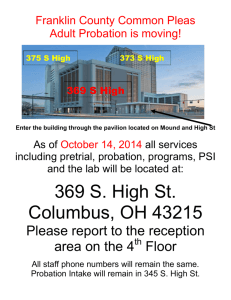

Connecticut’s Contractor Data Collection System

Maureen DeLude, Program Manager, CT Judicial Branch Court Support Services Division
Barbara Pierce Parker, Managing Associate, Crime and Justice Institute at CRJ

Introductions and Learning Objectives
By the end of the session, we hope you understand:
  ◦ Why we invested in comprehensive statewide data collection
  ◦ Key elements of correctional treatment data collection
  ◦ What’s involved in implementing a data system with a variety of contracted correctional treatment programs

A Little History
• CT Judicial Branch Court Support Services Division is under the leadership of the Chief Justice of the Supreme Court and the Chief Court Administrator
• 1,600 Employees
• Functions:
  ◦ Adult Services – Bail and Probation
  ◦ Juvenile Probation
  ◦ Juvenile Detention
  ◦ Family Relations – DV and Civil
  ◦ Contracted Treatment Services
  ◦ Administration

“The Mission of CSSD is to provide effective support services within the Judicial Branch by working collaboratively with system stakeholders to promote compliance with court orders and instill positive change in individuals, families and communities.”

• 51,000 adult probationers supervised on any given day
• On average, 16,000 probationers are involved in programming or treatment with private providers
• These providers deliver more than 20 “program models”

• More than a decade ago, CSSD adopted the principles of effective intervention internally and with its private provider network

Crime and Justice Institute at Community Resources for Justice (2009). Implementing Evidence-Based Policy and Practice in Community Corrections, 2nd ed.
Washington, DC: National Institute of Corrections.

• In 2007, the Legislature’s Appropriations Committee adopted Results Based Accountability (RBA)*
• Critical planning tool for the budgetary process
• Provides a framework to determine a program’s impact on the citizens of the State of Connecticut
*RBA was developed by Mark Friedman of the Fiscal Policy Studies Institute

• RBA asks 3 questions
  ◦ How much do we do?
  ◦ How well do we do it?
  ◦ Is anyone better off?
• CSSD piloted RBA with its internal departments and its contracted providers
  ◦ Added incentive for data collection, as budget decisions are made through the lens of RBA

The Missing Data Link
Recidivism Data

What is CDCS?
• Contractor Data Collection System
  ◦ Web-based, secure system
  ◦ Specific to each program model
• Collects client-level data from CSSD’s network of private correctional treatment providers

What data is collected?
• Referral
• Intake
• Assessment
• Services (group and individual)
• Program Outcomes & Discharge

CDCS DESIGN

Gather Information
• Purpose
  ◦ Gain a comprehensive understanding of how a model works
  ◦ Determine what information to collect and how to organize/display the data elements
• Products
  ◦ A design document to include:
      Names and definitions for all data elements, all dropdowns and other response options, and proposed screen layouts (the “what”)
      Logic (the “how”)
  ◦ A user manual (ideally)
  ◦ Risk reduction indicators (developed using RBA)
• Process
  ◦ Review program model RFP, contracts, relevant policies
  ◦ Meet with stakeholders
      CSSD Adult or Juvenile Programs and Services staff
      Probation and Bail regional managers
      A sample or all of the Program Directors for the model being designed

Questions to ask while gathering information:
• What are the program model’s outcomes?
• Who refers clients?
• What is the referral process?
• Who is served?
• Are there any clients it is important to track separately?
• How does intake occur?
• What is done at intake?
• What assessments are done?
• What services are offered?
• What is done to link clients with other services in the community?
• What is the discharge process?

Program and Test
• Programmer creates screens and backend tables according to design document
• Internal team tests
• Volunteer providers test
• Correct bugs and add identified enhancements before release

CDCS IMPLEMENTATION

Implementation
• Train users
  ◦ 4-6 hours for new users
  ◦ Overview of CDCS
  ◦ Hands-on practice
  ◦ Review of definitions and CDCS features
• Pilot and revise
  ◦ Voluntary basis
  ◦ 2-4 providers
• Rollout
  ◦ Training
  ◦ Implementation visits
• Develop reports for
  ◦ Providers
  ◦ Contract monitors
  ◦ Probation, Bail, Family Services
• Quality assurance
  ◦ Help desk
  ◦ Data quality reports
  ◦ Data quality reviews
  ◦ Data definitions
  ◦ User manuals & online training

A “DEMONSTRATION”

Referral
• Information is pre-populated from Case Management Information System based on case number

Intake
• Built-in logic to ensure data are completed

Assessments
• LSI-R and ASUS-R
• Program model-specific assessments

Services and Group Logs

Referrals to Outside Services
• Track connections to community to ensure post-program support

Activity Logs
• Case Management
• Urinalysis and Breathalyzer

Discharge
• When and why client is discharged
• Outcomes achieved during program

REPORTS

Report Features
• Report visibility
  ◦ Log in determines report availability
• Parameters
• Export
• Click through
• Comparisons with other program locations and statewide figures

Types of Reports Available
• How much do we do?
  ◦ Client Lists
  ◦ Demographics
  ◦ Program Activity
• How well do we do it?
  ◦ Data Quality
  ◦ Completion Rates
• Is anyone better off?
  ◦ Risk Reduction
• Other
  ◦ Administrative
  ◦ Data Export
  ◦ Client Level
  ◦ Utilization

Click Through Feature

Completion Rates

How We Use the Data: An Example
• Concern about low completion rates (33.8%) for bail clients at the Alternative in the Community (AIC) program
• Ran report showing program discharge reasons
• Large percentage discharged because case was disposed while clients were at the AICs

• Met with Bail Regional Managers
• Determined that Bail had no consistent way of knowing how long clients should be at AIC
• Added a data element to CDCS so Bail would know the projected end date for services
• Anticipated completion dates are now displayed on the progress report generated in CDCS and sent to Bail

• This has resulted in smaller percentages of bail clients being removed before having the opportunity to complete the AIC services

[Chart: Case Disposed Discharges for Bail AIC Clients, by quarter, Q1 2009 through Q4 2010; labeled values range from 18% to 28%]

Risk Reduction Indicators
• Built set of reports for Probation, Bail and Family Services and contracted providers to measure agency performance and client/public safety outcomes

Comparisons to All Locations

Risk Reduction Indicators – How We Use Them
• Quarterly Adult Risk Reduction meetings
  ◦ Probation
  ◦ Bail
  ◦ Family Services
  ◦ Adult Providers
• Present and review all indicators
• Identify how each entity impacts and can assist others

Risk Reduction Indicators – Examples of How We Use Them
• Probation indicator
  ◦ Discussion with adult service providers:
      Are clients showing up to the program? If not, what can probation do to ensure clients show for the program? How can programs do better to engage clients?
      Are there enough groups running to accommodate the number of referrals probation is making? If not, why?
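
The discharge-reason analysis in the bail AIC example above (what share of each quarter's discharges were "case disposed") can be sketched in a few lines of Python. This is only an illustration: the records, field layout, and reason labels are hypothetical and do not reflect the actual CDCS schema or data.

```python
from collections import Counter

# Hypothetical discharge records: (quarter, discharge_reason).
# These values are made up for illustration; they are not CDCS data.
discharges = [
    ("Q1 2009", "Completed"),
    ("Q1 2009", "Case Disposed"),
    ("Q1 2009", "Case Disposed"),
    ("Q1 2009", "Non-compliance"),
    ("Q2 2009", "Completed"),
    ("Q2 2009", "Completed"),
    ("Q2 2009", "Case Disposed"),
    ("Q2 2009", "Non-compliance"),
]


def reason_share_by_quarter(records, reason):
    """Return {quarter: percent of that quarter's discharges with `reason`}."""
    totals = Counter()   # all discharges per quarter
    matches = Counter()  # discharges with the given reason per quarter
    for quarter, discharge_reason in records:
        totals[quarter] += 1
        if discharge_reason == reason:
            matches[quarter] += 1
    return {q: round(100 * matches[q] / totals[q], 1) for q in totals}


shares = reason_share_by_quarter(discharges, "Case Disposed")
for quarter, pct in shares.items():
    print(f"{quarter}: {pct}% case disposed")
```

Tracking this percentage quarter over quarter is one way to check whether a change, such as sharing projected end dates with Bail, is followed by fewer early removals.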
Risk Reduction Indicators – Examples of How We Use Them
• AIC indicator
  ◦ Discussion with adult probation and bail:
      What are probation and bail doing to encourage participation in services?
      Do probation and bail need more information from AICs in order to more effectively support client participation?

Client Level Reports
• Progress Report
• Discharge Report
• Service Team Meeting Form
• Case Management History
• Substance Testing History

LESSONS LEARNED

Lessons Learned – Time
• Accept that system development takes a LONG time
• Use project planning methods to set realistic time frames
• Implement one service type at a time
• Allow sufficient time for implementation before using the data

Lessons Learned – Design
• Start simple
• Design with the end user in mind
  ◦ Does the data layout match business flow?
  ◦ Do the names of data elements, drop downs, etc. have meaning to the user?
• For every data element, ask yourself:
  ◦ What will we do with this information?
  ◦ How will having this information support risk reduction?
• Avoid “scope creep”
• Build in flexibility

Lessons Learned – Buy-in
• Include wide group of stakeholders in design
• Show internal and external stakeholders how they will benefit
• Respond quickly to issues and data requests
• Use feedback to improve the data system and give credit for suggestions

Lessons Learned – Implementation
• Include both line staff and management in the implementation process
• Provide hands-on training
• Provide on-going support beyond training
• Be prepared to uncover programmatic issues – have mechanism to address

Lessons Learned – Data Quality
• Clearly define data elements and expectations such as time frames for data entry
• Ideally, QA will be done by both the service provider and the funder
• Create tools such as exception reports and timeliness reports
• Develop a reward system for positive performance
• Support poor performers by providing concrete feedback and helping them develop strategies for improvement

Example of a Timeliness Report
• Shows amount of data entry in the specified period
• Shows the % of data entry within the required 5 business day time frame

Example of an Exception Report
• This report lists data entry that looks atypical. Users are asked to review each item for accuracy.

Lessons Learned – Using the Data
• Allow several months of implementation before using data
• Define, define, define!
• Find ways to reduce workload
• Defend against information overload
• Create a forum to discuss the story behind the numbers

To sum it up in one word:

Where are we now?
• 10 program models input data in 115 locations across the state
• 500 users
• 96,974 referral records to date
• 170 help desk requests per month
• More and more data requests

Where are we headed?
• Electronic referral
• Probation officer access
• Reduction in paper reporting
• Integration with billing

QUESTIONS?
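
For readers who want to see how the timeliness measure described under Lessons Learned – Data Quality might be computed, here is a minimal Python sketch. It assumes each record carries a service date and a data entry date (hypothetical fields, not the actual CDCS layout), counts weekdays only, and ignores holidays. The 5 business day threshold comes from the timeliness report slide; everything else is illustrative.

```python
from datetime import date, timedelta

REQUIRED_BUSINESS_DAYS = 5  # time frame stated on the timeliness report slide


def business_days_between(start, end):
    """Count weekdays after `start` up to and including `end` (holidays ignored)."""
    days = 0
    current = start
    while current < end:
        current += timedelta(days=1)
        if current.weekday() < 5:  # Monday=0 ... Friday=4
            days += 1
    return days


def timeliness_report(entries):
    """Return (number of entries, percent entered within the required time frame)."""
    total = len(entries)
    on_time = sum(
        1
        for service_date, entered_date in entries
        if business_days_between(service_date, entered_date) <= REQUIRED_BUSINESS_DAYS
    )
    pct = round(100 * on_time / total, 1) if total else 0.0
    return total, pct


# Hypothetical entries: (service_date, date_entered). Not real CDCS data.
entries = [
    (date(2010, 3, 1), date(2010, 3, 3)),    # Mon to Wed: 2 business days
    (date(2010, 3, 5), date(2010, 3, 12)),   # Fri to next Fri: 5 business days
    (date(2010, 3, 8), date(2010, 3, 17)),   # Mon to Wed of next week: 7 business days
    (date(2010, 3, 10), date(2010, 3, 10)),  # entered same day: 0 business days
]

total, pct_on_time = timeliness_report(entries)
print(f"{total} entries, {pct_on_time}% within {REQUIRED_BUSINESS_DAYS} business days")
```

An exception report could follow the same pattern, flagging records that fail a plausibility check instead of a deadline; what counts as atypical would need to be defined per program model.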