

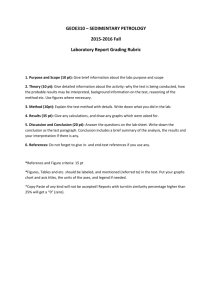

Original Article

Hold my calls: an activity for introducing the statistical process

Todd Abel
Department of Mathematical Sciences, Appalachian State University, Boone, North Carolina, USA
e-mail: abelta@appstate.edu

Lisa Poling
Reich College of Education, Appalachian State University, Boone, North Carolina, USA
e-mail: polingll@appstate.edu

Summary

This article demonstrates, through the facilitation of a statistical activity with practicing teachers, how to introduce and investigate the unique qualities of the statistical process, including: formulate a question, collect data, analyze data, and interpret data.

Keywords: Teaching; Statistical literacy; Problem solving cycle; Statistical process; Teaching statistics; Data investigation.

INTRODUCTION

Statistical reasoning, as defined by Gal (2000, 2002), is the ability to problem solve and reason with data in a way that makes data succinct and understandable. To support statistical reasoning, statisticians and statistical educators have developed standard phases of the statistical process that can be described as follows: (1) specify the problem and outline a plan; (2) collect the required data; (3) analyse and represent the data; and (4) interpret and discuss the findings (Marriott et al. 2009; Guidelines for Assessment and Instruction in Statistics Education (GAISE), 2005; Wild and Pfannkuch 1999). Teachers of statistics should understand the statistical process not only as a way of doing statistics but as a primary means of motivating, introducing, investigating and applying statistical concepts. Indeed, to be engaged in the conceptual understanding of statistics, both learner and instructor must value and understand the process of statistical thinking. Unfortunately, ‘inappropriate reasoning about statistical ideas is widespread and persistent, similar at all age levels, and quite difficult to change’ (Garfield and Ben-Zvi 2007, p. 374).
The purpose of this article is to describe a statistical activity used with practicing teachers to introduce and investigate the statistical process. While the activity is intended for secondary level learners and could be used to teach particular statistical content, the primary intent is to highlight and discuss the statistical process.

The statistical process

Learning experiences are bound by how individuals navigate information and what they deem to be pertinent and essential. According to Wild and Pfannkuch (1999), the ‘ultimate goal of statistical investigation is learning in the context sphere’ (p. 225). When selecting tasks for the classroom, the significance of the activity to the individual and the focus on statistical reasoning skills become paramount to the learning experience. Designing an investigation based on data requires that teachers identify issues of interest and frame the questions, ‘together with considerations of practicalities in collecting the data’ (MacGillivray 2007, p. 53), in a manner that adheres to the most appropriate measure of statistical analysis (MacGillivray 2007).

Using a statistical process model provides a framework that allows individuals to proceed through an exploration that promotes uniformity and reliability. The problem-solving approach in teaching statistics is ‘considered to improve students’ skills, particularly as they interact with real data’ (Marriott et al. 2009, p. 3). Although various models of the problem-solving approach exist, for this study, we used a four-step model, the GAISE framework (2005). Designed by statisticians and statistics educators in the USA, the GAISE framework provides a conceptual framework for K–12 statistics education.

© 2015 The Authors Teaching Statistics © 2015 Teaching Statistics Trust, 37, 3, pp 96–103
The GAISE framework outlines a statistical process consisting of four components: (1) formulate questions; (2) collect data; (3) analyse data; and (4) interpret results (GAISE, 2005). The GAISE framework is then organized into three sequential developmental levels. As students progress across levels, learning becomes more student directed and less teacher directed, and student engagement becomes more nuanced and sophisticated (figure 1).

Importance for teachers

Ben-Zvi and Garfield (2004) identify various factors that challenge both learners and teachers of statistics: statistical ideas are complex; lack of mathematical knowledge impedes the computational aspect of statistics; the context in which statistical problems are presented is confusing; and individuals transfer a limited understanding of mathematics to statistics, focusing on getting the correct answer rather than on interpreting results. To create environments that scaffold learning and engage students, instructional strategies must provide support so that students can experience the full range of the statistical process: enter the problem, allow for dissonance and make connections to existing concepts (Garfield and Ben-Zvi 2009). Students, as constructors of knowledge, must be actively engaged as they explore probability and statistics. Teachers therefore must provide consistent feedback, giving students opportunities to practice using statistical techniques and address misconceptions as sources of knowledge (Garfield 1995; Garfield and Ben-Zvi 2007).

Just as students need experience to understand a concept, instructors need to first experience a concept in order to fully understand and more effectively teach it (Polly and Hannafin 2011). Thus, in order to help teachers experience and understand the statistical process, we developed and implemented a task during a professional development workshop for grades 6–12 mathematics teachers that used the GAISE framework to engage participants in that process.
The consistent use of the GAISE framework allows learners to organize and enter into a statistical problem, providing the scaffold that learners need in order to make sense of specific statistical content. Creating such carefully designed sequences helps students improve reasoning and understanding (Garfield and Ben-Zvi 2007). Moreover, it emphasizes the nature of statistics and the practices and processes involved in statistical thought.

CONTEXT

In subsequent sections, an activity is outlined that was used with practicing teachers, showing the four steps in the problem-solving approach to teaching statistics. Four different groups of practicing teachers engaged in the activity at various times during 2 days of professional development. As a result, the data displayed in the examples differ depending on the group.

Fig. 1. Guidelines for Assessment and Instruction in Statistics Education framework

INTRODUCING THE STATISTICAL PROCESS WITH AN ACTIVITY

Adapted from the Cell Phone Impairment? activity from the Statistics Education Web (STEW) (nd) of the American Statistical Association, the lesson investigated the relationship between cell phone usage and reaction time. Changes in the activity were made to conform to the particular context and emphasize the statistical process, permitting multiple levels of engagement.

Formulate questions

The first phase of the statistical process involves formulating questions to be investigated. Participants were given the following prompt:

According to the National Safety Council, cell phones are a factor in 1.3 million traffic accidents each year, resulting in thousands of deaths and injuries. Many have conjectured that this is due to decreased reaction time and attentiveness. What questions does this prompt you to ask?
The open-ended nature of the prompt was useful for eliciting a variety of possible responses. This highlighted the questioning habits of teachers in the groups and motivated a discussion of characteristics of good statistics questions. Initial questions tended to either address the reliability of the statement or propose possible contributing variables. For instance,

• how do they know cell phones are a factor?
• where did the number come from – define traffic accident?
• how is cell phone use reported?
• how does this compare to other distracting activities as a factor?
• is it just cell phones or any distraction?
• what are the demographics of drivers?
• how will you measure reaction time?
• hands-free versus not?
• does type of phone matter?
• how is the phone being used?
• does age matter?
• were there other possible reasons? Is cell phone the only/leading factor?
• men or women?
• does type of driving (setting) make a difference?

These questions lack specificity and do not anticipate variability in a way that can be systematically investigated. Many of them are, however, important questions to consider and were useful for considering sources of variation and possible avenues for investigation. Listing such questions encouraged the groups to consider the characteristics of good statistical questions and prompted a discussion about what could or should be investigated. In table groups, participants then proposed possible statistics questions refined from the initial list. A direct investigation of the reasonableness of the statistics from the prompt was unrealistic, so the focus was placed on the conjectured relationship between cell phone usage and reaction time. Ultimately, each group settled on the following question:

Does cell phone usage have an impact on reaction time?

Although it does not consider any effects on driving, the final question could guide a classroom investigation and was compelling to the participants.
The nature of the initial questions highlights that teachers, as with students, need opportunities to practice and develop the statistical habit of mind of questioning. In the classroom, as in our example, it may be necessary for instructors to use questioning to guide students to a particular well-defined statistical question. If the goal of the lesson is to understand the statistical process, however, it is important that students have opportunities to propose questions, and that viable alternatives are recognized.

Collect data

Data collection procedures must account for potential sources of variation in data. While students need opportunities to design data collection procedures, allowing a class to design an experiment from scratch is often impractical. Implementing ideal data collection procedures can also be difficult. In many cases, a classroom pilot experiment may highlight the necessary content while permitting students to be actively involved in data collection. In this activity, basic data collection procedures were given, but participants were asked to analyse the procedures for possible sources of variability and then specify ways to minimize them. The following data collection procedures were proposed to the group:

1. Participants worked with a partner, and two sets of partners formed a table group of four.
2. Reaction time was quantified using a ruler drop method. One partner (the dropper) held a ruler and dropped it at random, while the other partner (the catcher) held their hand at the bottom end of the ruler and caught it as it fell. Reaction time was measured by recording the distance the ruler travelled before being caught.¹
3. A coin flip determined whether the table group would measure reaction time with a cell phone or without a cell phone first.
   a. Each partner took a turn as catcher and as dropper without a cell phone.²
   b. Each partner took a turn as catcher and as dropper. During a particular turn, the two catchers in a table group talked to each other on cell phones. To simulate a conversation, each participant created a list of ten words, which they read to the other catcher, who had to try and repeat as many back as they could. While doing that, the dropper would drop the ruler.
4. Each table group input their data into a collaborative spreadsheet to make it available to the whole group.

When prompted, participants identified and clarified several other possible sources of variability, such as the following:

• How will the dropper hold the ruler? Where and how should the catcher hold their hand?
• Where should the measurement be taken? What finger will locate the measurement?
• What level of precision is expected in the length measurements (nearest inch, quarter inch, etc.)?
• How many trials with and without the cell phone?

Using a think, pair, share protocol, data collection procedures were standardized as a group. Participants were also asked to identify limitations of the data collection plan, such as the limited population (limited to the classroom), the minimal number of trials and possible confounding of data from subjects being observed by other subjects. Despite the quasi-experimental nature of data collection, these procedures were sufficient to highlight key characteristics of experimental design and illustrate the nature of the data collection phase of the statistical process. Issues of data entry, handling and preparation were not emphasized here.

Analyse data

During the data analysis phase of the statistical process, data are explored and represented. In instructional settings, the data analysis phase may serve as an opportunity to motivate and implement new techniques, to further explore or apply previously developed techniques, or to compare different analyses. The goal of this particular activity was to introduce and investigate the statistical process rather than any particular content, so each table group was asked to examine the data by creating a visual representation of their choice, an important first step in data analysis. This permitted engagement at different levels, thereby creating opportunities to discuss the statistical process and differentiate levels of understanding.

Table 1. Types of analysis

| | Individualized | Aggregate |
|---|---|---|
| Separate conditions | Type I–C: graph compares each individual’s reaction time for each condition: with and without a cell phone. | Type A–C: graph compares the aggregate data for each condition: with and without a cell phone. |
| Difference | Type I–D: graph illustrates the difference in two reaction times for each individual. | Type A–D: graph illustrates the difference in reaction time for the overall data set, instead of by individual. |

¹ Note that the measurement of reaction time using these data collection procedures is actually a length measurement. Because the ruler will accelerate due to gravity, the length and time are not proportional. During data analysis, one could convert the lengths to times using the equation $d = 192t^{2}$, where $t$ is time in seconds and $d$ is distance in inches. However, the question can be addressed without attending to that conversion, as it was in the instance presented here. Participants did acknowledge the length-to-time conversion issue, and one group modelled the conversion. The results were close enough to proportional, and the times short enough, that this group decided to ignore the conversion to time and work strictly with the recorded lengths. Thus, in what follows, reaction time refers to the point on the ruler where the ruler was caught.

² Reaction time apps are freely available for laptops, tablets and smartphones and could be used in place of the ruler drop method.
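The length-to-time conversion noted in the ruler-drop footnote can be sketched in a few lines. The relation is d = 192t² with d in inches and t in seconds, which is d = ½gt² with g taken as roughly 384 in/s². The function name and the sample distances below are illustrative assumptions, not part of the workshop materials.

```python
import math

# Ruler-drop relation d = 192 * t**2 (d in inches, t in seconds), i.e.
# d = (1/2) * g * t**2 with g taken as roughly 384 in/s^2.
HALF_G_INCHES_PER_S2 = 192.0


def catch_time_seconds(distance_inches):
    """Convert the distance a ruler fell (inches) to its fall time (seconds)."""
    if distance_inches < 0:
        raise ValueError("distance must be non-negative")
    return math.sqrt(distance_inches / HALF_G_INCHES_PER_S2)


# Hypothetical catch distances, chosen only to show the shape of the relation.
for d in (3.0, 6.0, 12.0):
    print(f"{d:5.1f} in -> {catch_time_seconds(d):.3f} s")
```

Because time grows with the square root of distance, a catch at 12 inches corresponds to 0.25 s, only twice the 0.125 s of a catch at 3 inches. This is the sense in which length and time are not proportional, although the ordering of measurements is preserved, so comparisons of which condition is slower are unaffected.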
Although this group did not explore further, more sophisticated analysis techniques would certainly be appropriate given different learning goals.

It was observed that table groups organized their choice of visual representation by two main categorizations: individualized versus aggregate and separate versus difference, as shown in table 1. Below, examples of each type are shown, and conceptual underpinnings (or misunderstandings) underlying each are discussed. Note that each table group of four participants created a graph for the data from their entire class. Four different classes took part in the activity, so the data differ somewhat among the graphs shown below.

Type I–C table groups placed an emphasis on representing each individual time, both with and without the cell phone. These graphs attempted to give an overall sense of which time was generally higher, without regard to the magnitude of the differences for each individual and without attempting to summarize the data. A variety of type I–C representations was used. Some table groups created double bar graphs showing each individual’s reaction time with and without the cell phone. Other table groups created a scatterplot, with each ordered pair (without cell phone and with cell phone) representing an individual. Effect on reaction time was visualized by comparing points to the line y = x. Other table groups created line graphs that attempted to show the same person-by-person comparison. While each of these graphs gives the overall impression that the cell phone reaction times were typically longer, they do not give any specific information about the group as a whole, attending instead to individuals. In addition, the line graph is particularly misleading since the data on the horizontal axis (individuals) are not ordered (figure 2).

Fig. 2. Examples of type I–C graphs

Only one table group created a graph of type I–D.
This group calculated the change in reaction time, (with cell phone) − (no cell phone), for each individual, then plotted each one using a type of bar graph. This does attend to the differences in reaction time, but still emphasizes each individual rather than giving any sense of the data as a whole. The graph itself is problematic – it is not initially clear that each bar represents an individual (at first glance, it looks more like a histogram), and it makes the horizontal axis appear to be ordered when it is not (figure 3).

Fig. 3. An example of a type I–D bar graph

The most popular type of graph was type A–C. These groups compared the aggregated data with and without a cell phone and used summary statistics to display information about each treatment on the entire group. One table group simply found the mean of the reaction times with and without the cell phone and created a bar graph to show the two means. More common, however, was a comparative box plot – one box plot for the aggregate data within each condition. Such graphs move beyond looking at each individual subject and instead take advantage of aggregate data. However, they do not take account of the experimental situation and hence do not convey any specific information about the differences. This problem is emphasized by an incorrect interpretation described below (figure 4).

Type A–D graphs considered the difference (with cell phone − without cell phone) for each individual, then created graphs that looked at the data as a whole instead of individually. For instance, one group created a box plot using the differences, and several groups created histograms of the differences. Such graphs illustrated the most sophisticated representation of the data, moving beyond individual responses and giving a sense of the typical difference in reaction times.
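The contrast between summarizing each condition separately (type A–C) and summarizing per-person differences (type A–D) can be sketched as follows. This is a minimal illustration under stated assumptions: the numbers are invented and deliberately extreme, not workshop data, and the function names are hypothetical. The quartiles use the `inclusive` method of Python's `statistics.quantiles`, which is only one of several quartile conventions.

```python
from statistics import mean, median, quantiles

# HYPOTHETICAL catch distances in inches, one entry per person, in the same
# order in both lists. Invented for illustration; NOT data from the workshop.
without_phone = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 9.0, 10.0]
with_phone = [9.5, 10.5, 11.0, 12.0, 4.5, 5.0, 8.0, 8.5]


def five_number_summary(values):
    """Return (min, Q1, median, Q3, max), the numbers a box plot displays."""
    q1, q2, q3 = quantiles(values, n=4, method="inclusive")
    return (min(values), q1, q2, q3, max(values))


def paired_differences(with_values, without_values):
    """Return with-minus-without for each person (the type I-D quantities)."""
    if len(with_values) != len(without_values):
        raise ValueError("paired data need one value per person per condition")
    return [w - wo for w, wo in zip(with_values, without_values)]


def share_slower_with_phone(differences):
    """Fraction of people whose catch distance was longer with the phone."""
    return sum(d > 0 for d in differences) / len(differences)


# Type A-C: summarize each condition separately, ignoring the pairing.
summary_without = five_number_summary(without_phone)
summary_with = five_number_summary(with_phone)

# Type A-D: take the difference per person first, then summarize.
differences = paired_differences(with_phone, without_phone)
summary_diff = five_number_summary(differences)

print("without phone:", summary_without)
print("with phone:   ", summary_with)
print("differences:  ", summary_diff)
print("mean difference:", mean(differences))
print("median difference:", median(differences))
print("share slower with phone:", share_slower_with_phone(differences))
```

In this invented data set, the first quartile with the phone (7.25) exceeds the third quartile without it (6.75), so comparative box plots would look clearly separated, yet only half of the individuals were slower with the phone. Statements about how many individuals were affected need the paired differences, not the two separate summaries.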
Note that only the A–D graphs capture both the overall effect of using cell phones and the variation in that effect across individuals (figure 5).

Fig. 4. Examples of type A–C graphs

Fig. 5. Examples of type A–D graphs

Allowing participants to choose and create their own displays of data provided opportunities to compare the different data displays, highlighting the strengths and weaknesses, and possible misapplications, of each. It also highlighted that the choice of appropriate graphs is an important topic for teachers to consider, and one with which they may need to gain experience. Comparing different graphs also prompted discussions about what attributes of the data were important for addressing the original question.

Interpret results

It can be challenging to generalize from data without making unsupported claims. Each table group was asked to write the conclusions that they could draw from their graphs. Several examples are represented in the pictures above. The participants’ conclusions often reflected the type of representations and the sophistication of their understanding of data. For instance, a very general conclusion such as ‘the reaction time is greater when using a cell phone’ reflected the nonspecific graphical analysis undertaken in the task. Type I–C and type I–D graphs did not illustrate any specific measurements of differences in reaction time, permitting only general overall impressions of the effect. Table groups creating type A–C and type A–D graphs, however, could use summary statistics to support their conclusions. Although type A–C graphs ignored the paired nature of the data, in this particular case, the contrast between using and not using cell phones was far greater than variation across individuals, allowing participants who created comparative box plots to make statements such as the following:

• ‘[The] median is higher for use of the cell phone. Maximum data is much higher for reaction time with cell phone.’
• ‘All measurements of quartiles without the cell phone are less than the quartile measures of with a cell phone.’

Asking participants to draw and support conclusions from their graphs also highlighted some misconceptions and problems with choice of graphical representation. For instance, the table group whose work appears in figure 6 created a comparative box plot and concluded that the graph ‘shows that over 75% can react faster without the distraction of a cell phone!’ While it is true that 75% of the cell phone reaction times were greater than at least 75% of the without cell phone reaction times, this does not imply that 75% of individuals had faster reaction times without cell phones. Since differences on individuals were not used, the graph cannot be used to make such statements (figure 6).

Fig. 6. A misleading representation of data with misconceptions in the conclusion

To emphasize the importance of interpreting results in a meaningful and well-supported way, participants were asked if they were confident enough in their conclusions to

1. change their own behaviour,
2. use it as evidence to influence the behaviour of others or
3. present it to other statistically proficient people.

The subsequent discussion allowed the group to not just propose conclusions but also support them and analyse the strength of those conclusions.

DISCUSSION

Summarizing the activity

Since the learning goal of this activity was the statistical process, the groups identified the portion of the activity that corresponded to each phase of the statistical process, identifying the key characteristics.
For instance, standardizing data collection procedures in order to isolate a single source of variability (as much as possible) was noted as a key characteristic of the collect data phase. Making choices about how to organize, summarize and display the data was identified as a key characteristic of the analyse data phase.

The reaction time task is typical of many activities in that it permits adaptations that would increase or decrease the statistical sophistication of any given component. For instance, the question could be posed for the class, and only individualized data might be considered. Such adaptations may be appropriate for an initial exposure to the statistical process if the issues of variability involved in each component are discussed. For a more sophisticated approach to the activity, students could design data collection procedures that incorporate random sampling outside the classroom, and data analysis could include tests of significance and measures of variability.

CONCLUSION

To promote the statistical process, that is, the implementation of the problem-solving approach for statistics, teacher participants became students. Participants were asked to identify the key ideas they would like to carry forward into their classrooms. All groups identified the nature of statistical investigation as a major point of emphasis. For instance, one group noted that they realized they needed to ‘let students be flexible – [it’s] not a linear path’, while another described it as ‘not a set pattern’. The idea of different levels of sophistication was also meaningful to many groups. Opportunities such as this are important for both teachers and students. Using the statistical investigation process as a framework for the activity structured the experience and supported participant growth in statistical understanding.
This activity illustrated how intentionally designed learning opportunities can support understanding of the statistical process and the nature of statistical work.

References

Ben-Zvi, D. and Garfield, J. (Eds) (2004). The Challenge of Developing Statistical Literacy, Reasoning, and Thinking, Dordrecht, Netherlands: Kluwer.
Gal, I. (Ed) (2000). Adult Numeracy Development: Theory, Research, Practice, Cresskill, NJ: Hampton Press.
Gal, I. (2002). Adults’ statistical literacy: meaning, components, responsibilities. International Statistical Review, 70(1), 1–5.
Garfield, J. (1995). How students learn statistics. International Statistical Review, 63, 25–34.
Garfield, J. and Ben-Zvi, D. (2007). How students learn statistics revisited: a current review of research on teaching and learning statistics. International Statistical Review, 75, 372–396.
Garfield, J. and Ben-Zvi, D. (2009). Helping students develop statistical reasoning: implementing a statistical reasoning learning environment. Teaching Statistics, 31, 72–77.
Franklin, C., Kader, G., Mewborn, D., Moreno, J., Peck, R., Perry, M. and Scheaffer, R. (2005). Guidelines for Assessment and Instruction in Statistics Education (GAISE) Report: A Pre-K–12 Curriculum Framework, Alexandria, VA: American Statistical Association.
MacGillivray, H. (2007). Clasping hands and folding arms: a data investigation. Teaching Statistics, 29, 49–53.
Marriott, J., Davies, N. and Gibson, L. (2009). Teaching, learning and assessing statistical problem solving. Journal of Statistics Education, 17(1). Retrieved from http://www.amstat.org/publications/jse/v17n1/marriott.html (accessed 28 January 2015).
Polly, D. and Hannafin, M.J. (2011). Examining how learner-centered professional development influences teachers’ espoused and enacted practices. The Journal of Educational Research, 104, 120–130.
Statistics Education Web (STEW). (nd) Retrieved from http://www.amstat.org/education/stew/ (accessed May 2014).
Wild, C.J. and Pfannkuch, M. (1999). Statistical thinking in empirical enquiry. International Statistical Review, 67(3), 223–265.