Exercise Session 6: V & V Software Engineering Testing strategy


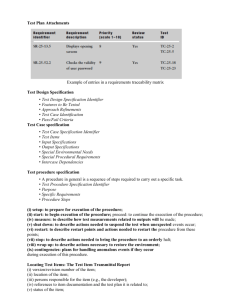

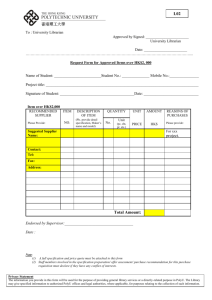

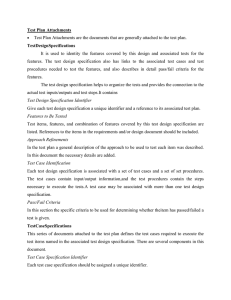

Chair of Software Engineering. Software Engineering, Prof. Dr. Bertrand Meyer, March–June 2007.

Testing strategy

Planning & structuring the testing of a large program:
- Defining the process
  - Test plan
  - Input and output documents
- Who is testing?
  - Developers / special testing teams / customer
- What test levels do we need?
  - Unit, integration, system, acceptance, regression
- Order of tests
  - Top-down, bottom-up, combination
- Running the tests
  - Manually
  - Use of tools
  - Automatically

Who tests

Any significant project should have a separate QA team.
Why: the almost infinite human propensity to self-delusion.

Unit tests: the developers
- My suggestion: pair each developer with another who serves as “personal tester”

Integration test: developer or QA team
System test: QA team
Acceptance test: customer & QA team

Classifying reports: by severity

Classification must be defined in advance.
Applied, in test assessment, to every reported failure.
Analyzes each failure to determine whether it reflects a fault, and if so, how damaging.

Example classification (from a real project):
- Not a fault
- Minor
- Serious
- Blocking

Classifying reports: by status

From a real project:
- Registered
- Open
- Re-opened
- Corrected
- Integrated
- Delivered
- Closed
- Not retained
- Irreproducible
- Cancelled

Assessment process (from real project)

[Diagram: state-transition chart for a failure report, with the states Registered, Open, Irreproducible, Corrected, Integrated, Reopened, Closed and Cancelled. Transitions are labelled with the party that triggers them (Customer, Project, Developer); one transition is labelled "Regression bug!".]

Some responsibilities to be defined

Who runs each kind of test?
Who is responsible for assigning severity and status?
What is the procedure for disputing such an assignment?
What are the consequences on the project of a failure at each severity level? (e.g. “the product shall be accepted when two successive rounds of testing, at least one week apart, have evidenced fewer than m serious faults and no blocking faults”).

Test planning: IEEE 829

IEEE Standard for Software Test Documentation, 1998.
Can be found at: http://tinyurl.com/35pcp6 (shortcut for: http://www.ruleworks.co.uk/testguide/documents/IEEE%20Standard%20for%20Software%20Test%20Documentation..pdf)
Specifies a set of test documents and their form.
For an overview, see the Wikipedia entry.

IEEE-829-conformant test elements

Test plan:
- “Prescribes scope, approach, resources, & schedule of testing. Identifies items & features to test, tasks to perform, personnel responsible for each task, and risks associated with plan”*

Test specification documents:
- Test design specification: identifies features to be covered by tests, constraints on test process
- Test case specification: describes the test suite
- Test procedure specification: defines testing steps

Test reporting documents:
- Test item transmittal report
- Test log
- Test incident report
- Test summary report

*Citation slightly abridged

Test plan

Shows:
- How the testing will be done
- Who will do it
- What will be tested
- How long it will take
- What the test coverage will be, i.e. what quality level is required

IEEE 829: Test plan structure

a) Test plan identifier
b) Introduction
c) Test items
d) Features to be tested
e) Features not to be tested
f) Approach
g) Item pass/fail criteria
h) Suspension criteria and resumption requirements
i) Test deliverables
j) Testing tasks
k) Environmental needs
l) Responsibilities
m) Staffing and training needs
n) Schedule
o) Risks and contingencies
p) Approvals

Test design/Test case specification

The test design specification details:
- Test conditions
- Expected results
- Test pass criteria

The test case specification details:
- Specifies the test data for use in running the test conditions identified in the test design specification

Test procedure spec./ transmittal report

The test procedure specification details:
- How to run each test, including any set-up preconditions and the steps that need to be followed

The test item transmittal report:
- Reports on when tested software components have progressed from one stage of testing to the next

Test log / test incident report

The test log records:
- which test cases were run, who ran them, in what order, and whether each test passed or failed

The test incident report details:
- for any test that failed, the actual versus expected result, and other information intended to throw light on why a test has failed that will help in its resolution. The report will also include, if possible, an assessment of the impact upon testing of an incident.

Test summary report

- A management report providing any important information uncovered by the tests accomplished, and including assessments of the quality of the testing effort, the quality of the software system under test, and statistics derived from Incident Reports.
- The report also records what testing was done and how long it took, in order to improve any future test planning.
- This final document is used to indicate whether the software system under test is fit for purpose according to whether or not it has met acceptance criteria defined by project stakeholders.

A small case study

Consider a small library database with the following transactions:
1. Check out a copy of a book. Return a copy of a book.
2. Add a copy of a book to the library. Remove a copy of a book from the library.
3. Get the list of books by a particular author or in a particular subject area.
4. Find out the list of books currently checked out by a particular borrower.
5. Find out what borrower last checked out a particular copy of a book.

There are two types of users: staff users and ordinary borrowers.
Transactions 1, 2, 4, and 5 are restricted to staff users, except that ordinary borrowers can perform transaction 4 to find out the list of books currently borrowed by themselves.

The database must also satisfy the following constraints:
- All copies in the library must be available for checkout or be checked out.
- No copy of the book may be both available and checked out at the same time.
- A borrower may not have more than a predefined number of books checked out at one time.

Source: Wing 88
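To make the case study concrete enough to write tests against, its transactions, access rules and constraints can be sketched as a small model. The Python below is only one possible reading of the specification, not part of the original exercise. The names `User`, `Copy`, `Library`, `max_loans` and all method names are assumptions, and borrowers are identified simply by name. Storing the current borrower on each copy is a design choice that makes "available" and "checked out" mutually exclusive by construction.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class User:
    name: str
    is_staff: bool = False


@dataclass
class Copy:
    title: str
    author: str
    subject: str
    borrower: Optional[str] = None       # None means "available for checkout"
    last_borrower: Optional[str] = None  # remembered after the copy is returned


class Library:
    """Hypothetical model of the library database from the case study."""

    def __init__(self, max_loans: int):
        self.max_loans = max_loans       # the "predefined number" of the third constraint
        self.copies = {}                 # copy id -> Copy
        self._next_id = 1

    @staticmethod
    def _require_staff(actor: User) -> None:
        if not actor.is_staff:
            raise PermissionError("transaction restricted to staff users")

    # Transaction 1: check out / return a copy (staff only).
    def check_out(self, actor: User, copy_id: int, borrower: str) -> None:
        self._require_staff(actor)
        copy = self.copies[copy_id]
        if copy.borrower is not None:
            raise ValueError("copy is already checked out")
        if len(self.loans_of(actor, borrower)) >= self.max_loans:
            raise ValueError("borrower has reached the loan limit")
        copy.borrower = copy.last_borrower = borrower

    def return_copy(self, actor: User, copy_id: int) -> None:
        self._require_staff(actor)
        copy = self.copies[copy_id]
        if copy.borrower is None:
            raise ValueError("copy is not checked out")
        copy.borrower = None

    # Transaction 2: add / remove a copy (staff only).
    def add_copy(self, actor: User, title: str, author: str, subject: str) -> int:
        self._require_staff(actor)
        copy_id = self._next_id
        self._next_id += 1
        self.copies[copy_id] = Copy(title, author, subject)
        return copy_id

    def remove_copy(self, actor: User, copy_id: int) -> None:
        self._require_staff(actor)
        del self.copies[copy_id]

    # Transaction 3: titles by author and/or subject (open to every user).
    def books_by(self, author: Optional[str] = None,
                 subject: Optional[str] = None) -> list[str]:
        return sorted({c.title for c in self.copies.values()
                       if (author is None or c.author == author)
                       and (subject is None or c.subject == subject)})

    # Transaction 4: staff may ask about anyone, borrowers only about themselves.
    def loans_of(self, actor: User, borrower: str) -> list[int]:
        if not actor.is_staff and actor.name != borrower:
            raise PermissionError("borrowers may only list their own loans")
        return [cid for cid, c in self.copies.items() if c.borrower == borrower]

    # Transaction 5: who last checked out this copy (staff only).
    def last_borrower(self, actor: User, copy_id: int) -> Optional[str]:
        self._require_staff(actor)
        return self.copies[copy_id].last_borrower


if __name__ == "__main__":
    staff, bob = User("alice", is_staff=True), User("bob")
    library = Library(max_loans=1)
    first = library.add_copy(staff, "Title A", "Author X", "testing")
    library.check_out(staff, first, "bob")
    print(library.loans_of(bob, "bob"))
```

Each rule in the specification then corresponds to a test condition in the sense of the test design specification: a permitted call, a call rejected with `PermissionError`, or a call rejected with `ValueError` because it would break a constraint.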