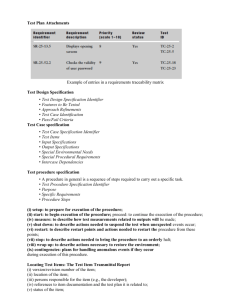

Test Plan Attachments

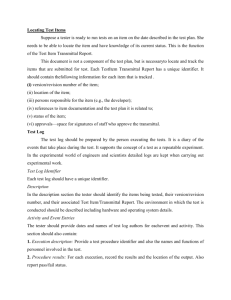

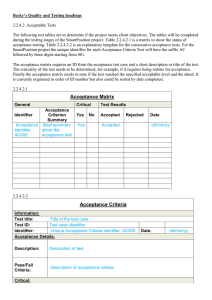

Test Plan Attachments are the documents that are generally attached to the test plan.

Test Design Specifications

A test design specification is used to identify the features covered by this design and the associated tests for those features. It also links to the associated test cases and test procedures needed to test the features, and describes in detail the pass/fail criteria for the features. The test design specification helps to organize the tests and provides the connection to the actual test inputs/outputs and test steps. It contains the following components.

Test Design Specification Identifier: Give each test design specification a unique identifier and a reference to its associated test plan.

Features to Be Tested: The test items, features, and combinations of features covered by this test design specification are listed. References to the items in the requirements and/or design document should be included.

Approach Refinements: The test plan gives a general description of the approach to be used to test each item. This document adds the necessary details.

Test Case Identification: Each test design specification is associated with a set of test cases and a set of test procedures. The test cases contain input/output information, and the test procedures contain the steps necessary to execute the tests. A test case may be associated with more than one test design specification.

Pass/Fail Criteria: This section gives the specific criteria used to determine whether the item has passed or failed a test.

Test Case Specifications

This series of documents attached to the test plan defines the test cases required to execute the test items named in the associated test design specification. There are several components in this document.

Test Case Specification Identifier: Each test case specification should be assigned a unique identifier.

Test Items: This component names the test items and features to be tested by this test case specification.
References to related documents that describe the items and features, and how they are used, should be listed.

Input Specifications: This component of the test case specification contains the actual inputs needed to execute the test. Inputs may be described as specific values, or as file names, tables, databases, parameters passed by the operating system, and so on. Any special relationships between the inputs should be identified.

Output Specifications: All outputs expected from the test should be identified. If an output is to be a specific value, it should be stated. If the output is a specific feature, such as a level of performance, it should also be stated. The output specifications are necessary to determine whether the item has passed or failed the test.

Special Environmental Needs: Any specific hardware and hardware configurations needed to execute this test case should be identified. Special software required to execute the test, such as compilers, simulators, and test coverage tools, should be described, as well as any laboratory space and equipment needed.

Special Procedural Requirements: Describe any special conditions or constraints that apply to the test procedures associated with this test.

Intercase Dependencies: In this section the test planner should describe any relationships between this test case and others, and the nature of each relationship. The test case identifiers of all related tests should be given.

Test Procedure Specifications

A procedure in general is a sequence of steps required to carry out a specific task.

Test Procedure Specification Identifier: Each test procedure specification should be assigned a unique identifier.

Purpose: Describe the purpose of this test procedure and reference any test cases it executes.

Specific Requirements: List any special requirements for this procedure, such as software, hardware, and special training.

Procedure Steps: Here the actual steps of the procedure are described.
Include the methods and documents for recording (logging) results and for recording incidents. These will have associations with the test logs and test incident reports that result from a test run. A test incident report is only required when an unexpected output is observed. Steps include:

(i) setup: to prepare for execution of the procedure;
(ii) start: to begin execution of the procedure;
(iii) proceed: to continue the execution of the procedure;
(iv) measure: to describe how test measurements related to outputs will be made;
(v) shut down: to describe actions needed to suspend the test when unexpected events occur;
(vi) restart: to describe restart points and actions needed to restart the procedure from these points;
(vii) stop: to describe actions needed to bring the procedure to an orderly halt;
(viii) wrap up: to describe actions necessary to restore the environment;
(ix) contingencies: plans for handling anomalous events if they occur during execution of this procedure.

Locating Test Items

Suppose a tester is ready to run tests on an item on the date described in the test plan. She needs to be able to locate the item and know its current status. This is the function of the Test Item Transmittal Report. This document is not a component of the test plan, but it is necessary to locate and track the items that are submitted for test. Each Test Item Transmittal Report has a unique identifier. It should contain the following information for each item that is tracked:

(i) version/revision number of the item;
(ii) location of the item;
(iii) persons responsible for the item (e.g., the developer);
(iv) references to item documentation and the test plan it is related to;
(v) status of the item;
(vi) approvals: space for signatures of staff who approve the transmittal.

Test Log

The test log should be prepared by the person executing the tests. It is a diary of the events that take place during the test. It supports the concept of a test as a repeatable experiment.
In the experimental world of engineers and scientists, detailed logs are kept when carrying out experimental work.

Test Log Identifier: Each test log should have a unique identifier.

Description: In the description section the tester should identify the items being tested, their version/revision number, and their associated Test Item Transmittal Report. The environment in which the test is conducted should be described, including hardware and operating system details.

Activity and Event Entries: The tester should provide dates and names of test log authors for each event and activity. This section should also contain:

1. Execution description: Provide a test procedure identifier and also the names and functions of personnel involved in the test.
2. Procedure results: For each execution, record the results and the location of the output. Also report pass/fail status.
3. Environmental information: Provide any environmental conditions specific to this test.
4. Anomalous events: Any events occurring before/after an unexpected event should be recorded. If a tester is unable to start or complete a test procedure, details relating to these happenings should be recorded.
5. Incident report identifiers: Record the identifiers of incident reports generated while the test is being executed.

Test Incident Report

The tester should record in a test incident report (sometimes called a problem report) any event that occurs during the execution of the tests that is unexpected or unexplainable and that requires a follow-up investigation.

1. Test Incident Report identifier: to uniquely identify this report.
2. Summary: to identify the test items involved, and the test procedures, test cases, and test log associated with this report.
3. Incident description: this should describe time and date, testers, observers, environment, inputs, expected outputs, actual outputs, anomalies, procedure step, and attempts to repeat.
4. Impact: what impact will this incident have on the testing effort, the test plans, the test procedures, and the test cases? A severity rating should be inserted here.

Test Summary Report

This report is prepared when testing is complete. It is a summary of the results of the testing efforts.

1. Test Summary Report identifier: to uniquely identify this report.
2. Variances: these are descriptions of any variances of the test items from their original design. Deviations from the test plan, test procedures, and test designs, and the reasons for them, are discussed.
3. Comprehensiveness assessment: the document author discusses the comprehensiveness of the test effort as compared to the test objectives and test completeness criteria described in the test plan.
4. Summary of results: the document author summarizes the testing results. All resolved incidents and their solutions should be described. Unresolved incidents should be recorded.
5. Evaluation: in this section the author evaluates each test item based on test results. Did it pass or fail the tests? If it failed, what was the level of severity of the failure?
6. Summary of activities: all testing activities and events are summarized. Resource consumption, actual task durations, and hardware and software tool usage should be recorded.
7. Approvals: the names of all persons who are needed to approve this document are listed, with space for signatures and dates.
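
The relationship between the test log, the test incident reports, and the "summary of results" part of the test summary report can be sketched in a few lines of Python. This is only an illustrative sketch, not a prescribed format: the class names (`IncidentReport`, `LogEntry`, `ExecutionLog`), the field names, the function `summarize`, the numeric severity scale, and all sample values are assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class IncidentReport:
    """Test incident report: identifier, summary, description, impact."""
    identifier: str
    summary: str
    description: str
    severity: int          # hypothetical scale: 1 (minor) to 4 (critical)
    resolved: bool = False


@dataclass
class LogEntry:
    """One activity/event entry in a test log."""
    date: str
    author: str
    procedure_id: str      # test procedure that was executed
    passed: bool           # pass/fail status of this execution
    output_location: str   # where the output was stored
    incident_ids: List[str] = field(default_factory=list)


@dataclass
class ExecutionLog:
    """Test log: unique identifier, description, and event entries."""
    identifier: str
    description: str
    entries: List[LogEntry] = field(default_factory=list)


def summarize(log: ExecutionLog, incidents: List[IncidentReport]) -> Dict:
    """Collect counts and incident lists for a 'summary of results'."""
    by_id = {inc.identifier: inc for inc in incidents}
    # Incident identifiers recorded in the log that have a matching report.
    referenced = {iid for e in log.entries for iid in e.incident_ids if iid in by_id}
    passed = sum(1 for e in log.entries if e.passed)
    return {
        "log": log.identifier,
        "executed": len(log.entries),
        "passed": passed,
        "failed": len(log.entries) - passed,
        "resolved_incidents": sorted(i for i in referenced if by_id[i].resolved),
        "unresolved_incidents": sorted(i for i in referenced if not by_id[i].resolved),
    }


# Illustrative data only; identifiers, dates, and paths are made up.
demo_log = ExecutionLog("TL-01", "Item X, revision 2, on the test machine")
demo_log.entries.append(LogEntry("day 1", "tester A", "TP-01", True, "out/tp01.txt"))
demo_log.entries.append(
    LogEntry("day 1", "tester A", "TP-02", False, "out/tp02.txt", ["IR-01"])
)
demo_incidents = [
    IncidentReport("IR-01", "TP-02 on item X", "actual output differed from expected", 3)
]
print(summarize(demo_log, demo_incidents))
```

Keeping incident identifiers inside each log entry mirrors the "incident report identifiers" item of the test log, so the summary can separate resolved from unresolved incidents without re-reading the individual reports.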