

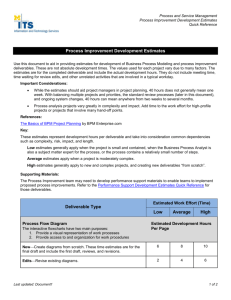

Software Engineering: SEG3300 A & B
Deliverable 3: Test Plan

Submission Date: Noon, Wednesday, April 7, 2004 (please submit to Prof. Probert or a TA in SITE 4051)

TEST PLAN and EXAMPLE TEST CASES

Introduction: This third deliverable builds on the completed Deliverables 1 and 2, which specify and describe the requirements, the use cases, and the design in the form of UML diagrams: Domain Analysis and Requirements (Deliverable 1) and Use-Case Analysis and Systems Design (Deliverable 2).

Context and Scope: The context of this deliverable is the Testing Process shown in Figure 1. Its scope is a subset of the activities included in the Test Plan and Test Case Design blocks. The work items you have to complete are listed below under "What you have to do". The layout of a comprehensive Test Plan is often governed by standards such as IEEE 829 (outlined in Appendix 1). This deliverable is aimed at completing a small part of the Test Plan and a selected number of Test Cases.

Figure 1: The Testing Process (blocks: Designed or Modified Code, Testing Program, Test Plan, Test Case Design, TC Review & Coverage, Test Case Execution, Test Results, Problem Reports; feedback loops: Designer's Loop and Tester's Loop)

What you have to do:

1. Test Plan Overview (20 marks): This part is inspired by the first section of the IEEE 829 standard (Appendix 1). Provide the following:
   A. Introduction.
   B. Overview of the features/functionalities to be tested (and their pass/fail criteria).
   C. Description of the technique used to assess the completeness of the testing, and the completion criteria.
   D. Environmental needs: identify the relevant tools that will be used to support your testing process.

2. Requirement List and Traceability Tables (30 marks):
   A. Provide a requirements list (updated from Deliverables 1 and 2), with unique numbers/identifiers.
   B. Enumerate 12 test cases (TC1-TC12) applicable to your system, with good coverage of your requirements.
      Give each a number (TC1-TC12), a relevant name, and a one-line description of its purpose.
   C. Test Case, Use Case and Requirements Traceability Table: provide traceability links from these test cases to your use cases and to your requirements by filling in the table below. Test cases can sometimes be related to one another, e.g. when one is a prerequisite of another (try to find and report such a situation in your plan).

| Test Case | Related Requirements: Requirement Number | Related Requirements: Where Specified (Location in Deliverable 1) | Related Use Cases | Related Test Cases |
|---|---|---|---|---|
| TC1 | | | | |
| TC2 | | | | |
| … | | | | |
| TC12 | | | | |

3. Functional Test Cases (30 marks): This part is inspired by the third and fourth sections of the IEEE 829 standard (Appendix 1). Develop four (4) of the 12 test cases listed in the previous section. These 4 test cases should target different requirements. Provide the following information for each test:
   A. Identification and classification: number, descriptive title (purpose), references to the related requirements and use cases, and importance level (consequences of failure in the field). Refer to section 10.8 of the manual for some inspiration.
   B. Input (stimulus) specification.
   C. Test steps (if any).
   D. Output specification (the "oracle", i.e. the expected output). Specify what should be done if the SUT (system under test) fails this test case.
   E. Test cleanup (how to reset for the next test).

4. Statement of Test Coverage and Quality (10 marks): Write a single paragraph stating whether or not the 4 test cases adequately test the related requirements, briefly giving reasons and recommending what additional testing needs to be done.

Do not forget to include a title page, executive summary, table of contents, list of figures/tables, references, appendices (if needed), etc. Please pay attention to the quality of the language (10 marks).

Appendix 1: Outline of a Software Test Plan (IEEE 829 Standard)

1. Test Plan (Overview)
   1.1. Introduction
   1.2. Test items
   1.3. Features to be tested
   1.4. Features not to be tested
   1.5. Approach
        For each major set of features, specify the major activities, techniques, and tools which will be used to test them. The specification should be in sufficient detail to permit estimation and scheduling of the associated tasks. Specify the techniques which will be used to assess the comprehensiveness of testing and any additional completion criteria. Specify the techniques used to trace requirements. Identify any relevant constraints.
   1.6. Item pass/fail criteria
   1.7. Suspension criteria and resumption requirements
   1.8. Test deliverables
        Deliverables shall include: Test Design Specification, Test Case Specification, Test Procedure Specification, Test Item Transmittal Report, Test Log, Test Incident Report, Test Summary Report, and Test Input Data and Output Data. Test tools may be included as deliverables.
   1.9. Testing tasks
        Identify tasks, inter-task dependencies, and any special skills required.
   1.10. Environmental needs
        Physical characteristics, including hardware, communications and system software, mode of usage, and any other software or supplies; level of security; special test tools or needs.
   1.11. Responsibilities
   1.12. Staffing and training needs
   1.13. Schedule
   1.14. Risks and contingencies
   1.15. Approvals
2. Test Design Specification
   2.1. Features to be tested
   2.2. Approach refinements
   2.3. Test identification
   2.4. Feature pass/fail criteria
3. Test Case Specification
   3.1. Test items
   3.2. Input specifications
   3.3. Output specifications (i.e., test "oracle")
   3.4. Environmental needs
   3.5. Special procedural requirements
   3.6. Inter-case dependencies
4. Test Procedure Specification
   4.1. Purpose
   4.2. Special requirements
   4.3. Procedure steps (for example: Log, Set up, Start, Proceed, Measure, Shut down, Restart, Stop, Wrap up, Contingencies)
5. Test Log
   5.1. Description
   5.2. Log entries: executions, procedure results, environmental information, anomalous events, incident report identifiers
6. Test Incident Report
   6.1. Summary
   6.2. Incident description (e.g., inputs, expected results, actual results, anomalies, date and time, procedure step, environment, attempts to repeat, testers, observers)
   6.3. Impact on testing
7. Test Summary Report
   7.1. Summary
   7.2. Variances
   7.3. Comprehensiveness assessment
   7.4. Summary of results: resolved incidents, unresolved incidents
   7.5. Evaluation (by test items)
   7.6. Summary of activities
   7.7. Approvals

Additional References:
1. Test Plan Design: http://www.cit.gu.edu.au/teaching/3192CIT/documentation/test.html
2. Outline of a Test Plan (IEEE 829): http://www.cs.swt.edu/~donshafer/project_documents/test_plan_template.html
3. Test Plan Example: http://www.luckydogarts.com/dm158/docs/System_Test_Plan.doc
4. Test Case Example: http://www.csc.calpoly.edu/~hitchner/CSC103.S2002/testPlanExample.html
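As an illustration of the Traceability Table in Part 2.C, the table can also be kept in machine-readable form so that coverage gaps and prerequisite links are easy to check before writing the coverage statement in Part 4. The sketch below is a minimal Python example, not a required format; the requirement identifiers (R1-R4), use cases (UC1, UC2), test case names, and helper functions are all hypothetical and do not come from Deliverables 1 or 2. It inverts the table (requirement to test cases), lists untraced requirements, and flags prerequisite test cases that are missing from the plan.

```python
from dataclasses import dataclass, field


@dataclass
class TestCase:
    """One row of the traceability table (hypothetical example data)."""
    tc_id: str
    name: str
    requirements: list[str]
    use_cases: list[str]
    prerequisites: list[str] = field(default_factory=list)


def requirements_to_tests(test_cases):
    """Invert the table: map each requirement to the test cases that exercise it."""
    mapping = {}
    for tc in test_cases:
        for req in tc.requirements:
            mapping.setdefault(req, []).append(tc.tc_id)
    return mapping


def uncovered_requirements(all_requirements, test_cases):
    """Return the requirements that no test case traces to, in list order."""
    covered = requirements_to_tests(test_cases)
    return [r for r in all_requirements if r not in covered]


def coverage_ratio(all_requirements, test_cases):
    """Fraction of requirements traced to at least one test case (1.0 if none listed)."""
    if not all_requirements:
        return 1.0
    missing = uncovered_requirements(all_requirements, test_cases)
    return (len(all_requirements) - len(missing)) / len(all_requirements)


def dangling_prerequisites(test_cases):
    """Return (test case, prerequisite) pairs whose prerequisite is not in the plan."""
    known = {tc.tc_id for tc in test_cases}
    return [(tc.tc_id, p) for tc in test_cases for p in tc.prerequisites if p not in known]


if __name__ == "__main__":
    # Hypothetical requirements list and test cases, for illustration only.
    requirements = ["R1", "R2", "R3", "R4"]
    plan = [
        TestCase("TC1", "Log in with a valid account", ["R1"], ["UC1"]),
        TestCase("TC2", "Place an order", ["R2", "R3"], ["UC2"], prerequisites=["TC1"]),
    ]
    print(requirements_to_tests(plan))                 # {'R1': ['TC1'], 'R2': ['TC2'], 'R3': ['TC2']}
    print(uncovered_requirements(requirements, plan))  # ['R4']
    print(coverage_ratio(requirements, plan))          # 0.75
    print(dangling_prerequisites(plan))                # []
```

Here TC2 lists TC1 as a prerequisite, which is the kind of inter-case relationship Part 2.C asks you to report; an untraced requirement such as R4 is a natural starting point for the recommendations in Part 4.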
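The items requested for each functional test case in Part 3 (identification, input, steps, oracle, cleanup) map fairly directly onto an xUnit-style automated test. The sketch below uses Python's standard `unittest` module purely as an illustration; the `Inventory` class, the test case number TC3, requirement R3, use case UC2, and the importance rating are hypothetical stand-ins for your own system and plan. `setUp` establishes the pre-condition, each test method applies an input and checks it against the oracle, and `tearDown` performs the cleanup so the next test starts from a known state.

```python
import unittest


class Inventory:
    """Hypothetical system under test (SUT), standing in for your own system."""

    def __init__(self):
        self.stock = {}

    def add_item(self, name, quantity):
        if quantity <= 0:
            raise ValueError("quantity must be positive")
        self.stock[name] = self.stock.get(name, 0) + quantity

    def remove_item(self, name, quantity):
        available = self.stock.get(name, 0)
        if quantity <= 0 or quantity > available:
            raise ValueError("invalid quantity")
        self.stock[name] = available - quantity


class TC3RemoveItemTest(unittest.TestCase):
    """TC3 (hypothetical): removing items reduces the stock level.

    Related requirement: R3 (hypothetical). Related use case: UC2 (hypothetical).
    Importance: high (assumed), since a wrong stock level would corrupt orders in the field.
    """

    def setUp(self):
        # Pre-condition: a fresh SUT holding 5 units of "widget".
        self.inventory = Inventory()
        self.inventory.add_item("widget", 5)

    def test_remove_within_stock(self):
        # Input (stimulus): remove 2 units.
        self.inventory.remove_item("widget", 2)
        # Oracle (expected output): 3 units remain.
        self.assertEqual(self.inventory.stock["widget"], 3)

    def test_remove_more_than_stock_is_rejected(self):
        # Input: request more units than are available.
        with self.assertRaises(ValueError):
            self.inventory.remove_item("widget", 6)
        # Oracle: the stock level is unchanged after the rejected request.
        self.assertEqual(self.inventory.stock["widget"], 5)

    def tearDown(self):
        # Cleanup: discard the SUT so the next test starts from a known state.
        self.inventory = None
```

Saved as a module, this can be run with `python -m unittest`. A failed assertion only reports the discrepancy; the action to take on failure (for example, raising a problem report, as in the Problem Reports block of Figure 1) still has to be stated in the test case specification itself.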