
2/17/16

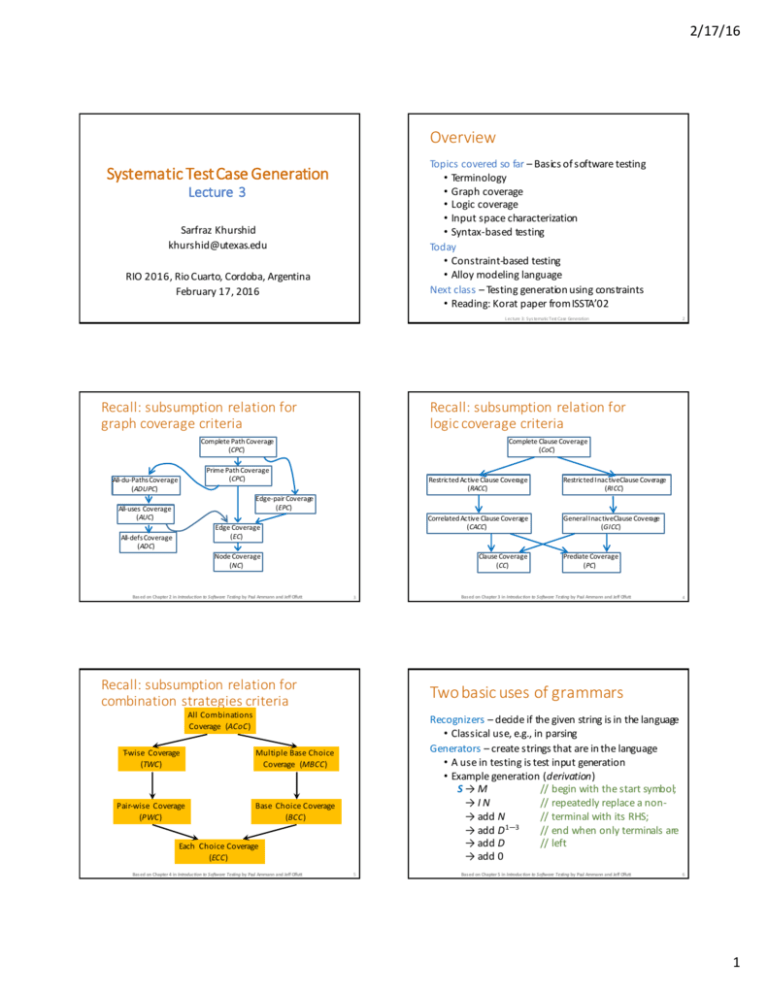

Overview

Topics covered so far – Basics of software testing

• Terminology

• Graph coverage

• Logic coverage

• Input space characterization

• Syntax-based testing

Today

• Constraint-based testing

• Alloy modeling language

Next class – Test generation using constraints

• Reading: Korat paper from ISSTA’02

Systematic Test Case Generation

Lecture 3

Sarfraz Khurshid

khurshid@utexas.edu

RIO 2016, Rio Cuarto, Cordoba, Argentina

February 17, 2016

Lecture 3: Systematic Test Case Generation

Recall: subsumption relation for graph coverage criteria

[Figure: subsumption hierarchy of Complete Path Coverage (CPC), Prime Path Coverage (PPC), All-du-Paths Coverage (ADUPC), Edge-pair Coverage (EPC), All-uses Coverage (AUC), Edge Coverage (EC), All-defs Coverage (ADC), and Node Coverage (NC)]

Based on Chapter 2 in Introduction to Software Testing by Paul Ammann and Jeff Offutt

Recall: subsumption relation for logic coverage criteria

[Figure: subsumption hierarchy of Complete Clause Coverage (CoC), Restricted Active Clause Coverage (RACC), Restricted Inactive Clause Coverage (RICC), Correlated Active Clause Coverage (CACC), General Inactive Clause Coverage (GICC), Clause Coverage (CC), and Predicate Coverage (PC)]

Based on Chapter 3 in Introduction to Software Testing by Paul Ammann and Jeff Offutt

Recall: subsumption relation for combination strategies criteria

[Figure: subsumption hierarchy of All Combinations Coverage (ACoC), T-wise Coverage (TWC), Multiple Base Choice Coverage (MBCC), Pair-wise Coverage (PWC), Base Choice Coverage (BCC), and Each Choice Coverage (ECC)]

Based on Chapter 4 in Introduction to Software Testing by Paul Ammann and Jeff Offutt

Two basic uses of grammars

Recognizers – decide if the given string is in the language

• Classical use, e.g., in parsing

Generators – create strings that are in the language

• A use in testing is test input generation

• Example generation (derivation):

    S → M           // begin with the start symbol;
      → I N         // repeatedly replace a non-terminal
      → add N       // with its RHS;
      → add D{1-3}  // end when only terminals
      → add D       // are left
      → add 0

Based on Chapter 5 in Introduction to Software Testing by Paul Ammann and Jeff Offutt
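The slides show a derivation but not the full grammar. As a minimal sketch, assume the hypothetical grammar in the code comment, chosen only because it is consistent with the derivation above and with the counts given for the BNF coverage criteria (12 terminals, 17 productions, 2 * (10 + 100 + 1000) derivable strings). `CommandGrammar`, `generateAll`, and `recognizes` are illustrative names, not from the lecture. The same grammar serves both uses: the generator enumerates every derivable (valid) string, and the recognizer decides membership.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical grammar (NOT shown on the slides; reconstructed for illustration):
//   S -> M          M -> I N          I -> add | remove
//   N -> D | D D | D D D              (one to three digits, "D{1-3}")
//   D -> 0 | 1 | ... | 9
class CommandGrammar {
    static final String[] OPS = {"add", "remove"};

    // Generator: enumerate every string derivable from S, i.e., every valid input.
    static List<String> generateAll() {
        List<String> out = new ArrayList<>();
        for (String op : OPS) {                       // I -> add | remove
            int count = 1;
            for (int len = 1; len <= 3; len++) {      // N -> D{1-3}
                count *= 10;                          // 10, 100, 1000 digit strings
                for (int k = 0; k < count; k++) {
                    // zero-pad so "0", "00", and "000" stay distinct derivations
                    out.add(op + " " + String.format("%0" + len + "d", k));
                }
            }
        }
        return out;
    }

    // Recognizer: decide whether s is in the language of S.
    static boolean recognizes(String s) {
        String[] parts = s.split(" ", -1);
        return parts.length == 2
                && (parts[0].equals("add") || parts[0].equals("remove"))
                && parts[1].matches("[0-9]{1,3}");
    }

    public static void main(String[] args) {
        System.out.println(generateAll().size());     // 2220 = 2 * (10 + 100 + 1000)
        System.out.println(recognizes("add 0"));      // true
        System.out.println(recognizes("delete 0"));   // false
    }
}
```

Enumerating all 2,220 strings is exactly what derivation coverage would demand, which is why DC becomes impractical as grammars grow.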


BNF Coverage criteria

Terminal symbol coverage (TSC) – TR contains each terminal in the grammar

• #tests ≤ #terminals, e.g., 12 for our example

Production coverage (PDC) – TR contains each production in the grammar

• #tests ≤ #productions, e.g., 17 for our example

• PDC subsumes TSC

Derivation coverage (DC) – TR contains every string that can be derived from the grammar

• Typically, DC is impractical to use: the number of derivable strings grows quickly with the grammar (and is infinite for recursive grammars)

• 2 * (10 + 100 + 1000) = 2,220 tests for our example

Mutation to generate invalid inputs

Using a grammar as a generator allows generating strings that are in the language, i.e., valid inputs

Sometimes invalid inputs are needed, e.g., to check exception-handling behavior or observe failures

Invalid inputs can be created using mutation, i.e., (syntactic) modification – the focus of this chapter

Two simple ways to create mutants (valid or invalid):

• Mutate symbols in a ground string

  • E.g., “add 0” → “remove 0”

• Mutate the grammar and derive ground strings

  • E.g., “I → add | remove” → “I → add | delete”

Based on Chapter 5 in Introduction to Software Testing by Paul Ammann and Jeff Offutt
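A minimal sketch of the two mutation styles, assuming a hypothetical command grammar where I → add | remove is followed by one to three digits; `GrammarMutation` and its methods are illustrative names. Ground-string mutation swaps one symbol in an existing string; grammar mutation changes a production and re-derives strings, which can yield inputs outside the original language.

```java
import java.util.ArrayList;
import java.util.List;

class GrammarMutation {
    // Mutate a ground string: replace the token at position pos with each other symbol.
    static List<String> mutateSymbol(String ground, int pos, List<String> symbols) {
        String[] tokens = ground.split(" ");
        List<String> mutants = new ArrayList<>();
        for (String sym : symbols) {
            if (sym.equals(tokens[pos])) continue;   // identical string is not a mutant
            String[] copy = tokens.clone();
            copy[pos] = sym;
            mutants.add(String.join(" ", copy));
        }
        return mutants;
    }

    // Mutate the grammar (the alternatives of I), then derive ground strings from it.
    static List<String> deriveFromMutatedI(List<String> iAlternatives, String digits) {
        List<String> out = new ArrayList<>();
        for (String op : iAlternatives) out.add(op + " " + digits);
        return out;
    }

    // Validity w.r.t. the ORIGINAL hypothetical grammar: (add|remove) then 1-3 digits.
    static boolean isValid(String s) {
        return s.matches("(add|remove) [0-9]{1,3}");
    }

    public static void main(String[] args) {
        System.out.println(mutateSymbol("add 0", 0, List.of("add", "remove"))); // [remove 0]
        for (String s : deriveFromMutatedI(List.of("add", "delete"), "0")) {
            System.out.println(s + " -> valid: " + isValid(s));
        }
        // add 0 -> valid: true
        // delete 0 -> valid: false
    }
}
```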

Testing programs with mutation

Insight: in practice, if the software contains a fault, there will usually be a set of mutants that can only be killed by a test case that also detects the fault

Approach: given a program p

1. Create mutants of p

2. Remove redundant mutants (if feasible)

3. Generate a test suite for p

4. Run each test on p and its mutants to check mutant killing

5. Compute the mutation score for the test suite

6. Check p’s outputs for tests that kill some mutant(s)

Based on Chapter 5 in Introduction to Software Testing by Paul Ammann and Jeff Offutt

6 example mutants

    static int min(int a, int b) {
        int minVal;
        minVal = a;
        if (b < a)
        {
            minVal = b;
        }
        return minVal;
    }

Δ1, Δ3, Δ5: variable replacement

Δ2: relational operator replacement

Δ4: unconditional failure

Δ6: conditional failure

    static int min(int a, int b) {
        int minVal;
        minVal = a;
    Δ1  minVal = b;
        if (b < a)
    Δ2  if (b > a)
    Δ3  if (b < minVal)
        {
            minVal = b;
    Δ4      fail();
    Δ5      minVal = a;
    Δ6      minVal = failOnZero(b);
        }
        return minVal;
    }

Based on Chapter 5 in Introduction to Software Testing by Paul Ammann and Jeff Offutt
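To make steps 4 and 5 of the approach concrete, here is a sketch that runs a small test suite against `min` and the six mutants and reports which ones are strongly killed (different return value, or an exception where p returns normally). Assumptions: `fail()` and `failOnZero` are not defined on the slides, so they are modeled as throwing `IllegalStateException` (the latter only for a zero argument); each of Δ4 to Δ6 replaces the statement `minVal = b`; mutants are named D1..D6 to stay ASCII; the test suite is arbitrary.

```java
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.Map;
import java.util.Set;
import java.util.function.IntBinaryOperator;

class MinMutation {
    // Stand-ins for the slide's fail() and failOnZero(b); their bodies are assumptions.
    static void fail() { throw new IllegalStateException("fail()"); }
    static int failOnZero(int x) {
        if (x == 0) throw new IllegalStateException("failOnZero");
        return x;
    }

    // Original program p.
    static int min(int a, int b) {
        int minVal = a;
        if (b < a) { minVal = b; }
        return minVal;
    }

    // D1..D6 correspond to Δ1..Δ6; each changes exactly one statement of min.
    static Map<String, IntBinaryOperator> mutants() {
        Map<String, IntBinaryOperator> m = new LinkedHashMap<>();
        m.put("D1", (a, b) -> { int v = b; if (b < a) { v = b; } return v; });  // minVal = b
        m.put("D2", (a, b) -> { int v = a; if (b > a) { v = b; } return v; });  // b > a
        m.put("D3", (a, b) -> { int v = a; if (b < v) { v = b; } return v; });  // b < minVal
        m.put("D4", (a, b) -> { int v = a; if (b < a) { fail(); } return v; }); // fail()
        m.put("D5", (a, b) -> { int v = a; if (b < a) { v = a; } return v; });  // minVal = a
        m.put("D6", (a, b) -> { int v = a; if (b < a) { v = failOnZero(b); } return v; });
        return m;
    }

    // Strong kill: the mutant's observable outcome (return value or exception) differs.
    static boolean kills(IntBinaryOperator mutant, int a, int b) {
        int expected = min(a, b);
        try {
            return mutant.applyAsInt(a, b) != expected;
        } catch (IllegalStateException e) {
            return true;                   // p returns normally, the mutant fails
        }
    }

    static Set<String> killed(int[][] tests) {
        Set<String> result = new LinkedHashSet<>();
        for (Map.Entry<String, IntBinaryOperator> e : mutants().entrySet()) {
            for (int[] t : tests) {
                if (kills(e.getValue(), t[0], t[1])) { result.add(e.getKey()); break; }
            }
        }
        return result;
    }

    public static void main(String[] args) {
        int[][] suite = {{1, 2}, {2, 1}, {1, 0}};
        Set<String> k = killed(suite);
        System.out.println(k);                                              // [D1, D2, D4, D5, D6]
        System.out.println("score = " + k.size() + "/" + mutants().size()); // score = 5/6
    }
}
```

Δ3 survives every test: minVal still equals a when the condition is evaluated, so b < minVal and b < a always agree, making Δ3 an equivalent mutant. Excluding it, this suite kills 5 of 5.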

Coverage criteria – mutation testing

Let M be the set of mutants of program p

Strong mutation coverage (SMC) – for each m in M, TR contains exactly one requirement, to strongly kill m (the output of m on the test differs from the output of p)

Weak mutation coverage (WMC) – for each m in M, TR contains exactly one requirement, to weakly kill m (the state immediately after the mutated location differs from that of p)

mutation score = #mutants killed / #mutants

• Consider non-equivalent mutants only (if possible)

Based on Chapter 5 in Introduction to Software Testing by Paul Ammann and Jeff Offutt

Constraint-based testing for OO programs

A brief introduction

Specs for OO programs

Class invariant – properties of all valid objects of a class in all publicly visible states

• E.g., acyclic structure

Precondition – properties that a method expects of its inputs for correct behavior

• E.g., sorted array

Postcondition – behavioral correctness properties

• Can include exceptional behaviors

For public methods, preconditions and postconditions include class invariants

Example spec

Recall our singly linked-list example:

    public class SLList { // invariant: acyclicity
        Node header;
        int size;
        static class Node {
            int elem;
            Node next;
        }
        int removeFirst() {
            // precondition: header != null
            // postcondition: returns the element in the first
            //   node and removes that node if header != null;
            //   else, throws NullPointerException
            ... }
    }
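A runnable version of this spec, sketched under assumptions: the invariant is checked by a `repOk()` method (a common convention, not shown on the slide), `Node` gets a constructor, and the `of` helper and the `size` update in `removeFirst` are additions for illustration.

```java
import java.util.IdentityHashMap;
import java.util.Map;

class SLList { // invariant: acyclicity
    Node header;
    int size;

    static class Node {
        int elem;
        Node next;
        Node(int elem, Node next) { this.elem = elem; this.next = next; } // added for convenience
    }

    // Class invariant as an executable check: no node is visited twice from header.
    boolean repOk() {
        Map<Node, Boolean> visited = new IdentityHashMap<>();
        for (Node n = header; n != null; n = n.next) {
            if (visited.put(n, Boolean.TRUE) != null) return false; // revisit => cycle
        }
        return true;
    }

    // precondition: header != null
    // postcondition: returns the element in the first node and removes that node
    // if header != null; else, throws NullPointerException
    int removeFirst() {
        if (header == null) throw new NullPointerException("empty list");
        int e = header.elem;
        header = header.next;
        size--;                        // size bookkeeping is an assumption, not on the slide
        return e;
    }

    // Hypothetical helper that builds a list holding elems in order.
    static SLList of(int... elems) {
        SLList l = new SLList();
        for (int i = elems.length - 1; i >= 0; i--) {
            l.header = new Node(elems[i], l.header);
            l.size++;
        }
        return l;
    }

    public static void main(String[] args) {
        SLList l = of(1, 2);
        System.out.println(l.repOk() + " " + l.removeFirst() + " " + l.size); // true 1 1
        SLList cyclic = of(1, 2);
        cyclic.header.next.next = cyclic.header;    // 1 -> 2 -> 1 -> ...
        System.out.println(cyclic.repOk());         // false
    }
}
```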


Constraint-based testing

Specs written as logical constraints

Input constraints – capture properties of desired inputs

• E.g., method preconditions and class invariants

• Enable test input generation

Oracle constraints – describe expected behavioral correctness properties

• E.g., method postconditions

• Enable test oracle automation
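A minimal sketch of how both kinds of constraints can work together, assuming the `SLList.removeFirst` spec from the example above: candidates are enumerated exhaustively within a small bound, the input constraint (precondition plus acyclicity invariant) filters them, and the oracle constraint (postcondition) checks each result. This is plain brute force over every pointer assignment, not the pruning search of a tool such as Korat; all names are illustrative.

```java
import java.util.IdentityHashMap;

class BoundedExhaustive {
    static class Node {
        final int elem;
        Node next;
        Node(int elem) { this.elem = elem; }
    }

    static class SLList {
        Node header;
        int removeFirst() {                 // method under test (size field omitted here)
            if (header == null) throw new NullPointerException();
            int e = header.elem;
            header = header.next;
            return e;
        }
    }

    // Input constraint: precondition (header != null) plus class invariant (acyclicity).
    static boolean inputOk(SLList l) {
        if (l.header == null) return false;
        IdentityHashMap<Node, Boolean> seen = new IdentityHashMap<>();
        for (Node n = l.header; n != null; n = n.next) {
            if (seen.put(n, Boolean.TRUE) != null) return false;
        }
        return true;
    }

    // Enumerates every candidate over `scope` nodes (header and each next is null or a node),
    // keeps those satisfying the input constraint, runs removeFirst, and checks the oracle
    // constraint (postcondition). Returns {#valid inputs, #oracle failures}.
    static int[] run(int scope) {
        int choices = scope + 1;                            // null or one of the nodes
        int total = 1;
        for (int i = 0; i <= scope; i++) total *= choices;  // header + one next per node
        int valid = 0, failures = 0;
        for (int code = 0; code < total; code++) {
            Node[] nodes = new Node[scope];
            for (int i = 0; i < scope; i++) nodes[i] = new Node(i);
            int c = code;
            SLList l = new SLList();
            int h = c % choices; c /= choices;
            l.header = (h == 0) ? null : nodes[h - 1];
            for (int i = 0; i < scope; i++) {
                int nx = c % choices; c /= choices;
                nodes[i].next = (nx == 0) ? null : nodes[nx - 1];
            }
            if (!inputOk(l)) continue;                      // discard invalid candidates
            valid++;
            Node oldHeader = l.header;
            Node oldSecond = oldHeader.next;
            int result = l.removeFirst();
            boolean post = result == oldHeader.elem && l.header == oldSecond;
            if (!post) failures++;
        }
        return new int[] {valid, failures};
    }

    public static void main(String[] args) {
        int[] r = run(3);
        System.out.println(r[0] + " valid inputs, " + r[1] + " oracle failures");
        // 78 valid inputs, 0 oracle failures
    }
}
```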

Two key questions:

• How to write constraints?

• How to solve constraints?

Alloy modeling language

alloy.mit.edu


Overview

“Analyzable models for software design”

Declarative language

• First-order logic with transitive closure

• Based on relations

Two design goals

• Simple language for modeling

• Automatic analysis

Motivated by the small scope hypothesis (most bugs have small counterexamples)

Developed by Daniel Jackson and his group (MIT)

Alloy tool-set

Alloy

• Language to build models, requirements, specifications, design

Alloy analyzer

• Automatic analysis tool

• Translates to SAT


History

NP/Nitpick (1996)

• Relational calculus only; BDDs

Alloy alpha (1998)

• Adds quantifiers; has flat state and one operation

Alloy 2.0 (2001)

• Signatures for hierarchical state and constraints

Alloy 3.0 (2004)

• Types

Alloy 4.0 (2006)

• Faster analysis

Why do we need such a language?

Provides precise description of artifacts

• Documentation

• Reasoning: eliminate/reduce ambiguities, inconsistencies, and incompleteness

Enables machine reasoning

Facilitates writing properties that we cannot (easily) express in source code

Provides a higher level of abstraction


Example informal statement

“Everybody likes a winner”

Meaning?

• all p: Person | some w: Winner | p.likes(w)

• all p: Person | all w: Winner | p.likes(w)

• some w: Winner | some p: Person | p.likes(w)

• some w: Winner | all p: Person | p.likes(w)

Basic concepts

Atom (or scalar)

Set

Relation

Universe of discourse

• Bounded by scope

Quantification

• Universal

• Existential
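The four readings are genuinely different formulas. A small sketch evaluating them over one hypothetical instance (three persons, two winners) shows that swapping quantifier order changes the truth value; the instance and the class name `LikesWinner` are made up for illustration.

```java
class LikesWinner {
    // Hypothetical instance: persons p0..p2, winners w0..w1; LIKES[p][w] means p likes w.
    static final boolean[][] LIKES = {
        {true,  true },   // p0 likes w0 and w1
        {true,  false},   // p1 likes only w0
        {false, true },   // p2 likes only w1
    };
    static final int PERSONS = LIKES.length, WINNERS = LIKES[0].length;

    // all p: Person | some w: Winner | p.likes(w)
    static boolean allSome() {
        for (int p = 0; p < PERSONS; p++) {
            boolean any = false;
            for (int w = 0; w < WINNERS; w++) any |= LIKES[p][w];
            if (!any) return false;
        }
        return true;
    }

    // all p: Person | all w: Winner | p.likes(w)
    static boolean allAll() {
        for (int p = 0; p < PERSONS; p++)
            for (int w = 0; w < WINNERS; w++)
                if (!LIKES[p][w]) return false;
        return true;
    }

    // some w: Winner | some p: Person | p.likes(w)
    static boolean someSome() {
        for (int w = 0; w < WINNERS; w++)
            for (int p = 0; p < PERSONS; p++)
                if (LIKES[p][w]) return true;
        return false;
    }

    // some w: Winner | all p: Person | p.likes(w)
    static boolean someAll() {
        for (int w = 0; w < WINNERS; w++) {
            boolean everyone = true;
            for (int p = 0; p < PERSONS; p++) everyone &= LIKES[p][w];
            if (everyone) return true;
        }
        return false;
    }

    public static void main(String[] args) {
        System.out.println(allSome() + " " + allAll() + " " + someSome() + " " + someAll());
        // true false true false
    }
}
```

Here every person likes some winner, yet no single winner is liked by everybody, so readings 1 and 4 disagree.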


Example Alloy model

Russell’s paradox: in a village in which the barber shaves every man who doesn’t shave himself, who shaves the barber?

    module russell
    sig Man {
        shaves: set Man } // shaves: Man -> Man
    one sig Barber extends Man {}
    pred Paradox() { Barber.shaves =
        { m: Man | m not in m.shaves } }
    run Paradox for 4

Where is Russell’s paradox? [Dijkstra]

Russell’s paradox: in a village in which the barber shaves every man who doesn’t shave himself, who shaves the barber?

    module russell
    sig Man {
        shaves: set Man } // shaves: Man -> Man
    /* one */ sig Barber extends Man {}
    pred Paradox() { Barber.shaves =
        { m: Man | m not in m.shaves } }
    run Paradox for 4
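The analyzer searches all instances within the scope. A brute-force sketch of the same search for `Paradox` (up to 4 men, every `shaves` relation, every choice of barber atoms) shows why the two versions behave differently. It illustrates bounded search only; it is not how the Alloy Analyzer works internally (it translates to SAT), and `RussellSearch` is an illustrative name.

```java
class RussellSearch {
    // Brute-force analogue of "run Paradox for maxMen": tries every universe of k <= maxMen
    // men, every shaves relation, and every choice of Barber atoms among the men.
    static boolean paradoxHasInstance(int maxMen, boolean exactlyOneBarber) {
        for (int k = 0; k <= maxMen; k++) {
            int rowMask = (1 << k) - 1;
            for (int rel = 0; rel < (1 << (k * k)); rel++) {
                int[] shaves = new int[k];                 // shaves[i]: bitmask of men i shaves
                for (int i = 0; i < k; i++) shaves[i] = (rel >> (i * k)) & rowMask;
                int nonSelfShavers = 0;                    // { m: Man | m not in m.shaves }
                for (int m = 0; m < k; m++)
                    if ((shaves[m] & (1 << m)) == 0) nonSelfShavers |= 1 << m;
                for (int barbers = 0; barbers <= rowMask; barbers++) {
                    if (exactlyOneBarber && Integer.bitCount(barbers) != 1) continue;
                    int barberShaves = 0;                  // Barber.shaves (union over barbers)
                    for (int b = 0; b < k; b++)
                        if ((barbers & (1 << b)) != 0) barberShaves |= shaves[b];
                    if (barberShaves == nonSelfShavers) return true;
                }
            }
        }
        return false;
    }

    public static void main(String[] args) {
        System.out.println(paradoxHasInstance(4, true));   // false: "one sig Barber"
        System.out.println(paradoxHasInstance(4, false));  // true: "/* one */ sig Barber"
    }
}
```

With exactly one barber b, the predicate requires b in b.shaves iff b not in b.shaves, so no instance exists at any scope. Without `one`, the search succeeds with an empty `Barber` set: the “paradox” only shows that no such barber can exist.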


Example of modeling and generating structures

    module list // acyclic list
    sig List { header: lone Node }
    sig Node { next: lone Node }
    pred RepOk(l: List) {
        all n: l.header.*next | n not in n.^next }
    run RepOk for 3

Example of checking equivalence of formulas

    module list // acyclic list
    sig List { header: lone Node }
    sig Node { next: lone Node }
    pred RepOk(l: List) {
        all n: l.header.*next | n not in n.^next }
    pred RepOk2(l: List) {
        no l.header || some n: l.header.*next | no n.next
    }
    assert Equiv { all l: List | RepOk[l] <=> RepOk2[l] }
    check Equiv for 3
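`check Equiv for 3` asks the analyzer for a counterexample within the scope. A brute-force Java analogue, assuming a single list over at most 3 nodes (indices 0..2, -1 for “none”), enumerates every `header`/`next` assignment, evaluates both predicates, and also counts assignments satisfying `RepOk`, mirroring `run RepOk for 3`. The count reflects this labeled encoding; Alloy applies symmetry breaking and would not report the same number of instances. `ListEquiv` is an illustrative name.

```java
import java.util.HashSet;
import java.util.Set;

class ListEquiv {
    static final int NIL = -1;

    // start.*next as a set of node indices (empty when start is NIL).
    static Set<Integer> reach(int start, int[] next) {
        Set<Integer> seen = new HashSet<>();
        for (int n = start; n != NIL && seen.add(n); n = next[n]) { }
        return seen;
    }

    // pred RepOk: all n: l.header.*next | n not in n.^next
    static boolean repOk(int header, int[] next) {
        for (int n : reach(header, next))
            if (reach(next[n], next).contains(n)) return false;  // n.^next = n.next.*next
        return true;
    }

    // pred RepOk2: no l.header || some n: l.header.*next | no n.next
    static boolean repOk2(int header, int[] next) {
        if (header == NIL) return true;
        for (int n : reach(header, next))
            if (next[n] == NIL) return true;
        return false;
    }

    // Returns {#assignments satisfying RepOk, #counterexamples to Equiv}.
    static int[] check(int scope) {
        int choices = scope + 1;
        int total = 1;
        for (int i = 0; i <= scope; i++) total *= choices;
        int instances = 0, counterexamples = 0;
        for (int code = 0; code < total; code++) {
            int c = code;
            int header = c % choices - 1; c /= choices;
            int[] next = new int[scope];
            for (int i = 0; i < scope; i++) { next[i] = c % choices - 1; c /= choices; }
            boolean a = repOk(header, next), b = repOk2(header, next);
            if (a) instances++;
            if (a != b) counterexamples++;
        }
        return new int[] {instances, counterexamples};
    }

    public static void main(String[] args) {
        int[] r = check(3);
        System.out.println(r[0] + " RepOk instances, " + r[1] + " counterexamples");
        // 142 RepOk instances, 0 counterexamples
    }
}
```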


Everything is a set

No scalars

Alloy equates the following:

• a (atom)

• <a> (one-tuple)

• {a} (singleton set)

• {<a>} (singleton set of a one-tuple)

Makes navigation easier

Relational composition

x.y

Assume:

• x is a relation of type T1 × T2 × … × Tm

• y is a relation of type S1 × S2 × … × Sn

• m + n > 2

$$[\![x.y]\!] = \{\langle t_1, \dots, t_{m-1}, s_2, \dots, s_n\rangle \mid \exists c.\ \langle t_1, \dots, t_{m-1}, c\rangle \in [\![x]\!] \wedge \langle c, s_2, \dots, s_n\rangle \in [\![y]\!]\}$$

Common case is that “x” is just a set

• “x” set, “y” binary relation: “x.y” is relational image

• “x”, “y” binary relations: “x.y” is relational composition
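The join definition can be executed directly over finite relations. A minimal sketch, representing a relation as a set of tuples (lists of atom names) and a set as a unary relation; `RelJoin` and the atoms `a`, `b`, `c` are illustrative.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

class RelJoin {
    // x.y over relations represented as sets of tuples (lists of atom names).
    // Assumes m + n > 2, as on the slide, so every result tuple is non-empty.
    static Set<List<String>> join(Set<List<String>> x, Set<List<String>> y) {
        Set<List<String>> result = new LinkedHashSet<>();
        for (List<String> tx : x) {
            for (List<String> ty : y) {
                String c = tx.get(tx.size() - 1);          // last column of x ...
                if (!c.equals(ty.get(0))) continue;        // ... must match first column of y
                List<String> t = new ArrayList<>(tx.subList(0, tx.size() - 1));
                t.addAll(ty.subList(1, ty.size()));        // <t1..tm-1, s2..sn>
                result.add(t);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // A set is a unary relation: {<a>} is how Alloy sees the atom a.
        Set<List<String>> a = Set.of(List.of("a"));
        Set<List<String>> next = new LinkedHashSet<>(List.of(List.of("a", "b"), List.of("b", "c")));
        System.out.println(join(a, next));     // [[b]]      relational image
        System.out.println(join(next, next));  // [[a, c]]   relational composition
    }
}
```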

Alloy analyzer

Translates to boolean formulas, exploits SAT

Provides two types of analysis (technically the same: checking an assertion searches for an instance of its negation)

• Simulation finds instances

• Checking finds counterexamples

Alloy analyzer architecture

[Figure: relational formula and scope → translate formula → boolean formula → SAT solver → boolean instance → translate instance (using the mapping produced by the formula translation) → relational instance]
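A toy sketch of the “translate formula” step for one expression, `S.r`, assuming scope n: set membership becomes boolean variables, the relation becomes a matrix of variables, and the image becomes a boolean vector-matrix product. The real analyzer's translation covers all of Alloy and is far more elaborate; `BoolEncoding` and its methods are illustrative names.

```java
class BoolEncoding {
    // Symbolic translation of S.r at scope n: S becomes variables s0..s(n-1), r becomes
    // variables rij (true iff the i-th atom is related to the j-th), and component j of
    // S.r is the formula OR_i (si AND rij).
    static String[] symbolicImage(int n) {
        String[] out = new String[n];
        for (int j = 0; j < n; j++) {
            StringBuilder sb = new StringBuilder();
            for (int i = 0; i < n; i++) {
                if (i > 0) sb.append(" | ");
                sb.append("(s").append(i).append(" & r").append(i).append(j).append(')');
            }
            out[j] = sb.toString();
        }
        return out;
    }

    // Evaluating that formula for concrete truth values: a boolean vector-matrix product.
    static boolean[] image(boolean[] s, boolean[][] r) {
        boolean[] out = new boolean[s.length];
        for (int j = 0; j < s.length; j++)
            for (int i = 0; i < s.length; i++)
                out[j] |= s[i] && r[i][j];
        return out;
    }

    public static void main(String[] args) {
        for (String f : symbolicImage(2)) System.out.println(f);
        // (s0 & r00) | (s1 & r10)
        // (s0 & r01) | (s1 & r11)
        boolean[] s = {true, false};                       // S = {atom0}
        boolean[][] r = {{false, true}, {true, false}};    // r = {<atom0,atom1>, <atom1,atom0>}
        System.out.println(java.util.Arrays.toString(image(s, r)));  // [false, true]
    }
}
```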

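Example representation

Assume scope 2

Set S

• [ s0 s1 ] for boolean s0, s1

• Each element is either in the set or not

Binary relation r: S × S

• A 2 × 2 matrix of booleans, where r_ij is true iff the pair of the i-th and j-th atoms is in r:

$$r = \begin{bmatrix} r_{00} & r_{01} \\ r_{10} & r_{11} \end{bmatrix}$$

$$S.r = \begin{bmatrix} s_0 & s_1 \end{bmatrix} \cdot \begin{bmatrix} r_{00} & r_{01} \\ r_{10} & r_{11} \end{bmatrix} = \begin{bmatrix} (s_0 \wedge r_{00}) \vee (s_1 \wedge r_{10}) & (s_0 \wedge r_{01}) \vee (s_1 \wedge r_{11}) \end{bmatrix}$$

Exercise: model SLList.removeFirst()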

Discussion

How well does the analyzer scale?

• What is scalability?

When does the “small scope hypothesis” hold?

Usage in other tools

• Requirements analysis?

• Software architecture descriptions?

What kind of analyses can the tool-set assist with?

• Checking declarative models

• Checking imperative code

• Deep static checking

• Systematic testing

?/!