Systems Architecture, Sixth Edition

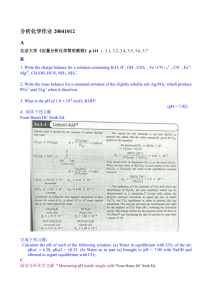

Chapter 6: System Integration and Performance

Chapter Objectives
• In this chapter, you will learn to:
  – Describe the system and subsidiary buses and bus protocol
  – Describe how the CPU and bus interact with peripheral devices
  – Describe the purpose and function of device controllers
  – Describe how interrupt processing coordinates the CPU with secondary storage and I/O devices
  – Describe how buffers and caches improve computer system performance
  – Compare parallel processing architectures
  – Describe compression technology and its performance implications

FIGURE 6.1 Topics covered in this chapter (Courtesy of Course Technology/Cengage Learning)

System Bus
• Connects computer system components, including the CPU, memory, storage, and peripheral devices
• Conceptually or physically divided into specialized subsets:
  – Data bus
  – Address bus
  – Control bus

FIGURE 6.2 The system bus and attached devices (Courtesy of Course Technology/Cengage Learning)

Bus Clock and Data Transfer Rate
• Bus clock
  – Coordinates the activities of all attached devices
  – Pulse frequency is measured in MHz or GHz
• Bus cycle
  – Time interval from one clock pulse to the next
• Data transfer rate
  – Measure of communication capacity
  – Bus capacity = data transfer unit × clock rate (e.g., a bus that moves 8 bytes per cycle at 100 MHz can transfer 800 MB per second)

Bus Protocol
• Governs the format, content, and timing of data, memory addresses, and control messages sent across the bus
• Approaches to bus access control:
  – Master-slave approach
  – Peer-to-peer approach
• Approaches to transferring data without the CPU:
  – Direct memory access (DMA)
  – Peer-to-peer buses

Subsidiary Buses
• Connect a subset of computer components
• Specialized for those components' characteristics and the communication between them:
  – Memory bus
  – Video bus
  – Storage bus
  – External I/O bus

Logical and Physical Access
• I/O port
  – Communication pathway from the CPU to a peripheral device
  – Usually a memory address that can be read/written by the CPU and a single peripheral device
  – Also a logical abstraction that enables the CPU and bus to interact with each peripheral device as if the device were a storage device with a linear address space

FIGURE 6.4 A typical PC motherboard (Courtesy of Course Technology/Cengage Learning)

Logical and Physical Access (continued)
• Logical access:
  – The device, or its controller, translates a linear sector address into the corresponding physical sector location on a specific track and platter

FIGURE 6.5 An example of assigning logical sector numbers to physical sectors on disk platters (Courtesy of Course Technology/Cengage Learning)

Device Controllers
• Implement the bus interface and access protocols
• Translate logical addresses into physical addresses
• Enable several devices to share access to a bus connection

FIGURE 6.6 Secondary storage and I/O device connections using device controllers (Courtesy of Course Technology/Cengage Learning)

Mainframe Channels
• Many mainframe computers have a dedicated special-purpose computer called a channel
• A channel differs from a device controller in:
  – Number of devices that can be controlled
  – Variability in type and capability of attached devices
  – Maximum communication capacity

Interrupt Processing
• Secondary storage and I/O devices have slower data transfer rates than the CPU

Interrupt Processing (continued)
• The interval between a CPU's request for input and the moment the input is received can span thousands, millions, or billions of CPU cycles
• I/O wait states: CPU cycles that could have been, but weren't, devoted to instruction execution
• Interrupt register
• Interrupt code

Interrupt Handlers
• More than just a hardware feature
• A method of calling system software programs and processes
• The OS provides a service routine to process each possible interrupt
• Supervisor
  – Examines the interrupt code stored in the interrupt register
  – Uses it as an index into the interrupt table

Multiple Interrupts
• Categories of interrupts:
  – I/O event
  – Error condition
  – Service request
• The OS groups interrupts by importance or priority

Stack Processing
• Machine state: saved register values
• Push: the CPU adds values to the top of the stack
• Pop: the CPU removes values from the top of the stack
• Stack overflow error: a push to a full stack
• Stack pointer: special-purpose register
  – Always points to the next empty address in the stack

FIGURE 6.7 Interrupt processing (Courtesy of Course Technology/Cengage Learning)

Buffers and Caches
• Improve overall computer system performance by employing RAM to overcome mismatches in data transfer rate and data transfer unit size

Buffers
• Small storage areas (usually DRAM or SRAM) that hold data in transit from one device to another
• Use interrupts to enable devices with different data transfer rates and unit sizes to coordinate data transfer efficiently
• Buffer overflow: data arrives when the buffer is already full

FIGURE 6.8 A buffer resolves differences in data transfer unit size between a PC and a laser printer (Courtesy of Course Technology/Cengage Learning)

Buffers (continued)
• Computer system performance improves dramatically as buffer size increases
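To make the buffer-size claim concrete, here is a minimal Python sketch that counts the interrupts a transfer needs. It assumes (our simplification, not the text's) that the device raises exactly one interrupt each time the buffer is filled and handed off, with a final partial fill also counting. The function names and the 64 KiB transfer size are hypothetical, chosen only for illustration.

```python
def interrupts_needed(transfer_bytes: int, buffer_bytes: int) -> int:
    """Interrupts needed to move transfer_bytes through a buffer of
    buffer_bytes, ASSUMING one interrupt per buffer fill (a final partial
    fill also counts). Uses integer ceiling division."""
    if transfer_bytes < 0 or buffer_bytes <= 0:
        raise ValueError("transfer size must be >= 0 and buffer size > 0")
    return (transfer_bytes + buffer_bytes - 1) // buffer_bytes


def interrupt_table(transfer_bytes: int, buffer_sizes: list[int]) -> list[tuple[int, int]]:
    """(buffer size, interrupts needed) for each candidate buffer size."""
    return [(size, interrupts_needed(transfer_bytes, size)) for size in buffer_sizes]


if __name__ == "__main__":
    # Hypothetical 64 KiB transfer; buffer sizes double from 1 B to 1 KiB.
    rows = interrupt_table(64 * 1024, [2 ** k for k in range(11)])
    previous_size, previous_count = rows[0]
    print(f"buffer {previous_size:>5} B: {previous_count:>6} interrupts")
    for size, count in rows[1:]:
        print(f"buffer {size:>5} B: {count:>6} interrupts "
              f"({previous_count - count} fewer than with {previous_size} B)")
        previous_size, previous_count = size, count
```

Each doubling halves the interrupt count, so the absolute saving per doubling also halves: going from a 1 B to a 2 B buffer saves 32,768 interrupts, while going from 512 B to 1,024 B saves only 64. The sketch ignores the RAM cost of a larger buffer, which is what eventually makes further growth uneconomical.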
Diminishing Returns
• When multiple resources are required to produce something useful, adding more and more of a single resource produces smaller and smaller benefits
• Beyond a certain point, increasing buffer size yields no further benefit
• Eventually, the cost of additional buffer space exceeds the benefit

Diminishing Returns (continued)
• The law of diminishing returns affects both bus and CPU performance

Cache
• Differs from a buffer:
  – Data content is not automatically removed as it is used
  – Used for bidirectional data transfer
  – Used only for storage device accesses
  – Usually much larger
  – Content must be managed intelligently
• Achieves performance improvements differently for read and write accesses

Cache (continued)
• Write access:
  – Sending a confirmation (2) before data is written to the secondary storage device (3) can improve program performance
  – The program can immediately proceed with other processing tasks

FIGURE 6.9 A storage write operation with a cache (Courtesy of Course Technology/Cengage Learning)

Cache (continued)
• Read accesses:
  – Routed to the cache (1)
    • If the data is already in the cache, it is accessed from there (2)
    • If not, it must be read from the storage device (3)
  – Performance improves only if the requested data is already waiting in the cache

FIGURE 6.10 A read operation when data is already stored in the cache (Courtesy of Course Technology/Cengage Learning)

Cache Controller
• Processor that manages cache content
• Guesses what data will be requested
  – Loads it from the storage device into the cache before it is requested
• Can be implemented in:
  – A storage device controller or communication channel
  – The operating system

Primary Storage Cache
• Can limit wait states by using SRAM cache between the CPU and SDRAM primary storage
• Level one (L1): closest to the CPU
• Level two (L2): next closest to the CPU
• Level three (L3): farthest from the CPU

Secondary Storage Cache
• Gives frequently accessed files higher priority for cache retention
• Uses read-ahead caching for files that are read sequentially
• Gives files opened for random access lower priority for cache retention

Processing Parallelism
• Many applications are too big for a single CPU or computer system to execute:
  – Large-scale transaction processing applications
  – Data mining
  – Scientific applications
• Problems are broken into pieces
  – Each piece is solved in parallel by a separate CPU

Multicore Processors
• Chip makers can now place billions of transistors and their interconnections on a single microchip
• Devoting the "extra" transistors entirely to cache memory began to yield smaller and smaller performance improvements
• Multicore architecture
  – Cores typically share memory cache, memory interface, and off-chip I/O circuitry
  – Sharing reduces the total transistor count and cost

FIGURE 6.11 The six-core AMD Opteron processor (Courtesy of Advanced Micro Devices, Inc.)
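The cache read path and the cache controller's "guess what will be requested" behavior can be illustrated with a small Python sketch: a read cache with least-recently-used (LRU) eviction and optional sequential read-ahead, placed in front of a storage device. The class name, the use of a plain dict as the "device", and the LRU replacement policy are all our assumptions for illustration; real cache controllers use their own (often proprietary) policies.

```python
from collections import OrderedDict


class ReadAheadCache:
    """Hypothetical read cache in front of a slow storage device, with LRU
    eviction and optional sequential read-ahead (prefetching)."""

    def __init__(self, device: dict[int, bytes], capacity: int, read_ahead: int = 0) -> None:
        if capacity <= 0 or read_ahead < 0:
            raise ValueError("capacity must be positive and read_ahead non-negative")
        self.device = device          # stand-in for the disk: block number -> contents
        self.capacity = capacity      # maximum number of blocks held in the cache
        self.read_ahead = read_ahead  # extra sequential blocks to prefetch on a miss
        self.blocks: OrderedDict[int, bytes] = OrderedDict()  # least recently used first
        self.hits = 0
        self.misses = 0
        self.device_reads = 0

    def _load(self, block: int) -> None:
        """Copy one block from the device into the cache, evicting the least
        recently used block if the cache is over capacity."""
        if block in self.blocks or block not in self.device:
            return
        self.device_reads += 1
        self.blocks[block] = self.device[block]
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)

    def read(self, block: int) -> bytes:
        if block in self.blocks:
            # Hit: serve the block from the cache and mark it most recently used.
            self.hits += 1
            self.blocks.move_to_end(block)
            return self.blocks[block]
        # Miss: the block must come from the storage device.
        self.misses += 1
        if block not in self.device:
            raise KeyError(f"block {block} does not exist on the device")
        # Guess that the following blocks will be requested next and prefetch
        # them, then load the requested block last so it is most recently used.
        for next_block in range(block + 1, block + 1 + self.read_ahead):
            self._load(next_block)
        self._load(block)
        return self.blocks[block]


if __name__ == "__main__":
    disk = {n: bytes([n]) for n in range(16)}  # 16 one-byte blocks
    for ahead in (0, 3):
        cache = ReadAheadCache(disk, capacity=8, read_ahead=ahead)
        for n in range(16):  # a sequential file read
            cache.read(n)
        print(f"read_ahead={ahead}: hits={cache.hits}, misses={cache.misses}, "
              f"device reads={cache.device_reads}")
```

For this sequential read, read-ahead turns 12 of the 16 misses into hits. In this synchronous sketch the device still performs the same 16 reads; a real cache controller gains time by prefetching while the program is busy with other work, which the sketch does not model. For random access, read-ahead mostly loads blocks that are never used, which is why such files get lower retention priority.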
FIGURE 6.12 Memory caches in a dual-core Intel Core i7 processor (Courtesy of Course Technology/Cengage Learning)

Multiple-Processor Architecture
• Uses two or more processors on a single motherboard or a set of interconnected motherboards
• Common in midrange computers, mainframe computers, and supercomputers
• Cost-effective for:
  – A single system that executes many different application programs and services
  – Workstations

Scaling Up
• Increasing processing power by using larger, more powerful computers
• Historically the most cost-effective approach
• Still cost-effective when maximum computing power is required and flexibility is less important

Scaling Out
• Partitioning processing among multiple systems
• Factors favoring scaling out:
  – Faster communication networks have reduced the relative performance penalty
  – Economies of scale have lowered costs
  – Distributed organizational structures emphasize flexibility
  – Improved software for managing multiprocessor configurations

High-Performance Clustering
• Connects separate computer systems with high-speed interconnections
• Used for the largest computational problems (e.g., modeling three-dimensional physical phenomena)

FIGURE 6.13 Organization of two interconnected supercomputing clusters (Courtesy of European Centre for Medium-Range Weather Forecasts)

Compression
• Reduces the number of bits required to encode a data set or stream
• Reducing the size of stored or transmitted data can improve performance if enough processing power is available to compress and decompress it

Compression Algorithms
• Vary in:
  – Type(s) of data for which they are best suited
  – Whether information is lost during compression
  – Amount by which data is compressed
  – Computational complexity

Compression Algorithms (continued)
• Lossless compression: the original data can be reconstructed exactly
• Lossy compression: some information is discarded and cannot be recovered
• Compression ratio: ratio of uncompressed data size to compressed data size (e.g., 20:1)

FIGURE 6.15 A digital image before (top) and after (bottom) 20:1 JPEG compression (Courtesy of Course Technology/Cengage Learning)

FIGURE 6.16 Data compression with a secondary storage device (a) and a communication channel (b) (Courtesy of Course Technology/Cengage Learning)

MPEG and MP3
• Moving Picture Experts Group (MPEG)
• MP3 takes advantage of limits in human hearing:
  – Sensitivity that varies with audio frequency (pitch)
  – Inability to recognize faint tones of one frequency simultaneously with much louder tones in nearby frequencies
  – Inability to recognize soft sounds that occur shortly after louder sounds

FIGURE 6.17 MP3 encoding components

Summary
• The CPU uses the system bus and device controllers to communicate with secondary storage and input/output devices
• Hardware and software techniques improve data efficiency and, thus, overall computer system performance:
  – Bus protocols, interrupt processing, buffering, caching, and compression
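The lossless compression and compression ratio ideas from the Compression Algorithms slides can be illustrated with run-length encoding (RLE). RLE is our choice of a deliberately simple lossless technique; it is not one of the algorithms named in the slides (JPEG, MPEG, and MP3 are lossy and far more complex). The function names are hypothetical, and the ratio follows the slides' convention of uncompressed size to compressed size.

```python
def rle_encode(data: bytes) -> bytes:
    """Encode data as (run length, byte value) pairs. Runs are capped at 255
    so each length fits in a single byte."""
    out = bytearray()
    i = 0
    while i < len(data):
        value = data[i]
        run = 1
        while i + run < len(data) and data[i + run] == value and run < 255:
            run += 1
        out += bytes((run, value))
        i += run
    return bytes(out)


def rle_decode(encoded: bytes) -> bytes:
    """Expand (run length, byte value) pairs back into the original bytes."""
    if len(encoded) % 2 != 0:
        raise ValueError("encoded data must consist of (length, value) pairs")
    out = bytearray()
    for k in range(0, len(encoded), 2):
        run, value = encoded[k], encoded[k + 1]
        out += bytes([value]) * run
    return bytes(out)


def compression_ratio(original: bytes, compressed: bytes) -> float:
    """Uncompressed size divided by compressed size (30.0 means 30:1)."""
    if not compressed:
        raise ValueError("compressed data is empty")
    return len(original) / len(compressed)


if __name__ == "__main__":
    image_row = b"\x00" * 100 + b"\xff" * 20  # hypothetical data with long runs
    text = b"abc"                             # no repeated bytes at all
    for sample in (image_row, text):
        packed = rle_encode(sample)
        assert rle_decode(packed) == sample   # lossless: exact reconstruction
        print(f"{len(sample)} -> {len(packed)} bytes, "
              f"ratio {compression_ratio(sample, packed):.1f}:1")
```

The first sample compresses 120 bytes to 4 (30:1), while the second grows from 3 bytes to 6 (0.5:1). A lossless algorithm can therefore make some inputs larger, which is one concrete way algorithms vary in the types of data for which they are best suited.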