
advertisement

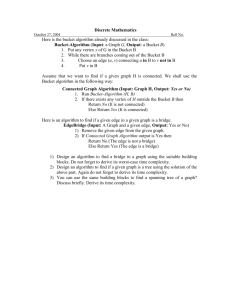

6.851 Advanced Data Structures (Spring’14)
Prof. Erik Demaine
TAs: Timothy Kaler, Aaron Sidford

Final Project: Colored Successor Problem
Solutions by James Duyck, Nirvan Tyagi, Andrew Huang, William Hasenplaugh (jduyck, ntyagi, kahuang, whasenpl)

1 Problem Statement and Introduction

1.1 Abstract

This paper presents a data structure that, for an arbitrary number of colors, finds the nearest predecessor of a given color in O(lg lg n) amortized time per operation with high probability. We analyze its time and space complexity and suggest an application.

1.2 Problem statement

This was given as Problem C08: Retroactive Hashing in 6.851, Advanced Data Structures. Maintain a dynamic ordered data structure on n elements, where each element has one of k ≤ n colors, supporting the following operations in amortized O(lg lg n) time:

• insertAfter(x, d, c): Create node y of color c and data d, insert y after x, and return y.
• delete(x, d, c): Delete node x.
• prev(x, c): Return the first node of color c that occurs before node x.
• next(x, c): Return the first node of color c that occurs after node x.

1.3 Previous work

The predecessor problem without colors has been solved in various ways. Van Emde Boas trees solve it in O(lg w) = O(lg lg u) time per operation and Θ(u) space, where w is the word size and u is the universe size [1] [2]. Pătraşcu and Thorup showed this to be optimal in the cell-probe model for w = O(poly lg n) [4] [5], and their bound also holds for the case of two colors. Mehlhorn, Näher, and Alt proved the same bound for the Union-Split-Find problem (which solves the uncolored predecessor problem) in the pointer machine model [6].

In 2008 Blelloch presented an algorithm for the colored list problem, a generalization of the problem considered here [7]. In the colored list problem, each item may have multiple colors, and additional operations are supported.
Blelloch presents several algorithms for different numbers of colors and elements, with the following time complexities, where w is the word size:

| elements | colors | query | update |
|---|---|---|---|
| O(√w) | O(√w) | O(1) | O(1) |
| O(2^w) | O(√w) | O(lg lg n) | O(lg lg n) |
| O(2^w) | O(w^?) | O(lg lg n) | O(w lg² n lg lg n) |
| O(poly(w)) | O(w/lg w) | O(lg n) | O(lg n) |

In this paper, we present a solution to the more restrictive colored predecessor problem with O(2^w) elements, O(2^w) colors, and query and update time O(lg lg n).

1.4 Model and assumptions

Operations are performed in the word RAM model. The following data structures are used as black boxes:

• van Emde Boas tree: a data structure on n_vEB elements chosen from an ordered universe of size u and represented with words of size w = lg u, supporting insertion, deletion, predecessor, and successor queries in O(lg w) time and Θ(u) space, or in O(lg w) time with high probability and Θ(n_vEB) space [1] [2].
• List labeling: an ordered data structure on n_LL elements, supporting insert before/after, predecessor/successor, and delete in O(lg n_LL) amortized time and O(n_LL) words of space (assuming a word size w = Ω(lg n_LL)), and assigning each element a label consistent with the element order, drawn from a label space of size O(n_LL²) (O(lg n_LL) bits per label) [3].
• Hash table: a data structure on n_HT elements supporting insertion, deletion, and lookup in O(1) amortized time.
• Balanced binary search tree: a data structure on n_BBT elements chosen from an ordered universe, supporting insertion, deletion, predecessor and successor queries, and merge/split operations in O(lg n_BBT) time and O(n_BBT) space.

2 Design

In this section, we detail the design of the data structure. It is best broken down into three layers: (1) the bucket layer with list labeling, (2) the vEB layer, and (3) the BST layer.
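To pin down the semantics that the layered design must reproduce, here is a deliberately naive reference model of the Section 1.2 operations, written as a Python sketch. It keeps the nodes in a plain list and answers prev/next by linear scan, so every operation costs O(n); it is meant only as a test oracle. The names (NaiveColoredList, insert_after, the head sentinel) are hypothetical, and delete takes only x because d and c are not needed to remove a node.

```python
from dataclasses import dataclass
from typing import Any, Optional

_HEAD_COLOR = object()  # unique color that no user query can match


@dataclass(eq=False)  # eq=False: nodes compare by identity, like pointers
class Node:
    data: Any
    color: Any


class NaiveColoredList:
    """O(n)-per-operation reference model of insertAfter/delete/prev/next."""

    def __init__(self) -> None:
        self.head = Node(None, _HEAD_COLOR)  # hypothetical sentinel so the first insert has an x
        self.items = [self.head]

    def _index(self, x: Node) -> int:
        for i, node in enumerate(self.items):
            if node is x:
                return i
        raise KeyError("node is not in the list")

    def insert_after(self, x: Node, d: Any, c: Any) -> Node:
        y = Node(d, c)
        self.items.insert(self._index(x) + 1, y)
        return y

    def delete(self, x: Node) -> None:
        self.items.pop(self._index(x))

    def prev(self, x: Node, c: Any) -> Optional[Node]:
        # Scan backwards starting from the node just before x.
        for node in reversed(self.items[: self._index(x)]):
            if node.color == c:
                return node
        return None

    def next(self, x: Node, c: Any) -> Optional[Node]:
        # Scan forwards starting from the node just after x.
        for node in self.items[self._index(x) + 1 :]:
            if node.color == c:
                return node
        return None
```

Randomized tests can drive a fast implementation and this model with the same operation sequence and compare every prev/next answer.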
2.1 List labeling, buckets, bucket BSTs, and bucket hash tables

We consider the elements of the data structure in order and divide them into O(n/lg n) buckets, each of size O(lg n). We then apply the list labeling data structure to these O(n/lg n) buckets to generate a label for each bucket; we call these the bucket labels. We maintain the size s of every bucket within lg n < s < 4 lg n. When a bucket reaches either threshold, we split or merge buckets at the bucket level and perform the corresponding insert or delete at the list labeling level.

Additionally, inside each bucket, we apply a list labeling data structure to the O(lg n) elements of the bucket; we call these the element labels. The elements of a bucket are stored in BSTs, one per color, keyed by element label. Thus, a bucket stores a BST for each distinct color among its elements; we call these the bucket trees, or color BSTs. Each bucket also stores a hash table, keyed by color, for accessing its bucket trees.

When originally inserted, each element is promoted to a 'captain' with probability Θ(1/lg² n) (the constant is chosen in Section 3.2.1). Captains are inserted into the corresponding color vEB tree, as described in the next section.

2.2 Color vEB trees and promotion

The data structure stores a vEB tree for each distinct color among its elements; we call these the color vEBs. It also stores a hash table, keyed by color, for accessing the color vEBs. Each color vEB stores the captains of its color, keyed by the captain's bucket label, together with two 'sentinel' elements representing −∞ and ∞. The color vEBs are updated to account for bucket label changes caused by merges and splits.

Figure 1: vEB trees are keyed on bucket labels.

2.3 Connecting BSTs

Connecting BSTs keep track of colored elements that were not promoted to captains and are thus not represented in their color vEB.
We store a connecting BST for every pair of consecutive captains in each color vEB, and each captain stores pointers to its connecting BSTs. A connecting BST contains all non-captain elements of that color lying between the two captains, keyed by bucket label. A captain thus has pointers to two connecting BSTs: a forward pointer to the connecting BST of elements after it, and a backward pointer to the connecting BST of elements before it. Although connecting BSTs must be split and merged when captains are inserted and deleted, the relative order of the elements never changes. Hence the connecting BSTs need no updates after bucket label changes.

Figure 2: Connecting BSTs contain elements between the captains.

2.4 API descriptions

The following pseudocode shows how the operations use the layers described above. The descriptions of insert and delete are symmetric, as are those of successor and predecessor.

2.4.1 insert / delete

function insertAfter(x, d, c)
  Insert y into the bucket b containing x.
  Create an element label for y in b and insert y into the color BST for c in b.
  if y is promoted to captain then
    Insert y into color vEB c with key equal to b's bucket label.
    Find y's predecessor captain in color vEB c, and split that captain's forward connecting BST at b's bucket label.
  else
    Search color vEB c for the predecessor captain of b's bucket label.
    Add y, keyed by b's bucket label, to that captain's forward connecting BST.
  end if
  if bucket b has more than 4 lg n elements then
    Split the bucket and update the bucket list labels.
    Remove the captains in buckets whose bucket labels changed from their color vEBs and re-insert them with the new bucket labels.
    Rebuild the element list labeling in the two new buckets.
    Split the color BSTs.
  end if
  return y
end function

2.4.2 successor / predecessor

function successor(x, c)
  Check whether the successor lies in the same bucket b as x:
  search for the successor of x's element label in the color BST for c in b.
  if the successor is not found in b then
    Search color vEB c for the successor of b's bucket label, giving captain z.
    Search z's backward connecting BST for the successor b′ of b's bucket label.
    if a successor b′ is found in the connecting BST then
      Return the smallest element in the color BST for c in bucket b′.
    else
      Return z.
    end if
  end if
end function

Figure 3: Insertion must update bucket labels, element labels, color BSTs, color vEB trees, connecting BSTs, and hash tables.

3 Analysis

3.1 Basic analysis: time complexity

The insert and successor methods described by the pseudocode above run in amortized O(lg lg n) time. Each color BST has size O(lg n), so inserting into or searching a color BST takes O(lg lg n) time. Each color vEB is keyed by bucket labels drawn from a label space of size O(n²/lg² n), so searching for a predecessor or inserting takes O(lg lg(n²/lg² n)) = O(lg lg n) time. With high probability a connecting BST has polylogarithmic size, as proved below (if one grows beyond a threshold, we rebuild), so inserts and searches on it take O(lg lg n) time. Each of these routines is used a constant number of times per operation.

When buckets are merged or split, more work is needed to maintain the data structure; the amortized analysis of this case follows.

3.2 List labeling overflow (merge / split of buckets)

When buckets merge or split, O(lg n) bucket labels change (amortized). A merge or split occurs only after Ω(lg n) inserts or deletes into the bucket, to which we can charge the work. Thus, we can afford O(lg n · lg lg n) work to repair the data structure at each merge or split while keeping O(lg lg n) amortized time per operation.

The repair consists of two groups of actions: (1) adjusting for the change in O(lg n) bucket labels, and (2) adjusting the structures stored inside the buckets being merged or split.

Looking at (1), we need to see how the structures keyed by bucket labels are affected. As noted before, the connecting BSTs are not affected, since the overall order of the elements does not change.
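The claim that relabeling spares the connecting BSTs but not the color vEBs can be illustrated with a toy Python sketch. It assumes a connecting BST compares elements through their current labels (modeled as a list of handles kept in label order), while a color vEB is direct-addressed by the label value (modeled as a dict). Both models and all function names are hypothetical stand-ins, not the real structures.

```python
from typing import Dict, Hashable, List

Labels = Dict[Hashable, int]


def is_sorted_by_label(handles: List[Hashable], labels: Labels) -> bool:
    """True if the handles appear in strictly increasing order of their current labels."""
    return all(labels[a] < labels[b] for a, b in zip(handles, handles[1:]))


def order_preserved(old: Labels, new: Labels) -> bool:
    """A list-labeling relabel never changes the relative order of the handles."""
    return sorted(old, key=old.get) == sorted(new, key=new.get)


def rekey_direct_addressed(table: Dict[int, Hashable], old: Labels, new: Labels) -> int:
    """Delete and re-insert every handle whose label changed, as the color vEBs must.

    All deletions happen before any insertion, so a new label equal to another
    handle's old label cannot overwrite an entry that has not been moved yet.
    Returns the number of delete/re-insert pairs performed.
    """
    changed = [h for h in old if new[h] != old[h]]
    for h in changed:
        del table[old[h]]
    for h in changed:
        table[new[h]] = h
    return len(changed)
```

After an order-preserving relabel such as {p: 10, q: 20, r: 30} to {p: 4, q: 20, r: 40}, the list of handles is still sorted and needs no work, whereas the dict must delete and re-insert the two entries whose labels changed. That per-changed-label cost is what the captain re-insertions below pay for.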
Even though the overall order does not change, the color vEB trees are still affected, because a vEB tree indexes directly on the label values. With O(lg n) bucket labels changing, we must delete and re-insert all captains in each of those buckets. We show below that, with high probability, each bucket contains at most one captain (if some bucket contains more, we rebuild). Thus, deleting and re-inserting at most one captain per bucket takes O(lg n · lg lg n) time in total, or O(lg lg n) amortized.

For (2), two tasks must be completed. Without loss of generality, consider a split. First, the color BSTs must be split and distributed to the new buckets. A bucket has O(lg n) color BSTs, each of size O(lg n), since O(lg n) bounds both the number of colors and the number of elements of a single color in a bucket. Splitting a color BST (at the middle element label) takes O(lg lg n) time, so splitting all of them takes O(lg n · lg lg n) time. Second, the element list labeling structure must be rebuilt. Although the element labels change, by the same argument as for the connecting BSTs the order within each color BST stays the same, so relabeling requires no structural change to the BSTs. Thus, relabeling O(lg n) elements takes O(lg lg n) time per element and O(lg n · lg lg n) time in total.

All of the work to maintain the data structure after a merge or split therefore takes O(lg n · lg lg n) time, which fits within the budget of the amortization argument.

3.2.1 Promotion probability

Above, we assumed that there is at most one captain per bucket and that the binary search trees between captains have at most polylogarithmic size in n. We now show that both statements hold with high probability, provided that the bucket size and the captain probability are chosen carefully.
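A minimal Python sketch of the two parameters being tuned: the bucket-size window lg n < s < 4 lg n from Section 2.1 and a one-time promotion coin with probability c/lg² n. The constant c, the base-2 logarithm, and the max(n, 4) guard for tiny n are assumptions of this sketch; the derivation uses natural logarithms, which changes only constant factors.

```python
import math
import random


def lg(n: int) -> float:
    """Base-2 logarithm, clamped so tiny n does not give a zero or negative value."""
    return math.log2(max(n, 4))


def bucket_size_bounds(n: int) -> tuple:
    """Exclusive bounds (low, high) on a bucket's size s: lg n < s < 4 lg n."""
    return lg(n), 4 * lg(n)


def captain_probability(n: int, c: float = 1.0) -> float:
    """Promotion probability c / lg^2 n for a tunable constant c."""
    return min(1.0, c / lg(n) ** 2)


def promote(n: int, rng: random.Random, c: float = 1.0) -> bool:
    """Flip the biased coin once, when an element is first inserted."""
    return rng.random() < captain_probability(n, c)
```

With n = 1024 and c = 1, buckets hold between 10 and 40 elements and each new element becomes a captain with probability 0.01, so a bucket has at most 0.4 captains in expectation.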
Suppose that an element is designated a captain with probability $O(1/\ln^a n)$, and that buckets have size $O(\ln^b n)$, with $a = b + 1$. For each element $i$ of a bucket, let $X_i$ be 1 if element $i$ is a captain and 0 otherwise. If we let $X = \sum_i X_i$, then the expected value $\mu$ of $X$ is

$$\mu = O\!\left(\frac{\ln^b n}{\ln^a n}\right) = O(\ln^{b-a} n) = O(\ln^{-1} n).$$

Taking the Chernoff bound, we have

$$P(X \ge (1+\delta)\mu) \le e^{-\delta^2 \mu / 3}.$$

Letting $\delta = \frac{2}{\mu} - 1$, so that $(1+\delta)\mu = 2$, we have

$$P(X \ge 2) \le e^{-\left(\frac{2}{\mu}-1\right)^2 \frac{\mu}{3}} = e^{-\frac{1}{3}\left(\frac{4}{\mu} - 4 + \mu\right)} = e^{-\frac{4}{3\mu}}\, e^{\frac{4}{3}}\, e^{-\frac{\mu}{3}} \le e^{\frac{4}{3}}\, e^{-\frac{4}{3\mu}}.$$

Then, since $\mu = c \ln^{-1} n$ for some constant $c$,

$$P(X \ge 2) \le \left(\frac{1}{n}\right)^{\frac{4}{3c}} e^{\frac{4}{3}},$$

and choosing the captain probability such that $c < 1$,

$$P(X \ge 2) \le \frac{e^{4/3}}{n^{4/3}}.$$

We can further decrease the constant factor on the captain probability to decrease the probability that two captains land in the same bucket to $O(1/n^{4/(3c)})$. We choose $c$ so that this probability is $O(1/n^2)$ (any $c \le 2/3$ suffices); by a union bound over the $O(n/\lg n)$ buckets, with high probability no bucket has two captains. Note that as elements are deleted, the captains remain randomly distributed, so the expectation is unchanged: an adversary does not know where the captains are and so cannot delete non-captain elements to move captains into the same bucket.

Now we show that, with high probability, there is at least one captain within some polylogarithmic number of buckets. Consider a window of $B \ln^2 n$ buckets, and let $\mu' = B \ln^2 n \cdot \mu$ be its expected number of captains. From above,

$$\mu' = B \ln^2 n \cdot c \ln^{-1} n = Bc \ln n.$$

Then, again using a Chernoff bound,

$$P(X \le (1-\delta)\mu') \le e^{-\delta^2 \mu' / 2}.$$

Letting $\delta = 1 - \frac{1}{\mu'} = 1 - \frac{1}{Bc \ln n}$, we have

$$P(X \le 1) \le e^{-\left(1 - \frac{1}{\mu'}\right)^2 \frac{\mu'}{2}} = e^{-\frac{1}{2}\left(\mu' - 2 + \frac{1}{\mu'}\right)} = e^{-\frac{1}{2}\left(Bc \ln n - 2 + \frac{1}{Bc \ln n}\right)} \le e \cdot n^{-Bc/2}.$$

Choosing $B \ge 4/c$, we have $P(X \le 1) \le e/n^2 = O(1/n^2)$. By a union bound over the $O(n/\lg n)$ windows, with high probability no binary search tree has size greater than a fixed polylogarithmic function of n.
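As a numerical sanity check of the lower-tail bound, the Python sketch below models a window of B ln² n buckets as N independent elements, each a captain with probability µ'/N, so that the expected number of captains is µ' = Bc ln n. It assumes buckets of size ln n (so N ≈ B ln³ n) and compares the exact binomial P(X ≤ 1) with the Chernoff expression and its closed form e · n^(−Bc/2); the function names are hypothetical. Taking B = 4/c makes the closed form e/n².

```python
import math


def window_mean(n: float, c: float, B: float) -> float:
    """Expected captains mu' = B * c * ln n in a window of B ln^2 n buckets."""
    return B * c * math.log(n)


def exact_at_most_one(num_elements: int, p: float) -> float:
    """Exact P(X <= 1) for X ~ Binomial(num_elements, p)."""
    q = 1.0 - p
    return q ** num_elements + num_elements * p * q ** (num_elements - 1)


def chernoff_at_most_one(mu: float) -> float:
    """Lower-tail Chernoff bound e^{-delta^2 mu / 2} with delta = 1 - 1/mu (needs mu > 1)."""
    delta = 1.0 - 1.0 / mu
    return math.exp(-delta * delta * mu / 2.0)


def closed_form(n: float, c: float, B: float) -> float:
    """The simplified bound e * n^{-Bc/2}."""
    return math.e * n ** (-B * c / 2.0)


def check(n: int, c: float, B: float) -> tuple:
    """Return (exact, chernoff, closed_form) for one parameter choice."""
    mu = window_mean(n, c, B)
    num_elements = round(B * math.log(n) ** 3)  # assumes bucket size ln n
    exact = exact_at_most_one(num_elements, mu / num_elements)
    return exact, chernoff_at_most_one(mu), closed_form(n, c, B)
```

With n = 10^6 and c = 1/2 (so B = 8), the exact probability is many orders of magnitude below the Chernoff expression, which in turn sits just below e/n².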
3.3 Data structure rebuilding analysis

To keep each element's captain probability tied to the current n, we rebuild the entire data structure whenever n changes by a large factor. In particular, suppose that n ≈ 2^i at the last rebuild; if, through deletes and inserts, n leaves the range (1/2) · 2^i ≤ n ≤ 2 · 2^i, we rebuild the data structure. The rebuild cost is amortized over the Ω(2^i) inserts or deletes required between rebuilds, so the amortized time per operation does not change.

We also rebuild if two captains land in the same bucket or a binary search tree grows larger than B ln² n. Since these events occur with probability O(1/n) per operation, the expected rebuild cost charged to each operation is O(1/n) · O(n lg lg n) = O(lg lg n), so we can afford to rebuild.

3.4 Space complexity

There are O(n/lg n) buckets, each of size n_B = O(lg n). Each bucket has

• a list labeling data structure on all elements in the bucket, taking O(n_B) = O(lg n) space;
• a hash table on all colors in the bucket, taking at most O(n_B) = O(lg n) space;
• a balanced binary search tree for each color; each element of the bucket is in exactly one of these BBSTs, for a total of O(n_B) = O(lg n) space.

The total space per bucket is therefore O(lg n), so all buckets together take O(n) space.

There is a list labeling data structure on all buckets. Since there are O(n/lg n) buckets and the label space is the square of the number of elements, the label space has size O(n²/lg² n). Then O(lg(n²/lg² n)) = O(lg n) bits are required per label, so the total space required is O(n/lg n) words.

For each color there is a van Emde Boas tree on the labels of the buckets containing captains of that color. With the Θ(u) version, each takes O(n²/lg² n) space, since the universe (the label space) has size equal to the number of buckets squared. Alternatively, using the version of van Emde Boas that achieves O(lg w) time with high probability, the space is proportional to the number of stored elements: an expected O(n/lg² n) captains in total plus two sentinels per color, which is O(n) over all colors.

There is a binary search tree for each captain containing the elements between that captain and the next (or the sentinel).
Since each non-captain element of the data structure is in exactly one such tree, the total space is O(n). A hash table maps each color to its color vEB tree, taking O(n) space. Therefore, the total space for the whole data structure is O(n).

4 Future Work

An avenue for future research is to implement this data structure and confirm the space and time bounds shown.

A possible application is retroactive hashing. To implement retroactive hashing, one color is reserved as a "time" color, and the other colors represent inserted values. When a new operation is given at a particular time, two elements are inserted into the data structure: first an element whose color corresponds to the inserted or deleted value, with data recording whether the operation was an insert or a delete, and then a time element of the time color. To look up whether a value is present in the hash table at a particular time, start at the corresponding time element and find the predecessor of the color corresponding to the value. If that predecessor is a delete operation or is not found, the value is not in the hash table at that time; otherwise it is. Inserts, deletes, and lookups in the retroactive hashing data structure take O(lg lg n) amortized time, where n is the total number of inserts and deletes performed.

5 References

[1] Peter van Emde Boas, Preserving order in a forest in less than logarithmic time. Proceedings of the 16th Annual Symposium on Foundations of Computer Science, pp. 75–84, 1975.
[2] Peter van Emde Boas, Preserving Order in a Forest in Less Than Logarithmic Time and Linear Space. Information Processing Letters 6(3), pp. 80–82, 1977.
[3] P. Dietz, J. Seiferas, J. Zhang, A Tight Lower Bound for Online Monotonic List Labeling. SIAM Journal on Discrete Mathematics 18(3), pp. 626–637, 2005.
[4] Mihai Pătraşcu and Mikkel Thorup, Time-Space Trade-Offs for Predecessor Search. 38th ACM Symposium on Theory of Computing, 2006.
[5] Mihai Pătraşcu and Mikkel Thorup, Randomization Does Not Help Searching Predecessors. 18th ACM-SIAM Symposium on Discrete Algorithms, 2007.
[6] Kurt Mehlhorn, Stefan Näher, and Helmut Alt, A Lower Bound on the Complexity of the Union-Split-Find Problem. SIAM Journal on Computing 17(6), pp. 1093–1102, 1988.
[7] Guy E. Blelloch, Space-Efficient Dynamic Orthogonal Point Location, Segment Intersection, and Range Reporting. ACM-SIAM Symposium on Discrete Algorithms, January 2008.