

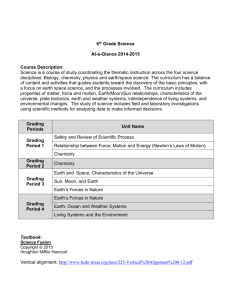

Tools for Grading Evidence: strengths, weaknesses, and the impact on effective knowledge translation

Relevant conference theme: Concepts and methods
Translating Research Into Practice – Dementia and Public Health (TRIP-DPH)
Michelle Irving, Nic Cherbuin, Ranmalee Eramudugolla, Peter Butterworth, Lily O'Donoughue Jenkins, Kaarin Anstey

Rating evidence and knowledge translation: Could grading tools be selling us short?

• Knowledge Translation is defined by the World Health Organization as “the synthesis, exchange, and application of knowledge by relevant stakeholders to accelerate the benefits of global and local innovation in strengthening health systems and improving people’s health” (WHO, 2005).
• This objective is compromised when a body of research is oversimplified, graded incorrectly, or not fully understood by those relying on flawed grading systems to inform their decision making.

Translating Research into Practice – Dementia and Public Health (TRIP-DPH)

TRIP-DPH is an ARC Linkage collaboration between the Australian National University, ACT Health and Alzheimer’s Australia. The project is examining multiple barriers to effective knowledge translation and what can be done to make improvements.

One of the barriers identified pertains to the vast quantities of research that decision makers are confronted with and the issues that arise from trying to evaluate the quality of such evidence.

How do those working outside the research world distinguish strong evidence from weak evidence?

This well-known problem has broadly been addressed through the development of hundreds of grading instruments for evaluating individual studies and systematic reviews.

If these tools are flawed, over-reliance on them may lead to miscommunication and incorrect assessments of the evidence base, compromising knowledge translation.

What is grading?
• Grading instruments provide a metric to ‘quantify’ the quality of evidence from either an individual study or a body of evidence.
• Typically, they comprise a list of criteria and instructions for applying these criteria to arrive at a quality rating or ‘grade’.
• Ratings from scales and checklists are frequently presented as a whole score or fraction (e.g. 18/20), while ratings applied to bodies of evidence are usually letter grades (A, B, C, etc.).

Examples of grading instruments

Grading a body of evidence:
• Grading of Recommendations Assessment, Development and Evaluation (GRADE)
• National Health and Medical Research Council - FORM (NHMRC-FORM)
• Scottish Intercollegiate Guidelines Network (SIGN)
• National Service Framework for Long Term Conditions (NSF-LTC)
• Strength of Recommendations Taxonomy (SORT)
• National Institute for Health and Clinical Excellence (NICE) Guidelines

Grading individual studies:
• Critical Appraisal Skills Program (CASP)
• Graphic Appraisal Tool for Epidemiology (GATE)
• Downs and Black Checklist
• Newcastle-Ottawa Scale (NOS)
• Risk of Bias Assessment Tool for Nonrandomized Studies (RoBANS)
• Jadad Scale (aka Oxford Quality Scoring System)

What types of criteria are included?

While these tools vary, the criteria used to rate evidence typically include some or all of the following:
• Precision
• Quantity
• Coherence
• Directness
• Consistency
• Strength of Evidence
• Dose-response relationship
• Publication Bias
• Magnitude of Effect
• Bias
• Hierarchy of study design
• Plausible Confounding

Evidence can be rated up or down depending on how well it meets the criteria in any one of these domains. Different grading systems place more or less emphasis on different components.

Coleman, C. I., R. Talati, et al. (2009). A Clinician's Perspective on Rating the Strength of Evidence in a Systematic Review. Pharmacotherapy, 29(9), 1017-1029.

Why is grading evidence a good idea?
• Objective: Not reliant on the authors’ view of the strength of their research
• Systematic approach: Can minimise bias in assessments
• Time effective: Instruments often facilitate quick assessments
• Accessible: Once studies have been graded, the results are usually broadly available for others to see and use
• Clear: Grades are a straightforward way to communicate to those who are not necessarily equipped to evaluate research
• High Impact: Grades of systematic reviews are often used to address real world problems (e.g. clinical guidelines)

What are the problems?

• Lack of validation and poor reliability
• Highly subjective, with low interrater reliability
• Create a false sense of trust or over-reliance on grading outcomes
• Overly complicated, with unclear instructions and little or no training, so mistakes are made
• Biased in favour of certain types of studies (e.g. randomised controlled trials) and against others (e.g. observational)
• Grading of evidence may not be applicable to local settings

(Bagshaw & Bellomo, 2008; Baker et al., 2011; Baker et al., 2010; Barbui et al., 2010; Brouwers et al., 2005; Calderón et al., 2006; Colle et al., 2002; Gugiu & Gugiu, 2010; Hartling et al., 2012; Ibargoyen-Roteta et al., 2010; Jüni et al., 1999; Katrak et al., 2004; Kavanagh, 2009; Persaud & Mamdani, 2006)

Why should policy makers, practitioners and other decision makers care?

• Even if you don’t directly use one of these instruments, chances are that you have relied on research that has been evaluated by them, e.g. NHMRC guidelines, Cochrane Collaboration reviews, WHO recommendations, NIH reports.
• This research may have been graded in ways that do not accurately represent the strength of the evidence, leading to flawed conclusions.
• Decisions made on the basis of erroneous evidence could lead to resources being misdirected to less worthy causes or away from more worthy causes.

Example: Smoking as a risk factor for Alzheimer’s Disease

• In 2010 the US Department of Health and Human Services National Institutes of Health (NIH) released a report, “Preventing Alzheimer’s Disease and Cognitive Decline” (Williams et al., 2010). It aimed to ‘help clinicians, employers, policymakers, and others make informed decisions about the provision of health care services.’
• In this report a systematic review by Anstey et al. (2007) on the effects of smoking on Alzheimer’s Disease was given a ‘low’ quality rating, as assessed using the GRADE approach.

There are at least two major problems with this rating:

1. The systematic review was downgraded because it relied on observational studies rather than randomised controlled trials. However, it is not ethical to perform randomised controlled trials in research on smoking, so observational studies were the strongest possible form of evidence available.
2. Grading failed to take into account additional sources of evidence that fall outside the scope of GRADE’s rating criteria. These include a convergence of evidence from other research areas, such as animal studies that have also linked smoking and cognitive decline.

Potential outcome of flawed grading

If the strongest possible evidence is graded as ‘low’ due to ineffective use of grading systems, health practitioners and policy makers may conclude that there are no grounds to recommend reducing or ceasing smoking as a preventative measure against dementia.
Smokers themselves may also directly interpret such information as reassurance that their use of tobacco is not linked with increased risk. Such an outcome has the potential to maintain the prevalence of this costly disease at higher levels.

Where to from here?

1. At a minimum, researchers, policy makers and practitioners should be aware of the shortcomings of grading instruments. Critical evaluation of their conclusions is necessary before relying on grades as a form of evidence for decision making.
2. There should be greater emphasis on applying the correct grading system to the type of evidence.
3. Options include creating a new instrument, having multiple instruments that are more targeted for specific types of research, or modifying existing instruments to address criticisms.

References

Anstey, K. J., von Sanden, C., Salim, A., & O'Kearney, R. (2007). Smoking as a risk factor for dementia and cognitive decline: a meta-analysis of prospective studies. American Journal of Epidemiology, 166(4), 367-78.
Bagshaw, S. M. & R. Bellomo (2008). The need to reform our assessment of evidence from clinical trials: A commentary. Philosophy, Ethics, and Humanities in Medicine, 3(1), 23.
Baker, A., J. Potter, et al. (2011). The applicability of grading systems for guidelines. Journal of Evaluation in Clinical Practice, 17(4), 758-762.
Baker, A., K. Young, et al. (2010). A review of grading systems for evidence-based guidelines produced by medical specialties. Clinical Medicine, 10(4), 358-363.
Barbui, C., T. Dua, et al. (2010). Challenges in Developing Evidence-Based Recommendations Using the GRADE Approach: The Case of Mental, Neurological, and Substance Use Disorders. PLoS Medicine, 7(8).
Brouwers, M. C., M. E. Johnston, et al. (2005). Evaluating the role of quality assessment of primary studies in systematic reviews of cancer practice guidelines. BMC Medical Research Methodology, 5(1), 8.
Calderón, C., R. Rotaeche, et al. (2006). Gaining insight into the Clinical Practice Guideline development processes: qualitative study in a workshop to implement the GRADE proposal in Spain. BMC Health Services Research, 6(1), 138.
Coleman, C. I., R. Talati, et al. (2009). A Clinician's Perspective on Rating the Strength of Evidence in a Systematic Review. Pharmacotherapy, 29(9), 1017-1029.
Colle, F., F. Rannou, et al. (2002). Impact of quality scales on levels of evidence inferred from a systematic review of exercise therapy and low back pain. Archives of Physical Medicine and Rehabilitation, 83(12), 1745-1752.
Gugiu, P. C. & M. R. Gugiu (2010). A Critical Appraisal of Standard Guidelines for Grading Levels of Evidence. Evaluation & the Health Professions, 33(3), 233-255.
Hartling, L., R. M. Fernandes, et al. (2012). From the trenches: a cross-sectional study applying the GRADE tool in systematic reviews of healthcare interventions. PLoS One, 7(4), e34697.
Ibargoyen-Roteta, N., I. Gutiérrez-Ibarluzea, et al. (2010). The GRADE approach for assessing new technologies as applied to apheresis devices in ulcerative colitis. Implementation Science, 5(1), 48.
Jüni, P., A. Witschi, et al. (1999). The Hazards of Scoring the Quality of Clinical Trials for Meta-analysis. Journal of the American Medical Association, 282(11), 1054-1060.
Katrak, P., A. Bialocerkowski, et al. (2004). A systematic review of the content of critical appraisal tools. BMC Medical Research Methodology, 4(1), 22.
Kavanagh, B. P. (2009). The GRADE system for rating clinical guidelines. PLoS Medicine, 6(9), e1000094.
Persaud, N. & M. M. Mamdani (2006). External validity: the neglected dimension in evidence ranking. Journal of Evaluation in Clinical Practice, 12(4), 450-453.
Williams, J. W., Plassman, B. L., Burke, J., Holsinger, T., Benjamin, S. (2010). Preventing Alzheimer’s Disease and Cognitive Decline. Evidence Report/Technology Assessment No. 193. AHRQ Publication No. 10-E005. Rockville, MD: Agency for Healthcare Research and Quality.
World Health Organization (2005). Bridging the “Know–Do” gap: Meeting on knowledge translation in global health. Retrieved from http://www.who.int/kms/WHO_EIP_KMS_2006_2.pdf

Grading Instrument References

CASP: Details available at the Critical Appraisal Skills Program website: http://www.casp-uk.net/
Downs & Black Checklist: Downs, S. H., & Black, N. (1998). The feasibility of creating a checklist for the assessment of the methodological quality both of randomised and non-randomised studies of health care interventions. Journal of Epidemiology and Community Health, 52(6), 377-384.
GATE: Jackson, R., Ameratunga, S., Broad, J., Connor, J., Lethaby, A., Robb, G., ... & Heneghan, C. (2006). The GATE frame: critical appraisal with pictures. Evidence Based Medicine, 11(2), 35-38.
GRADE: Details available at the Grading of Recommendations, Assessment, Development and Evaluations Working Group website: http://www.gradeworkinggroup.org/
JADAD: Jadad, A. R., Moore, R. A., Carroll, D., Jenkinson, C., Reynolds, D. J. M., Gavaghan, D. J., & McQuay, H. J. (1996). Assessing the quality of reports of randomized clinical trials: is blinding necessary? Controlled Clinical Trials, 17(1), 1-12.
NHMRC-FORM: Hillier, S., Grimmer-Somers, K., Merlin, T., Middleton, P., Salisbury, J., Tooher, R., & Weston, A. (2011). FORM: An Australian method for formulating and grading recommendations in evidence-based clinical guidelines. BMC Medical Research Methodology, 11(1), 23.
NICE: Details available at the National Institute for Health and Clinical Excellence website: http://www.nice.org.uk:80/aboutnice/howwework/developingniceclinicalguidelines/clinicalguidelinedevelopmentmethods/clinical_guideline_development_methods.jsp
NOS: Wells, G. A., Shea, B., O’Connell, D., Peterson, J., Welch, V., Losos, M., & Tugwell, P. (2000). The Newcastle-Ottawa Scale (NOS) for assessing the quality of nonrandomised studies in meta-analyses. Details available at: http://www.medicine.mcgill.ca/rtamblyn/Readings/The%20Newcastle%20%20Scale%20for%20assessing%20the%20quality%20of%20nonrandomised%20studies%20in%20meta-analyses.pdf
NSF-LTC: Turner-Stokes, L., Harding, R., Sergeant, J., Lupton, C., & McPherson, K. (2006). Generating the evidence base for the National Service Framework for Long Term Conditions: a new research typology. Clinical Medicine, 6(1), 91-97.
RoBANS: Park, J., Lee, Y., Seo, H., Jang, B., Son, H., & Kim, S. Y. (2011). Risk of bias assessment tool for non-randomized studies (RoBANS): development and validation of a new instrument. In Proceedings of the 19th Cochrane Colloquium (pp. 19-22).
SIGN: Scottish Intercollegiate Guidelines Network methodology available at: http://www.sign.ac.uk/guidelines/fulltext/50/index.html
SORT: Ebell, M. H., Siwek, J., Weiss, B. D., Woolf, S. H., et al. (2004). Strength of Recommendation Taxonomy (SORT): a patient-centered approach to grading evidence in the medical literature. American Family Physician, 69, 549-57.

Contact author

Michelle Irving
Centre for Research on Ageing, Health and Wellbeing
Australian National University
michelle.irving@anu.edu.au
www.crahw.anu.edu.au

The First Global Conference on Research Integration and Implementation, http://www.I2Sconference.org/