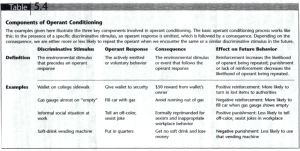

4 OPERANT BEHAVIOR
