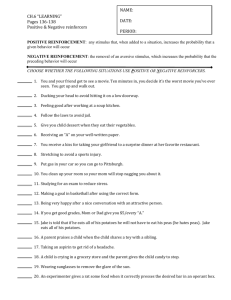

Animals prefer reinforcement that follows greater effort: Justification
