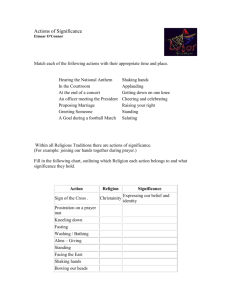

The Development of Statistical Significance Testing Standards in
