The Contagion Effect of Low-Quality Audits

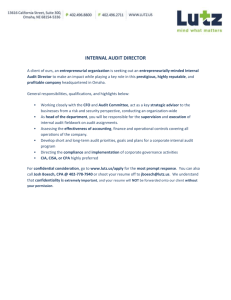
