Gung Ho: A code design for weather and climate prediction

advertisement

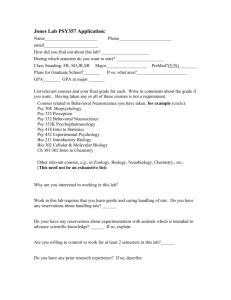

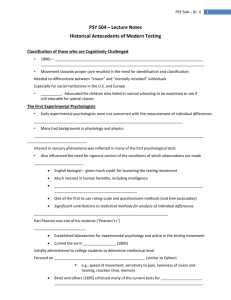

Gung Ho: A code design for weather and climate prediction on exascale machines R. Forda , M.J. Gloverb , D.A. Hamc , C.M. Maynardb , S.M. Picklesa , G.D. Rileyd , N. Woodb a Scientific Computing Department, STFC Daresbury laboratory b The Met Office, FitzRoy road, Exeter, EX1 3PB c Department of Computing and Grantham Institute for Climate Change, Imperial College, London d School of Computer Science, University of Manchester Abstract The Met Office’s numerical weather prediction and climate model code, the Unified Model (UM) is almost 25 years old. It is a unified model in the sense that the same global model is used to predict both short term weather and longer term climate change. More accurate and timely predictions of extreme weather events and better understanding of the consequences of climate change require bigger computers. Some of the underlying assumptions in the UM will inhibit scaling to the number of processor cores likely to be deployed at Exascale. In particular, the longitude-latitude discretisation presently used in the UM would result in 12 m grid resolution at the poles for a 10 km resolution at mid-latitudes. The Gung Ho project is a research collaboration between the Met Office, NERC funded researchers and STFC Daresbury to design a new dynamical core that will exhibit very large data parallelism. The accompanying software design and development project to implement this new core and enable it to be coupled to physics parameterisation, ocean models and sea ice models, for example is presented and some of the computational science issues discussed. Keywords: 1. Introduction Partial differential equations describe a wide range of physical phenomena. Typically, they exhibit no general analytic solution and certainly this Preprint submitted to Advances in Engineering Software March 24, 2014 is the case for the Navier-Stokes equations, which are the starting point for numerical weather prediction (NWP) and climate models. However, an approximate numerical solution can be constructed by replacing differential operators with finite difference or finite element versions of the same equations. The domain of the problem can be decomposed and the equations solved for each sub-domain. This requires only a small amount of boundary data from neighbouring sub-domains. This data parallel approach naturally maps to modern supercomputer architectures with large numbers of compute nodes and distributed memory. Each node can perform the necessary calculations on its own sub-domain and then communicate these results to its neigbouring nodes. However, the relevance of this simple picture of the massively parallel paradigm for supercomputing is diminishing as machine architectures become increasingly complex. While there is still much uncertainty about what future architectures will look like in 2018 and beyond, it is clear that the trend towards sharedmemory cluster nodes with an ever-increasing number of cores, stagnant or decreasing clock frequency, and decreasing memory per core, are certain to continue. For a review, see for example [1]. This is because the design of new silicon is constrained by the imperative to minimize power consumption. Furthermore, it is expected that improvements to Instruction Level Parallelism (ILP) within the execution units1 on commodity processors be incremental at best. Consequently, the computational performance of an individual processor core may even decrease. Thus simulation codes must be able to exploit ever increasing numbers of cores just to manage current workloads on future systems, let alone achieve the next order-of-magnitude resolution improvements demanded by scientific ambitions. Most scientific codes exploit the data parallelism expressed by the Message Passing Interface (MPI) over distributed memory. However, the arguments (and evidence) in favour of introducing an additional thread-based layer of parallelism over a shared memory node, such as OpenMP threads, into an MPI code are more compelling than ever. Exploiting the shared memory within a cluster node helps to reduce the cost of communications thereby improving scalability, and to alleviate the impact of decreasing memory per core by reducing the need to store redundant copies in memory. On 1 e.g. Single Instruction Multiple Data (SIMD) instructions such as Streaming SIMD Extension (SSE) and Advanced Vector Extensions (AVX). 2 many architectures, it is also possible to hide much of the effect of memory latency by oversubscribing execution units with threads. Extrapolating the trends described above and in [1] results in a prediction of a so called exascale machine between 2018 and 2020. Assuming that an individual thread can run at a peak rate of 1 GFlops2 , then 109 threads would be needed to keep an exascale machine busy. To put this into context for an atmospheric model, a 1 km resolution model will have approximately 5 × 108 mesh elements in the horizontal. Compare this to a 10 km global model which has only 5 × 106 mesh elements in the horizontal. Whilst this naive analysis may suggest that such a global model has only enough data parallelism to exploit 106 or 107 concurrent threads, this is not as bad as it first appears. Neither operational forecasts nor research jobs occupy the whole machine and moreover, increased use of coupled models instead of atmosphere-only models can be expected to boost resource utilisation, as can ensemble runs via task parallelism. However, it is clear that in the future it will be necessary to find and exploit additional parallelism beyond horizontal mesh domain decomposition and concurrent runs of the atmosphere, ocean and IO models. In the current Met Office code, the Unified Model[2, 3], a major factor limiting the scalability and accuracy of global models is the use of a regular latitude-longitude mesh, as the resolution increases dramatically towards the poles. A third generation UM dynamical core, ENDGame [4] is scheduled to enter operational service for the global model in summer 2014. Whilst this greatly improves the scaling behaviour of the Unified Model, the latitudelongitude mesh remains. This will fundamentally limit the ability of the global model to scale to the degree necessary for an exascale machine. Clearly, the disruptive technology change necessary for exascale computing is a compelling driver of algorithmic and software change. In response, the UK Met Office, NERC and STFC have funded a five year project to design a new dynamical core for an atmospheric model, called Gung Ho. This project is a collaboration between meteorologists, climate scientists, computational scientists and mathematicians based at the Universities of Bath, Exeter, Leeds, Reading, Manchester and Warwick, Imperial College London, the Met Office and STFC Daresbury. This paper describes the design of the software needed to support such a dynamical core. 2 Floating point operations per second (flops) 3 2. Design considerations for a dynamical core The Gung Ho dynamical core is being designed from the outset with both numerical accuracy and computational scalability in mind and the primary role of the computational science work package is to ensure that the solutions being proposed by other work packages in the project will scale efficiently to a large numbers of threads. At the same time, it must be recognised that a software infrastructure for next generation weather and climate prediction must aspire to an operational lifetime of 25 years or more if it is to match the longevity of the current Unified Model. Therefore, other aspects – such as portable performance, ease of use, maintainability and future-proofing – are also important, and are addressed here. • Portable performance means the ability to obtain good performance on a range of different machine architectures. This should apply to the size of machine (from tablet to supercomputer), the model configuration (climate, coupled etc.) and to different classes of machine (e.g. graphical processor unit (GPU) or multi-core oriented systems). It is not known what type of architectures will dominate in 2018 and onwards but, as is the case today, architecture specific parallelisation optimisations are expected to play an important role in obtaining good performance. Therefore the Gung Ho software architecture should be flexible enough to allow different parallelisation options to be added. • Ease of use is primarily about making the development of new algorithms as simple as possible and ease of maintenance will hopefully follow from this simplicity. Clearly there is a trade-off between application specific performance optimisations and ease of use/maintainability. As will be shown in later sections, in the proposed Gung Ho software architecture this problem is addressed by separating out these different concerns into different software layers. • Future-proofing in this context refers to being able to support changes in the dynamical core algorithm (such as a change of mesh or discretisation for example) without having to change all of the software. As well the ability to exploit hardware developments. The current Unified Model is written in Fortran 90 and much of the cultural heritage and experience of programming in the meteorology and climate 4 community is in Fortran. Therefore, the Gung Ho dynamical core software will be written in Fortran, but the more modern Fortran 2003; that is, it may rely on any feature of the Fortran 2003 standard. This allows the support software to use some of the new object oriented features (procedures within objects in particular) if required whilst keeping the performance benefits and familiarity of Fortran. Fortran 2003 also provides a standard way of calling C code, which is also desirable. Parallelisation is expected to be a mixture of MPI and OpenMP with the addition of OpenACC if GPUs are also to be targeted. However, there may emerge other programming models that these designs do not exclude. In particular Partitioned Global Address Space models (PGAS) such as Co-Array Fortran (CAF), which is part of the Fortran 2008 standard, are not excluded. 2.1. Support for unstructured meshes To avoid some of the problems associated with a regular latitude/longitude mesh, quasi-uniform structured meshes such as the cubed sphere are being considered for the Gung Ho dynamical core. However, it is highly undesirable to specialise to a particular grid prematurely. Indeed, alternative grids to the cubed sphere are still under active consideration in Gung Ho [5–7]. Moreover, the best choice of mesh now may not be the best choice over the lifetime of the Gung Ho dynamical core. Therefore, a more general irregular data structure will be used to store the data on a mesh. This means that instead of neighbours being addressed directly in the familiar stencil style (data(i+1,j+1) etc.) of a structured mesh, they are addressed by a neighbours list e.g. data(neighbour(i),i=1,Nneighbours). Iterating over a list of neighbours allows an arbitrary number of neighbours for each mesh element which allows simple support for different topologies. There is a performance cost associated with this indirect memory addressing, that is having to look up the locations of neighbours. However, a critical design concept is that the mesh will be structured in the vertical. This is driven by the dominance of gravity in the Earth’s atmosphere, which leads to the atmosphere being highly anisotropic on a planetary scale. Thus the semi-structured mesh will be a horizontally unstructured columnar mesh where the vertical discretisation is anisotropic. By using direct addressing in the vertical and traversing the vertical dimension in the innermost loop nest, the overhead of indirect addressing can be reduced to a negligible level. Fair comparisons between structured and unstructured approaches are hard to achieve due to the difficulty in separating impact on performance of 5 factors such as the order of the method; the choice of finite difference, finite volume or finite element approaches; the efficiency of the implementations; and so on. That said, in [8], a set of kernels drawn from an atmospheric finite volume icosahedral model execute with a performance of within 1% of the directly addressed speed when executed in a vertical direct, horizontal indirect framework. So, the proposed data model uses indirect addressing in the horizontal, and direct addressing in the vertical, with columns contiguous in memory. In contrast, the current Unified Model uses direct addressing in all three dimensions, and it is levels that are contiguous in memory. The change to column-contiguous (vertical innermost) from level-contiguous (horizontal innermost) will also have performance consequences, which depend on the operation being performed and the architecture on which it runs. For a cache based memory model such as conventional CPUs, coding the vertical index as the innermost dimension is usually optimal for cache reuse because the array data in this dimension will be contiguous in memory. Thus, an inner loop over this dimension will exhibit data locality and allow the compiler to exploit the cache. However, for a non-cache based memory model such as on a GPU this arrangement may not necessarily be optimal [9– 14]. On current GPUs, memory latency can be hidden by oversubscribing the number of thread teams (commonly referred to as warps for NVidia hardware and software) to physical cores. The low cost of switching warps whilst waiting for data amortises the cost of memory latency. Consequently the number of concurrent threads is very high. Moreover, GPUs have a vector memory access feature (known as coalesced memory access in NVidia terminology) for an individual warp. To access data in this way, each thread in a warp requests data from a sequential element of a contiguous array in a single memory transaction. Naively, a data layout with the horizontal index innermost would achieve this. However, coalesced memory access could still be achieved with the vertical index innermost if a warp of threads cooperate to load a single element column into shared memory as a contiguous load. The warp then computes for the column (using a red-black ordering if required if write stencils overlap). In particular, a thread-wise data decomposition in the vertical is likely to be required for operations such as a tridiagonal solve so that data segment sizes are sufficiently reduced to fit into (warp) shared memory. Furthermore, such considerations are not limited to GPUs. Commodity processors today possess cache-based memory architectures and vector-like, 6 pipe-lined execution units capable of issuing multiple floating-point instructions per cycle. Although today’s “vectors”, typified by 128 or 256 bit SSE or AVX, are much smaller than in the era of vector computers, it is still the case that an application incapable of exploiting the ILP that such units confer is likely to achieve disappointing performance. It is unclear whether the recent trend towards larger vector units in commodity processors will continue, because the returns for many applications – especially those of limited computational intensity – are diminishing. In a recent study of the NEMO ocean code, [15] compared two alternative index orderings for 3-dimensional arrays; the level contiguous ordering (which is used both by NEMO and the current UM) and the alternative, column contiguous in memory, as will be the case in the Gung Ho data model. The study found that although the column contiguous ordering is advantageous for some important operations (such as halo exchanges and the avoidance of redundant computations on land), and although most operations can be made to vectorize in either ordering, there remain some operations that are difficult to vectorize in the column contiguous ordering. The archetypal example is a tridiagonal solve in the vertical dimension, because of loop-carried dependencies that inhibit vectorization. In the column contiguous ordering, the dependencies remain, but by operating on a whole level at once, the vector units can be fed; however, this trick is not cache friendly in the column contiguous ordering. As it is likely that similar concerns will arise in the Gung Ho dynamical core, alternative methods for solving tri-diagonal systems such as sub-structuring and cyclic reduction are under consideration [16]. These methods have in common the idea of transforming the problem into one that exposes more parallelism, albeit at the cost of more floating point operations; the hope is that as “flops become free”, the increased parallelism will win. Clearly, performance portability and data layout are complex issues. There are complex performance benefits and penalties whatever choice is made, and uncertainty in what hardware features will be available at exascale make all the consequences of this choice harder to foresee. However, the vertical index innermost data layout represents the best choice for achieving performance with the awareness that hardware specific performance code will be necessary to exploit whatever hardware features are available. This consideration impacts on the design of the Gung Ho software architecture. 7 3. The Gung Ho software architecture The software architecture is designed with a separations of concerns in mind to satisfy the design criteria of performance portability and futureproofing. The software will be formally separated into different layers, each with its own responsibilities. A layer can only interact with another via the defined API. These layers are the driver layer, the algorithm layer, the parallelisation system (PSy), the kernel layer and the infrastructure layer. These are illustrated in figure 1. The identification of these layers enables a separation of concerns between substantially independent components which have different requirements and which require developers with substantially different skill sets. For example the PSy is responsible for the shared memory parallelisation of the model and the placement of communication calls. The layers above (algorithm) and below (kernel) are thus isolated from the complexities of the parallelisation process. Figure 1: Gung Ho software component diagram. The arrows represent the APIs connecting the layers and the direction shows the flow control. The driver encapsulates and controls all models in a coupled model and 8 their partitions; it calls the algorithm layer in each model3 . The algorithm layer is effectively the top level of Gung Ho software. It is where the scientist defines their algorithm in terms of local numerical operations, called kernels, and global predefined operators such as linear algebra operations on solution fields. The algorithm layer calls the PSy layer which is responsible for shared memory parallelisation and intra-model communication. The PSy layer calls the scientific kernel routines. The kernel routines implement the individual scientific kernels. All layers may make use of the infrastructure layer which provides services (such as halo operations and library functions). The following sections discuss each of the layers and their associated APIs in more detail. Although the design of the software architecture is layered, some layers are depicted side-by-side in Figure 1. The reason for this, and for the vertical line between the algorithm layer and kernel layer on one side and the PSy on the other, is to emphasise the division of responsibilities. The natural scientist will deal with the algorithm and the kernel, and the computational scientist will deal with the PSy layer. The vertical line has been termed the “iron curtain” to underline the strong separation between code parallelisation and optimisation on the one hand and algorithm development on the other. The driver is mainly the responsibility of the computational scientist as it makes use of the computational infrastructure. The kernel layer can be treated as part of a general toolkit in order to promote the re-use of functionality within Gung Ho and between Gung Ho and other models at the Met Office (including the large collection of parametrisations of small-scale processes known in atmospheric modelling as physics). In a formal instantiation of this idea, developers would be encouraged to use the toolkit functions wherever possible and would not be able to add new science into the system unless that science were added to the toolkit. 3.1. Driver layer The driver layer is responsible for the scheduling of the individual models in a coupled model including the output and checkpoint frequencies. There may be different implementations of the driver layer depending on the particular configuration. For example, a stand-alone driver might look different to a driver for a fully coupled Earth System Model. 3 A model refers to a complete physical subsystem, e.g. the atmospheric dynamics, the atmospheric chemistry or oceanic model, which are then coupled together 9 The driver is also responsible for holding any state that is passed into and out of the Gung Ho dynamical core, or exchanged with other models, and for ensuring that any required coupling involving the state takes place. The driver may know little about the content of the state. For example, it may simply pass an opaque state object, as is the case with the Model for Prediction Across Scales (MPAS) 4 . However, these options need further investigation, and for now, we postpone the decision as to which layer(s) will be responsible for allocating and initialising the state variables. The driver will control the mapping of the model onto the underlying resources i.e. the number of MPI processes and threads and the number of executables. It is envisioned that the driver will make use of existing coupled model infrastructure provided by third parties, for example some of the functionality of the Earth System Modelling Framework (ESMF)5 may be adopted. The driver can call the algorithm layer directly. 3.2. Algorithm layer The algorithm layer, named Algs in Figure 1, will be where the scientist specifies the Gung Ho algorithm(s). As such it should be kept as high level as possible to keep it simple to develop and manage. This layer will have a logically global data view i.e. should operate on whole fields. Data objects at the field level should be derived types. The same applies to other data structures, such as sparse matrices, that are visible at the algorithm layer. This layer should primarily (and potentially solely) make use of a set of calls to existing building block code and control code to implement the logic of the algorithm. These building blocks will often, but not exclusively, be defined by user-defined kernels that specify an operation to be performed, typically column-wise, on fields. The exception is common or performance critical operations that may be implemented as library functions. Calls may be in a hierarchy, for example a call to a Helmholtz solver may contain a number of smaller “building block” calls. These kernels form the kernel layer and the algorithm is specified as a set of kernels calls (via the Psy layer) in the algorithm layer. There will be no explicit parallel communication calls (for example halo updates) in the layer: this will be handled exclusively by the PSy layer. 4 5 http://mpas-dev.github.io/ http://www.earthsystemmodeling.org/ 10 However, calls to communicate data via a coupler (put/get calls) will occur at this layer. 3.2.1. Algorithm to PSy interface The interface between these layers must maintain the separation of concerns between the high level algorithmic view and a computational view. The interface defines what information the algorithm layer must present and how it is to be used by the PSy layer. Information on the kernel to be executed must be passed. This may be as a direct call to the PSy layer, or as an argument (e.g. a function pointer) of a generic execute kernel function. The merits and defects of each approach are still under consideration although the differences are subtle and the consequences of choosing one over the other are at this stage still unforeseen. Other arguments in the interface will contain information about data objects to be operated on, and critically data access descriptors as to whether and when the data is to be read only, can be overwritten or the values incremented. Such an interface with a generic compute engine approach has been deployed in a different context in [17] Furthermore, multiple kernels may be passed to the PSy layer in a single call. This coupled with the data access descriptors allows the PSy layer to do two things. As the PSy layer is responsible for communication, both interand intra-node, this approach allows the PSy layer to parallelise the routines together, for example by reducing the the number of halo swaps. This is known as kernel fusion. Moreover, the data access descriptors will allow the PSy layer to computationally reason about the order of the operations, for example to achieve a better overlap between computation and communication. This is known as kernel re-ordering. Both offer potential performance optimisation gains. 3.3. PSy layer The interface to the PSy layer presents a limited package of work, what operations are required and critically, the data dependencies. In short, a computational pattern. Whilst there is no intention to formally characterise these patterns as in [18, 19], this abstraction remains a powerful software engineering concept. The interface to the PSy layer will be Fortran 2003 compliant, however, this may never be compiled directly. This interface will be parsed (a Python parser being the most likely candidate) and then transformed to implement some of the necessary optimisations. This may be to default Fortran 2003 routines, but also allows for the generation of hardware 11 specific code. For example, this could be language/directive specific such as CUDA or OpenACC for a GPU hardware target or even CPU assembler for a CPU target. Another example of such optimisations could be targeted at architecture specific configuratons, such as different cache and AVX sizes on Intel Xeon and Xeon Phi. The interface parser/transformer (IPT) will be a source-to-source translator. The default target will be Fortran 2003. This means that the code will always be able to be debugged using standard tools, correctness can be checked and standard compilers with optimisation benefits can be deployed. However, where hardware and computational optimisation opportunities exists the translator can be used to generate highly optimised code. This optimisation need only be created once, and potentially re-used whenever the computational pattern fits. In the case of multiple functions being passed, the IPT implementing the PSy layer will be able to decide the most appropriate ordering of these functions, including whether they are to be computed concurrently, that is, exploiting task or functional parallelism. It is the responsibility of the PSy layer to access the raw data from the data arguments passed through the interface. The PSy layer calls routines from the kernel layer. The implementation will also be able to call routines from the infrastructure layer. The data access descriptors will allow the translator to reason when communication is required and place the appropriate calls, for example halo swap, to the infrastructure layer. The PSy layer will also be responsible for on-node, shared memory parallelism most likely implemented with OpenMP threads or OpenACC. 3.3.1. PSy to kernel interface The PSy layer calls the kernel routines directly, passing data in and out by argument with field information being passed as raw arrays. This means that the data has to be unpacked from derived data type objects in the PSy layer. 3.4. Kernel layer The kernel layer is where the building blocks of an algorithm are implemented. This layer is expected to be written by natural scientists with input from computational scientists as this layer should be written for ease of maintenance but also such that, for example, the compiler can optimise the code to run efficiently. 12 To help the compiler optimise, explicit array dimensions should be provided where possible. In certain circumstances, where performance of a routine is critical it may be that compiler directives might need to be added to the kernel code to aid optimisation (such as unrolling or vectorization). In more extreme cases there may need to be more than one implementation of a particular kernel which is optimised for a particular class of architecture, but this can be avoided if the Fortran 2003 compiler can provide sufficient performance. Two potential kernel implementations are considered for the design. These are not mutually exclusive and it may be that some mixture of the two is used. In the first implementation the parallelisable dimensions are made malleable, so that the parallelisable dimensions can be called with different numbers of work units. This approach is flexible in that the same kernel code can be used for smaller or larger numbers of work units per call depending on what the architecture requires. For example, one would expect a GPU machine to require a large number of small work-units and a many-core machine to require fewer larger work units. In the second implementation the loop structures are not contained in the kernel code and any looping is performed by the PSy layer. One exception to this rule is the loop over levels which may be explicitly written, particularly if it is not possible to parallelise the loop. The size of the kernels, in terms of computational work, is still under consideration, although there is nothing in the architecture design that dictates kernel size. Smaller kernels are more suitable for architectures based on GPUs but larger kernels are more suitable for multi-core processors. It may be that smaller kernels can be efficiently combined to create larger kernels which would tend to favour smaller ones. Ultimately it will be the unit of work defined by the algorithm developer that will dictate the size of a kernel. Finally, the kernel layer will be managed as a toolkit of functions which can only be updated by agreement with the toolkit owner. Combined with a rule that disallows code, other than subroutine calls and control code, at the algorithm layer, this approach would promote the idea of re-using existing functionality (potentially across different models, not just the Gung Ho dynamical core) as code developers would need to apply to add new functionality to the kernel layer and this could be checked with the existing toolkit for duplication. 13 3.5. Infrastructure layer The infrastructure layer provides generic functionality to all of the other layers. It is actually a grouping of different types of functionality including parallelisation, communication, libraries, logging and calendar management. Parallelisation support will include functionality to support the partitioning of a mesh and mapping partitions to a processor decomposition. It is expected that ESMF or some similar software solution will be used to support this functionality. However, this functionality is not provided directly but rather through the infrastructure layer so that only the appropriate functionality is exposed. Communication support is related to the partitioning support as halo operations are derived from the specification of a partitioned mesh in ESMF. Again this functionality is not provided directly but rather through the infrastructure layer. The infrastructure will also provide the coupling put/get functionality (for example, implemented by OASIS36 ) but that is out of scope for Gung Ho. It makes sense to use standard optimised libraries (such as the BLAS or FFTs) where possible and the infrastructure will provide an interface to a subset these libraries. Finally, logging support is a useful way to manage the output of information, warnings and errors from code and calendar support helps manage the time-stepping of models. ESMF provide infrastructure for both of these and the infrastructure will provide an interface. 4. Data model A data model is an abstract description of the data consumed and generated by a program. The fundamental form of data employed in simulation software is the field. That is, a function of space and potentially, time. A data model for fields therefore sets out to express field values and their relationship to space and time. In simulation software, the field is represented by a finite set of degrees of freedom (DoFs) associated with a mesh and potentially changing through time. Simulation software must therefore have a data model which encompasses meshes and fields, and gives the relationship between them. 6 https://verc.enes.org/oasis 14 4.1. Choice of discrete function space The conventional approach in geoscientific simulation software is for degrees of freedom to be identified with topological mesh entities. For example, it is usual to speak of “node data”, meaning that a field has one degree of freedom associated with each mesh vertex, or “edge data” indicating that a flux degree of freedom is associated with each edge of the mesh. Furthermore, it is conventional to select a single (or perhaps a small related set) of discretisations, and to develop the model with a hard-coded assumption that that discretisation will apply: for example C-grid models are coded with field data structures which assume that velocity will always be represented by a single flux variable on each face, while tracers will be represented by a single flux variable at each cell centre. In contrast, finite element models in various domains of application conventionally record an explicit relationship for each field between the degrees of freedom and the mesh topology. This permits a single computational infrastructure to support the development of models with radically different numerical discretisations. In this model, a single infrastructure could be used to develop a C grid model, with a single flux DoF with every mesh facet, or an A grid model with one velocity DoF per dimension with each vertex. The infrastructure could also support slightly more unusual cases, such as the BDFM element [introduced for weather applications in 7] associates two flux DoF with each triangle edge and three flux DoF with the triangle interior. Spectral element schemes take this much further with very large numbers of DoF associated with each topological entity. The Gung Ho data model is of this type, and allows for different function spaces to be represented, including those which associate more than one DoF with a given topological entity, and those in which a single field has DoF associated with more than one kind of topological object. It is important to note that, although this data model is taken from the finite element community, it is merely an engineering mechanism which allows for the support of different DoF locations on the mesh. There is no impediment to Gung Ho, or a future dynamical core, adopting a finite difference or finite volume discretisation in association with this data model. 4.2. Vertical structure in the data model Gung Ho meshes will be organised in columns. Section 2.1 examines the issues surrounding the data-layout and indirect addressing will be employed in the horizontal, with direct addressing employed in the vertical. For this 15 to be an efficient operation, the data model will reflect this choice inherently by ensuring vertically aligned degrees of freedom are arranged contiguously in memory. This will be achieved by storing an explicit indirection list for the bottom DoFs in each column. For each column in the horizontal mesh, the indirection list will store the DoFs associated with the lowest three-dimensional cell. It will also store the offset from these DoFs to those associated with the cell immediately above this cell. Since vertically adjacent DoFs will have adjacent numbers, this offset list will be the same for every column, and for every layer in the column. By always iterating over the mesh column-wise, it will be possible to exploit this structure efficiently as follows: first, the columns DoFs associated with the bottom cell of a given column are looked up, and the numerical calculations for that cell are executed. Next, the DoFs for the cell above the first one are found by adding the vertical offsets to the bottom cell DoFs. This process is repeated until the top of the column is reached and the DoFs for the next column must be explicitly looked up. Since the vertical offsets are constant for all columns in the mesh, these offsets can be expected to be in cache, so the indexing operation for all but the bottom cell require no access to main memory. Further, the vertical adjacency of DoF values in combination with this iteration order results in stride one access to the field values themselves, maximising the efficient use of the hardware memory hierarchy. Finally, it should be noted that this approach generalises directly to iterations over entities other than column cells. For example iteration over faces to compute fluxes is straightforward. 5. Conclusions Exascale computing presents serious challenges to weather and climate model codes, to such an extent that it may be considered a disruptive technology. Recognising this, the Gung Ho project is considering a mathematical formulation for a dynamical core which is capable of exposing as much parallelism in the model as possible. The design of the software architecture has to support features of this model, such as the unstructured mesh, whilst exploiting the parallelism to achieve performance. In a separation of concerns model of software design, each component of the architecture has a clear purpose. The strongly defined interfaces between these layers promote a powerful abstraction which can be used to design software which can be 16 developed and maintained, be portable between diverse hardware with as yet unknown features and may be executed with the high performance. 6. Acknowledgments We acknowledge use of Hartree Centre resources in this work. The STFC Hartree Centre is a research laboratory in association with IBM providing High Performance Computing platforms funded by the UK’s investment in e-Infrastructure. The Centre aims to develop and demonstrate next generation software, optimised to take advantage of the move towards exascale computing. This work was supported by the Natural Environment Research Council Next Generation Weather and Climate Prediction Program [grant numbers NE/I022221/1 and NE/I021098/1] and the Met Office. References [1] J. J. Dongarra, A. J. van der Steen, High-performance computing systems: Status and outlook, Acta Numerica 21 (2012) 379–474. doi:10.1017/S0962492912000050. URL http://journals.cambridge.org/article S0962492912000050 [2] A. Brown, et al., Unified Modeling and Prediction of Weather and Climate: A 25-Year Journey, Bulletin of the American Meteorological Society 93 (2012) 1865–1877. doi:10.1175/BAMS-D-12-00018.1. [3] D. N. Walters, et al., The Met Office Unified Model Global Atmosphere 4.0 and Jules Global Land 4.0 configurations, Geoscientific Model Development Discussions 6 (2) (2013) 2813–2881. doi:10.5194/gmdd-6-28132013. URL http://www.geosci-model-dev-discuss.net/6/2813/2013/ [4] N. Wood, et al., An inherently mass-conserving semi-implicit semilagrangian discretisation of the deep-atmosphere global nonhydrostatic equations, Q. J. R. Meteorol. Soc. (2013). doi:10.1002/qj.2235. [5] C. J. Cotter, D. A. Ham, Numerical wave propagation for the triangular p1dg-p2 finite element pair, Journal of Computational Physics 230 (8) (2011) 2806 – 2820. 17 [6] J. Thuburn, C. Cotter, A framework for mimetic discretization of the rotating shallow-water equations on arbitrary polygonal grids, SIAM Journal on Scientific Computing 34 (3) (2012) B203–B225. arXiv:http://epubs.siam.org/doi/pdf/10.1137/110850293, doi:10.1137/110850293. URL http://epubs.siam.org/doi/abs/10.1137/110850293 [7] C. Cotter, J. Shipton, Mixed finite elements for numerical weather prediction, Journal of Computational Physics 231 (21) (2012) 7076 – 7091. doi:10.1016/j.jcp.2012.05.020. [8] A. MacDonald, J. Middlecoff, T. Henderson, J. Lee, A general method for modeling on irregular grids, International Journal of High Performance Computing Applications 25 (4) (2011) 392–403. doi:10.1177/1094342010385019. [9] N. Bell, M. Garland, Efficient sparse matrix-vector multiplication on CUDA, NVIDIA Technical Report NVR-2008-004, NVIDIA Corporation (Dec. 2008). [10] G. I. Egri, Z. Fodor, C. Hoelbling, S. D. Katz, D. Nogradi, et al., Lattice QCD as a video game, Comput.Phys.Commun. 177 (2007) 631–639. arXiv:hep-lat/0611022, doi:10.1016/j.cpc.2007.06.005. [11] M. Clark, R. Babich, K. Barros, R. Brower, C. Rebbi, Solving Lattice QCD systems of equations using mixed precision solvers on GPUs, Comput.Phys.Commun. 181 (2010) 1517–1528. arXiv:0911.3191, doi:10.1016/j.cpc.2010.05.002. [12] M. de Jong, Developing a CUDA solver for large sparse matrices in Marin, Master’s thesis, Delft University of Technology (2012). [13] E. Mueller, X. Guo, R. Scheichl, S. Shi, Matrix-free GPU implementation of a preconditioned Conjugate Gradient solver for anisotropic elliptic PDEs, submitted to comput. vis. sci. (2013). URL http://arxiv.org/abs/1302.7193 [14] I. Reguly, M. Giles, Efficient sparse matrix-vector multiplication on cache-based GPUs, in: Innovative Parallel Computing (InPar), 2012, 2012, pp. 1–12. doi:10.1109/InPar.2012.6339602. 18 [15] S. M. Pickles, A. R. Porter, Developing NEMO for large multicore scalar systems: Final report of the dCSE NEMO project, Tech. rep., HECToR dCSE report (2012). URL http://www.hector.ac.uk/cse/distributedcse/reports/nemo02/ [16] Y. Zhang, J. Cohen, J. D. Owens, Fast tridiagonal solvers on the GPU, in: Proceedings of the 15th ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming, PPoPP ’10, ACM, New York, NY, USA, 2010, pp. 127–136. doi:10.1145/1693453.1693472. URL http://doi.acm.org/10.1145/1693453.1693472 [17] F. Rathgeber, G. R. Markall, L. Mitchell, N. Loriant, D. A. Ham, C. Bertolli, P. H. Kelly, Pyop2: A high-level framework for performance-portable simulations on unstructured meshes, High Performance Computing, Networking Storage and Analysis, SC Companion: 0 (2012) 1116–1123. doi:http://doi.ieeecomputersociety.org/10.1109/SC.Companion.2012.134. [18] E. Gamma, R. Helm, R. E. Johnson, J. M. Vlissides, Design patterns: Abstraction and reuse of object-oriented design, in: Proceedings of the 7th European Conference on Object-Oriented Programming, ECOOP ’93, Springer-Verlag, London, UK, 1993, pp. 406–431. URL http://dl.acm.org/citation.cfm?id=646151.679366 [19] E. Gamma, R. Helm, R. Johnson, J. Vlissides, Design patterns: elements of reusable object-oriented software, Addison-Wesley Longman Publishing Co., Inc., Boston, MA, USA, 1995. 19