Segmentation via Genetic Programming

advertisement

Segmentation

via

Genetic Programming

a final project by Yonatan Shichel

Aug 2005

Introduction

2

Segmentation

The problem

The Dataset

2

2

2

Genetic Programming

Individuals

Fitness

Course of evolution

4

4

6

6

Segmentation via Genetic Programming

Function set

Terminal set

Fitness measure

Threshold

Image test set

Miscellaneous Evolutionary parameters

7

7

8

8

9

10

10

Results

A typical Evolutionary run

Best Individual

12

12

13

Summary & Discussion

Conclusions

Future Work

17

17

17

References

18

1

Introduction

In this project, Genetic Programming (GP) will be used to create segment maps of

given images. The result will be tested and compared to existing segmentation

methods.

Segmentation

Segmentation is a key problem in computational vision. Splitting an image into

segments can be an extremely hard problem, as some ‘real-world’ properties are

inevitably lost when transferred into 2D canvas.

To achieve good segmentation, each available image property should be used: image

colors, textures, patterns, edge and contour maps etc. But still, this problem is

conceptually ill-defined; a given image might be segmented differently by different

people, and there is no way to determine which of the segmentations is more ‘correct’

than the other.

The problem

The ‘traditional’ segmentation problem can be defined as the function:

seg x, y : R R segment1 , segment 2 ,, segment n

which gives each image pixel a ‘tag’, telling to which segment this pixel belongs. In

this project, however, I will use a slightly different function:

seg x, y : R R true, false

which decides whether a given image pixel resides on a borderline between two

segments or not.

To simplify the operation and reduce the required system resources, I have used only

grayscale images, in which each pixel is a real number in the range [0,1], describing

the intensity of the pixel (0 represents a completely black pixel, and 1 represents a

completely white pixel).

The Dataset

In this project I have used the Berkley Segmentation Dataset [1], a public library of

300 images that have been segmented by 30 human subjects. The purpose of this

library is to provide a common basis to segmentation related researches, as well as

being a benchmark for segmentation algorithms.

The dataset contains a train set of 200 images and a test set of 100 images. Each of

the images in this dataset had been segmented manually by several human subjects, in

both color and grayscale versions.

2

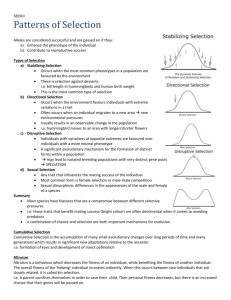

Figure 1: Image #37073 and five different segmentation interpretations

3

Genetic Programming

Genetic Programming (GP) [2] is a bio-inspired Artificial Intelligence (AI) method,

which is a relatively new approach to the field of Evolutionary Algorithms [3],

sometimes called also Genetic Algorithms (GA).

GA (and also GP) is inspired by Darwin’s four evolutionary principles [4]: In brief, a

species lives in a given environment (the competition principle). Each of the

individuals has unique, congenital attributes that are that are being passed to its

progenies (variation principle). Due to limited environmental resources, more

individuals are born than can live to reproduce (overproduction principle), leading to

a struggle for existence (survival of the fittest principle). According to these

principles, the individuals whose attributes fit the environment will be able to

reproduce; their offspring will reflect some of these attributes, hence will be able to

fit to the environment. On the long run, the species population will consist of

individuals that are fitted to the environment.

The computer model of GA/GP is similar: Each individual is a candidate solution to

the problem we wish to solve. The population is a list of individuals, and limited

resources are simulated by assigning a fixed size to the population. We can assign a

fitness measure to each individual, according to the quality of its solution to the

problem. Individuals with high fitness measures will be chosen to reproduce.

In GA, each individual is a solution; in GP, each individual is a computer program (or

function), that can operate on any input. That way, GP is far more flexible than

traditional GA, but the space of possible individuals is enormous.

Individuals

GP individuals are computer functions. The most common representation is a LISPlike program, which can be easily transformed into a tree structure. The tree contains

functions (nodes) and terminals (leaves) which are the building blocks of the

program.

For example, using the function set F = {+,-,*} and the terminal set T = {x,1} we can

describe the functions:

x 2 * x x

2x 1 * 11 x 1

The tree representations of these functions are shown in Figure 2.

The tree structure enables the reproduction of two individuals by simply exchanging

subtrees between the two parents. This operation, called crossover, results in two new

functions that partially resemble the original ones; an example of crossover is shown

in Figure 3.

Another common evolutionary operator is the mutation operator, which is done by

choosing a random node in the individual tree, discard the subtree rooted at that node

and create a new subtree. Again, this action results in an individual which resembles

4

the original, but have some new features. An example of the mutation operator is

shown in Figure 4.

*

x

x

*

+

1

1

x

1

Figure 2: Tree representations of the functions listed above

*

-

+

1

x

*

1

x

1

x

Figure 3: The original functions after crossover. The subtrees (+ 1 1) and x were exchanged,

producing the functions 2x and x2-1

*

+

1

1

*

x

*

1

1

1

x

x

1

Figure 4: 2x-1 after mutation. The (+ 1 1) subtree was replaced by the new subtree (* (- 1 1) x);

the new function is (- (* (* (- 1 1) x) x) 1), or simply -1.

5

Fitness

To determine the fitness measure of an individual, we should operate its function on

some inputs and check the quality of the results. This action is called fitness

assignment. As in real evolution, telling which individual is the fittest is not always

an easy task. I’ll discuss the fitness measures used in the segmentation problem later.

Course of evolution

To apply GP on a given problem, we should first define the function and terminal sets

- which will be used to build the evolved functions. Then we should define the fitness

measure - a function that calculates the fitness, or quality, of each individual in the

population.

The evolutionary process:

I. Generate the initial generation G0.

This is usually done by building NPOP random trees using the function and

terminal sets. There are several methods to grow the trees, as described by

Koza [2].

II. Evaluate the fitness measure of each individual in the current generation,

using the fitness function described above.

III. Create the next generation GN+1:

i. Choose two individuals by random, but in accordance to their

fitness measure. This can be achieved, for example, by

performing a mini-tournament between few random-chosen

individuals, taking the two with the highest fitness measure.

ii. With the probability PC, apply the crossover operator on the

two individuals, resulting in two child individuals. Pass these

individuals to the next generation GN+1. Otherwise, pass the

two original individuals to the next generation GN+1.

iii. With the probability PM, apply the mutation operator on the

selected individuals.

iv. Repeat until GN+1 is full.

IV. Go back to step II.

The evolution is stopped when a fairly good individual is found, after a predefined

number of generations or when the individuals fail to show further evolvement over

few generations.

6

Segmentation via Genetic Programming

I have applied GP on some images from the Berkley Segmentation Dataset. Looking

at several algorithms, I have noticed that most of them take the original image,

convolve it with one or more kernels (possibly after some noise reduction) and use a

threshold function to determine which of the pixels reside on a segmentation

boundary.

I have decided to design the individuals as functions that take the entire image (i.e.

2D matrix of real values) and return a new matrix, representing the segmentation

boundaries. Each pixel in the resulting matrix should be 0 or 1, for non-boundary or

boundary pixel respectively.

For this purpose, I have supplied the function and terminal sets with matrix-oriented

operators, as listed in the next subsection. These functions and terminals can operate

on numbers (scalars) and matrices, just like MATLAB functions. To ensure that each

individual represents a legal program, I have divided to types of tree nodes into three

categories: number, matrix and kernel. This approach is called Strongly-Typed

Genetic Programming (STGP) [5], and is commonly used.

Even though a kernel is actually a small matrix, they differ in size. For example, the

operator + cannot be applied on a matrix and a kernel. The operator conv, on the other

hand, must get a matrix and a kernel to perform correctly. Some operators can operate

on more than of input; for example: + can be applied on two number, one number and

one matrix, or two matrices.

Function set

format of the function declaration column: <return type> <function symbol> (<argument types>*)

matrix

matrix

kernel

kernel

number

+

+

+

+

+

(matrix,matrix)

(matrix,number)

(kernel,kernel)

(kernel,number)

(number,number)

Adds the two arguments.

-

Subtracts the two arguments (same as +)

*

Multiplies the two arguments (same as +)

number % (number,number)

A ‘safe divide’ operator. This one operates as a

simply divide operator, but avoids dividing by zero.

matrix neg (matrix)

kernel neg (kernel)

Returns the negative value of the given argument

matrix conv (matrix,kernel)

Returns the convolution result of the given matrix with

the given kernel

matrix opp (matrix)

kernel opp (kernel)

Returns 1 divided by the given argument. Special

care was taken to avoid division by zero; function

operates on each matrix cell individually.

matrix sqrt (matrix)

kernel sqrt (kernel)

Returns the square root of the matrix; function

operates on each matrix cell individually.

7

Terminal set

Terminals provide basic information for the evolved individual. I have included some

constant and random numbers, several kernels (like Prewitt’s gradx and grady) and

random kernels, and - of course - the original image itself.

matrix image

The original image

number 0

number 1

number const

0 & 1 are predefined constants. ‘const’ is an

Ephemeral Random Constant (ERC), as described by

Koza [2]. During the creation of the individual, its value

is randomly assigned and cannot be modified through

the course of evolution (unless mutated!)

kernel gradx

kernel grady

kernel const

Prewitt gradient kernels: [-1 0 1] and [-1 0 1]’. ‘const’ is

a kernel version of ERC - it is assigned with random

values when the individual is created, and cannot by

modified during the course of evolution, unless

mutated.

For example, we could write the gradient magnitude function using this program:

(sqrt (+ (* (conv image gradx) (conv image gradx)) (* (conv image grady)

(conv image grady))))

Fitness measure

Finding the right fitness measure is extremely important for GP applications: it sets

the course of evolution to find the fittest individuals!

In the segmentation problem, the fitness function should receive an individual,

operate its program on an input image and compare the result to a given human-made

segmentation map. As discussed earlier, segmentation is an ill-defined problem, so

different persons might draw different segmentation maps. To overcome this obstacle,

I have used the union of the human-made segmentation maps - so a pixel is treated as

‘true’ boundary pixel if one or more persons included it in their maps.

I have tried several fitness measures, which will be listed below. This task was not an

easy one, and was done with much trial-and-error. There are, however, some

measures that were found to be significantly better than the others.

Berkley’s F-measure

Given a segmentation map and a computer-generated map, this measure

calculates the precision and recall measures of the algorithm.

Precision is the probability that a machine-generated boundary pixel is a true

boundary pixel, i.e. the fraction of ‘true’ pixels that were reported by the

algorithm. Low precision measure indicates that the algorithm is ‘noisy’, and

tends to include many false pixels.

Recall is the probability that a true boundary pixel is detected, i.e. the fraction

of reported pixels that were included in the human-made segmentation map.

Low recall measure indicates that the algorithm does not fully detect the

human-drawn segmentation maps.

8

To describe the performance in one parameter, F-measure is introduced; this is

simply the harmonic mean between precision and recall.

I have tried this measure for a while, but the figures weren’t nice. Individuals of the

early generations don’t perform very well, but get rather high F-measure: an

individual that reports all pixels as boundaries (or non-boundaries) will get F-measure

value larger than 0.5. This might contradict the variety principle: many individuals

get this (relatively high) measure on the first generations, reproduce and eliminate

other individuals which might have contributed some important subprograms to the

population on later generations.

Accuracy measure

This measure is the simplest, yet very efficient. It is simply the number of

pixels common to the generated result and the human-made segmentation

map, divided by the number of pixels in the union of the two matrices:

acc

result segmap

result segmap

This measure reflects the accuracy of the algorithm by rewarding the

discovery of ‘true’ segmentation boundaries and giving penalties on both

‘false’ pixels reported by the algorithm and ‘true’ pixels that weren’t reported

(i.e. both ‘false positives’ and ‘true negatives’).

Since many individuals from the first generations fail to find even one ‘true’

pixel, they receive a fitness measure of zero; hence the first generation’s

fitness values are less variant. To overcome this phenomenon, I have slightly

modified the accuracy measure:

acc

1 result segmap

1 result segmap

Now, two individuals that haven’t found any boundary pixels may have

different fitness values - the one that reported less false pixels will be better.

Threshold

The evolved programs produce a ‘soft’ segmentation map, in which each matrix cell

contains a real number instead of a Boolean value. Of course, a threshold generating

function was needed. My assumption is that the best threshold value will be chosen

by the user (after the evolved function have been extracted and deployed), but

threshold values were needed on the fitness evaluation phase. Since the evolved

functions should be able to operate on any given image, I have avoided encoding the

threshold value in the genome. Instead, I have tried two automatic threshold methods:

The first method was used by Berkley: Berkley’s benchmark simply divides threshold

range linearly using 30 threshold values, calculating the fitness value (at Berkley it’s

the F-measure) for each of them. I found this method a bit crude, since at many

occasions the threshold range was not linear, causing the entire image to ‘slip’

between two threshold values.

9

The second (and preferred one) have divided the threshold range into uneven parts, so

each threshold step will ‘reveal’ an equal additional number of segmentation points,

calculated proportionally to the number of boundary points in the human-made

segmentation map (for example: assume that the human-made map included N

boundary points. The function will produce threshold values that will report 0.5N,

0.6N, ..., 1.9N, 2.0N points as boundary points. Lower & upper bounds can be

modified, as well as the step. Usually I have used 0.5N - 3.0N with 10 equal steps).

Like Berkley’s method, this method calculates the fitness for each of the threshold

values and picks the best. This is a somewhat slower method, but much more

accurate.

Image test set

The evolutionary process is extremely time and resource consuming. Each of the

individual programs should be applied on the original image and the results should be

evaluated; this might take up to several minutes per generation, depends on the

evolutionary parameters (see next section: Runtime).

To speed up the process, I have decided to drastically reduce the number of images

used for training. Only 3-5 images were chosen from the 200-image train set.

The resulting algorithms (best individual that have evolved during the evolutionary

process) were tested on ‘unseen’ images from Berkley’s test set.

Miscellaneous Evolutionary parameters

Population size

I have used populations of 150 individuals.

Generation count

I did not set a limit for the generation count; I have stopped the process when the

average fitness have reached peak value, and did not seem to be able to improve any

further.

Reproduction and Mutation

As described in the GP section. Crossover probability of 90% and mutation

probability of 10%.

Selection

I have used a tournament selection of k=3. To choose a single individual, the system

chooses k individuals randomly, and takes the one with the highest fitness value.

Tree Depth

Tree operations might result in extremely deep trees. To avoid this ‘bloating’ [6]

phenomenon, I have limited the depth of the trees to a value in the range 6-9.

10

Runtime

On a standard PC, Evaluation of a single individual with a single image dataset might

take up to 1 second. Multiply this by the population size and dataset size to get the

time needed for one generation. A successful GP run normally takes at least several

dozens of generations. Given the evolutionary parameters listed above, any process

took at least two hours, with the exception of several 10-hour runs.

Software

To run the GP evolutionary sessions, I have used Sean Luke’s ECJ 13 - a Java based

evolutionary computation and genetic programming research system [7]. This system

contains most of the infrastructure needed to run GP sessions, and had enabled me to

focus on research rather then on programming (though much programming and

debugging were still needed!). It also provides documentation, backup and logging

services, which are extremely helpful when dealing with such large populations and

long running times.

11

Results

After running several evolutionary processes, I have selected the best individuals.

This was done simply by picking the individuals with best fitness values from each

run. This section will discuss and examine these individuals.

A typical Evolutionary run

Figure 5 shows the progress of a short evolutionary run. These results are typical to

many evolutionary runs; we will discuss them now.

Figure 5: Accuracy fitness vs. Generation count; fitness values are shown for the best individual

as well as the average population fitness.

The first generation (G0) was created by random, so the individual accuracies are low:

0.176 for the best individual, 0.08 population average.

The following generations show improvement in both best and average values. As the

average values steadily increase, the best value sometimes ‘leaps’, as in G3 and G6.

This is due to the emergence of a new individual, created either by a crossover of two

individuals or by a mutation of an existing individual, which is much better than its

ancestors.

It is noticeable that fitness values might drop during the course of evolution; for

example, best individual of G2 is slightly worse than the best individual of G1. This is

due to the nature of the genetic operators - it is not always granted that the offspring

will be better than its ancestors.

By the end of this run, accuracy values seem to converge. On this phase, the

population variance is usually low, so only mutation may produce new individuals.

12

Best Individual

The best individual, with accuracy value of 0.262, was taken from G93. We’ll

examine its performance in the following section.

Segmentation Function

The segmentation function was extracted from the best individual:

(- (- (conv (* (conv image gradx) (conv image

gradx)) (- (- (kernel 5.381114 -8.362269 8.888325 1.1866289

-6.4069843 -8.251046 -9.389916 6.183886 -7.817788)

grady) (- (kernel -2.334486 -4.6182337 -9.115009 8.010966 3.0507333 3.22619 2.068446 -2.932576 -6.243905)

0.0))) (conv (* (conv image gradx) (conv

image gradx)) (- (- (kernel 2.4412537 -8.362269 8.888325

1.1866289 -6.4069843 -8.251046 -9.389916 6.183886 -7.817788)

grady) (- (kernel 9.936699 -4.6182337 -9.115009 8.010966 3.0507333 3.22619 2.068446 -2.932576 -6.243905)

0.0)))) (- (- (- (conv image grady) (* 1.0

9.336973)) (% (* 1.0 9.336973) 9.336973))

(% (% (* -3.9138038 0.0) (* 0.0 0.0)) (%

(* 1.0 9.336973) (* 1.0 9.336973)))))

As in most cases of GP, one may find it difficult to analyze the code of the function.

But looking deeper into the function, we can see a few interesting features:

This code uses both ‘gradx’ and ‘grady’ predefined kernels. In fact, a gradient

magnitude function for the x-axis has emerged during the evolution:

(* (conv image gradx) (conv image gradx)).

Like in human DNA, there is a lot of ‘junk genome’ in this function. For

example, (% (* 1.0 9.336973) (* 1.0 9.336973)) is simply 1.0; this is a

common phenomenon is GP, but it contributes operation to the genetic

operators - if one of the redundant subtrees will mutate, the entire individual

may function differently.

13

Here are some examples of the output of the evolved function. Accuracy values are

listed below, along with the accuracies of the ‘standard’ gradient magnitude

algorithm.

Accuracy = 0.307 (GM accuracy = 0.280)

14

Accuracy = 0.262 (GM accuracy = 0.245)

Accuracy = 0.126 (GM accuracy = 0.119)

15

Accuracy = 0.172 (GM accuracy = 0.193)

16

Summary & Discussion

Conclusions

In this work I have tried to use Genetic Programming to evolve segmentation

functions. Results show that it is possible to evolve segmentation functions using GP,

and that some good results can be achieved: in most cases, the best evolved function

‘beats’ the standard gradient-magnitude algorithm. Yet, gradient magnitude is not the

best segmentation algorithm available, so we cannot rely on it as a benchmark.

Usually, when applying GP to a problem, it is possible to drastically improve the

results using greater power. This problem is no different, and I believe that using

more resources could have produced better results.

Fitness evaluation uses much CPU resources, thus faster computers (or multi-CPU

architecture) will allow enlarging the population size.

Individuals’ tree structure tends to use exponential memory as a function of tree

depth; more RAM will allow using deeper (hence more complex) function trees.

Koza [2], for example, uses populations of ~10K individuals on his 1000-CPU

machine for GP evolutionary sessions.

Future Work

Use more CPU and RAM resources, hopefully in order to include all the train

set within the fitness calculation function.

Add some useful building blocks to the terminal set: more image-specific

kernels (like Gaussian smoothing kernel for noise reduction etc.)

Use ADFs (Auto Defined Functions) [8], which are subroutines that separately

evolved and may be used and reused by the evolved individuals.

Evolve the threshold function instead of using the same one for all images.

The threshold function may be integrated into the genome or evolved by coevolution [3], in which species are being evolved separately and evaluated

together.

Create an evolving ‘kernel library’, which will be evolved separately and may

be used by all evolved individuals.

Include more inputs, like texture maps or other edge detector outputs.

Use color images, which contain much more information.

17

References

[1]

The Berkley Segmentation Dataset and Benchmark

http://www.cs.berkeley.edu/projects/vision/grouping/segbench/

[2]

Koza, J. R.: Genetic Programming: On the programming of computers by

natural selection. MIT press, Cambridge, Mass. (1992)

[3]

Tomassini M.: Evolutionary Algorithms. Swiss Scientific Computing Center,

Manno.

[4]

Darwin, Charles: On the origin of species by means of natural selection.

London, John Murray, 1859

[5]

Montana, D.J.: Strongly typed genetic programming. Evolutionary

Computation 3 (1995) 199–230

[6]

Langdon, W.B.: Size fair and homologous tree genetic programming

crossovers.

Genetic Programming and Evolvable Machines 1 (2000) 95–119.

[7]

Luke S.: ECJ 13 - a Java based Evolutionary Computation and Genetic

Programming research system

http://cs.gmu.edu/~eclab/projects/ecj/

[8]

Koza, J. R.: Genetic Programming II: Automatic Discovery of Reusable

Programs. MIT press, Cambridge, Mass. (1994)

18