
Portland State University

Systems Engineering

Extending the Life Cycle of Power Plants through Predictive Maintenance and Performance Testing

Submitted by

Donald Ogilvie

Master's Project - SYSE 506

Submitted August 22, 2010

ACKNOWLEDGEMENT

First, I have to thank my advisor, Dr. Herman Migliore, for his support, guidance and constant encouragement in making this project a reality. I am very grateful that I was given the opportunity to work on such a challenging project, which has created a new horizon and heralded the dawn of a new day. The continuous support of my family and friends is the key component that has allowed me to succeed at the graduate level. I will be forever grateful to my wife Caron, who stood by me to make this day possible. I must also thank my daughter Thandi and my son Hakeem for their understanding while I was pursuing graduate studies. Finally, I must thank Robert Drummonds of Ontario Power Generation, who was always available for technical assistance to make this project a success.


Table of Contents

Abstract

Section 1
1.0 Introduction
1.1 ConOps
1.2 Product Improvement Strategies
1.3 System Engineering Management Plan (SEMP)
1.4 Scope
1.5 System Engineering Process
1.6 Capabilities and Constraints
1.7 Functional Requirements
1.8 Verification Process
1.9 Design Synthesis
1.10 Functional Analysis/Allocation
1.11 Requirement Loop
1.12 Cost & Schedules
1.13 Project Variance Cost and Explanation of Estimates
1.14 PMTE Concept
1.15 Requirement Analysis

Section 2
2.0 Boiler Flow Nozzle Calibration & Gross Boiler Test (GBET)
2.1 Technical Performance Measure
2.2 Technical Reviews and Audit
2.3 Boiler Flow Nozzle Test - Process
2.4 Experimental Arrangement
2.5 Testing Procedure
2.6 Results
2.7 Findings
2.8 Work Environment
2.9 Work Order Task
2.10 Gross Boiler Efficiency Test
2.11 Boiler Test Curve Data
2.12 Post-Overhauled Finding

Section 3
3.0 Thermal Heat Rate
3.1 Test Objective
3.2 Test Programme
3.3 The Process Concepts
3.4 Work Breakdown Structure (WBS)
3.5 Work Task Schedule
3.6 Group-3 Plant
3.7 Group-1 & Group-2 (Coal Fire & Gas Fire) Plants
3.8 Variable Back Pressure Test
3.9 Summary of Reading for Turbine Variable Back-Pressure Test
3.10 The THR Data Extraction System (DES) Flow Diagram

Section 4
4.0 Validating the System
4.1 Validating Test with Computer Network
4.2 Test Plan

Section 5
5.0 Integrating the System
5.1 Software Engineering Project Plan
5.2 Software Engineering Process

Section 6
6.0 System Integration and Verification (SI&V)
6.1 System Requirements Review
6.2 The System Requirements Traceability Matrix
6.3 System Integration and Verification Planning
6.4 System Integration and Verification Development
6.5 System Integration and Verification Execution
6.6 Applying OPC Interface
6.7 OPC Data Access Architecture
6.8 System Integration Test using HMI
6.9 SI&V Test Procedures
6.10 SI&V Test Results
6.11 SI&V Test Case
6.12 Normal Operating State
6.13 Data Acquisition from Plant Instrumentation

Conclusion
Glossary
Appendix A Thermal Performance Curve
Appendix B System Instrumentation
Appendix C System Engineering Master Schedule (SEMS)
Appendix D Maintenance and Training

List of Figures & Tables

Figure 1.1 Context of a Power Plant
Figure 1.2 ConOps Context Framework
Figure 1.3a Pre-Planned Product Improvement
Figure 1.3b Product Improvement Template
Figure 1.4 Integrated & Process Development
Figure 1.5 System Engineering Management Plan Layout
Figure 1.6 System Engineering Development Environment Plan
Figure 1.7 Project Management Process Flow
Figure 1.8 Planning Process
Figure 1.9 The Essential Elements of Risk Management
Figure 1.10 Risk Management Control and Feedback
Figure 1.11 System Engineering Process
Figure 1.12 Flow Diagram
Figure 1.13 Traceability Flow Plan
Figure 1.14 Functional Architecture
Figure 1.15 Functional Flow Block Diagram
Figure 1.16 Design Synthesis
Figure 1.17 PMTE Layout
Figure 1.18 Quality Function Deployment
Figure 2.1 Boiler Flow Nozzle Test - Process
Figure 2.2 Calibrated Instrumentation to Measure Parameters
Figure 2.3 Tools-1
Figure 2.4 Work Environment
Figure 2.5 Work Breakdown Structure-1
Figure 2.6 Work Order Task Schedule-1
Figure 2.7 Discharge Coefficient versus Reynolds Number
Figure 2.8 Gross Boiler Efficiency Test
Figure 2.9 Method for Gross Boiler Efficiency
Figure 2.10 Tools-2
Figure 2.11 Environment
Figure 2.12 Work Breakdown Structure-2
Figure 2.13 Work Order Schedule-2
Figure 2.14 Gross Boiler Efficiency I/O Curve
Figure 2.15 Flow Nozzle Loop
Figure 3.1 Process Concepts
Figure 3.2 Analyzing the Process
Figure 3.3 Analyzing the Method
Figure 3.4 THR Loading
Figure 3.5 Work Order Task Outline & Instructions
Figure 3.6 Work Breakdown Structure
Figure 3.7 Work Task Schedule
Figure 3.8 Power Plant Concept of Description Diagram
Figure 3.9 The System THR I/O Curve
Figure 3.10 Extraction HP, 5 & 6
Figure 3.11 Extraction 2, 3 & 4
Figure 3.12 THR Data Extraction System
Figure 4.1 Data Extraction for System Under Test
Figure 4.2 Hardware Connections
Figure 5.1 Computerize Interface
Figure 5.2 Hybrid Prototype
Figure 6.1 SI&V Sub-Process
Figure 6.2 SI&V Planning Activity
Figure 6.3 SI&V Planning Development Activity
Figure 6.4 SI&V Execution Activity
Figure 6.5 OPC Architecture

Table 1.1 Project Management Plan
Table 1.2 Preliminary Judgements Regarding Risk Classification
Table 1.3 Gantt Timeline
Table 1.4 Requirements Allocation Sheet-1
Table 1.5 Requirements Allocation Sheet-2
Table 1.6 Staffing Cost
Table 1.7 Five Year Project Expenditure
Table 1.8 A Breakdown of Cost Increase
Table 1.9 Design Estimate
Table 1.10 Work Group Cost Estimate
Table 1.11 MOE Matrix for Nuclear Plant - 1
Table 1.12 MOE Matrix for Nuclear Plant - 2
Table 1.13 MOE Matrixes for Coal & Gas Fired Plants
Table 2.1 Pre-Overhaul Test Schedule
Table 2.2 Mean Reynolds Numbers vs. ASME PT 16.5 Code
Table 2.3 Mean Reynolds Numbers vs. Manufacture Recommendation
Table 2.4 System Reviews and Audits – As found Data
Table 2.5 Flow Conditions
Table 2.6 Nozzle Coefficient
Table 2.7 Test Setting – Mean Reynolds Number
Table 2.8 Manometer versus Kent Gauge
Table 2.9 Manometer & Kent Gauge Error
Table 2.10 Post Modification Boiler Test
Table 2.11 System Reviews and Audits – As Left Data
Table 3.1 Turbine Heat Rate Loading Test
Table 3.2 Turbine Back Pressure Test
Table 4.1 Validation Requirements
Table 5.1 Acronym (Software Development)
Table 5.2 Software Engineering Process Plan
Table 6.1 System Requirements Review Checklist
Table 6.2 System Baseline Requirements
Table 6.3 System Integration and Verification Planning
Table 6.4 SI&V Planning Input Checklist
Table 6.5 Test Case (Start-up & Shutdown)
Table 6.6 System Verification & Test Results
Table 6.7 DAH/SCADA RVM
Table 6.8 Performance Verification Matrix
Table 6.9 Test Case Results

Abstract:

The aim of this paper is to demonstrate the process of extending the life cycle of power plants (also referred to as systems) through predictive maintenance and performance testing.

The plants are divided into three groups, namely Group 1, Group 2 and Group 3, which correspond to coal-fired, gas-fired and nuclear plants respectively. The problems that led to this project are summarized in the Concept of Operations (ConOps). The ConOps describes the stakeholders’ vision of how they will use a contemplated system to address the challenging aspects of their “as is” situation.

This project will apply Systems Engineering methods, concepts, techniques and practices, such as the System Engineering Management Plan (SEMP), to provide added value to the industrial sponsor. The desired target is achieved through periodic, scheduled tests in the form of instrumentation measurements, calibration, and comparison of system design calculations with test results. Using the Systems Engineering process, this paper shows that by targeting high-level components such as the boiler, turbine, feed-water and condenser, the entire system can be successfully tested, analyzed and documented. Building on these tests, the project will use software programs and computerized networks to monitor and trend system performance before failure occurs.


1.0 Introduction:

This project is designed to use various tests and equations, such as the boiler feed-water flow nozzle calibration, the gross boiler efficiency test and the thermal heat rate test, to analyze and improve system performance through software engineering. The engineering data extracted from the physical system by these tests will be incorporated into computer program source code that will be used to analyze and validate the system.

The validated system will be tied to a network of computers that will be used in this project for major decision making. The results will also allow the operating and maintenance staff to use the statistical and other analytical data (collected through practical system tests), empirical table data, test performance and equations in procedures whenever a system performance test is required. These tests will also be used for: (a) component history, (b) monitoring failure trends, and (c) planning major component replacements and repairs, so that system failures can be predicted and unscheduled plant outages prevented.

The Boiler Feed-Water Nozzle Calibration/Test and the Gross Boiler Efficiency Test (GBET) will be carried out to provide performance data such as boiler efficiency and the R-squared (R²) value of the nonlinear regression fit. Data obtained from these tests are critical to day-to-day operation and to extending the service life of this large, complex system.
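As an illustration of what the nozzle calibration computes, the sketch below applies the general differential-pressure flow equation used for flow nozzles (the form given in ISO 5167 and the ASME flow-measurement codes), with the expansibility factor taken as 1 because feed-water is a liquid. It also back-calculates the discharge coefficient from a reference flow, which is what a calibration point provides, and computes the pipe Reynolds number against which that coefficient is plotted. The nozzle dimensions, water properties and coefficient in the example are hypothetical values, not data from this project.

```python
import math


def nozzle_mass_flow(c_d, d_throat, d_pipe, dp, rho, epsilon=1.0):
    """Mass flow (kg/s) through a flow nozzle.

    q_m = C / sqrt(1 - beta**4) * epsilon * (pi / 4) * d**2 * sqrt(2 * dp * rho)
    c_d: discharge coefficient C; d_throat, d_pipe: diameters (m);
    dp: differential pressure (Pa); rho: upstream density (kg/m^3);
    epsilon: expansibility factor (1.0 for a liquid such as feed-water).
    """
    beta = d_throat / d_pipe
    throat_area = math.pi / 4.0 * d_throat ** 2
    velocity_of_approach = 1.0 / math.sqrt(1.0 - beta ** 4)
    return c_d * velocity_of_approach * epsilon * throat_area * math.sqrt(2.0 * dp * rho)


def discharge_coefficient(reference_flow, d_throat, d_pipe, dp, rho):
    """Back-calculate C at one calibration point: reference flow / ideal flow."""
    ideal_flow = nozzle_mass_flow(1.0, d_throat, d_pipe, dp, rho)
    return reference_flow / ideal_flow


def pipe_reynolds(mass_flow, d_pipe, mu):
    """Pipe Reynolds number Re_D = 4 * q_m / (pi * D * mu); mu in Pa*s."""
    return 4.0 * mass_flow / (math.pi * d_pipe * mu)


# Hypothetical calibration point (illustrative values only).
flow = nozzle_mass_flow(0.99, d_throat=0.15, d_pipe=0.30, dp=50e3, rho=900.0)
re_d = pipe_reynolds(flow, d_pipe=0.30, mu=1.4e-4)
print(f"flow = {flow:.1f} kg/s, Re_D = {re_d:.3g}")
```

With a 0.15 m throat in a 0.30 m pipe (beta = 0.5), a 50 kPa differential pressure and a density of 900 kg/m³, the sketch gives roughly 171 kg/s at a pipe Reynolds number of about 5.2 million.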

The Thermal Heat Rate (THR) performance test will be carried out to: (a) provide performance data in the form of an input/output (I/O) equation, (b) determine the I/O curves that are primarily used to calculate the total and incremental running costs, (c) discover deficiencies in the feed-water system, extraction line pressure drops and other information relevant to the turbine cycle, and (d) provide benchmark data for station performance reference and monitoring, and for Analog Input (AI) and Computer Input (CI) values.
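The I/O curve in item (b) can be illustrated with a least-squares fit. The sketch below assumes a quadratic curve, H(P) = a + bP + cP², relating heat input H to unit output P (a common form for I/O curves, though not necessarily the exact equation used in this project). From the fitted coefficients it derives the average heat rate H/P and the incremental heat rate dH/dP = b + 2cP, the quantity used for incremental running cost, and it reports the R² of the fit. The units (MW, GJ/h) and the test readings are hypothetical; only the Python standard library is used.

```python
def fit_io_curve(mw, heat_in):
    """Least-squares fit of H(P) = a + b*P + c*P**2; returns (a, b, c).

    P is scaled to u = P / max(P) before solving the 3x3 normal equations,
    which keeps them well conditioned; b and c are then converted back
    to the original units.
    """
    k = max(mw)
    us = [p / k for p in mw]
    s = [sum(u ** n for u in us) for n in range(5)]
    t = [sum(u ** n * h for u, h in zip(us, heat_in)) for n in range(3)]
    m = [[s[0], s[1], s[2], t[0]],
         [s[1], s[2], s[3], t[1]],
         [s[2], s[3], s[4], t[2]]]
    # Gaussian elimination with partial pivoting on the augmented matrix.
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            f = m[r][col] / m[col][col]
            for j in range(col, 4):
                m[r][j] -= f * m[col][j]
    x = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):  # back substitution
        x[r] = (m[r][3] - sum(m[r][j] * x[j] for j in range(r + 1, 3))) / m[r][r]
    a, b_u, c_u = x
    return a, b_u / k, c_u / k ** 2


def r_squared(mw, heat_in, coeffs):
    """Coefficient of determination (R^2) of the fitted curve."""
    a, b, c = coeffs
    mean = sum(heat_in) / len(heat_in)
    ss_res = sum((h - (a + b * p + c * p * p)) ** 2 for p, h in zip(mw, heat_in))
    ss_tot = sum((h - mean) ** 2 for h in heat_in)
    return 1.0 - ss_res / ss_tot


def heat_rates(coeffs, p):
    """Average heat rate H(P)/P and incremental heat rate dH/dP at output P."""
    a, b, c = coeffs
    return (a + b * p + c * p * p) / p, b + 2.0 * c * p


# Hypothetical loading-test readings: output in MW, heat input in GJ/h,
# so heat rates come out in GJ/MWh.
mw = [200.0, 300.0, 400.0, 500.0]
heat_in = [2185.0, 3075.0, 4025.0, 4995.0]
coeffs = fit_io_curve(mw, heat_in)
hr, ihr = heat_rates(coeffs, 500.0)
print(f"R^2 = {r_squared(mw, heat_in, coeffs):.4f}, HR = {hr:.3f}, IHR = {ihr:.3f} GJ/MWh")
```

The quadratic form is chosen only because it gives an incremental heat rate that rises linearly with load; a different curve form would change `heat_rates` but not the fitting approach.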

Another key purpose of this project is to use Programmable Logic Controllers (PLC) and Human-Machine Interface (HMI)/Supervisory Control and Data Acquisition (SCADA) systems to justify replacing aged instrumentation and controls, and to allow the system to evolve with time through a computerized network. The PLCs will be linked to the SCADA computer through a data bus interface that will be a part of the system validation, verification and integration network.


The data for the SCADA computer will be extracted from the existing system through the tests described here. Each Data Acquisition Hardware (DAH) Input/Output (I/O) maintenance screen will display the following items for each analog signal: (a) module ID number, (b) I/O description, (c) current voltage reading and (d) current engineering value, including units. Additional information will identify the applicable DAH unit and the panel location of the hardware. The DAH I/O maintenance display screen will encompass all Data Extraction System (DES) inputs obtained directly from the dedicated DAH connected to the field instrumentation. The Digital Control Computer (DCC) I/O maintenance display screen will encompass all DES inputs obtained from DCC-X through the Gateway-X computer. Only one screen of data will be viewed at a time.
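The screen items listed above can be sketched as a small record type. The sketch assumes a linear transmitter whose live-zero voltage signal (1 to 5 V here) maps onto an engineering range; the module ID, tag description and 0 to 20 MPa range are hypothetical, and the real DAH scaling and screen layout may differ.

```python
from dataclasses import dataclass


@dataclass
class AnalogPoint:
    module_id: str    # hypothetical DAH module ID
    description: str  # I/O description shown on the screen
    v_low: float      # signal voltage at the bottom of the range (V)
    v_high: float     # signal voltage at the top of the range (V)
    eu_low: float     # engineering value at v_low
    eu_high: float    # engineering value at v_high
    units: str

    def to_engineering(self, volts: float) -> float:
        """Linear interpolation from signal voltage to engineering units."""
        fraction = (volts - self.v_low) / (self.v_high - self.v_low)
        return self.eu_low + fraction * (self.eu_high - self.eu_low)


def screen_row(point: AnalogPoint, volts: float) -> str:
    """One line of the maintenance screen: module ID, I/O description,
    current voltage reading and current engineering value with units."""
    value = point.to_engineering(volts)
    return (f"{point.module_id:<8} {point.description:<26} "
            f"{volts:6.3f} V {value:9.2f} {point.units}")


fw_pressure = AnalogPoint("AI-0107", "Feed-water header pressure",
                          1.0, 5.0, 0.0, 20.0, "MPa")
print(screen_row(fw_pressure, 3.0))
```

A 3.0 V reading sits halfway across the 1 to 5 V span, so the row shows 10.00 MPa.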

This project uses a work management and project management software program known as PASSPORT.

Some of the commonly used tasks in PASSPORT are: initiate work request, expedite work order, work planning, scheduling activities, work order completion, work report, equipment history analysis, calibration support, analysis activities, material inventory and reliability centered maintenance.

This program provides a wide range of panels and interfaces, such as the project work details and project management interfaces. The project work schedule detail panel helps us identify and track project work, project management development and maintenance summary tasks.

The project manager/SE also uses this panel to set and review dates associated with major project events, milestones and tasks during the project. This panel also supports the entry of planned labour and work percent-completion information, which is used for plan variance reporting and analysis. Furthermore, scheduled percent-completion information is calculated and displayed based on the dates entered. Summary tasks may be associated with an activity, a user-defined field used to categorize the project work task. This panel also carries a field that relates project work to project budgets and actual costs. In addition, PASSPORT provides an interface panel to upload and download scheduled and selected tasks to Microsoft (MS) Project.
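PASSPORT's internal calculation is not described here, so the following is only a sketch under an assumed rule: scheduled percent complete grows linearly from the planned start date to the planned finish date, and the plan variance is the entered (actual) percent complete minus that scheduled value. The dates and percentages are hypothetical.

```python
from datetime import date


def scheduled_percent_complete(start: date, finish: date, as_of: date) -> float:
    """Percent of the planned duration elapsed at `as_of`, clamped to 0-100.
    Assumes work is planned to progress linearly between start and finish."""
    total_days = (finish - start).days
    if total_days <= 0:
        return 100.0 if as_of >= finish else 0.0
    elapsed_days = (as_of - start).days
    return max(0.0, min(100.0, 100.0 * elapsed_days / total_days))


def plan_variance(actual_pct: float, start: date, finish: date, as_of: date) -> float:
    """Entered percent complete minus scheduled percent complete;
    a negative value means the task is behind plan."""
    return actual_pct - scheduled_percent_complete(start, finish, as_of)


# Hypothetical task: planned for March 2010, reported 40% complete mid-month.
start, finish = date(2010, 3, 1), date(2010, 3, 31)
print(plan_variance(40.0, start, finish, date(2010, 3, 16)))
```

Halfway through a 30-day task the scheduled value is 50%, so a reported 40% gives a variance of -10 percentage points.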


1.1 ConOps

The Problem Space

Figure 1.1 Context of a Power Plant

As shown in Figure 1.1 above, the Sponsor (Stakeholder) needs the system performance upgrade to:

(a) Minimize forced outages

(b) Address the concerns of the End-Users

(c) Meet and exceed the various standards set by the industry

(d) Meet the needs of the professional organizations that regulate day-to-day system operations

(e) Address the public outcry over lost revenue and inadequate system performance

(f) Address the findings of an independent Technical Review Audit group

(g) Make the evolutionary changes that will allow the system to evolve with time, such as Human-Machine Interfaces (HMI), Man-Machine Interfaces (MMI), Programmable Logic Controllers (PLC) and SCADA

(h) Maximize the system output capacity in megawatts and revenue

(i) Extend the life cycle of the system


The Sponsor will realize value from the system's upgraded performance and improved operating conditions. A Technical Review Audit group was used to assess the problems.

Employer: The sponsor typically relies on the employer to manage the project that will improve the system. Furthermore, both sponsor and employer share the risks inherent in making the necessary changes to improve the system.

Project: The project is where time, schedule, resources, competence, goals, status reviews and task activities come together to yield the required results within a given Cycle Time (CT). The project is also seen as the carrier that will deliver the required changes to the system.

Practitioners of Systems Engineering (PSEs): The PSEs play a major role in the project as the employees who collaboratively design the project and document the scheme as a Systems Engineering Management Plan (SEMP). The PSEs will run the project together with other work groups that provide non-SE inputs.

Power Plant “As Is”

In this project, the value added by PSEs is considered latent until the overall project produces the desired output and effects the change that will improve the “As Is” system.

The Power Plant context includes at least five types of providers:

(1) Industry Standards (ASME)

(2) SE relevant standards bodies

(3) Professional organizations

(4) On the job training (OJT)

(5) Manufacturers of the different sub-systems

These five types of providers form an integral part of the tools and skills required by PSEs to add the desired value that will change the “As Is” power plant into a reliable source of power output and revenue. The output will be evaluated through Measures of Effectiveness (MOE), which are discussed in detail in this project for the major system components.

The major components of interest are: boiler, turbine, feed-water and condenser.

These components were chosen because they represent the primary components of the power plant system. Any impairment of the primary components, such as the boiler, turbine and condenser/feed-water system, will reduce capacity or force a shutdown. The same cannot be said of other subsystem components, such as turbo-control and governor control, because the system carries multiple layers of redundancy and spare modules that can easily be substituted or set to operate in manual mode without impairing the final product (output power). In addition, by focusing on these upper-level components, the lower-level components will also be addressed in the process. For example, the condenser is the final destination for most of the steam produced by the boiler. The condenser removes the latent heat from the steam and turns it back into water, which is returned to the boiler so that the cycle can continue. All the lower-level subsystems between the condenser and the boiler must be fully functional for the boiler to deliver the required steam to the turbine. Therefore, if the requirements for the boiler and turbine are met, we know that the related subsystems are functional.

Addressing the problems discovered by the Technical Review Auditors

The problems will be addressed in the following ways:

(a) Using more than one source for critical data

(b) System analysis and evaluation of the primary components, using the present instrumentation in conjunction with a new computerized system and interfaces that allow advanced trending and remote data monitoring

(c) Providing guidelines under which day-to-day and periodic testing will become the new standard

(d) Continuous improvement of maintenance practices, directed through PASSPORT (work management software)

(e) Breaking down all work orders into work tasks, each followed by a work report for trending, history and maintenance traceability


(f) Reducing the forced loss rate to less than 5% annually to optimize revenue and extend the life cycle of systems across the various plants
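Item (f) sets a numeric target, so it helps to be explicit about the arithmetic. The sponsor's exact definition of forced loss rate is not given here; the sketch assumes FLR is the energy lost to forced outages and forced deratings divided by the energy the unit could have produced outside planned outages (capacity times period hours, less planned-outage losses). The unit size and loss figures are hypothetical.

```python
def forced_loss_rate(capacity_mw, period_hours, forced_loss_mwh, planned_loss_mwh=0.0):
    """Forced loss rate (%) under the assumed definition:
    forced energy losses / (reference energy - planned-outage losses)."""
    reference_mwh = capacity_mw * period_hours
    available_mwh = reference_mwh - planned_loss_mwh
    if available_mwh <= 0:
        raise ValueError("no energy expected outside planned outages")
    return 100.0 * forced_loss_mwh / available_mwh


TARGET_FLR = 5.0  # percent, from item (f)

# Hypothetical 500 MW unit over one year with roughly five weeks of planned outage.
flr = forced_loss_rate(500.0, 8760.0, forced_loss_mwh=150_000.0, planned_loss_mwh=438_000.0)
print(f"FLR = {flr:.2f}% -> {'meets' if flr < TARGET_FLR else 'misses'} the 5% target")
```

With these numbers the FLR comes out at about 3.8%, inside the 5% target.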

The forecast benefits of the project are:

(a) To keep forced outages to less than 5%

(b) To meet the End-Users' peak power demand

(c) To meet and exceed industry standards

(d) To meet the needs of the regulators

(e) To generate the revenue that the system is capable of producing

(f) To address the findings of the Technical Review Audit (TRA)

(g) To have a new computer-based system interface that will evolve with time

The tests & controls that will be used to deliver the forecasted benefits are:

(a) Boiler nozzle calibration test & gross boiler efficiency test

(b) Thermal Heat Rate (THR) test

(c) Supervisory Control and Data Acquisition (SCADA)

(d) Programmable Logic Controller & Human Machine Interface (HMI)

The expected relationships among the tests to be performed

The test parameters are interdependent. For example, the boiler flow nozzle calibration test directly affects the Gross Boiler Efficiency Test (GBET): the feed-water flow measured by the nozzle is an input to the boiler efficiency calculation, and the flow computed from the nozzle differential pressure depends on the water density, which in turn depends on temperature. Furthermore, if gross boiler efficiency is low, the thermal heat rate will be higher than normal. Therefore, to produce optimum power output, all three tests (flow nozzle calibration, GBET and THR) will have to show maximum performance.
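The two links in this paragraph can be made concrete with a simplified sketch. It assumes the input-output (direct) method for gross boiler efficiency, heat absorbed by the feed-water/steam divided by fuel heat input, ignoring reheat, blowdown and attemperation flows, and it uses the standard relation that gross unit heat rate equals turbine cycle heat rate divided by boiler efficiency. All flows, enthalpies and heating values are hypothetical.

```python
def boiler_efficiency(fw_flow, h_steam, h_feedwater, fuel_flow, hhv):
    """Gross boiler efficiency (fraction), input-output method, simplified.

    fw_flow, fuel_flow in kg/s; h_steam, h_feedwater, hhv in kJ/kg.
    """
    heat_absorbed_kw = fw_flow * (h_steam - h_feedwater)
    fuel_input_kw = fuel_flow * hhv
    return heat_absorbed_kw / fuel_input_kw


def unit_heat_rate(turbine_heat_rate, eta_boiler):
    """Gross unit heat rate = turbine cycle heat rate / boiler efficiency."""
    return turbine_heat_rate / eta_boiler


eta = boiler_efficiency(400.0, 3300.0, 1100.0, fuel_flow=40.0, hhv=25_000.0)
# A nozzle reading 1% high inflates the computed efficiency by the same 1%.
eta_biased = boiler_efficiency(404.0, 3300.0, 1100.0, fuel_flow=40.0, hhv=25_000.0)
# Lower boiler efficiency raises the heat rate for the same turbine cycle.
for e in (eta, 0.85):
    print(f"eta = {e:.3f}: unit heat rate = {unit_heat_rate(8000.0, e):.0f} kJ/kWh")
```

Here the efficiency is 0.88; dropping it to 0.85 raises the gross unit heat rate from about 9,091 to about 9,412 kJ/kWh for an assumed turbine heat rate of 8,000 kJ/kWh.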


The ConOps framework is summarized in Figure-1.2 below, with the three major players interconnected to solve the common problem. The three bodies shown will be elastic in their decision-making scheme in order to create non-hierarchical relationship patterns among them. The decision-making data derived from the independent TRA group will lead to choices, options and appropriate actions to achieve the desired goal.

Figure-1.2 ConOps Context Framework.


1.2 Product Improvement Strategies

Complex power plant systems do not have to be static in their operational configurations, and the need for change can come from sources such as software engineering. By applying software engineering technology, the operational configuration can be changed in many ways. The major problem with configuration change is the risk associated with both the predicted changes and the unknown changes to a large, complex system. Acting on the Technical Review Audit findings in the ConOps, this project will use available technology that allows the system to perform better and be more cost-effective throughout its life cycle.

In addition to improving system performance, the project will also focus on reliability and maintainability through data obtained from performance testing and documentation.

Performance testing and documentation will be done through re-validation and verification of the existing systems. This project will adopt the Pre-Planned Product Improvement (P3I) approach to establish effective and complete interface requirements to upgrade and improve the existing system performance. See Figure-1.3a below. Using the P3I approach will generally increase the initial cost, configuration management activity and technical complexity. However, the big payoff of this method is that it provides modular equipment upgrades with flexible interface connectivity and open systems development.

A Test Plan will be in place to validate the existing system. This plan will cover the causes of test failures (failure of the system under test, errors in expected results, and failures due to the test script or procedure), the recording of failures in the test report, and tests deferred to subsystem testing. An integration test plan will integrate the validated data into a network of computers, some operating under different protocols and software programs, such as Microsoft C/C++ and Microsoft Visual Basic (VB). A configuration plan will be in place to examine the various computer systems within the network and make sure that their operation meets the baseline requirements.
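The failure categories named in the Test Plan map naturally onto a small data structure. The sketch below, with hypothetical test IDs and field names, records a disposition for each test and produces the tallies and the list of open (non-passing) items that would go into the test report.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Disposition(Enum):
    PASSED = "passed"
    SYSTEM_FAILURE = "failure of system under test"
    EXPECTED_RESULT_ERROR = "error in expected results"
    PROCEDURE_FAILURE = "failure due to test script or procedure"
    DEFERRED = "deferred to subsystem testing"


@dataclass
class ResultRecord:
    test_id: str  # hypothetical test identifier
    disposition: Disposition
    note: str = ""


def summarize_results(records):
    """Count each disposition and list every non-passing test for the report."""
    counts = Counter(r.disposition for r in records)
    open_items = [r for r in records if r.disposition is not Disposition.PASSED]
    return counts, open_items


records = [
    ResultRecord("T-01", Disposition.PASSED),
    ResultRecord("T-02", Disposition.PASSED),
    ResultRecord("T-03", Disposition.PROCEDURE_FAILURE, "step 4 valve line-up wrong"),
    ResultRecord("T-04", Disposition.DEFERRED, "retest at subsystem level"),
]
counts, open_items = summarize_results(records)
for item in open_items:
    print(item.test_id, item.disposition.value, item.note)
```

Keeping the disposition as an enumeration, rather than free text, makes the failure-cause tallies in the report consistent from one test campaign to the next.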


Figure-1.3a Pre-Planned Product Improvement

By embracing the concept that equipment fails when its capability drops below the desired performance, this P3I systems engineering management scheme will target the causes of failures to prevent any drop in the performance of the power plant (system). In order to control performance, this project will test, monitor and analyze the engineering data extracted from the system. The monitoring and analysis will be done through a network of computers.


Figure-1.3b Product Improvement Template

Figure-1.3b above is used as a template to guide the development of this project to meet the needs of the external and internal stakeholders. PASSPORT is the hub that drives the process, determining what gets done and the sequence in which all work tasks are done. Furthermore, PASSPORT provides an indispensable traceability path that tracks the status of a device from the manufacturer, through identification codes such as Cat ID, UTC number and Material Request (MR) number, to the final work report. The business LAN is not a physical link to the process plan. However, every task carried out must have a numbered work order, and at the end of each day a work report must be filed against the work order to support the task, even if the task is incomplete.


Open System Approach to the Systems Engineering Process

Figure-1.4 – Integrated and Process Development

This open system approach combines business and technical components to develop a process that results in a system whose components are easier to change, upgrade and replace. This approach allows the project to develop and produce flexible interfaces and software programs that make the most of currently available, competitive commercial products, evolve with time and ease future upgrades. From a business standpoint, this type of operation will directly reduce the system’s life cycle cost. Meanwhile, from a technical perspective, the emphasis is on interface control, modular design and design upgrade.

Furthermore, incremental upgrades are tied to the growing trend in new technology capability and technical maturity in areas such as Human-Machine Interfaces and client servers. This concept also adds advantages that will benefit this project, such as: (a) a wide range of commercial products that are tested to various industrial standards, and (b) the ability to replace control equipment made obsolete by a lack of spare parts with interfaces common to the industry standard. Moreover, the replacement control equipment can be modular, and will therefore minimize system downtime and enhance productivity, as recommended by the Technical Review Audit group. Figure 1.4 above summarizes the open system approach.


1.3 System Engineering Management Plan (SEMP)

The SEMP layout in Figure-1.5 is a top-level management plan with technical components; it forms a living document that integrates the project activities, operation and expansion. The Project Management Plan, shown as a matrix in Table-1.1, covers the various phases, such as Project Identification, Project Initiation, Project Definition and the Execution Phase. This project uses the SEMP as a guide to answer questions about the problem we are trying to solve, the sensitivity parameters that will influence the process dynamics, and which metrics will be used to measure technical progress.

System Engineering Management Plan (SEMP) Layout

Figure-1.5 System Engineering Management Plan (SEMP) Layout


System Engineering Development Environment (SEDE) Plan

Figure-1.6 System Engineering Development Environment

The objective of having a SEDE plan is to support the tools and methods with input from the process and the environment (see Figure 1.6 above). In addition, the Systems Engineer will ensure that all the processes and environments on this project are integrated and used properly.

The control and integration of the SEDE embrace the management structure and the Systems Engineering Policy that create the process, which in turn feeds the tools and methods. On the other hand, the environment supports the tools and methods with the required utilities, such as computer facilities, laboratory facilities, communication and networking. In addition, the SEDE fills gaps that exist in the SEMP.

In this project the environment is anchored by a work management software package known as PASSPORT. This software covers every facet of work management, such as material inventory, staging, material tracking, work procedures, work orders, work tasks and work reports. The processes and methods are tailored to the different tasks performed here, while the tools are common to the system’s computerized network, which forms an integrated process for the complete system. The environment will provide each task with the required software and tools to complete the task.


The Project Management Plan

Table 1.1 - The Project Management Plan: Project Estimation & Accuracy Matrix

Conceptual stage
Objectives: (a) Screen concept with respect to strategic fit; (b) Identify and clarify needs and select projects that maximize long-term value; (c) Identify high-level resource needs
Estimate accuracy: (a) Up to +/-60% during stage; (b) Target +/-40%

Budgetary stage
Objectives: (a) Establish and list alternatives that meet the need or opportunity; (b) Prioritize based on resource availability and needs in the Life Cycle plan; (c) Develop and get approval for a preliminary business case to proceed
Estimate accuracy: Total project estimate +30% to -15%

Release Quality stage
Objectives: (a) Define the preferred alternative for the Business Case; (b) Prepare the Project Execution Plan and schedule project milestones for approval
Estimate accuracy: (a) Release Quality Estimate +/-15% to -10%; (b) Target to be within +/-5%
Cost tracking & control: Approved definition work is charged to the Project Work Order

Definitive stage
Objectives: (a) Design, build, test & commission; (b) Detailed execution plans to ensure effective conduct and control
Estimate accuracy: Definitive Estimate +/-5%
Cost tracking & control: (a) Actual cost incurred is reported; (b) Cost charged to Work Order

Close Out stage
Objectives: (a) Assess efficiency and effectiveness of the project management process; (b) Feedback drive to achieve continuous improvement
Estimate accuracy: (a) Review estimate quality at each phase; (b) Search out means for improving estimate accuracy
Cost tracking & control: (a) Project complete & put in service; (b) Complete in-service report; (c) Transfer to Fixed Assets; (d) Complete Project Closure Report
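To show what the accuracy bands in Table 1.1 mean in money terms, the sketch below applies three of them (the conceptual target of +/-40%, the budgetary +30%/-15%, and the definitive +/-5%) to a single point estimate. The $2,000,000 figure is hypothetical, and the Release Quality band is left out of this sketch.

```python
# (upper, lower) accuracy bands from Table 1.1, as fractions of the estimate.
ACCURACY_BANDS = {
    "conceptual (target)": (0.40, -0.40),
    "budgetary": (0.30, -0.15),
    "definitive": (0.05, -0.05),
}


def estimate_range(point_estimate, stage):
    """Return the (low, high) cost bounds implied by a stage's accuracy band."""
    upper, lower = ACCURACY_BANDS[stage]
    return point_estimate * (1.0 + lower), point_estimate * (1.0 + upper)


for stage in ACCURACY_BANDS:
    low, high = estimate_range(2_000_000.0, stage)  # hypothetical estimate
    print(f"{stage:<20} ${low:,.0f} to ${high:,.0f}")
```

A budgetary estimate of $2,000,000 therefore allows an outturn anywhere from $1,700,000 to $2,600,000, whereas a definitive estimate narrows this to $1,900,000 to $2,100,000.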


Project Management Process Flow

Figure-1.7 Project Management Process Flow

Figure 1.7 shows the project management process flow. The needs were identified by a Technical Review Audit (TRA) group, as discussed earlier in the ConOps. In addition, special attention will be paid to technology insertion and to the engineering data extracted from the physical system. This data will be incorporated into computer program source code for the purpose of documenting, improving, analyzing and validating the system. The engineering data will be obtained through the series of tests described here. Each test will provide an interface to the computer network, so that the system can be tracked continuously. The Initiation phase was carried out with internal Stakeholders, external Stakeholders and the Project Team coming together to share ownership of the project as a group. This group acts on the findings of the TRA group to determine the high-level deliverables, resource requirements, policies, procedures and objectives needed to obtain the project's initial approval. The planning process will define work activities and their sequence, identify work resources, estimate work durations, identify risks, estimate cost and establish a base budget. Furthermore, this planning phase will estimate the project size and produce a schedule with the work breakdown structure (WBS), governed by the System Engineering Management Plan. The planning process is shown below in Figure-1.8.
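Sequencing activities and estimating their durations is what allows the length of a schedule to be calculated. The sketch below is a forward pass of the critical path method, a standard scheduling technique used here only for illustration (the project itself used PASSPORT and MS Project for scheduling). The task names, durations in days and dependencies are hypothetical; `graphlib` requires Python 3.9 or later.

```python
from graphlib import TopologicalSorter


def earliest_finish(durations, predecessors):
    """Forward pass: earliest finish day of every task and the project length.

    durations: {task: days}; predecessors: {task: set of tasks that must finish first}.
    """
    graph = {task: predecessors.get(task, set()) for task in durations}
    finish = {}
    for task in TopologicalSorter(graph).static_order():  # predecessors come first
        start = max((finish[p] for p in predecessors.get(task, ())), default=0)
        finish[task] = start + durations[task]
    return finish, max(finish.values())


def critical_path(durations, predecessors):
    """Walk back from the last-finishing task through zero-slack predecessors."""
    finish, total = earliest_finish(durations, predecessors)
    task = max(finish, key=finish.get)
    path = [task]
    while predecessors.get(task):
        start = finish[task] - durations[task]
        task = next(p for p in predecessors[task] if finish[p] == start)
        path.append(task)
    return list(reversed(path)), total


durations = {"nozzle_calibration": 5, "gbet": 3, "thr_test": 4,
             "software_interface": 10, "validation": 2}
predecessors = {"gbet": {"nozzle_calibration"}, "thr_test": {"gbet"},
                "software_interface": {"nozzle_calibration"},
                "validation": {"thr_test", "software_interface"}}
print(critical_path(durations, predecessors))
```

In this made-up network the software interfacing, not the test sequence, sets the 17-day project length, which is the kind of insight the planning phase is meant to surface.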


Planning Phase

Figure-1.8 Planning Process

Figure 1.8 shows the planning process, which includes a series of activities such as the Boiler Feed-Water flow nozzle calibration, the Gross Boiler Efficiency Test, the Thermal Heat Rate test and software program interfacing. These steps will result in a complete management plan. The process activities will:

(a) Yield a plan that will define how the scope, schedule, deliverables, resources and cost will meet the project objectives

(b) Establish a subsidiary management plan to define a given area of the project. In this project, the subsidiary management plan is the software development plan.

Monitoring & Control process

This group will develop project performance reports, review and track project status as it relates to performance criteria such as scope, cost, schedule and quality assurance. This group will also develop and manage a corrective action plan that will keep the project on track to meet the objectives.

Executing & Close Phase

The Executing Phase will be carried out with regular schedule and requirement reviews in order to resolve any requirement issues, while the Close Phase will review the lessons learned in all of the phases and sign off on project completion.


Risk Management

Figure-1.9 The Essential Elements of Risk Management

Figure-1.10 Risk Management Control and Feedback

Adapted from DoD


Table-1.2 Preliminary Judgements Regarding Risk Classification

Low Risk
Consequences: Insignificant cost, schedule or technical impact
Probability of occurrence: Little or no impact likelihood
Extent of demonstration: Full-scale, integrated technology has been demonstrated previously
Existence of capability: Capability exists in known product; requires integration into new system

Moderate Risk
Consequences: Affects program objectives, cost or schedule; however, cost, schedule and performance are achievable
Probability of occurrence: Probability sufficiently high to be a concern to management
Extent of demonstration: Has been demonstrated, but design changes and tests in relevant environments are required
Existence of capability: Capability exists, but not at the performance levels required for the new system

High Risk
Consequences: Significant impact, requiring reserve or alternate courses of action to recover
Probability of occurrence: High likelihood of occurrence
Extent of demonstration: Significant design changes required in order to achieve required/desired results
Existence of capability: Capability does not currently exist

Adapted from DoD

Figure-1.9 shows the four essential elements of risk management, which are used to plan, identify and analyze, mitigate, and control risk. These elements provide a continuous feedback process that is used to monitor and control the risk management process. Figure-1.10 shows an integrated risk management system in which feedback control links these four elements to the risk classification activities in Table-1.2. By identifying risks and analyzing sources of uncertainty and potential risk drivers, uncertainty can be transformed into defined risks that could affect the life cycle. This project aims to minimize or avoid risk, because downtime on units of this capacity (500/950 MW) can cost a very large sum of money. The matrix in Table-1.2 is used to classify each risk as low, moderate or high. To avoid risk, the project will remove requirements that lead to uncertainty and a high probability of risk. The control process is built into the software development plan, so that the design process follows a low-risk pattern.

For example, software program insertion is considered to be high risk. Therefore, a prototype software program model was used to provide a mock-up of the existing power plant system in order to iron out the bugs and minimize the risk.
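To make the use of Table-1.2 concrete, the following Python sketch rates a risk against the table's four criteria and combines the ratings. The combining rule is an assumption for illustration (the overall level is taken as the most severe of the four ratings); the source does not state how the criteria are combined, and the ratings given to the software insertion example are hypothetical.

```python
from enum import IntEnum


class Risk(IntEnum):
    """Risk levels of Table-1.2, ordered so that max() returns the most severe."""
    LOW = 1
    MODERATE = 2
    HIGH = 3


# The four criteria (columns) of Table-1.2.
CRITERIA = (
    "consequences",
    "probability_of_occurrence",
    "extent_of_demonstration",
    "existence_of_capability",
)


def classify(ratings: dict[str, Risk]) -> Risk:
    """Overall risk level under an assumed worst-case rule: the overall
    level is the highest level assigned to any of the four criteria."""
    missing = [c for c in CRITERIA if c not in ratings]
    if missing:
        raise ValueError(f"missing ratings for: {missing}")
    return max(Risk(ratings[c]) for c in CRITERIA)


# Hypothetical ratings for the software program insertion example.
software_insertion = {
    "consequences": Risk.HIGH,
    "probability_of_occurrence": Risk.MODERATE,
    "extent_of_demonstration": Risk.MODERATE,
    "existence_of_capability": Risk.LOW,
}
print(classify(software_insertion).name)
```

A worst-case rule is conservative: a single high rating is enough to classify the item as high risk, which matches the decision to prototype the software before insertion.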


1.4 Scope

The scope as it relates to the Systems Engineer is to:

(a) Ensure that the correct technical tasks get done during the development and planning phases.

(b) Develop a total system solution that balances cost, schedule, performance and risk

(c) Develop and track technical information needed for decision making.

(d) Develop verification and technical solution procedures to satisfy customer requirements.

(e) Develop baseline and configuration control

(f) Develop a system that can produce economically throughout the full life cycle

Scope and Structure of Document

The Standards and Procedures Guidebook (SPG) for improving the system, as it relates to the three groups of power plants, follows an open Systems Engineering process. This guide is based on the related activities and recommendations of the TRA group findings shown in the ConOps.

The SPG provides descriptions of:

(a) Validated data from Boiler feed-water flow nozzle, GBET and THR will be analyzed and displayed through computerized network

(b) New technology insertion, such as Supervisory Control and Data Acquisition (SCADA), and software programs written in Microsoft C/C++ and Visual Basic

(c) The software development plan to track system performance

(d) The software engineering model to mock the current system function baseline

(e) The software engineering process to run different programs

(f) The software organization and interfaces

(g) The verification & integration test plan

(h) A fully qualified, verified and integrated system to meet the needs of the sponsor and the various stakeholders

(i) Training of maintainers and operators


1.5 System Engineering Process (Road Map for this Project)

Figure-1.11 System Engineering Process

The System Engineering Process as outlined in Figure 1.11 includes the following:

Process Input: Customer Needs/Objectives/Requirements

(a) Missions

(b) Measures of Effectiveness

(c) Environments

(d) Constraints

Process Output (Development Level Dependent):

(a) Decision Data Base

(b) System/Configuration Item Architecture

(c) Specification and Baseline

Related Terms:

Customer = Organization responsible for Primary Functions

Primary Functions = Development, Production/Construction, Verification, Deployment, Operations, Support and Disposal

System Elements = Hardware, Software, Personnel, Service and Techniques


1.6 Capabilities and Constraints

Group-1 Coal-Fired Capabilities:

(1) Plants are capable of producing 510 MW at Maximum Capacity Rating (MCR)

(2) Using the ASME PTC 6 code standard, plants should be able to:

(a) operate with 4 pulverizing mills, reduced throttle pressure, throttle temperature, full isolation and 4 Governor Valves Wide Open (4VWO) to produce 375 MW at 74% High Pressure (HP) efficiency

(b) operate with 5 pulverizing mills, reduced throttle pressure, throttle temperature, full isolation and 4 Governor Valves Wide Open (4VWO) to produce 395 MW at 77% High Pressure (HP) efficiency

(c) operate at the 4VWO condition while burning a coal blend mixed in the ratio 85/15 by mass

Group-2 Gas- or Oil-Fired Capabilities:

Start-up and shut-down in less than 4 hours

Constraints

Constraint: Design parameters such as the fineness of atomization and the size and shape of the fuel oil particles are limited by:

(a) Burner capacity, which depends on fuel oil pressure and on nozzle diameter

(b) Spiral pitch of the fuel oil, which is an integral part of the nozzle diameter

(c) Flow resistance due to turbulent flow and friction

(d) The throughput capacity of the mechanical burner, which is limited by the flow-rate through the nozzle. This flow-rate is directly proportional to the square root of the fuel oil pressure.

(e) The narrow throttling range of an adjustable mechanical atomizer: doubling the pressure increases the flow-rate by only 40%.
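Constraints (d) and (e) both follow from the square-root relation between flow-rate and fuel oil pressure. A minimal sketch, assuming an ideal square-root law with a constant flow coefficient, shows why doubling the pressure raises the flow by only about 41% (the "40%" quoted above) and why a wider flow turndown needs a much larger pressure range. The function names are illustrative.

```python
import math


def flow_ratio(pressure_ratio: float) -> float:
    """Flow-rate ratio Q2/Q1 of a mechanical (pressure) atomizer for a
    pressure ratio P2/P1, using the square-root law Q ∝ sqrt(P)."""
    if pressure_ratio <= 0:
        raise ValueError("pressure ratio must be positive")
    return math.sqrt(pressure_ratio)


def pressure_ratio_for_turndown(flow_turndown: float) -> float:
    """Pressure ratio needed for a flow turndown Q_max/Q_min.
    Since Q ∝ sqrt(P), the pressure ratio is the turndown squared."""
    return flow_turndown ** 2


increase = (flow_ratio(2.0) - 1.0) * 100.0
print(f"Doubling the pressure raises the flow by {increase:.1f}%")
print(f"A 3:1 flow turndown needs a {pressure_ratio_for_turndown(3.0):.0f}:1 pressure range")
```

The squared relationship is what makes the throttling range narrow: every doubling of the flow range requires a fourfold pressure range.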


Group-3 Nuclear Capabilities:

Can operate at 36% efficiency, which is above the industry average for power plants

Constraints

(a) Start-up and shut-down are limited by the K-value (the neutron multiplication factor), which defines the reactivity ρ:

$$\rho = \frac{K - 1}{K}$$

Starting up a CANDU reactor can be a very long process, taking 7 to 14 days if the outage was forced by an unplanned event during normal operation. The K-value defines three reactor states: supercritical, critical and subcritical.

A reactor with K > 1 has a neutron population that is increasing with time. Since reactor power is directly proportional to the neutron level in the reactor, K > 1 means that reactor power is increasing with time, and the reactor is said to be supercritical. When K = 1, power is constant and the reactor is said to be critical. When K < 1, reactor power is decreasing with time and the reactor is said to be subcritical.
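The reactivity equation and the three reactor states can be expressed as a small Python sketch. The tolerance used to decide that K equals 1 is a hypothetical parameter added for floating-point comparison; it is not a plant setting.

```python
def reactivity(k: float) -> float:
    """Reactivity rho = (K - 1) / K for a multiplication factor K > 0."""
    if k <= 0:
        raise ValueError("K must be positive")
    return (k - 1.0) / k


def reactor_state(k: float, tol: float = 1e-9) -> str:
    """Classify the reactor from K; |K - 1| <= tol counts as critical.
    The tolerance is a hypothetical floating-point allowance."""
    if abs(k - 1.0) <= tol:
        return "critical"        # neutron population and power constant
    return "supercritical" if k > 1.0 else "subcritical"


for k in (1.005, 1.0, 0.98):
    print(f"K={k}: {reactor_state(k)}, rho={reactivity(k):+.5f}")
```

The sign of ρ carries the same information as the comparison of K with 1: positive reactivity means rising power, negative means falling power.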

(b) Regulatory constraints, such as those set by the Canadian Nuclear Safety Commission (CNSC), federal environmental standards, and the worldwide industry standards set by the World Association of Nuclear Operators (WANO)

1.7 Functional Requirements:

1. To perform the Thermal Heat Rate (THR) test, using the GBET and the manufacturer's design specifications as a guide

2. To express the THR test results as a net input/output (I/O) characteristic curve based on the quadratic equation: Input (GJ/h) = A + B*MWnet + C*MWnet^2

3. To measure the steam at the turbine stop valve

4. To measure the steam flow to the reheater

5. To measure the enthalpy of the steam supplied to the IP turbine before the intercept valve

6. To measure the enthalpy of the feed water at the HP heater outlet

7. To measure the enthalpy of the steam at the turbine exhaust

8. To measure the net generated power output

9. To calculate the electrical power drawn by the boiler feed pump

10. To calculate the following using the actual test conditions:

(a) Main steam pressure

(b) Re-heat temperature

(c) Re-heat pressure drop

(d) Extraction line pressure drop

(e) Turbine internal efficiency
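Requirement 2 can be illustrated with a short Python sketch that evaluates the quadratic I/O curve and derives the average and incremental heat rates from it. The coefficients below are hypothetical, not test results, and the unit conversion assumes Input in GJ/h and output in MW (1 GJ/MWh = 1000 kJ/kWh).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IOCurve:
    """Net I/O characteristic: Input (GJ/h) = A + B*MWnet + C*MWnet**2."""
    a: float  # GJ/h
    b: float  # GJ/MWh
    c: float  # GJ/(MW^2 h)

    def heat_input(self, mw_net: float) -> float:
        """Heat input in GJ/h at the given net output in MW."""
        return self.a + self.b * mw_net + self.c * mw_net ** 2

    def heat_rate(self, mw_net: float) -> float:
        """Average net heat rate in kJ/kWh (Input / MWnet, x1000)."""
        if mw_net <= 0:
            raise ValueError("net output must be positive")
        return self.heat_input(mw_net) / mw_net * 1000.0

    def incremental_heat_rate(self, mw_net: float) -> float:
        """Slope dInput/dMWnet = B + 2*C*MWnet, in kJ/kWh."""
        return (self.b + 2.0 * self.c * mw_net) * 1000.0


# Hypothetical coefficients, for illustration only.
curve = IOCurve(a=300.0, b=8.0, c=0.002)
for load in (300.0, 440.0):
    print(f"{load:.0f} MW: input {curve.heat_input(load):.1f} GJ/h, "
          f"heat rate {curve.heat_rate(load):.1f} kJ/kWh, "
          f"incremental {curve.incremental_heat_rate(load):.1f} kJ/kWh")
```

The average heat rate is the figure usually compared against design; the incremental heat rate (the slope of the curve) is what matters when deciding how to share load between units.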

1.8 Verification Process

The verification process will follow the sequence shown in the top-down flow diagram below. This will be done with the System Classification Index (SCI), whose purpose is to assign every device to a subsystem using PASSPORT (Work Management Program). Every device is identified in the format Unit-System-Device; for example, Boiler Feed Pump No. 1 (BFP) in Unit 3 would have the SCI 3-1101-BFP #1. For calibrated devices, the procedure, specification and data sheet are available in PASSPORT. See Appendix-B for a general overview of the calibration setup and procedures.
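The Unit-System-Device format lends itself to simple parsing and formatting. The Python sketch below assumes that the first two hyphens are the field separators and that the unit is an integer; the class and function names are hypothetical and do not represent PASSPORT's actual data model.

```python
from typing import NamedTuple


class SCI(NamedTuple):
    """System Classification Index in the form Unit-System-Device."""
    unit: int
    system: str
    device: str

    def __str__(self) -> str:
        return f"{self.unit}-{self.system}-{self.device}"


def parse_sci(tag: str) -> SCI:
    """Split a tag such as '3-1101-BFP #1' into its three fields.

    Only the first two hyphens are treated as separators, so a device
    name may itself contain hyphens."""
    parts = tag.split("-", 2)
    if len(parts) != 3 or not all(p.strip() for p in parts):
        raise ValueError(f"not a Unit-System-Device tag: {tag!r}")
    unit, system, device = (p.strip() for p in parts)
    return SCI(int(unit), system, device)


tag = parse_sci("3-1101-BFP #1")
print(tag.unit, tag.system, tag.device)
print(str(tag))
```

Keeping the system number as a string preserves any leading zeros in system codes, while the unit number is numeric so tags can be sorted by unit.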

This method of verification provides a seamless path to system integration and traceability. In addition, the method uses two persons to confirm that the correct component or device has been identified. One person reads the component/device identification from a live document while placing his or her finger on the device.

The other person verifies that the identification read from the live document corresponds to the device that will undergo maintenance, testing or repair, and gives a verbal yes or no to confirm the physical device.

The two persons then swap roles: the second person reads the live document while pointing to the device. This process is very important in identifying the correct device for verification.


Figure-1.12 Flow Diagram

The flow diagram in Figure-1.12 describes the steps required to effectively verify a complex system such as a power plant. This process is used to verify the functional and physical architecture of a system, including the functional analysis/allocation and synthesis. The physical verification approach is used to coordinate the design, development and test processes. The process also ensures that the requirements are testable and achievable, and it identifies variances and conflicts, allowing the process to meet the system configuration baseline that controls the product breakdown structure.


1.9 Functional Analysis/ Allocation

The goal of this analysis is to move from higher-level requirements to lower-level requirements with a flow plan for traceability. See Figure 1.13 below.

Figure 1.13 Traceability Flow Plan

Input: The output from the requirements analysis

Output: System configuration

Enablers:

1. Various work groups, such as:

(a) Mechanical maintainers

(b) Electro-mechanical maintainers

(c) Electrical and Instrumentation Control Technicians/Technologists

(d) Engineering Support

(e) Assessors & Planners

2. Decision database from calculated input and output data shown on the R-square regression analysis curve

3. Functional flow block diagram and behaviour diagrams

4. Requirement allocation sheet and timelines


Functional Architecture

Figure-1.14 Functional Architecture

This functional requirements allocation (Figure 1.14) documents the link between the allocated functions and the performance requirements of the physical system. It is an indispensable source for traceability analysis and design synthesis. The function numbers match the Functional Flow Block Diagram (FFBD) traceability and indenture numbers. Figure 1.15 shows the FFBD in top-down format, matching the operational sequence of events for the system. Using the test performance data collected from the boiler, turbine and generator, we are able to produce the required output. When the required output meets the system requirements, sub-systems 1.1 through 1.4.1 are also meeting the system requirements.

Furthermore, any single block component in the first- and second-level blocks can cause the system to fail. Each component in the system is given an SCI that identifies the block and each device in the block, such as a pressure switch, flow switch, flow transmitter or temperature transmitter. This establishes a flow diagram with a continuous reference for traceability, integration and verification.


Functional Flow Block Diagram (FFBD)

Figure-1.15 Functional Flow Block Diagram

The objectives of the Functional Flow Block Diagram (FFBD) are to ensure that all life-cycle phases are covered and that all elements of the system are identified and mapped to specific system functions. The numbering scheme is used as an explicit source for system verification. Furthermore, these numbers provide an identification that persists through all functional analysis and allocation activities, from the lowest level to the top level.

See Tables 1.4 and 1.5 Requirements Allocation below.
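The traceability provided by the dotted numbering scheme can be sketched in a few lines of Python: every function number implies its chain of parent functions, so a lower-level function can be traced back to the top level. The helper names are hypothetical, and the rule that each dot marks one indenture level is an assumption based on numbers such as 1.4.1 and 200.1.3 used in this section.

```python
def trace(function_no: str) -> list[str]:
    """Chain of function numbers from the top level down to `function_no`,
    e.g. '1.4.1' -> ['1', '1.4', '1.4.1'] (one level per dot, assumed)."""
    parts = function_no.split(".")
    if not all(p.isdigit() for p in parts):
        raise ValueError(f"not a dotted function number: {function_no!r}")
    return [".".join(parts[: i + 1]) for i in range(len(parts))]


def is_under(child: str, parent: str) -> bool:
    """True if `child` is `parent` or is indentured beneath it.
    Comparing whole levels avoids treating '1.41' as a child of '1.4'."""
    return parent in trace(child)


print(trace("1.4.1"))
print(is_under("200.1.3", "200.1"), is_under("1.41", "1.4"))
```

Comparing complete levels rather than string prefixes is the design choice that keeps the trace correct once function numbers reach two digits at any level.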


Table 1.3 Gantt Timeline

Timeline (Gantt), 5 Years: January 2009 to December 2013

Description | Start Time | Delay from Start | Delay % | Duration | Duration %
Task 1 | 02/2009 | 1 month | 0.02 | 12 months | 0.2
Task 2 | 01/2010 | 12 months | 0.2 | 12 months | 0.2
Task 3 | 12/2010 | 23 months | 0.38 | 12 months | 0.2
Task 4 | 01/2012 | 36 months | 0.6 | 12 months | 0.2
Task 5 | 11/2012 | 46 months | 0.77 | 13 months | 0.22

The Gantt timeline gives an immediate update on what should have been achieved at any given point in time. This is in accordance with the Event-Based Detailed Schedule Interrelation shown in the System Engineering Master Schedule (SEMS). See Table 1.3 above and Appendix-C.
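The percentage columns of Table 1.3 appear to be month counts divided by the 60-month project length (for example, 23/60 ≈ 0.38 and 46/60 ≈ 0.77). The sketch below reproduces the start dates and percentages under that assumption, taking January 2009 as month zero.

```python
from datetime import date

PROJECT_START = date(2009, 1, 1)
PROJECT_MONTHS = 60  # January 2009 to December 2013


def add_months(d: date, months: int) -> date:
    """Shift a first-of-month date by a whole number of months."""
    total = d.year * 12 + (d.month - 1) + months
    return date(total // 12, total % 12 + 1, 1)


def fraction(months: int) -> float:
    """Share of the 60-month project, as in the % columns of Table 1.3."""
    return months / PROJECT_MONTHS


# (task, delay from start in months, duration in months) from Table 1.3
tasks = [("Task 1", 1, 12), ("Task 2", 12, 12), ("Task 3", 23, 12),
         ("Task 4", 36, 12), ("Task 5", 46, 13)]

for name, delay, duration in tasks:
    start = add_months(PROJECT_START, delay)
    print(f"{name}: start {start:%m/%Y}, delay {fraction(delay):.2f}, "
          f"duration {fraction(duration):.2f}")
```

Expressing each milestone as a fraction of the whole schedule makes it easy to compare planned progress against the elapsed share of the five-year timeline.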


Requirements Allocation Sheet

Table 1.4 Requirements Allocation Sheet-1

Function Flow Diagram No.: 200.1

Function Name & No. | Performance and Design Requirements | Facility Requirements | Equipment Identification Nomenclature | Analog Input (AI) | Computer Input (CI)
200.1 Provide guidance for boiler pressure | Boiler pressure must be maintained at the initial calibration pressure of 7 MPa. Initial boiler pressure: 70% - 75% of the design requirement | Group-3 Nuclear | Boiler Pressure Channel D, E, and F | 16 mA | 75
200.1.2 Provide guidance for boiler level | Boiler level must be maintained between 70% and 75% of the design requirement | Group-3 Nuclear | Boiler Pressure Channel D, E, and F | 14.933 mA | 70
200.1.3 Provide guidance for boiler temperature | Boiler temperature must be maintained at the calibration temperature of 300 degrees C. The initial boiler temperature is 280 degrees C. | Group-3 Nuclear | Boiler Pressure Channel D, E, and F | 14.933 mA | 70
200.1.4 Provide guidance for combined parameters: (a) boiler level, (b) feed water, (c) steam flow through summer amplifier | Boiler summer amplifier output must be maintained at 7.6 mA. The initial calibration will be between 7.5 mA and 7.6 mA. | Group-3 Nuclear | Boiler Pressure Channel D, E, and F | 7.6 mA |


Table 1.5 Requirements Allocation Sheet-2

Function Flow Diagram No.: 200.1

Function Name & No. | Performance and Design Requirements | Facility Requirements | Equipment Identification Nomenclature | Analog Input (AI) | Computer Input (CI)
200.1 Provide guidance for boiler pressure | Boiler pressure must be maintained at the initial calibration pressure of 7 MPa. Initial boiler pressure: 70% - 75% of the design requirement | Coal & Gas Plants | Boiler Pressure Channel D, E, and F | 16 mA | 75
200.1.2 Provide guidance for boiler level | Boiler level must be maintained between 70% and 75% of the design requirement | Coal & Gas Plants | Boiler Pressure Channel D, E, and F | 14.933 mA | 70
200.1.3 Provide guidance for boiler temperature | Boiler temperature must be maintained at the calibration temperature of 520 degrees C. The initial boiler temperature is 365 degrees C. | Coal & Gas Plants | Boiler Pressure Channel D, E, and F | 14.933 mA | 70
200.1.4 Provide guidance for combined parameters: (a) boiler level, (b) feed water, (c) steam flow through summer amplifier | Boiler summer amplifier output must be maintained at 7.6 mA. The initial calibration will be between 7.5 mA and 7.6 mA. | Coal & Gas Plants | Boiler Pressure Channel D, E, and F | 7.6 mA |


1.10 Requirement Loop

This loop is used to identify each function for the purpose of traceability. It also provides the iterative link between the requirements analysis and the functional analysis/allocation.

1.11 The Design Synthesis

This design synthesis is used to combine the physical process with the computer process and software. The physical architecture will provide a base structure for the specification baseline operation. See Figure 1.16 below.

Figure 1.16 Design Synthesis

The output will support several forms of interface, such as Openness, Productivity, and Collaboration (OPC). This form of connectivity allows system parameters such as temperature, pressure and flow to be converted to current or voltage signals and fed directly into Microsoft Excel or other software. This allows one to write programs, control various subsystems, and trend and monitor the complete system using SCADA in conjunction with PLCs and a Human-Machine Interface (HMI/MMI). In addition, the output will be interfaced to two independent systems, namely the X and Y computers, which provide the same data processing for reliability and contingency.
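The conversion of a process parameter to a loop current can be sketched as a linear scaling. The source does not state the signal range; the sketch assumes the common 4-20 mA live-zero convention, and the transmitter ranges in the example are hypothetical.

```python
def to_milliamps(value: float, lo: float, hi: float,
                 ma_lo: float = 4.0, ma_hi: float = 20.0) -> float:
    """Map an engineering value in [lo, hi] linearly onto a current loop
    (4-20 mA by default, an assumed live-zero convention)."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    fraction = (value - lo) / (hi - lo)
    return ma_lo + fraction * (ma_hi - ma_lo)


def from_milliamps(current: float, lo: float, hi: float,
                   ma_lo: float = 4.0, ma_hi: float = 20.0) -> float:
    """Inverse mapping: loop current back to engineering units."""
    if ma_hi <= ma_lo:
        raise ValueError("ma_hi must be greater than ma_lo")
    fraction = (current - ma_lo) / (ma_hi - ma_lo)
    return lo + fraction * (hi - lo)


# Hypothetical ranges: a transmitter spanning 0-100% and one spanning 0-10 MPa.
print(to_milliamps(75.0, 0.0, 100.0))
print(from_milliamps(12.0, 0.0, 10.0))
```

With a live zero, a reading well below 4 mA cannot be a valid process value, which is one reason this convention is popular for the kind of redundant monitoring described above.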


1.12 Cost & Schedules

System Engineering Staffing Cost by Management and Technical Staff

Table-1.6 Staffing Cost

Personnel Category | Jan 1, 2009 | Jan 1, 2010 | Jan 1, 2011 | Jan 1, 2012 | Jan 1, 2013 | Total
Management Staff Cost | $325,000 | $325,000 | $325,000 | $325,000 | $325,000 | $1,625,000
Technical Staff Cost | $275,000 | $275,000 | $275,000 | $275,000 | $275,000 | $1,375,000
Grand Total Cost | $600,000 | $600,000 | $600,000 | $600,000 | $600,000 | $3,000,000

Table-1.6 above shows the cost breakdown of staffing for both managerial and technical human resources for the project, totalling $3,000,000. The cost values are based on benchmark costs against other business units and companies in the same industry. The annual sum of $325,000 will allow the project to operate with a group of five management staff, while the annual sum of $275,000 will allow the project to operate with a group of four technically skilled persons.

Several software packages, such as Microsoft Project and Timeline, are available to estimate cost and scheduling. However, while the cost of staffing can be predicted quite safely over the five-year period of this project, the same cannot be said of cost components such as installation, removal and material, shown in Table-1.7. The primary source of cost data is actual cost experience from past projects.

Using the data collected, the component costs were estimated on the basis of previous benchmarks.

By comparing the release estimate against the definitive estimate, the project was able to establish a variance cap of 30% between the total release estimate and the definitive estimate. The variance cap allows budgeting and scheduling to be tracked and controlled to prevent cost overruns or stoppages due to unavailable cash flow.


Table-1.7 5 Year Project Expenditure

Cost Component | Release Estimate Cost (M$) | Definitive Estimate Cost (M$) | Variance, Definitive - Release (M$)
Engineering | 1.30 | 1.29 | -0.01
Commissioning | 0 | 1.5 | 1.5
Material | 3.50 | 3.80 | 0.3
Install & Removal | 2.60 | 4.30 | 1.7
Indirect Costs | 1.5 | 2.50 | 1.0
Interest & Overheads | 1.25 | 1.65 | 0.4
Contingency | 1.75 | 2.0 | 0.25
Project Total | 11.9 | 17.04 | 5.14

1.13 Project Variance Cost M$ and Explanation of Estimates

As summarized in Table-1.7, the Definitive Estimate of $17.04 million represents an increase of $5.14 million over the Release Estimate of $11.9 million. This increase is broken down in Table-1.8, with explanations provided in the following sections.

Table-1.8 A Breakdown of Cost Increase

Cost Item | Cost Increase (M$) | % of Variance
Engineering | -0.01 (decrease) | 1%
Commissioning | 1.5 | Not in the Release
Material | 0.3 | 7%
Install & Removal | 1.7 | 40%
Indirect Costs | 1 | 40%
Interest & Overheads | 0.4 | 24.24%
Contingency | 0.25 | 12.5%
Project Total | 5.14 | 30%
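The arithmetic behind Tables 1.7 and 1.8 can be checked with a short Python sketch. The percentages in Table-1.8 appear to be each increase divided by the corresponding definitive estimate (for example, 0.4/1.65 = 24.24% and 0.25/2.0 = 12.5%); that basis is an inference, not something the source states.

```python
# Release and definitive estimates (M$) per cost component, from Table-1.7.
ESTIMATES = {
    "Engineering": (1.30, 1.29),
    "Commissioning": (0.0, 1.5),
    "Material": (3.50, 3.80),
    "Install & Removal": (2.60, 4.30),
    "Indirect Costs": (1.5, 2.50),
    "Interest & Overheads": (1.25, 1.65),
    "Contingency": (1.75, 2.0),
}


def variance(release: float, definitive: float) -> tuple[float, float]:
    """Return the change (definitive - release) in M$ and that change as a
    fraction of the definitive estimate (the inferred Table-1.8 basis)."""
    change = definitive - release
    return change, change / definitive


for item, (release, definitive) in ESTIMATES.items():
    change, share = variance(release, definitive)
    print(f"{item:<22}{change:+6.2f} M$ {share:7.1%}")

release_total = sum(r for r, _ in ESTIMATES.values())
definitive_total = sum(d for _, d in ESTIMATES.values())
total_change, total_share = variance(release_total, definitive_total)
print(f"{'Project Total':<22}{total_change:+6.2f} M$ {total_share:7.1%}")
```

Computing the variance column from the two estimate columns, rather than entering it by hand, keeps the component rows and the project total consistent.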

Explanation of Decrease Estimate for Engineering

The forecast Engineering cost of 1.29 M$ is 0.01 M$ (about 1%) under the release estimate of 1.30 M$ and is within the estimated range.


Explanation of Increase Estimate for Commissioning and Training

The 1.5 M$ increase in costs is due to the omission of commissioning, training and station support functions from the release estimate. No separate work order task for commissioning will be issued for this project.

Explanation of Increase Estimate for Material

The increase of 0.3 M$ is mainly due to the addition of Allen-Bradley Programmable Logic Controller (PLC-5) Control Unit System Modules.

Explanation of Increase Estimate for Installation and Removal

The 1.7 M$ increase is due to additional work on the SCADA system to allow remote access to live power plant trend data.

Explanation of Increase Estimate for Indirect Costs

The increase of 1.0 M$ is due to the addition of a Turbine Governor Electro module that will evolve with current and future computer-based interfaces.

Explanation of Increase Estimate for Interest, Overheads and Contingency

(a) The increase in interest and overheads of 0.4 M$ is due to minor direct and indirect scope creep.

(b) The project carries a 12.5% contingency on the balance of the work, resulting in an increase of 0.25 M$ in contingency.

(c) By allowing a variance cap of 30%, the project avoids using the contingency funds, assuming every task is executed as planned and on schedule.

Cost Variance Summary

The 5.14 million dollar increase is mainly due to added scope for SCADA-based equipment, together with indirect cost increases and unplanned repairs.


Design Estimate

The design estimate in Table 1.9 uses the matrix below to identify the man-hours for the various tasks. The man-hours will be assigned a cost value that forms an integral part of the total project cost.

Table 1.9 Design Estimate

Task

No.

Task

Description

Deliverables or

Documents to be

Produced

Hours

Comments

1.0 Preliminary engineering

1.1 Pre-design Problem Definition

1.1.1 Scope 7

Development

Definition

1.1.2

“As built”

14

Info Package

1.1.3 COMS

Review

1.1.4 MOD

Scoping

Check Sheet

1.1.5 Document

Scoping

5

3

3

Checklist

1.1.6 Needs

Statement

1.2 Assessment of Problem

Definition

1.2.1 Develop

Alternate

Solution

3

5

30

1.2.3 Assessment

Report

1.3 Stakeholder

Review

1.3.1 Finalize

COMS

21

7

6


Table 1.9

Design Estimate (continued)

Task

No.

Task Description Deliverables or Documents to be

Produced

Duration in working days

Hours

7 1.3.2 Stake Holder

Review/

Meeting sign off

1.4 Design

Requirements

1.5 Preliminary

Design Plan

1.6 Preliminary

Flow Diagram

Mark-ups

Complete

Detailed

Design

Estimate

1.8 Review/ Issue

2.0 Contract

Management

2.1 Technical

Specification

2.2 Contract

Tender

2.3 Bid Evaluation

2.4 Award Contract

3.0 Detailed

Engineering

3.1 Detailed

Design Plan

3.2 Detailed

Design

Requirements

3.3 Review/

Update MOD

Scoping Sheet

3.4 Review/Update

Doc. Scoping

3.5

Checklist

Communication

Review

31

21

7

7

7

7

31

7

14

30

31

14

5

N/A

31

5


Comments

3.6 Regulatory

Approval

3.6.1 Regulatory

Approval

Needs

Assessment

3.6.2 Code

Classification

7

90

Cost Estimate by Work Group

The summary of costs in dollars by work group includes contingencies, support, overheads, etc. When contingency and support dollars are added, the values used must be explained. Big-ticket items such as materials may be broken down into separate rows, as shown in Table 1.10 below.

Table 1.10 Work Group Cost Estimate

Work Group | Total Hours | Rate | Cost ($K)
Support | 3000 | $75/hour | 225K
Mechanical | 6000 | $60/hour | 360K
Civil | 2500 | $60/hour | 150K
Electrical/I&C | 2000 | $60/hour | 120K
Drawing Office | 5000 | $50/hour | 250K
Contract | 4000 | $60/hour | 240K
Materials | | | 5000K
Contingency (State Contingency Rate) | | | 1000K
Total | | | 7,097.5K

Total Cost Summary | Total
Staffing Cost | $3,000,000
Components Cost | $17,040,000
Work Group | $7,097,500
Total Project Cost | $27,137,500
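The labour lines of Table 1.10 follow the rule described for the design estimate: hours multiplied by an hourly rate. A minimal Python sketch reproduces those lines and the total project cost from the three summary components; it takes the work-group total as stated in the table rather than recomputing it.

```python
# Labour hours and hourly rates by work group, as listed in Table 1.10.
WORK_GROUPS = {
    "Support": (3000, 75.0),
    "Mechanical": (6000, 60.0),
    "Civil": (2500, 60.0),
    "Electrical/I&C": (2000, 60.0),
    "Drawing Office": (5000, 50.0),
    "Contract": (4000, 60.0),
}


def labour_cost(hours: float, rate: float) -> float:
    """Cost of a work group in dollars: hours x hourly rate."""
    return hours * rate


for group, (hours, rate) in WORK_GROUPS.items():
    print(f"{group:<15} ${labour_cost(hours, rate) / 1000:,.0f}K")

# Staffing cost from Table-1.6: five years of management and technical staff.
staffing = 5 * (325_000 + 275_000)
components = 17_040_000   # definitive estimate, Table-1.7
work_group = 7_097_500    # work group total as stated in Table 1.10
print(f"Total project cost: ${staffing + components + work_group:,}")
```

Holding hours and rates separately, rather than only the resulting dollars, lets a rate change be applied to every affected work group in one place.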


1.14 PMTE Concept

Process, Method, Tools and Environment (PMTE) is the approach used to carry out this project. The PMTE layout shown in Figure-1.17 and Figure-1.18 is used as the primary path to execute a given task. The process starts with management setting the agenda for a given task. The Method and Tools are controlled by the Systems Engineer (SE), and the Environment is controlled by management. The PMTE concept also shows the overlap between the management and engineering domains. The method employed to execute the various tasks is the QFD process shown in Figure-1.18 below.

PMTE Layout

Figure-1.17 PMTE Layout

The Quality Function Deployment (QFD) Process

Figure-1.18 Quality Function Deployment


PMTE Environment

The environment concept of the PMTE pyramid is embodied in management software known as PASSPORT. This software is used to manage projects and tasks by setting the agenda. For example, under the work management folder, the various tasks for the project are outlined and put in order of priority. Each task will have a procedure that must be carried out as written in the steps. At the end of the work day or shift, a worker from the other shift should be able to continue from the last step completed. In addition, each task that is assessed to use material will show the material request (MR) number and catalogue identification (Cat ID) number. Both MR and Cat ID numbers are stored in the main computer system to allow traceability of parts and equipment. At the end of every shift a detailed work report with the work order number and task number must be written.
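The task record described above (ordered procedure steps, resumable by the next shift, material traced by MR and Cat ID numbers, and an end-of-shift report keyed by work order and task number) can be sketched as a small data structure. This is a hypothetical illustration only; it is not PASSPORT's schema or API, and the identifiers in the example are made up.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class WorkTask:
    """Hypothetical work-order task record (not PASSPORT's schema)."""
    work_order: str
    task_no: str
    steps: list[str]           # procedure steps, in the order written
    completed: int = 0         # number of steps finished so far
    materials: dict[str, str] = field(default_factory=dict)  # MR no. -> Cat ID

    def complete_next_step(self) -> str:
        """Mark the next step as done; steps can only be done in order."""
        if self.completed >= len(self.steps):
            raise RuntimeError("all steps are already complete")
        step = self.steps[self.completed]
        self.completed += 1
        return step

    def next_step(self) -> Optional[str]:
        """Step the incoming shift resumes from, or None when finished."""
        if self.completed < len(self.steps):
            return self.steps[self.completed]
        return None

    def shift_report(self) -> str:
        """End-of-shift summary keyed by work order and task number."""
        return (f"WO {self.work_order} / Task {self.task_no}: "
                f"{self.completed}/{len(self.steps)} steps complete")


task = WorkTask("123456", "01",
                ["Isolate transmitter", "Calibrate transmitter", "Return to service"],
                materials={"MR-0001": "CAT-0001"})
task.complete_next_step()
print(task.shift_report())
print("Next shift resumes at:", task.next_step())
```

Recording only a completed-step counter against an ordered step list enforces the "carried out as written" rule: a step cannot be marked done before the ones that precede it.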

1.15 Requirement Analysis

Customer Mission: Continuous system improvement through performance testing and preventive and predictive maintenance over the system life cycle, at minimum operating cost.

Customer Performance Requirements:

1. Less than 5% forced outages (unscheduled power loss) per annum for each operating power plant unit

2. 100% operating capacity for each power plant unit

3. Less than 1% change in steam enthalpy entering the high pressure turbine from the boiler

4. Condensate flow to the heater must maintain temperature within 1 degree of design requirements

5. Steam quality from the boiler into the high pressure turbine must be greater than or equal to 95%

6. Condensate cooling water returning to the lake shall not be greater than 30 degrees Celsius

7. Boiler feed pump efficiency shall not be less than 88%

8. Carbon pollution to the environment shall not exceed the industry standard (ppm)

9. The regression analysis R-square value should not be less than 98% for the THR test
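Requirement 9 can be checked by fitting the quadratic I/O curve from Section 1.7 to test points by least squares and computing R-square. The Python sketch below is one way to do this without external libraries; the test points are hypothetical and lie exactly on a quadratic, so R-square is essentially 1.

```python
def fit_quadratic(x, y):
    """Least-squares fit of y = A + B*x + C*x**2; returns (A, B, C).

    x is centred and scaled to [-1, 1] before the normal equations are
    formed, which keeps the 3x3 system well conditioned for MW-sized
    values; the coefficients are then converted back to the original x."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need at least three (x, y) pairs of equal length")
    xm = sum(x) / n
    sx = max(abs(xi - xm) for xi in x)
    if sx == 0:
        raise ValueError("x values must not all be equal")
    u = [(xi - xm) / sx for xi in x]
    s = [sum(ui ** k for ui in u) for k in range(5)]
    t = [sum(ui ** k * yi for ui, yi in zip(u, y)) for k in range(3)]
    # Augmented normal-equation matrix [sum u^(i+j) | sum u^i * y].
    m = [[s[i + j] for j in range(3)] + [t[i]] for i in range(3)]
    # Gaussian elimination with partial pivoting.
    for col in range(3):
        p = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[p] = m[p], m[col]
        for r in range(col + 1, 3):
            f = m[r][col] / m[col][col]
            for k in range(col, 4):
                m[r][k] -= f * m[col][k]
    g = [0.0, 0.0, 0.0]  # coefficients in the scaled variable u
    for r in (2, 1, 0):
        g[r] = (m[r][3] - sum(m[r][k] * g[k] for k in range(r + 1, 3))) / m[r][r]
    a_u, b_u, c_u = g
    # Substitute u = (x - xm) / sx and collect powers of x.
    c = c_u / sx ** 2
    b = b_u / sx - 2.0 * c_u * xm / sx ** 2
    a = a_u - b_u * xm / sx + c_u * xm ** 2 / sx ** 2
    return a, b, c


def r_squared(x, y, a, b, c):
    """Coefficient of determination R^2 = 1 - SS_res / SS_tot."""
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - (a + b * xi + c * xi ** 2)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


# Hypothetical test points (MW, GJ/h) chosen to lie exactly on a quadratic.
mw = [250.0, 300.0, 350.0, 400.0, 440.0]
gj = [300.0 + 8.0 * p + 0.002 * p ** 2 for p in mw]
a, b, c = fit_quadratic(mw, gj)
r2 = r_squared(mw, gj, a, b, c)
print(f"A={a:.3f}  B={b:.4f}  C={c:.6f}  R^2={r2:.4f}")
print("meets requirement 9" if r2 >= 0.98 else "R^2 below 98%")
```

With real test data the points scatter about the curve, R-square falls below 1, and the 98% threshold becomes a meaningful acceptance check.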

Assumptions:

1. All control maintenance procedures (CMP), such as continuous, reference and information procedures, are followed as written.

2. All mechanical maintenance procedures (MMP) are followed as written.

3. All operating procedures are carried out in the required steps.

4. There will be no environmental infraction(s) that put public health and safety at risk.

5. All data and test results shown in this project relate to a specific group of power plants, and should otherwise be viewed as concept only.

6. The author assumes no responsibility for data, procedures and/or results tailored to other projects.

The Measure of Effectiveness (MOE) shown in Table 1.11 is used to measure the relative importance of aspects of the system. The measurements are qualitative in nature and describe the customer's expectations of the product or system.


Table 1.11 Measure of Effectiveness (MOE) Matrix for Nuclear Plant-1

Instrumentation Calibration Requirements - Output Matrix for 950 MW Units

Requirements Requirements

Boiler pressure (BP) should not be > 5.5 MPa

Boiler pressure should not be < 70%

Description

BP should not be > 75% of maximum pressure

BP should not be < 70% of maximum pressure

Test Script Test Case Analog I/P (AI)

If boiler pressure (BP) > 75%, then display high pressure alarm.

If BP < 70%, then display low pressure alarm.

Figure 1.1 current loop output (o/p) = 16 mA

14.933

16 mA

14.933

Computer I/P (CI)

75

70

Boiler temperature (BT) should not be > 300 °C

BT should not exceed 75% of the maximum temperature

If BT > 75%, then display high temperature alarm.

Figure 1.2 current loop o/p = 16 mA

16 mA 75

Boiler temperature should not be < 280 °C

Boiler level (BL) should not be > 540” H2O

Boiler level should not be < 516” H2O

BT should not drop below 70% of maximum temperature

BL should not exceed 100% of the maximum height

BL should not be < 95% of maximum level

If BT < 70%, then display low temperature alarm.

If BL = 105%, then display high level alarm.

If BL is < 95%, then display low level alarm.

Figure 1.2 current loop o/p

14.933 mA

Figure 1.3 current loop o/p

16 mA

Figure 1.3 current loop o/p

14.933

14.933 mA

16mA

15.2mA

70

75

95

Tables 1.11 to 1.13 show the operating parameters and the corresponding AI and CI values for the MOE.


Table 1.12 Measure of Effectiveness (MOE) Matrix for Nuclear Plant-2

Instrumentation Calibration Requirements - Output Matrix for 450 MW Units

Requirements Requirements

Boiler pressure (BP) should not be > 5.5 MPa

Boiler pressure should not be < 70%

Description

BP should not be > 75% of maximum pressure

BP should not be < 75% of maximum pressure

Test Script Test Case Analog I/P (AI)

If boiler pressure (BP) > 75%, then display high pressure alarm.

If BP < 70%, then display low pressure alarm.

Figure 1.1 current loop output (o/p) = 16 mA

14.933

16 mA

14.933

Computer I/P (CI)

75

70

Boiler temperature (BT) should not be > 300 °C

Boiler temperature should not be < 280 °C

Boiler level (BL) should not be > 270” H2O

Boiler level should not be < 257” H2O

BT should not exceed 75% of the maximum temperature

BT should not drop below 70% of maximum temperature

BL should not exceed 75% of the maximum height

BL should not be < 95% of maximum level

If BT > 75%, then display high temperature alarm.

If BT < 70%, then display low temperature alarm.

If BL = 105%, then display high level alarm.

If BL is < 95%, then display low level alarm.

Figure 1.2 current loop o/p = 16 mA

Figure 1.2 current loop o/p

14.933 mA

Figure 1.3 current loop o/p

16 mA

Figure 1.3 current loop o/p

14.933

16mA

14.933 mA

16mA

15.2mA

75

70

75

95


Table 1.13 - Measure of Effectiveness (MOE) Matrix for Coal & Gas Fired Plants

Calibration Requirements Output Matrix

Requirements Requirements

Description

Boiler pressure (BP) should not be > 14 MPa

BP should not be > 75% of maximum pressure

Boiler pressure should not be < 70%

BP should not be < 75% of maximum pressure

Test Script Test Case Analog I/P (AI)

If boiler pressure (BP) > 75%, then display high pressure alarm.

If BP < 70%, then display low pressure alarm.

Figure 1.1 current loop output (o/p) = 16 mA

14.933

16 mA

14.933

Computer I/P (CI)

75

70

Boiler temperature (BT) should not be > 520 °C

Boiler temperature should not be < 365 °C

Boiler level (BL) should not be > 270” H2O

Boiler level should not be < 256” H2O

BT should not exceed 75% of the maximum temperature

BT should not drop below 70% of maximum temperature

BL should not exceed 75% of the maximum height

BL should not be < 70% of maximum level

If BT > 75%, then display high temperature alarm.

If BT < 70%, then display low temperature alarm.

If BL > 105%, then display high level alarm.

If BL is < 100%, then display low level alarm.

Figure 1.2 current loop o/p = 16 mA

Figure 1.2 current loop o/p

14.933 mA

Figure 1.3 current loop o/p

16 mA

Figure 1.3 current loop o/p

14.933

16mA

14.933 mA

16mA

15.2mA

75

70

75

95
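The test scripts in Tables 1.11 to 1.13 amount to simple band checks. The Python sketch below encodes the Table 1.11 limits as percentages of maximum; reading "BL = 105%" as "BL >= 105%" is an assumption, and the parameter names are hypothetical.

```python
from typing import Optional

# (low, high) alarm limits in % of maximum, from the Table 1.11 test cases.
LIMITS = {
    "pressure": (70.0, 75.0),
    "temperature": (70.0, 75.0),
    "level": (95.0, 105.0),
}


def check_alarm(parameter: str, percent_of_max: float) -> Optional[str]:
    """Return an alarm message if the reading leaves its band, else None.
    The high-level case is read as BL >= 105% (an assumption)."""
    low, high = LIMITS[parameter]
    too_high = (percent_of_max >= high if parameter == "level"
                else percent_of_max > high)
    if too_high:
        return f"HIGH {parameter.upper()} ALARM"
    if percent_of_max < low:
        return f"LOW {parameter.upper()} ALARM"
    return None


for name, reading in (("pressure", 76.0), ("temperature", 72.0), ("level", 94.0)):
    print(f"{name} at {reading}%: {check_alarm(name, reading) or 'normal'}")
```

Keeping the limits in one table means the Nuclear Plant-2 and Coal & Gas matrices could be supported by swapping the limit values rather than changing the logic.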


2.0 Boiler Flow Nozzle Calibration/Test

Test Objectives:

The main objectives of the test were as follows:

(a) To assess the efficiency of the boiler prior to the retrofit outage

(b) To determine the performance of the unit turbine cycle and its major components before and after a planned overhaul, using test-grade instruments

(c) To identify potential controllable operating problems that would degrade the Thermal Heat Rate (THR) test

(d) To ensure that the boiler flow nozzle coefficient complies with ASME PT Code 19.5

(e) To ensure that the numerical results for the nozzle coefficient do not exceed ±0.95

(f) To ensure that boiler performance meets the manufacturer's design specifications

(g) To identify test results that can be used in predictive maintenance practices to extend the life cycle of the system. See Table 2.1.

Table 2.1 Pre-Overhaul Test Schedule

Test No.       Test Date      Time (EST)   Unit Load (MW)   Test Description
Pre-Overhaul   Feb. 2, 2009   0945-1213    ~440             Preliminary test; instrument checks
1              Feb. 3, 2009   1001-1245    ~440             4 GV-VWO @ rated pressure and temperature
2              Feb. 4, 2009   1025-1245    ~440             Repeat of Test 1: 4 GV-VWO


2.1 Technical Performance Measure (TPM)

To measure the technical performance of the boiler nozzle calibration and test, the mean Reynolds number from each of the four test settings (A, B, C and D) will be plotted against the ASME Code PT 16.5 nozzle coefficient standard (see Tables 2.2 and 2.3). The same Reynolds numbers will also be plotted against the manufacturer's recommended mean nozzle coefficient. The results of both plots will serve as the standard for validating the boiler calibration/test performance.

Table 2.2 Mean Reynolds Numbers Vs ASME PT 16.5 Code

Reynolds Number   ASME-PT 16.5 Code
516               0.9900
767               0.9920
504               0.990
769               0.992

Table 2.3 Mean Reynolds Numbers Vs Manufacturer's Recommendation

Reynolds Number   Manufacturer's Recommended Coefficient
516               0.9835
767               0.9856
504               0.9825
769               0.9855

The mean Reynolds numbers versus the ASME PT 16.5 Code coefficients will be analyzed with a regression tool, which produces an R-squared value of R² = 0.9988.

The same regression applied to the mean Reynolds numbers versus the manufacturer's recommendation should yield R² = 0.9423. Both plots must meet the minimum acceptable standard for system validation.
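The R² figures can be checked directly against Tables 2.2 and 2.3. The sketch below assumes an ordinary straight-line least-squares fit (the document does not name its regression model) and computes R² as the squared correlation between Reynolds number and coefficient. Under this assumption the ASME column gives about 0.9989, in line with the 0.9988 quoted above, while the manufacturer column gives about 0.944 rather than 0.9423; the difference may come from a different fit or from unrounded data.

```python
def r_squared(x: list, y: list) -> float:
    """R^2 of a straight-line least-squares fit of y on x."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    # For a simple linear fit, R^2 equals the squared Pearson correlation.
    return sxy * sxy / (sxx * syy)


# Values transcribed from Tables 2.2 and 2.3.
reynolds = [516, 767, 504, 769]
asme = [0.9900, 0.9920, 0.990, 0.992]
manufacturer = [0.9835, 0.9856, 0.9825, 0.9855]

print(f"ASME PT 16.5: R^2 = {r_squared(reynolds, asme):.4f}")
print(f"Manufacturer: R^2 = {r_squared(reynolds, manufacturer):.4f}")
```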


2.2 Technical Reviews and Audits (TRA)

This project uses TRA to review and verify all activities at various points in the process, to ensure that operational procedures are followed and that deficiencies are identified and corrected before system integration (see Table 2.4). TRA also examines processes such as a major system overhaul, as well as the input and output (I/O) curves, before the task begins. Data collected before the task begins are recorded as "As Found", and data collected at the end of the task are classified as "As Left".

Table 2.4 System Reviews and Audits

Test Type                      Manufacturer   ASME         As Found Data      Parameter              % Error
                               Requirements   Code         (Current System)
Boiler Gross Efficiency        88%            86% eff.     76%                Temperature in Deg.C   12%
Nozzle Calibration Test        0.9833         0.992        Not available      cfs                    N/A
Coefficient of Discharge (K)   K = 1.049      K = 1.0550   Not available      K = 1.0550             N/A
Gross Nominal Load (MW)        500            N/A          440                W                      12%
Thermal Heat Rate              4000 GJ/h      N/A          4480 GJ/h          Joules                 12%
Cycle Efficiency               35             33           30.8               N/A                    12%
Isentropic Efficiency          80             78           68                 N/A                    15%
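The % Error column appears to compare each as-found value with the manufacturer requirement. A minimal sketch, assuming % error = |reference - as found| / reference × 100 (the formula is not stated in the document): with that assumption the load, heat rate, cycle efficiency and isentropic efficiency rows reproduce the tabulated 12%, 12%, 12% and 15%. For boiler gross efficiency, 88% against 76% gives about 13.6%, while the ASME 86% gives about 11.6%, closer to the 12% shown.

```python
def percent_error(reference: float, as_found: float) -> float:
    """Assumed definition: deviation of the as-found value from the reference, in %."""
    return abs(reference - as_found) / reference * 100.0


# parameter: (manufacturer requirement, as-found current system), from Table 2.4
rows = {
    "Gross nominal load (MW)": (500.0, 440.0),
    "Thermal heat rate (GJ/h)": (4000.0, 4480.0),
    "Cycle efficiency (%)": (35.0, 30.8),
    "Isentropic efficiency (%)": (80.0, 68.0),
    "Boiler gross efficiency (%)": (88.0, 76.0),
}

for name, (ref, found) in rows.items():
    print(f"{name:<28} {percent_error(ref, found):5.1f} %")
```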


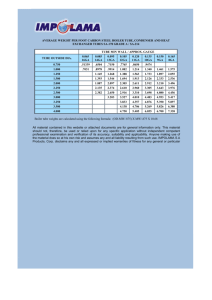

2.3 Boiler Flow Nozzle Test - Process:

Figure 2.1 Boiler Flow Nozzle Test – Process

Ontario Hydro performed the boiler flow nozzle test in September 1961, as part of a commissioning test scheme, to check the calibration of an 8.735 inch by 5.028 inch flow nozzle. The test result was measured against the ASME Code standard. A Kent flow meter, Serial No. 6/2018A/1, was employed as the primary differential pressure measuring instrument during the calibration, and its performance was checked with an independent manometer. The Boiler Flow Nozzle Test – Process is outlined in Figure 2.1 above.
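Section 2.6 defines the nozzle coefficient C in terms of the discharge Q, the velocity of approach factor F, the nozzle area A_d and the gravitational constant, but the equation itself is not reproduced in the text. The sketch below therefore makes two stated assumptions: that 8.735 in is the upstream pipe bore and 5.028 in the nozzle throat, and that the conventional flow-nozzle relation C = Q / (F·A_d·√(2gh)) applies, with F = 1/√(1 - β⁴). All names are hypothetical.

```python
import math

PIPE_BORE_IN = 8.735    # assumed upstream pipe bore D, inches
THROAT_BORE_IN = 5.028  # assumed nozzle throat d, inches
G_FT_S2 = 32.174        # standard gravity, ft/sec^2


def nozzle_geometry(pipe_in, throat_in):
    """Return (beta, F, A_d): diameter ratio, velocity of approach factor,
    and throat area in sq ft."""
    beta = throat_in / pipe_in
    F = 1.0 / math.sqrt(1.0 - beta ** 4)  # conventional approach factor
    A_d = math.pi * (throat_in / 12.0) ** 2 / 4.0
    return beta, F, A_d


def discharge_coefficient(Q_cfs, F, A_d, head_ft, g=G_FT_S2):
    """ASSUMED relation C = Q / (F * A_d * sqrt(2 g h)), with h the
    differential head in feet of water."""
    return Q_cfs / (F * A_d * math.sqrt(2.0 * g * head_ft))


beta, F, A_d = nozzle_geometry(PIPE_BORE_IN, THROAT_BORE_IN)
print(f"beta = {beta:.4f}, F = {F:.4f}, A_d = {A_d:.4f} sq ft")
```

With these assumptions the geometry works out to a diameter ratio of about 0.576, F of about 1.060 and a throat area of about 0.138 sq ft.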


Today, the same test is used in projects to check the calibration of the boiler feed flow nozzle, expressed as discharge coefficient versus Reynolds number. The findings are shown in Tables 2.5 to 2.9, and Figures 2.1 to 2.4 show the process concept. In the process flow diagram, Task 1 is ASME PT Code 19.5, Section 7, and Task 2 is the local test being performed.

2.4 Experimental Arrangement

Calibrated Instrumentation to Measure Parameters

…

Figure 2.2 Calibrated Instrumentation to Measure Parameters

The nozzle assembly, 12 ft. 8 ½ in. long, was installed in the OPG hydraulics laboratory. Water entered the nozzle test section through a 90-degree elbow containing straightening vanes, which minimized the swirl of the secondary flow produced by the elbow.

A 5 ft. 4 in. length of straight pipe was installed at the outlet from the test assembly. A gate valve installed downstream from the nozzle was used to control the flow and ensure that the pipe flowed full.


The differential pressure across the nozzle (for calibration purposes) was measured by an independently connected, well-type, single-scale mercury manometer connected to one set of pressure taps on the assembly. For the first two series of tests (settings A and B), the Kent flow gauge was connected to the other set of taps on the assembly, and its performance was checked against the independent manometer. For the third and fourth series of tests (settings C and D), an additional manometer was connected in parallel with the Kent gauge, and these readings were also checked against the independent manometer. Because this latter arrangement is the one to be used in the plant, it was necessary to determine whether it would produce any peculiarities.

A bypass was provided for each manometer so that the zero point could be checked between tests without altering the flow setting. The pressure-measuring instruments were placed in the lower laboratory, approximately 20 feet below the flow nozzle assembly, which minimized problems of air leakage into the instruments.
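Readings from a mercury manometer are normally converted to differential head of the flowing water before they enter a nozzle equation. A minimal sketch, assuming water fills the manometer legs above the mercury and using approximate specific gravities (neither is stated in the document); the helper name is hypothetical.

```python
SG_MERCURY = 13.55  # mercury, roughly at room temperature (assumed)
SG_WATER = 1.0      # water filling the manometer legs (assumed)


def water_head_ft(deflection_in, sg_hg=SG_MERCURY, sg_w=SG_WATER):
    """Differential head in feet of water for a mercury deflection in inches.

    With water above the mercury in both legs, each unit of mercury
    deflection corresponds to (sg_hg / sg_w - 1) units of water head.
    """
    return deflection_in * (sg_hg / sg_w - 1.0) / 12.0


print(f"{water_head_ft(12.0):.2f} ft of water per 12 in of mercury")
```

In practice the specific gravities would be corrected for the recorded water and air temperatures rather than held constant.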

2.5 Testing Procedure:

Two test settings were chosen: the highest flow that could be read on the Kent gauge, and an intermediate flow. For each setting, five tests were run, each lasting 15 minutes. Because the Reynolds number remained constant during each series of five 15-minute tests, it was treated as the controlled variable for the calibration.

Discharge was measured with the two weighing tanks situated in the lower laboratory. The tanks, each with a capacity of 15,000 lb, were used in series. During each 15-minute test, differential head readings were taken independently on each instrument every 15 seconds. After each test, the instruments were checked for the zero point without altering the discharge setting. Water and air temperatures were recorded for each test. See Table 2.5.
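Each weighing-tank test reduces to a volumetric discharge and an average differential head. The sketch below assumes a water density of 62.37 lb/ft³ (about 60 °F); the document records water temperature but gives no density, so a real reduction would take the density from that temperature. The 15,000 lb run is a hypothetical input, and the helper names are also hypothetical.

```python
from statistics import mean

WATER_DENSITY_LB_FT3 = 62.37  # approx. density of water near 60 F (assumed)
TEST_SECONDS = 15 * 60        # each test lasted 15 minutes
READING_INTERVAL_S = 15       # one differential head reading every 15 seconds


def discharge_cfs(weighed_lb, seconds=TEST_SECONDS, density=WATER_DENSITY_LB_FT3):
    """Volumetric discharge in cfs from the weight of water collected."""
    return weighed_lb / density / seconds


def mean_head(readings):
    """Average of the differential head readings taken during one test."""
    return mean(readings)


readings_per_test = TEST_SECONDS // READING_INTERVAL_S  # 60 intervals per test
# Hypothetical example: 15,000 lb collected over one 15-minute test.
print(f"{readings_per_test} readings, Q = {discharge_cfs(15_000):.4f} cfs")
```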


2.6 Results:

Test data, observations and results are presented in Tables 2.5 to 2.9. The calibration coefficient of the nozzle (C) is a function of the Reynolds number and the ratio (see Table 2.5). It is defined by the following equation:

Where

Q is the discharge in cfs.

F is the velocity of approach factor

A_d is the nozzle area in sq ft.

G is the gravitational constant in ft/sec² (lb/ft³)