Oracle Streams - Pavan DBA's Blog

advertisement

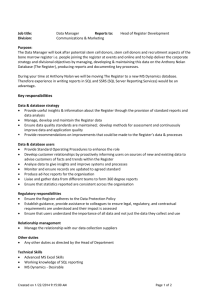

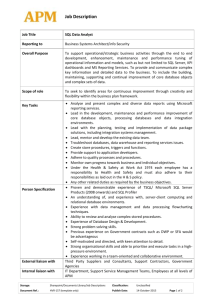

Oracle Streams OVERVIEW OF ORACLE STREAMS ARCHITECTURE AND PROCESSING Oracle Streams enables the propagation and management of data, transactions, and events in a data stream either within a database, or from one database to another. Oracle Streams consists of three components: capture, propagation, and apply. Each of these components is made up of several Operating System processes, Capture Component The capture component mines redo logs from a source database and captures changes. It consists of multiple operating-system processes. A reader process reads the redo log and divides the redo log into regions. The preparer processes scan the regions defined by the reader in parallel and pre-filter changes found in the redo log based upon user-defined rules. A builder process merges redo records from the preparers and passes the merged redo records to the capture process. The capture process then formats each change into a Logical Change Record (LCR) and enqueues to a queue if it satisfies the defined rules. You can configure capture locally at a source database or remotely at a downstream database. A local capture process runs at the source database and captures changes from the local source database redo log. You can configure a downstream capture process for realtime capture in which redo data from source database is transmitted to the downstream database and written to the standby redo log files, from which the capture process captures changes. A single capture process can send changes to multiple propagation and apply processes. You can also configure multiple capture processes. Propagation Component The propagation component uses queues to transfer the LCRs from the source database to the destination database. Apply Component The apply component receives the LCRs and applies the changes at the target database. The reader process dequeue LCRs from a queue, assembles the changes into transactions, calculates the transaction dependencies, and passes the transactions to the coordinator. The coordinator process assigns transactions to available appliers, based on the transaction dependencies and ordering. The applier process executes the changes at the target database. You can configure multiple appliers to increase apply parallelism. . Enable ARCHIVELOG MODE on both databases Reference: http://dbataj.blogspot.com/2007/09/how-to-enable-archivelog-mode.html 2. Create Stream administrator User Source Database: DB1 SQL> conn sys@DB1 as sysdba Enter password: Connected. SQL> create user strmadmin identified by strmadmin; User created. SQL> grant connect, resource, dba to strmadmin; Grant succeeded. begin dbms_streams_auth.grant_admin_privilege (grantee => 'strmadmin', grant_privileges => true); end; / PL/SQL procedure successfully completed. SQL> grant select_catalog_role, select any dictionary to strmadmin; Grant succeeded. Target Database: DB2 SQL> conn sys@DB2 as sysdba Enter password: Connected. SQL> create user strmadmin identified by strmadmin; User created. SQL> grant connect, resource, dba to strmadmin; Grant succeeded. SQL> begin dbms_streams_auth.grant_admin_privilege (grantee => 'strmadmin', grant_privileges => true); end; / PL/SQL procedure successfully completed. SQL> grant select_catalog_role, select any dictionary to strmadmin; Grant succeeded. 3. Setup INIT parameters Source Database: DB1 SQL> conn sys@DB1 as sysdba Enter password: Connected. SQL> alter system set global_names=true; System altered. SQL> alter system set streams_pool_size = 100 m; System altered. Target Database: DB2 SQL> conn sys@DB2 as sysdba Enter password: Connected. SQL> alter system set global_names=true; System altered. 4. Create Database Link Target Database: DB1 SQL> conn strmadmin/strmadmin@DB1 Connected. SQL> create database link DB2 connect to strmadmin identified by strmadmin using 'DB2'; Database link created. Source Database: DB2 SQL> conn strmadmin/strmadmin@DB2 Connected. SQL> create database link DB1 connect to strmadmin identified by strmadmin using 'DB1'; Database link created. 5. Setup Source and Destination queues Source Database: DB1 SQL> conn strmadmin/strmadmin@DB1 Connected. SQL> EXEC DBMS_STREAMS_ADM.SET_UP_QUEUE(); PL/SQL procedure successfully completed. Target Database: DB2 SQL> conn strmadmin/strmadmin@DB2 Connected. SQL> EXEC DBMS_STREAMS_ADM.SET_UP_QUEUE(); PL/SQL procedure successfully completed. 6. Setup Schema for streams Schema: SCOTT Table: Taj NOTE: Unlock scott schema because in 10g scott schema is locked by default Source Database: DB1 SQL> conn sys/manager@DB1 as sysdba Enter password: Connected. SQL> conn scott/tiger@DB1 Connected. SQL> create table TAJ ( no number primary key,name varchar2(20),ddate date); Table created. Target Database: DB2 SQL> conn sys@DB2 as sysdba Enter password: Connected. SQL> conn scott/tiger@DB2 Connected. SQL> create table TAJ ( no number primary key,name varchar2(20),ddate date); Table created. 7. Setup Supplemental logging at the source database Source Database: DB1 SQL> conn scott/tiger@DB1 Connected. SQL> alter table taj add supplemental log data (primary key,unique) columns; Table altered. 8. Configure capture process at the source database Source Database: DB1 SQL> conn strmadmin/strmadmin@DB1 Connected. SQL> begin dbms_streams_adm.add_table_rules ( table_name => 'scott.taj', streams_type => 'capture', streams_name => 'capture_stream', queue_name=> 'strmadmin.streams_queue', include_dml => true, include_ddl => true, inclusion_rule => true); end; / PL/SQL procedure successfully completed. 9. Configure the propagation process Source Database: DB1 SQL> conn strmadmin/strmadmin@DB1 Connected. begin dbms_streams_adm.add_table_propagation_rules ( table_name => 'scott.taj', streams_name => 'DB1_TO_DB2', source_queue_name => 'strmadmin.streams_queue', destination_queue_name => 'strmadmin.streams_queue@DB2', include_dml => true, include_ddl => true, source_database => 'DB1', inclusion_rule => true); end; / PL/SQL procedure successfully completed. 10. Set the instantiation system change number (SCN) Source Database: DB1 SQL> CONN STRMADMIN/STRMADMIN@DB1 Connected. SQL> declare source_scn number; begin source_scn := dbms_flashback.get_system_change_number(); dbms_apply_adm.set_table_instantiation_scn@DB2 ( source_object_name => 'scott.taj', source_database_name => 'DB1', instantiation_scn => source_scn); end; / PL/SQL procedure successfully completed. 11. Configure the apply process at the destination database Target Database: DB2 SQL> conn strmadmin/strmadmin@DB2 Connected. SQL> begin dbms_streams_adm.add_table_rules ( table_name => 'scott.taj', streams_type => 'apply', streams_name => 'apply_stream', queue_name => 'strmadmin.streams_queue', include_dml => true, include_ddl => true, source_database => 'DB1', inclusion_rule => true); end; / PL/SQL procedure successfully completed. 12. Start the capture and apply processes Source Database: DB1 SQL> conn strmadmin/strmadmin@DB1 Connected. SQL> begin dbms_capture_adm.start_capture ( capture_name => 'capture_stream'); end; / PL/SQL procedure successfully completed. Target Database: DB2 SQL> conn strmadmin/strmadmin@DB2 Connected. SQL> begin dbms_apply_adm.set_parameter ( apply_name => 'apply_stream', parameter => 'disable_on_error', value => 'n'); end; / PL/SQL procedure successfully completed. SQL> begin dbms_apply_adm.start_apply ( apply_name => 'apply_stream'); end; / PL/SQL procedure successfully completed. NOTE: Stream replication environment is ready, just needed to test it. SQL> conn scott/tiger@DB1 Connected. SQL> --DDL operation SQL> alter table taj add (flag char(1)); Table altered. SQL> --DML operation SQL> begin insert into taj values (1,'first_entry',sysdate,1); commit; end; / PL/SQL procedure successfully completed. SQL> conn scott/tiger@DB2 Connected. SQL> --TEST DDL operation SQL> desc taj Name Null? Type ----------------------------------------- -------- ---------------------------NO NOT NULL NUMBER NAME VARCHAR2(20) DDATE DATE FLAG CHAR(1) SQL> --TEST DML operation SQL> select * from taj; NO NAME DDATE F ---------- -------------------- --------- 1 first_entry 24-JAN-08 1