

End-user Web Automation: Challenges, Experiences, Recommendations

Alex Safonov, Joseph A. Konstan, John V. Carlis

Department of Computer Science and Engineering, University of Minnesota

4-192 EECS Bldg, 200 Union St SE, Minneapolis, MN 55455

{safonov,konstan,carlis}@cs.umn.edu

Abstract

The changes in the WWW, including complex data forms, personalization, persistent state and sessions, have made user interaction with it more complex. End-user Web Automation is one approach to reusing and sharing user interaction with the WWW. We categorize and discuss the challenges that Web Automation system developers face, describe how existing systems address these challenges, and propose some extensions to the HTML and HTTP standards that will make Web Automation scripts more powerful and reliable.

Keywords: Web Automation, end-user scripting, PBD

Introduction

From its first days, the World-Wide Web was more than just a vast collection of static hypertext, and its character as a Web of interactive applications is now firmly established. A significant number, if not a majority, of what we think of as "Web pages" are not stored statically but are generated on request from databases and presentation templates. Web servers check user identity, customize the delivered HTML accordingly, automatically track user sessions with cookies and session ids, etc.

Interaction with the Web has become more complex for the user, too. One has to remember and fill out user

names and passwords at sites requiring identification. Requesting information such as car reservations,

flight pricing, insurance quotes, and library book availability requires filling out forms, sometimes complex

and spanning several pages. If a user needs to repeat a request with the same or somewhat different data, it

must be entered again. We claim that there are tasks involving Web interactions that are repetitive and

tedious, and that users will benefit from reusing these interactions and sharing them. Interactions can be

reused by capturing (recording) and reproducing them. We illustrate the repetitive aspects of interacting with the Web and the potential for reusing and sharing tasks in the examples below.

A typical interaction with a Web information provider may involve navigating to the site's starting page, logging in, providing parameters for the current request (on one page, or sometimes on several), and retrieving results, such as a list of available flights or matching citations.

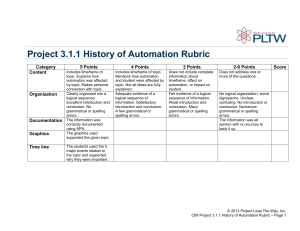

Figure 1 shows a typical session with the Ovid citation database licensed to the University of Minnesota.

The login page is bookmarked directly; no steps to navigate to it are shown.

Figure 1: A Session with the Ovid Citation Search

Since the citation database changes, the user performs the citation search multiple times, perhaps on a

regular basis. The interaction can be repeated exactly or with variations. For example, the user may be

interested in new articles by the same author and enter the same author name. Alternatively, the user may

specify different keywords or author, which can be thought of as parameters of the interaction.

Reuse of Web interaction is not limited to a single user. Consider a scenario in which a college lecturer

determines a set of textbooks for a class she is teaching. She recommends that her students purchase these

at Amazon.com. To make it easier for students to find the books and complete the purchase, she would like to be able to demonstrate the interaction with the bookstore using her browser: navigate to the Amazon.com home page, search for the desired books, and place them in a shopping cart. If these steps can be saved and replayed by students, they will instantly get a shopping cart filled with the textbooks for the class.

Citation searches, flight reservations, rental and car classifieds are all examples of hard-to-reach pages [2]

that are not identified by any bookmarkable URL, but must be retrieved by a combination of navigation and

form filling. Traditional bookmarks do not address the problems with hard-to-reach pages, since they use

static URLs to identify pages. Bookmarks are unaware of any state (typically stored in cookies) needed for

the server to generate the desired page, so sharing and remote use of bookmarks are limited. Finally,

bookmarks do not notify the user when the bookmarked page has changed in some significant way, for

example, when new citations have been added to a personal publications page.

Users can automate repetitive interactions with the Web by demonstrating actions to a system that can

capture and replay them. Tools to automate traditional desktop applications, such as word processors and

email clients, have been available for years. They range from simple macros in Microsoft Office to

sophisticated Programming By Demonstration, or End-user Programming systems that use inference,

positive and negative examples, and visual programming to generalize user actions into scripts. The WWW

is a different environment for automation compared to office applications, the main differences being the

dynamic nature of information on the Web and the fact that servers are "black boxes" for a Web client. In

this paper, we consider the challenges of automating the Web, based on our experiences developing the

WebMacros system and studying Web Automation systems.

End-user Web Automation differs from automatic Web navigation and data collection by Web crawlers, or bots, in that the former is intended to assist end users, who are not expected to have any programming experience. Developing Web crawlers, even with the libraries and tutorials available for several programming languages, still requires an understanding of programming, the HTTP protocol and HTML. End-user Web Automation also differs from the help that individual sites give users in managing hard-to-reach pages. For example, Deja.com provides a "Bookmark this thread" link on each page displaying messages in a specific newsgroup thread. With Web Automation, users should be able to create and use scripts that combine information from different sites, perhaps competing ones.

The rest of the paper is organized as follows. First, we categorize and discuss challenges in end-user Web

Automation. Second, we describe the approaches in existing Web Automation systems that address some of

these challenges. Finally, we offer recommendations for standards bodies and site designers that, if

implemented, will make Web Automation more powerful and reliable.

Web Automation Challenges

We have identified four types of challenges that developers of Web Automation systems face. The first type is caused by the fluidity of page content and structure on the WWW. The second is the difficulty Web Automation systems have in reasoning about and manipulating script execution state and side effects, since these are hidden in the Web servers. The third type stems from the WWW being an almost exclusively human-oriented information repository: Web pages are designed to be understandable and usable by humans, not by scripts. Finally, the fourth type lies in the need to make Web Automation systems more flexible by specifying and using parameters in scripts.

Dealing with Change

Position-dependent and Context-dependent References

Because many sites use references that are defined by their position and context on a page, Web

Automation systems cannot always use fixed URLs to access pages. For example, the desired page may be

pointed to by the second link in a numbered list with the heading "Current Events"; the URL in the link

may change on a revisit. On the other hand, a reference with a fixed URL and context may point to a page

that gets updated on a regular basis. Examples include many "current stories" sections of online

newspapers, as well as eBay's Featured Items (http://listings.ebay.com/aw/listings/list/featured/index.html).

When Web Automation scripts execute, following such a URL will lead to a different page. The user may

intend to use the current information; however, it is also possible that the user is interested in the content

that existed when the macro was recorded.

Unrepeatable Navigation Sequences

Many sites use URLs and hidden form inputs that contain expiring and randomly generated values.

Avis.com, the Web site of the car rental company, and Ovid citation search both use hidden form inputs

that are generated from the current time when pages are requested. If page requests are repeated after a certain period of time, both sites detect the expired session and return the login page. Amazon.com generates random tokens on the first page request and uses them in the URLs of all subsequently requested pages. The implication for a Web Automation system is that scripts authored by recording a user's navigation cannot be replayed verbatim: expiring and random values must be identified and their current values regenerated.
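One simple way to find such values, offered here only as a hedged sketch and not as the method of any system discussed in this paper, is to fetch the same form page twice and compare its hidden inputs: fields whose values change between fetches are candidates for regeneration. The function names and the sample field names (`sid`, `db`) are hypothetical, and the heuristic misses values that happen to repeat or that change only across sessions.

```python
from html.parser import HTMLParser


class HiddenInputCollector(HTMLParser):
    """Collects name -> value for every <input type="hidden"> on a page."""

    def __init__(self):
        super().__init__()
        self.fields = {}

    def handle_starttag(self, tag, attrs):
        if tag != "input":
            return
        a = dict(attrs)  # HTMLParser lower-cases tag and attribute names
        if (a.get("type") or "").lower() == "hidden" and a.get("name"):
            self.fields[a["name"]] = a.get("value") or ""


def hidden_fields(html):
    parser = HiddenInputCollector()
    parser.feed(html)
    parser.close()
    return parser.fields


def volatile_fields(first_html, second_html):
    """Hypothetical heuristic: hidden fields whose values differ between two fetches."""
    first, second = hidden_fields(first_html), hidden_fields(second_html)
    return sorted(name for name in first.keys() & second.keys()
                  if first[name] != second[name])


# Two fetches of the same (made-up) search form a few minutes apart.
page_t1 = ('<form><input type="hidden" name="sid" value="8812">'
           '<input type="hidden" name="db" value="medline"></form>')
page_t2 = ('<form><input type="hidden" name="sid" value="9034">'
           '<input type="hidden" name="db" value="medline"></form>')
print(volatile_fields(page_t1, page_t2))  # ['sid']
```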

Reasoning about State

Cookie Context Dependence

HTTP was designed as a stateless protocol; however, the cookie extension allows servers to store state

between HTTP requests. Pages retrieved by Web Automation scripts depend on the context stored in cookies on the user's computer.¹ For example, Yahoo! Mail uses cookies with an expiration date far in the future to identify the user, and cookies valid for several hours for the current login session. Depending on which cookies are available, retrieving the URL http://mail.yahoo.com may produce a login screen for the current user, a login screen for a new user, or the contents of the mailbox.

¹ Other context elements include the browser preferences in effect when the script was authored, and the browser identity.

Ill-defined Side Effects

RFC 2068 specifies that the HTTP GET, HEAD, PUT and DELETE request methods are idempotent, that is, the side effects of multiple identical requests are the same as those of a single request. However, there are no

standards for describing side effects of other request methods. Unlike a human who reads and understands

information on a page, a Web Automation system does not have information on what side effects can be

potentially caused by executing a script, or a specific step in a script. Examples of actions on the Web with

side effects include:

- purchasing goods or services, with the user's credit card charged (travel services at travelocity.com)
- creating a new account with an online service, when the user's email address is disclosed to that service
- the server determining user identity (typically from cookies) and updating its customer history database

Clearly, a Web Automation system must not repeatedly execute a script that results in the user being charged, unless this was the user's intention.
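A replay engine can at least use the guarantee RFC 2068 does give. The sketch below, a hypothetical illustration rather than a feature of any system described here, flags every recorded step whose method is not in the idempotent set so the user can confirm it before a repeat run. It cannot tell what a POST actually does, which is exactly the missing information discussed above, and idempotence does not make the first request free of side effects: a GET may still update a customer history database. The `ScriptStep` type and the example URLs are assumptions.

```python
from dataclasses import dataclass

# Methods that RFC 2068 (section 9.1.2) lists as idempotent.
IDEMPOTENT_METHODS = {"GET", "HEAD", "PUT", "DELETE"}


@dataclass
class ScriptStep:
    """Hypothetical representation of one recorded request."""
    method: str
    url: str


def steps_needing_confirmation(steps):
    """Steps whose repetition is not covered by the idempotence guarantee."""
    return [s for s in steps if s.method.upper() not in IDEMPOTENT_METHODS]


script = [
    ScriptStep("GET", "http://www.example.com/flights?dest=MSP"),
    ScriptStep("POST", "http://www.example.com/purchase"),
]
for step in steps_needing_confirmation(script):
    print("confirm before replaying:", step.method, step.url)
```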

Overcoming Information Opaqueness

Navigation Success or Failure not Machine-readable

A human can determine from the login failure that she has typed an incorrect name or password, and repeat

the login procedure. For a Web Automation system, a retrieved page is not labeled with "Login successful,

ok to proceed", or "Login failed, please retry", or "We have been acquired by another company, please

click here to proceed to their Web site". It is not trivial to program a Web Automation system to detect such

conditions. Reacting to them correctly is even more challenging. Another example is when no results are

returned in response to a query. A Web Automation script must detect this condition and not attempt to

extract non-existent results from such a page.

Machine-opaque Presentation Media

Page contents can be in a format that is hard or impossible to parse for a Web Automation script, including

images and imagemaps, browser-generated content (client-side scripting), plugins and Java Applets.

Parameterization of Web Automation Scripts

The power of a Web Automation system increases if its scripts support parameters. For example, a user

may be interested in obtaining the best airfare to a specific city, but is somewhat flexible on the travel

dates. Such a user is likely to repeat the same interactions with the online reservation service, supplying

different travel dates. In this case, departure and return dates are reasonable parameters for a script

automating this interaction. The destination airport, airline and seating preferences and all other

information the user can specify are likely to remain constant. A Web Automation system becomes more capable if it can distinguish information that specifies what the user wants (destination city for airline reservations, author name for a citation search) from information that identifies the user to the provider (user name, password).

Approaches for Web Automation

Dealing with Change: Dynamic Content and Structure of Web Pages

AgentSoft's LiveAgent [4] was an early proprietary Web Automation system. LiveAgent scripts are authored by the user demonstrating navigation and form-filling actions. LiveAgent introduced HPDL, an HTML Position Definition Language that described the link to be followed in terms of its absolute URL, or its number on the page, its text label, and a regular expression on its URL. It was the responsibility of the user demonstrating the script to specify the correct HPDL in a graphical dialog.
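The combination of criteria that HPDL expressed can be sketched as follows. This is not HPDL syntax, which is not reproduced here; `LinkSpec` and `find_link` are hypothetical names. An absolute URL, when given, is checked on its own, while the position, text label, and URL pattern must all match when present.

```python
import re
from dataclasses import dataclass
from html.parser import HTMLParser
from typing import Optional


class LinkCollector(HTMLParser):
    """Collects (href, anchor text) for every <a href=...> in document order."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href")
        if tag == "a" and href:
            self._href, self._text = href, []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, " ".join("".join(self._text).split())))
            self._href = None


@dataclass
class LinkSpec:
    """Hypothetical HPDL-like description; fields left as None are not checked."""
    absolute_url: Optional[str] = None
    position: Optional[int] = None     # 1-based index among all links on the page
    text: Optional[str] = None         # exact anchor text
    url_pattern: Optional[str] = None  # regular expression searched in the href


def find_link(html, spec):
    """Return the href of the first link satisfying `spec`, or None."""
    parser = LinkCollector()
    parser.feed(html)
    parser.close()
    for index, (href, text) in enumerate(parser.links, start=1):
        if spec.absolute_url is not None:
            if href == spec.absolute_url:
                return href
            continue
        if spec.position is not None and index != spec.position:
            continue
        if spec.text is not None and text != spec.text:
            continue
        if spec.url_pattern is not None and not re.search(spec.url_pattern, href):
            continue
        return href
    return None


# A "Current Events"-style list whose story ids change from day to day.
page = ('<ol><li><a href="/story?id=101">Budget passes</a></li>'
        '<li><a href="/story?id=102">Storm warning</a></li></ol>')
print(find_link(page, LinkSpec(position=2, url_pattern=r"^/story\?id=\d+$")))  # /story?id=102
```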

Turquoise [5] was one of the first "web clipping" systems, allowing users to author composite pages by

specifying regions of interest in source pages. Turquoise has a heuristically chosen database of HTML

pattern templates, such as "the first <HTML Element> in <URL> after <literal-text>". Patterns describing

user-selected regions on a page are matched against the pattern template database. For example, a page

region corresponding to the first HTML table on a page may match the template above, by instantiating the

pattern "the first TABLE in http://xyz.org after Contents". The template matching and instantiation

algorithm, along with the carefully crafted database of pattern templates, allows Turquoise to author

composite pages that are robust with respect to changes in source pages.
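To make the template idea concrete, the sketch below instantiates a single pattern of the form "the first <element> after <literal-text>" on a page that has already been fetched. It is a hypothetical simplification, not Turquoise's implementation: there is no template database, the literal must occur within one text node, and the extracted region is returned as normalized text rather than HTML.

```python
from html.parser import HTMLParser


class FirstElementAfter(HTMLParser):
    """Captures the text of the first <tag> that opens after `literal` appears in page text."""

    def __init__(self, tag, literal):
        super().__init__()
        self.tag, self.literal = tag.lower(), literal
        self.seen_literal = False
        self.found = False
        self.depth = 0      # nesting depth of `tag` inside the captured element
        self.done = False
        self.captured = []

    def handle_starttag(self, tag, attrs):
        if self.done or tag != self.tag:
            return
        if self.depth:
            self.depth += 1
        elif self.seen_literal:
            self.found, self.depth = True, 1

    def handle_endtag(self, tag):
        if self.depth and tag == self.tag:
            self.depth -= 1
            self.done = self.depth == 0

    def handle_data(self, data):
        if self.done:
            return
        if self.depth:
            self.captured.append(data)
        elif self.literal in data:
            self.seen_literal = True


def first_element_after(html, tag, literal):
    """Hypothetical helper: normalized text of the matched element, or None."""
    parser = FirstElementAfter(tag, literal)
    parser.feed(html)
    parser.close()
    if not parser.found:
        return None
    return " ".join(" ".join(parser.captured).split())


page = ("<table><tr><td>Nav</td></tr></table>"
        "<h2>Contents</h2><p>intro</p>"
        "<table><tr><td>Ch. 1</td><td>Basics</td></tr></table>"
        "<table><tr><td>Index</td></tr></table>")
print(first_element_after(page, "table", "Contents"))  # Ch. 1 Basics
```

Note that the navigation table before the heading is skipped, which is the point of anchoring the pattern on literal text rather than on a fixed position.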

Internet Scrapbook [9] is another "web clipping" system, designed primarily for authoring personal news

pages from on-line newspapers and other news sources. Instead of using pattern templates, it uses the

heading and the position patterns of the user-selected region to describe it, so it is in theory less general

than Turquoise. Internet Scrapbook uses heuristics to perform partial matching of saved heading and

position patterns with updated content. An evaluation on several hundred Web pages randomly chosen from Yahoo! categories showed that extraction worked correctly on 88.4% of updated pages, and on 96.5% with learning from user hints.
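Partial matching of a saved heading can be approximated, for illustration only, with the standard library's `difflib.SequenceMatcher`. The scoring below (text similarity first, then closeness to the saved position), the function name, and the 0.6 cut-off are assumptions of this sketch; Internet Scrapbook's actual heuristics are described in [9].

```python
from difflib import SequenceMatcher


def best_heading_match(saved_heading, saved_index, headings, min_ratio=0.6):
    """Hypothetical matcher: index of the heading that best matches a saved one, or None.

    Candidates are scored by case-insensitive text similarity; ties are broken
    by closeness to the heading's saved position on the page.
    """
    best, best_key = None, None
    for i, heading in enumerate(headings):
        ratio = SequenceMatcher(None, saved_heading.lower(), heading.lower()).ratio()
        if ratio < min_ratio:
            continue
        key = (ratio, -abs(i - saved_index))
        if best_key is None or key > best_key:
            best, best_key = i, key
    return best


# Headings of an updated news page; "Business News" was renamed and moved.
updated = ["Top Stories", "Weather", "Business & Economy News", "Sports"]
print(best_heading_match("Business News", 1, updated))  # 2
```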

WebVCR [2] is an applet-based system for recording and replaying navigation. The developers of

WebVCR acknowledged the problems with replaying recorded actions verbatim. WebVCR stores the text

and Document Object Model (DOM) index of each link and form navigated during script recording. During

replay, a heuristic algorithm matches the link's text and DOM index against the actual page. Of the systems discussed here, WebVCR is the only one that attempts to account for time-based and random URLs and form fields in its matching algorithm. Though [2] does not discuss evaluation results, the matching algorithm in WebVCR is less generic than those in Internet Scrapbook and especially Turquoise.

Reasoning About State

WebMacros ([6], [7]) is a proxy-based personal Web Automation system we developed. One of the goals

for WebMacros was to share Web Automation scripts among users, and to execute them from any

computer. This required the ability to encapsulate context, in the form of cookies, with a recorded script. WebMacros scripts can be recorded in two modes. In "safe" mode, no existing user cookies are sent to the Web (but new cookies received during script demonstration are). Safe mode is appropriate when a script will be played back from a different computer or by another user. For example, the script to populate an Amazon.com shopping cart with course textbooks should be recorded by the instructor in safe mode, since it will be replayed by students who should not have access to the instructor's private information stored in her cookies. In "open" mode, existing cookies are used, which may allow a shorter script to be created if, for instance, a user login step can be skipped.

When a user plays a WebMacros script recorded in open mode, she can select whether her browser cookies, the script cookies, or both are used. If both are used, the user can choose their priority. We acknowledge that having to select cookie options can be confusing to users, so WebMacros provides reasonable defaults.
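The cookie rules of the two recording modes and the playback choice can be sketched as follows, under simplifying assumptions: cookies are plain name-to-value maps (domains, paths and expiry are ignored), and the function names, parameters and sample cookie names are hypothetical rather than WebMacros' actual interface.

```python
from enum import Enum


class RecordMode(Enum):
    SAFE = "safe"
    OPEN = "open"


def cookies_sent_while_recording(mode, browser_cookies, received_during_demo):
    """Safe mode sends only cookies received during the demonstration;
    open mode also sends the user's existing browser cookies."""
    if mode is RecordMode.SAFE:
        return dict(received_during_demo)
    # Assumption: a cookie set during the demonstration replaces a browser cookie of the same name.
    return {**browser_cookies, **received_during_demo}


def cookies_for_playback(browser_cookies, script_cookies,
                         use_browser=True, use_script=True, prefer="script"):
    """Hypothetical merge of the selected sources; on a name clash the preferred source wins."""
    sources = []
    if use_browser:
        sources.append(("browser", browser_cookies))
    if use_script:
        sources.append(("script", script_cookies))
    # Apply the preferred source last so that its values overwrite the other's.
    sources.sort(key=lambda source: source[0] == prefer)
    merged = {}
    for _, jar in sources:
        merged.update(jar)
    return merged


browser = {"SESSIONID": "mine", "PREFS": "large-font"}
script = {"SESSIONID": "recorded"}
print(cookies_sent_while_recording(RecordMode.SAFE, browser, {"CART": "17"}))  # {'CART': '17'}
print(cookies_for_playback(browser, script, prefer="browser"))
# {'SESSIONID': 'mine', 'PREFS': 'large-font'}
```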

Overcoming Information Opaqueness

Verifying the results of script playback is important, considering the dynamic nature of information on the WWW. A limited form of machine-readable status reporting is built into HTTP response codes. However, a page returned by a server can differ from the one expected by a Web Automation system and yet not carry an error response code. For example, in response to a query a server may return a human-readable page explaining that the session has expired and the user needs to log in again, or that no results match the query. Since no explicit machine-readable notification of query failure is provided, a Web Automation system must resort to natural language processing of page content, or reason about the HTML structure of the returned page.
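As an illustration of how crude the natural-language route is, the sketch below combines the status code with a few regular expressions over the page text. The phrase list, the function name and the three labels are hypothetical; real sites word these messages in many different ways, so such a list is brittle.

```python
import re

# Hypothetical phrases; real sites would need site-specific or learned rules.
FAILURE_PATTERNS = [
    r"session (has )?expired",
    r"no (results|matches|documents) (were )?found",
    r"(invalid|incorrect) (user ?name|password)",
]


def classify_response(status, page_text):
    """Label one replayed step as 'unexpected-status', 'suspected-failure', or 'ok'."""
    if not 200 <= status < 300:
        return "unexpected-status"
    text = page_text.lower()
    if any(re.search(pattern, text) for pattern in FAILURE_PATTERNS):
        return "suspected-failure"
    return "ok"


print(classify_response(200, "Your session has expired. Please log in again."))
# suspected-failure
```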

To verify that a page retrieved by a script step is the desired one, WebMacros builds a compact

representation of the HTML markup of each page as a script is being recorded. At playback, WebMacros

compares the recorded and retrieved pages based on their HTML structure [8]. Page structure is represented

as the set of all paths in the HTML parse tree leading to text elements on the page. Path expressions are enhanced with some tag attributes, such as font color and size, and with "pseudo-attributes", such as the column number for a <td> tag. For compactness, path expressions are hashed into 64-bit fingerprints using irreducible polynomials [1].
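A path-set representation along these lines can be sketched with Python's standard `html.parser`. Two substitutions are ours and are labeled in the code: the 64-bit fingerprints come from `hashlib.blake2b` with an 8-byte digest instead of the Rabin fingerprints of [1], and only `color` and `size` attributes plus a hypothetical `col` pseudo-attribute on `<td>` are recorded. Malformed HTML is handled only crudely, by popping to the nearest matching open tag.

```python
import hashlib
from html.parser import HTMLParser

# Elements that never have an end tag, so they are not pushed on the path stack.
VOID_TAGS = {"area", "base", "br", "col", "hr", "img", "input", "link", "meta", "param", "wbr"}
KEPT_ATTRS = ("color", "size")  # assumption: the attributes kept in a path step


class PathCollector(HTMLParser):
    """Collects the root-to-text tag path for every non-blank text node."""

    def __init__(self):
        super().__init__()
        self.stack = []    # (tag, rendered path step) for each open element
        self.columns = []  # <td> counter for each open <tr>
        self.paths = set()

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        step = tag + "".join(f"[{k}={a[k]}]" for k in KEPT_ATTRS if a.get(k))
        if tag == "tr":
            self.columns.append(0)
        elif tag == "td" and self.columns:
            self.columns[-1] += 1
            step += f"[col={self.columns[-1]}]"  # column-number pseudo-attribute
        if tag not in VOID_TAGS:
            self.stack.append((tag, step))

    def handle_endtag(self, tag):
        if all(open_tag != tag for open_tag, _ in self.stack):
            return  # stray end tag
        while self.stack:
            open_tag, _ = self.stack.pop()
            if open_tag == "tr" and self.columns:
                self.columns.pop()
            if open_tag == tag:
                break

    def handle_data(self, data):
        if data.strip():
            self.paths.add("/".join(step for _, step in self.stack))


def fingerprint(path):
    # Substitution: BLAKE2b truncated to 64 bits, not a Rabin fingerprint.
    digest = hashlib.blake2b(path.encode("utf-8"), digest_size=8).digest()
    return int.from_bytes(digest, "big")


def page_signature(html):
    parser = PathCollector()
    parser.feed(html)
    parser.close()
    return {fingerprint(p) for p in parser.paths}


# Two pages from the same (made-up) template with different data.
page_a = "<table><tr><td>Title</td><td><font color=red>Ovid</font></td></tr></table>"
page_b = "<table><tr><td>Author</td><td><font color=red>Medline</font></td></tr></table>"
print(page_signature(page_a) == page_signature(page_b))  # True
```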

WebMacros determines the similarity of two pages based on the relative overlap of their path expression sets. This similarity measure depends only on HTML structure, not on content, which means that two pages generated from the same presentation template with different data will be highly similar. Our initial experiments indicate that threshold values of 0.5 to 0.6 reliably distinguish pages with similar structure and different content (as will be the case with template-generated pages) from all other pages. This allows WebMacros to verify the results of script playback and alert the user if an unexpected page is retrieved. We are conducting additional experiments on clustering pages and identifying page type based on structure, using the large e-commerce sites eBay and Amazon.
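The comparison step can be sketched as follows. "Relative overlap" is implemented here as the Jaccard coefficient, which is our assumption rather than the exact formula used in WebMacros, and the 0.55 default is simply a value inside the 0.5 to 0.6 range reported above. Small integers stand in for the 64-bit path fingerprints, and the function names are hypothetical.

```python
def structural_similarity(sig_a, sig_b):
    """Relative overlap of two fingerprint sets, taken here as len(A & B) / len(A | B)."""
    if not sig_a and not sig_b:
        return 1.0
    return len(sig_a & sig_b) / len(sig_a | sig_b)


def looks_like_recorded_page(recorded, retrieved, threshold=0.55):
    """True if the retrieved page appears to come from the recorded page's template."""
    return structural_similarity(recorded, retrieved) >= threshold


recorded = {1, 2, 3, 4, 5}        # stand-ins for fingerprints of the recorded page
same_template = {1, 2, 3, 4, 6}   # same layout, one differently styled text element
login_page = {1, 7, 8, 9}         # an unexpected "please log in again" page
print(structural_similarity(recorded, same_template))  # 0.6666666666666666
print(looks_like_recorded_page(recorded, login_page))  # False
```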

Parameterization of Web Automation Scripts

Both WebVCR and WebMacros support authoring parametric scripts, with form values as parameters. In

WebMacros, all input and select elements are constant by default: their values cannot be overridden when a script is played. However, when a user is demonstrating a script, she can select the "Variable" or "Private" radio buttons that WebMacros adds to each form element. At playback, Variable parameters use the recorded values as defaults, but these can be modified when a user plays a WebMacros script in interactive mode. Private parameters must be specified by the user at playback; by default, WebMacros assumes that PASSWORD inputs are private.
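The three parameter kinds can be modeled as follows. This is a hypothetical sketch of the behaviour described above, not WebMacros code: the `Kind` enum, `resolve_form_values`, and the sample field names are ours, and a private value missing at playback is treated as an error.

```python
from enum import Enum


class Kind(Enum):
    CONSTANT = "constant"
    VARIABLE = "variable"
    PRIVATE = "private"


def default_kind(input_type):
    """PASSWORD inputs default to private; every other element starts out constant."""
    return Kind.PRIVATE if input_type.lower() == "password" else Kind.CONSTANT


def resolve_form_values(fields, overrides=None, private_values=None):
    """Hypothetical resolver: fields maps name -> (recorded value, Kind)."""
    overrides = overrides or {}
    private_values = private_values or {}
    values = {}
    for name, (recorded, kind) in fields.items():
        if kind is Kind.CONSTANT:
            values[name] = recorded  # cannot be overridden
        elif kind is Kind.VARIABLE:
            values[name] = overrides.get(name, recorded)  # recorded value is the default
        else:
            if name not in private_values:
                raise ValueError(f"private parameter {name!r} must be supplied at playback")
            values[name] = private_values[name]
    return values


fields = {
    "author": ("Konstan J", Kind.VARIABLE),
    "database": ("MEDLINE", default_kind("select")),
    "password": (None, default_kind("PASSWORD")),  # private values are not stored
}
print(resolve_form_values(fields, overrides={"author": "Carlis J"},
                          private_values={"password": "s3cret"}))
# {'author': 'Carlis J', 'database': 'MEDLINE', 'password': 's3cret'}
```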

How Standards Committees and Site Designers Can Help Web Automation Developers

In the previous two sections, we described the challenges of Web Automation and how existing systems

addressed some of them. In this section, we offer recommendations for the standards bodies and Web site

designers that should make Web Automation systems more reliable and powerful. The general approach we

recommend is to extend the HTTP protocol and the HTML standard with optional header fields and tag

attributes that provide additional information to Web Automation scripts. We believe that extending the

standards with optional elements is less intrusive to site designers and can be more easily adopted than the

switch to an XML representation.

Dealing with Change

Mark references to updateable resources

To inform a Web Automation script that a URL points to a page that gets updated on a regular basis, a special attribute should be added to the <A> tag. This attribute, perhaps named "Updateable", will take on boolean

values. Additional attributes can specify update frequency and the time of the last and next expected

update, if known.

Mark expiring and random values

Random and expiring hidden fields can be marked by adding the boolean "MustRegenerate" attribute to the

<INPUT> tag. With this information, the Web Automation system will know that for this field the current

value, rather than the recorded one, should be used. An additional "RegenerateUrl" attribute may point the

Web Automation system to the URL at which the server regenerates the random or expiring values. A

similar approach works for random and time-based tokens in URLs: for these, the HTTP reply should contain a "Regenerate-Url" header field.
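If servers adopted the proposed attribute, a replay engine could merge recorded form values with freshly served ones as sketched below. `MustRegenerate` is only the proposal above, not an existing HTML attribute; here it is treated like an HTML boolean attribute (its presence means true), fetching the fresh page is left out, `RegenerateUrl` is not handled, and the function and field names are hypothetical.

```python
from html.parser import HTMLParser


class MarkedInputs(HTMLParser):
    """Collects current values of <input> elements carrying the proposed MustRegenerate attribute."""

    def __init__(self):
        super().__init__()
        self.current = {}

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)  # HTMLParser lower-cases names, so MustRegenerate -> "mustregenerate"
        if tag == "input" and a.get("name") and "mustregenerate" in a:
            self.current[a["name"]] = a.get("value") or ""


def replay_values(recorded, fresh_page_html):
    """Recorded form values, except marked fields, which take their freshly served values."""
    parser = MarkedInputs()
    parser.feed(fresh_page_html)
    parser.close()
    return {**recorded, **parser.current}


recorded = {"sid": "8812", "author": "Konstan J"}
fresh = ('<form><input type="hidden" name="sid" value="9034" MustRegenerate>'
         '<input type="text" name="author" value=""></form>')
print(replay_values(recorded, fresh))  # {'sid': '9034', 'author': 'Konstan J'}
```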

Include date of page template modification

For dynamically generated pages, either the HTTP reply header, or the <HEAD> section, should designate

the date of the last template modification. A Web Automation script will be able to ascertain that the same

template is used during recording and playback, so script rules for extracting information from a page still

apply. This is different from the information in the "Last-Modified" header, which refers to the page itself

and not its template.

Reasoning About State

Annotate forms with side effect info

We propose adding a "SideEffect" attribute to the HTML <FORM> tag. The possible values for this

attribute include CardCharged, CardDisclosed, ListSubscribed, and EmailDisclosed. This attribute can then

be examined by a Web Automation script, so that it does not perform actions with significant side effects

without notifying the user.
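A script could consult the proposed attribute before submitting a form, as in the hypothetical sketch below. Two conventions are assumptions, not part of the proposal: several side effects may be listed separated by spaces, and an explicitly empty `SideEffect` value means "no side effects", while a form without the attribute is treated conservatively as needing confirmation. The class and function names are ours.

```python
from html.parser import HTMLParser


class FormSideEffects(HTMLParser):
    """Maps each <form> action to its declared side effects (None if undeclared)."""

    def __init__(self):
        super().__init__()
        self.forms = {}

    def handle_starttag(self, tag, attrs):
        if tag == "form":
            a = dict(attrs)  # attribute names arrive lower-cased: "sideeffect"
            raw = a.get("sideeffect")
            self.forms[a.get("action") or ""] = None if raw is None else set(raw.split())


def should_confirm(html, action):
    """Ask the user unless the form explicitly declares no side effects."""
    parser = FormSideEffects()
    parser.feed(html)
    parser.close()
    effects = parser.forms.get(action)
    return effects is None or bool(effects)


page = ('<form action="/buy" SideEffect="CardCharged EmailDisclosed"></form>'
        '<form action="/search" SideEffect=""></form>')
print(should_confirm(page, "/buy"), should_confirm(page, "/search"))  # True False
```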

Identify actions to regenerate cookies

If a server requires the user or session to be identified using cookies, it should provide a URL at which

these cookies can be regenerated. The server can send a "Cookie-Required" header with the reply,

identifying the cookies that must be sent with the request to complete it, and the URLs at which these

cookies can be regenerated. The header will have the following form: "Cookie-Required: SESSIONID;

URL=http://www.abc.org/startsession.html".
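Parsing the proposed header is straightforward. The sketch below is hypothetical: `Cookie-Required` is the proposal above, not a registered HTTP header, and the sketch assumes one cookie per header value and no semicolons inside the URL. With Python's `http.client`, all values of a repeated header could be read with `response.headers.get_all("Cookie-Required")`.

```python
from typing import NamedTuple


class CookieRequirement(NamedTuple):
    cookie_name: str
    regenerate_url: str


def parse_cookie_required(value):
    """Parse one proposed Cookie-Required value, e.g. 'SESSIONID; URL=http://...'."""
    name, _, rest = value.partition(";")
    params = {}
    for item in rest.split(";"):
        if not item.strip():
            continue
        key, _, val = item.strip().partition("=")
        params[key.strip().lower()] = val.strip()
    if not name.strip() or "url" not in params:
        raise ValueError(f"malformed Cookie-Required value: {value!r}")
    return CookieRequirement(name.strip(), params["url"])


def urls_to_visit(requirements, cookie_jar):
    """Hypothetical helper: regeneration URLs for required cookies the script does not hold."""
    return [r.regenerate_url for r in requirements if r.cookie_name not in cookie_jar]


req = parse_cookie_required("SESSIONID; URL=http://www.abc.org/startsession.html")
print(urls_to_visit([req], cookie_jar={}))  # ['http://www.abc.org/startsession.html']
```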

Conclusion

Why should site designers consider Web Automation systems? We believe that, as the complexity of Web technologies and applications grows, users will turn to personal Web Automation as one of the tools that simplify use of the Web. Sites that cannot be easily automated by end users will be at a disadvantage

compared to the sites that are automation-friendly. We are developing a set of scripts that take HTML

pages or HTML templates as input, and annotate the HTML tags with the attributes we propose above.

Using a simple graphical interface similar to "Search-and-Replace", a site administrator will be able to

override default values of added attributes where appropriate.

We expect that some content providers, such as those providing real-time stock data, will not make their

sites easy to automate (as they already obfuscate content by, for instance, returning stock quotes as images

rather than text). We target our recommendations at sites for which the benefits of automation-friendliness

outweigh the potential drawbacks.

References

[1] Andrei Broder. Some applications of Rabin's fingerprinting method. In R. Capocelli, A. De Santis, and U. Vaccaro, editors, Sequences II: Methods in Communications, Security, and Computer Science, pages 143-152. Springer-Verlag, 1993.

[2] J. Freire, V. Anupam, B. Kumar, D. Lieuwen. Automating Web Navigation with the WebVCR. Proceedings of the 9th International World Wide Web Conference, Amsterdam, Netherlands, May 2000.

[3] T. Kistler and H. Marais. WebL - A Programming Language for the Web. Proceedings of the 7th International World Wide Web Conference, Brisbane, Australia, April 1998.

[4] Bruce Krulwich. Automating the Internet: agents as user surrogates. IEEE Internet Computing, July-August 1997.

[5] Robert C. Miller and Brad A. Myers. Creating Dynamic World Wide Web Pages By Demonstration. Carnegie Mellon University School of Computer Science Tech Report CMU-CS-97-131, May 1997.

[6] Alex Safonov, Joseph Konstan, John Carlis. Towards Web Macros: a Model and a Prototype System for Automating Common Tasks on the Web. Proceedings of the 5th Conference on Human Factors & the Web, Gaithersburg, MD, June 1999.

[7] Alex Safonov, Joseph Konstan, John Carlis. Beyond Hard-to-Reach Pages: Interactive, Parametric Web Macros. Proceedings of the 7th Conference on Human Factors & the Web, Madison, WI, June 2001.

[8] Alex Safonov, Hannes Marais, Joseph Konstan. Automatically Classifying Web Pages Based on Page Structure. Submitted to ACM Hypertext 2001.

[9] A. Sugiura and Y. Koseki. Internet Scrapbook: Automating Web Browsing Tasks by Demonstration. Proceedings of the ACM Symposium on User Interface Software and Technology, 1998.