RISC Pipeline Homework: Timing, Forwarding, Hazards

advertisement

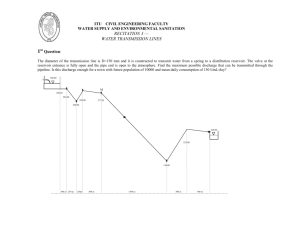

Homework 2 Due March 26 1 Use the following code fragment: Loop: LD R1, 0(R2) DADDI R1, R1, #1 SD 0(R2), R1 DADDI R2, R2, #4 DSUB R4, R3, R2 BNEZ R4, Loop ; load R1 from address 0+R2 ; R1=R1+! ; store R1 at address 0+R2 ; R2=R2+$ ; R4=R3-R2 ; branch to Loop if R4!=0 Assume that the initial value of R3 = R2+396. Throughout this exercise use the classic RISC five-stage integer pipeline and assume all memory accesses take 1 clock cycle. a. Show the timing of this instruction sequence for the RISC pipeline without any forwarding or bypassing hardware but assuming a register read and a write in the same clock cycle. Use a pipeline timing chart like Figure A.10. Assume that the branch is handled by simply stall the pipeline, and the branch condition checking and target address calculation are performed in the second stage. How many cycles does this loop take to execute? b. Show the timing of this instruction sequence for the RISC pipeline with normal forwarding and bypassing hardware. Assume that the branch is handled by predicting it as not taken. How many cycles does this loop take to execute? 2. We will now add support for register-memory ALU operations to the classic five-stage RISC pipeline. To offset this increase in complexity, all memory addressing will be restricted to register indirect (i.e., all addresses are simply a value held in a register: no offset or displacement may be added to the register value). For example, the registermemory instruction ADD R4, R5, (R1) means add the contents of register R5 to the contents of the memory location with address equal to the value in register R1 and put the sum in register R4. Register-register ALU operations are unchanged. Answer the following for integer RISC pipeline. a. List a rearranged order of the five traditional stages of the RISC pipeline that will support register-memory operations implemented exclusively by register indirect addressing. b. For the reordered stages of the RISC pipeline, what new data hazards are created by this addressing mode? Give an instruction sequence illustrating each new hazard. 3. Consider the following MIPS assembly code. LD R1, 45(R2) DADD R7, R1, R5 DSUB R8, R1, R6 OR R9, R5, R1 BNEZ R7, target DADD R10, R8, R5 XOR R2, R3, R4 a. Identify each dependence by type (data or control); list the two instructions involved; identify which instruction is dependent; and, if there is one, name the storage location involved. Hint: this question just checks the dependence between two instructions (not just two consecutive instructions). It has nothing to do with pipelining. Do not assume that this code will be executed in a five-stage pipeline. Please use a table to list all dependence. Below is an example Dependence 1 2 3 . . Data Data Independent instruction LD R1,… LD R1,… Dependent instruction DADD R7, .. DSUB Storage location R1 R1 b. Use information about the MIPS five-stage pipeline from Appendix A and assume a register file that writes in the first half of the clock cycle and reads in the second half-cycle forwarding. Which of the dependences that you found in part (a) become hazards and which do not? Why? Hint: I would like to see answers like the following: From the table in part (a), dependences #?, #?, .. and #? will (or will not) become hazards since … You may need to consider the cases with forwarding and without forwarding. 4. The following loop computes Y[i] = a X[i] + Y[i], the key step in a Gaussian elimination. Assume the pipeline latencies from Figure 2.2 (or the following table) and a 1-cycle delayed branch. Loop: L.D MUL.D L.D ADD.D S.D DSUBUI DSUBUI BNEZ F0, 0(R1) F0, F0, F2 F4, 0(R2) F0, F0, F4 0(R2),F0 R1, R1,#8 R2, R2, #8 R1,loop ; load X[i] ; multiply a*X[i] ; load Y[i] ;add a*X[i]+Y[i} ;store Y[i] ;decrement X index ;decrement Y index ; loop if not done Assume a single-issue pipeline. Unroll the loop as many times as necessary to schedule it without any delays, collapsing the loop overhead instructions. Show the schedule. What is the execution time per element? Hint: you need to unroll the loop twice. “per element” means “per iteration”.