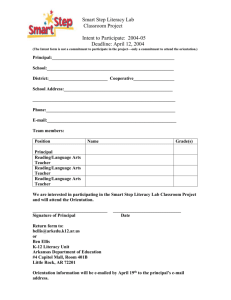

Poster

advertisement