Summer07_Topic4 - The University of Texas at Austin


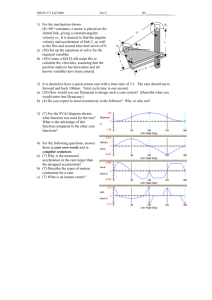

EEw382V ADVANCED PROJECTS I (ECD)
SUMMER 2007
MAYANK GUPTA
“TOPIC 4 – CIRCUITS & DATAPATH”

INTRODUCTION

At its very simplest, a microprocessor can be thought of as an assembly of distinct functional units: input/output, memory, a datapath built around an arithmetic and logic unit (ALU), and control [1]. The datapath performs the actual data processing and is directly critical to the performance and throughput of the microprocessor. It consists of a pipelined flow of fixed and floating point execution units that perform addition, multiplication, logical operations, etc.; register files (a type of memory); and other components such as multiplexers, wires, buses and buffers that make up the interconnections.

The modern approach to microprocessor design strives for higher computational throughput at lower active/static power and smaller area. This approach must manifest itself in the design of the basic units of the microprocessor as well. Circuit designers and architects must therefore leverage existing technologies and inventive circuit topologies to achieve the most energy and area efficient performance in the combinational and sequential logic that makes up the microprocessor, especially the datapath [2].

A lot of research has gone into innovative circuits and architectures to create power efficient datapaths and memories. Designers must balance high performance against leakage, noise, wiring density and clock generation complexities at the circuit and transistor level; develop new architectures to target the right applications; and implement the underlying functional blocks efficiently. This compounds the challenges of design for test and manufacturability, designing for process variations in sub-micron technology, scalability, ease of design interoperability, extensions to the instruction set for media type applications, and design verification [2].
The design must also champion an easy design flow, which requires the right set of simulation and verification tools. The designs reviewed in this paper offer a good window into the challenges of designing the components of the datapath and clock, and into the complications of silicon implementation.

RESEARCH OVERVIEW

I have reviewed four papers in the field of circuits and datapath from the IEEE Journal of Solid-State Circuits. A list of these papers can be found in the references section of this paper. Two papers come from designers of the multicore CELL processor: one focuses on the general circuit design elements, styles and philosophy of the entire CELL processor, while the other focuses on the floating point unit of the synergistic processing elements of the CELL [3, 4]. The third paper comes from Intel and focuses on the integer execution unit of the Pentium 4 designed for a 65nm process [5]. I have grouped these three papers under the topic of ‘Datapath Circuits’ and provided a background section, a section on the features of their design and a critique for each paper. Some of the questions I have posed while reviewing these papers are:

1. Does it present the tradeoffs with respect to power, area and performance?
2. Does it address technology or process issues with sub-micron technology?
3. What are the constraints on the design styles?
4. Do the design choices appear to be scalable?
5. Does it address testability and design for manufacturing?
6. Is the design approach application specific or general purpose?
7. Does it address and distinguish between architectural and circuit design choices?

The final paper comes from researchers at the University of Toronto and is a tutorial and an extensive survey of CAM architectures [6]. This paper is analyzed separately under the ‘High Speed Memory’ section with a background, a section on the features of CAM memory and a critique. Some questions I have posed when reviewing this paper are:

1. Does it address power efficiency?
2. Does it address technology and process implementation and scaling issues?
3. Does it present all the tradeoffs in the competing architectures?
4. Does it address any specialized circuit topologies in addition to static CMOS?
5. Does it provide real silicon experimental data?
6. Does it provide adequate information on design for test and manufacturing?

DATAPATH CIRCUITS

There are various distinct circuit elements that make up a typical datapath. Each circuit element must be designed and implemented with power efficiency, scalability and robustness in mind. The latches and flip-flops are the basic elements of pipelined flow and registers. These require an overarching design cycle that leaves sufficient room for macro-level optimization and tradeoffs as well. This calls for interesting dynamic and static circuit topologies. The SRAM and array circuitry that make up the various levels of cache and register files also require careful consideration during design. A robust and balanced clocking scheme is required to drive the various circuit topologies as well.

The performance and power requirements of the execution units of the datapath depend on the strengths of the underlying circuit elements and topologies; however, the decisions and tradeoffs in the architectural design are equally important. Architects can take an application specific approach based on the most likely format of the data to be processed, or a more general approach. The execution units are also usually the hot-spots of a chip and limit its overall active power and frequency.

The first set of papers deals with the circuit design and datapaths of the CELL. The CELL was developed jointly by the Sony, Toshiba and IBM (STI) alliance starting in 2001 [7]. The CELL Broadband Engine combines a general-purpose POWER architecture based core with powerful co-processing elements that can perform multimedia and vector processing.
The architectural design and first implementation were carried out jointly by the STI alliance on 90nm technology, followed by a 65nm implementation [7]. The CELL was designed to be a multi-core powerhouse that combines the capabilities of a general purpose CPU and a GPU on one die. The design emphasizes power efficiency, bandwidth and complex code execution to allow peak throughput computation [8]. The CELL consists of nine processor cores: a POWER based main processor (Power Processing Element, PPE) and eight coprocessors (Synergistic Processing Elements, SPEs) connected through a circular bus called the Element Interconnect Bus (EIB) [8]. The PPE runs the OS and controls the SPEs. Various innovative circuit elements in the datapath, clocking systems and arrays of the CELL have been designed to support power efficiency, high bandwidth of code execution and scalability, and to meet technology challenges. These innovative circuit elements are the subject of the first paper.

The SPE is designed for vectorized floating point calculation of the kind found in media and graphics applications [8]. As a result, the datapath of the SPE has been architected to support the stream of Single Instruction Multiple Data (SIMD) type instructions seen in these applications [8]. The second paper details the floating point execution unit that supports this type of instruction execution.

The Intel paper details the integer execution unit of the 65nm Pentium 4 microprocessor. Pentium is the brand name for x86, and now x86-64, based single core CISC processors made by Intel. The Pentium 4 is built on the NetBurst architecture, which features a deeper instruction pipeline than previous Pentium versions so that it can scale to very high frequencies, and which brought about obvious power limitations [9]. It also featured x86 multimedia extensions and support for virtualization. The Pentium 4 went through numerous core designs and various technology iterations.
The 65nm Pentium 4, released in 2006 and based on the 90nm ‘Prescott’ core, was the end of the line for the high power Pentium 4 architecture [10]. The 65nm implementation essentially strove to bring a degree of power efficiency to the Prescott core and demanded some innovative circuit design techniques. The integer execution unit for the 65nm Pentium 4 is a good example of the circuit redesign involved in moving the datapaths of an older technology into a new technology, and is detailed in the third paper.

CELL circuit design [3]

This paper focuses on the circuit design techniques and design verification methodology that went into designing the circuit components of the CELL processor: the PPE, the EIB, units for thermal and power management, test, debug and the functional units of the SPE. The goal for the designers was to integrate high performance components without violating any thermal or package constraints in 90nm SOI technology. This required a simple yet robust design style as well as dynamic and highly customized circuits for maximum performance, yield and speed. The challenge was to address these tradeoffs and to pick the right implementation for the technology.

Background

The CELL architecture was designed to exploit the streaming vectorized loads and thread level parallelism seen in media type application software, where the PPE runs the OS and coordinates the flow of data and threads through the SPEs. The CELL has its own custom instruction set architecture with some elements of VLIW computing, and is based on the POWER architecture with additional support for its multi-core execution. The CELL is a push by its inventors to create a processing powerhouse designed for the media and digital entertainment consumer market. At the same time, the CELL also features powerful SIMD, vectorized co-processors that are adept at floating point calculations and suitable for supercomputing type needs.
Currently, the most visible implementation of the CELL processor is in the Sony PlayStation 3 game console. There could also be applications for CELL based systems in the embedded or blade server market, for example in medical imaging. The competitors for such a product would be multi-core offerings from IBM such as the Xenon, which also features a SIMD like implementation. The x86 offerings from Intel and AMD that penetrate every level of the microprocessor market are also competitors.

The main constraint of the chip is the actual code development needed to extract the high throughput potential of the CELL. As a result, the success of the chip requires strong adoption by the software developers who write applications for it. The architecture also makes the CELL cost effective only in a segment of the market dominated by media application software, and therefore impractical for consumer PCs or desktop computers. The dependence of the SPEs on the PPE is another constraint, as yield can depend solely on PPE functionality.

Features and Technology

The circuit design of the CELL features an aggressive latch, flip-flop, clock generation and array design methodology along with robust electrical verification to enable 4GHz operation in hardware. The design style ranges from simple static CMOS to pulsed, dynamic and domino circuits for speed critical paths. One of the many design challenges was to design local base clock generators (LBC) to drive the master-slave flip-flops. A single phase global clock grid drives numerous LBCs, which provide gain for local clock nets and functionality for test and local clock gating. The constraints were to minimize latency, power and area. The LBC was designed to drive a 64-bit register through a three stage buffer.
The LBC drives a launch clock (lclk) with three fan-out-of-four (FO4) delays from the base clock, as well as a data capture clock (dclk) with a longer delay to provide clock overlap at the cycle boundary and minimize races. Clock waveform integrity was ensured by a post layout electrical design rule checker. The LBCs are also designed to act as clock gates through test and hold signals. They also support scan clocks for test, since scan signals are distributed throughout the chip using simple flip-flops. The test and hold signals can also define regions of half-frequency operation, which removes the need for a separate low frequency clock.

In addition to basic transmission gate flip-flops, designers made use of a variety of special purpose flip-flops and latches to meet power and frequency targets. One important design was a high speed multiplexer-latch with nine inputs that accepts and drives static signals. The multiplexer (MUX) is a dynamic NOR followed by a set-reset latch. It is driven by an LBC through a delay chain that forces the dynamic gate inputs after a fixed time interval to minimize hold time. One branch of the MUX is dedicated to fast-scan testing. The addition of a scan port was found to have a minor impact on area and delay for the latches. The area, power, hold time, latency and delay tradeoffs for four different types of latches/flip-flops were characterized and included in the design library, so that designers could choose among them effectively. Pulsed latches had a power advantage over flip-flops due to a reduction in clock power, but flip-flops were best for hold time considering process, voltage and temperature variations. Longer hold times and tougher implementation rules for the pulsed clock limited the usage of pulsed latches.

To maximize design re-use, the circuit design flow was partitioned hierarchically. There was also re-use within the block or macro. Macros scale from a few transistors to a few thousand transistors and were designed in three different styles: fully automated, semi-custom and full custom.
The main control logic was designed using fully automated techniques due to its regular structure and constant re-work. The random logic macros (RLM) of the control logic were partitioned into blocks of a few thousand gates using standard cells. Libraries made use of multi-Vt cells. All timing and electrical analysis was carried out at transistor level for all RLMs. A lot of effort went into the synthesis algorithms for the flip-flops and the clock buffers as well.

Some macros were built using a semi-custom flow that allowed quick tuning iterations. The designers constructed a schematic using basic gates from a standard cell library, which was then optimized by tuning device widths with an in-house tuner, without adding buffers or logic. The 24-bit adder was constructed using this methodology, which reduced its path delay by 30% and its area by 25%.

A full custom design methodology was used for specialized circuitry where pockets of domino logic had to be inserted, surrounded by static logic, to provide speedup with a minimal increase in power consumption. This was used to create the footer-less domino circuits that produce a fast carry input to the CAM, speeding it up by 20%.

The SPE circuit design received special attention, as the SPEs are the limiters of performance and power for the CELL. The design philosophy was to use static CMOS as much as possible, with dynamic circuits used in only the most area, power and timing critical paths. All dynamic circuits were self-contained in a macro with static gating. Some examples of macros using dynamic circuits are the multi-port register files, data forwarding macros, the floating point unit MUX and the dynamic programmable logic arrays (DLPA). The general purpose register files (GPR) were designed to operate in three cycles: one cycle for address pre-decoding, one cycle for final decoding and array access, and the last cycle for dataflow distribution. The GPR was designed to support eight read/write operations in a cycle.
The design uses a two-stage domino read and a static write. The wire spacing and width were determined through SPICE simulations and implemented using custom developed routing tools to optimize signal integrity and distribution delays. The DLPAs were generated using a custom designed program for schematics and layout. They were implemented using dynamic footed NOR gates followed by a strobe circuit that also incorporates scan testing. The DLPAs are also used for clock gate control signal generation.

The dependence checking and data forwarding of the SPE are carried out by the dependence check macro (DCM), the forwarding macro (FM) and several DLPAs. The DCM compares a new instruction with the instructions in the pipeline, and the DLPA is used to determine final dependencies. Based on these results, the data in the FM is forwarded to the right dynamic MUX-latch. The DCM is highly customized and was implemented using static and transmission gates. Each slice of the FM consists of sixteen 32-bit registers and a 16-way MUX implemented with dynamic eight-way NOR gates followed by a latch. Special attention was paid to the physical implementation to avoid crosstalk in the MUX.

The goal of the SRAM array design was to support minimal cycle time, power and area for maximum yield, scalability and robustness across multiple manufacturing lines. The CELL contains a 512KB L2 cache and eight 256KB local stores (LS) in the SPEs, for a total of 2MB of local store (2.5MB including the L2). The arrays of the CELL are assigned to four functional groups: SPE, PPE, L2 and support. The SPE and PPE arrays work on the core clock, while the L2 and support arrays work at half-frequency. The arrays are pipelined to support high frequency, with one cycle of latency between wordline select and data out. Read operations are single ended and require the bitline to be pre-charged high. The 6T SRAM cell was designed to minimize wire lengths and gate alignment.
The LS bitcell uses a 0.99 square micron cell with 66 cells per bitline and a high-Vt, noise tolerant sense-amp read scheme, while the remaining arrays use a 1.06 square micron cell with a ripple domino sense write and 16 cells per bitline to reduce stress during reads. Array functionality over all process, clock variability, voltage and temperature corners had to be ensured through device level corner analysis, with special attention paid to timing and stability. Heavy statistical analysis and electrical verification were used to ensure cell operation with margins for writability, stability, yield, timing and reliability. The designers strove for minimum area and uniform printability, with added redundancy in the word and bit directions to address defects and tail-end distribution writability and stability fails. A scan based memory BIST, which can test multiple arrays at once, was implemented as well.

To ensure design and electrical integrity, a rigorous system of design checks was implemented alongside the design analysis methodology. All digital macros had to pass a unified set of electrical and topological checks that focus on clock integrity, latch and flip-flop usage, dynamic circuit usage, and the use of transmission and static gates. All designs also passed EM and IR drop checks to ensure a robust power supply. Physical design checks were also adopted to improve yield without impacting area and to ensure easy integration of the macros. Transistor level timing, noise and power analysis was performed using identical flows that comprehend SOI effects such as the floating body. All timing checks were done consistently at macro level and transistor level.

Critique

The paper does a great job of covering all the design styles and circuit elements that went into the CELL microprocessor. The designers adopted a robust verification and electrical check methodology to ensure a sound design across all electrical parameters.
There was good information on the various types of latch/flip-flop design as well as the pros and cons of each. The tradeoffs made in the design were apparent. For example, the designers chose to minimize area in the caches at the cost of higher BIST redundancy and testability. It wasn’t clear what debug and test features were part of the BIST engine, but it should be strong enough to account for SOI hysteresis effects on cache cells and read circuitry. There was little information on architectural gating and the ability to switch off cores. It wasn’t clear if the CELL supports any type of gating of the SPE cores to combat defect and yield issues.

Another tradeoff was the need to use dynamic and domino circuits to speed up critical paths, usually at a minor cost in power, wiring and additional design verification. The design uses a mixture of domino and static logic; the decision to encapsulate domino logic with static buffers at the macro boundaries is a good practice for ensuring timing and signal integrity. The design of the local clock generator is also a good choice, as it ensures a robust timing scheme and scalability. The local clock generators are packed with test, debug and gating features as well as support for domino circuitry.

From a silicon implementation point of view, it wasn’t clear how the post-layout and electrical checking comprehends multi-Vt transistors or multi-voltage supplies. The paper could also have used some information on the circuit topologies of analog components like the PLL. It is likely that the use of more aggressive and dynamic circuit design styles complicated and increased the design checks, which may have strained design resources; but it exemplifies the importance of a robust design as process variations in technology rise. Overall, this is an informative paper on the latest design styles for a sub-micron SOI technology design implementation.

SPE CELL floating point unit design [4]

The focus of this paper is the architecture and circuit design of the pipelined floating point unit (FPU) of the SPE of the CELL. The SPE is designed to accelerate the processing of real-time media and data streaming applications. As a result, the SPE FPU is designed for single precision floating point SIMD instructions such as multiply-adds. The design goals were to use the right circuit components and topologies detailed in the previous paper and to architect an efficient pipelined execution unit that meets all the delay and power consumption specifications. The paper goes into detail on the design challenges and the building blocks of the FPU.

Background

The SPE of the CELL is the workhorse of the system and performs the floating point calculations required in media applications. As a result, it is architected to sustain a high bandwidth of streaming data and therefore requires wide and fast execution units. This places a burden on the designers to create specialized custom circuits with minimal wiring and clocking overhead. Since the SPE has been tuned to execute floating point instructions, it might not show best-in-class performance for more general purpose workloads. The design is also dependent on the PPE and the code base to keep the hungry cores of the SPEs fed in order to maximize performance for power. The applications, customers, competitors and constraints of the CELL architecture have already been mentioned in the previous background section.

Features and Technology

The SPE contains 128 registers, each 128 bits wide. The FPU sources these registers to perform very fast single and double precision arithmetic. A fixed-point unit also carries out 32-bit integer arithmetic and logical operations. The single precision unit is a four-way SIMD design that supports vector computing in the style of media extensions such as SSE, MMX and VMX.
The FPU is implemented in 90nm SOI technology with 769K transistors to meet a 5.6GHz, 1.4V specification that delivers 44.8 GFLOPS of performance, and it burns 1.4W at 4GHz. The designers have made an effort to optimize the architecture for target applications, co-design logic circuits with integration, and carefully balance pipeline stages for minimum delay and maximum throughput. The SPE instructions process 128-bit operands divided into four 32-bit word slices. Each 32-bit slice supports 32-bit single precision instructions, 16-bit integer multiply-add instructions and conversion between the two formats. Operands are fetched from the register file (RF) into the operand latches of the FPU, and the results of the FPU can be sent back to the operand latches of the FPU or to the forwarding unit (FW), from where they can be distributed to other units like the LS or the fixed-point unit.

The designers laid out the main design challenges for the FPU in the paper. The FPU implementation had to meet an 11 FO4 stage delay to balance performance and power. The latency of the FPU was designed to be six cycles for single precision instructions. This required optimization at all design layers: architecture, logic, circuits and layout. Most of the logic was done in static CMOS gates, with dynamic gates used only in timing critical areas.

To reduce latch insertion delays, three different types of latches (Type C, D and E) were designed, simulated and characterized with multiple levels of driver sizes and local clock buffers. The Type C latch uses a 9:1 multiplexer which includes a scan input, supports static inputs and outputs and uses dynamic circuits. The Type D latch is a transmission gate flip-flop that also includes scan inputs. The Type E latch is a static pulsed latch that reduces insertion delay by replacing inverters with NAND2 logic, and offers latch functionality and time borrowing capabilities.
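
The 44.8 GFLOPS figure quoted above can be sanity-checked from the four 32-bit slices and the clock frequency. The following is a minimal sketch, assuming that one multiply-add issues per slice per cycle and that each multiply-add is counted as two floating point operations; the function and constant names are illustrative and do not come from the paper.

```python
# Back-of-the-envelope peak throughput for a 4-way SIMD multiply-add unit.
SIMD_LANES = 4      # four 32-bit slices per 128-bit operand (from the text)
FLOPS_PER_FMA = 2   # assumption: a multiply-add counts as two operations


def peak_gflops(clock_ghz: float, lanes: int = SIMD_LANES,
                flops_per_op: int = FLOPS_PER_FMA) -> float:
    """Peak GFLOPS, assuming one multiply-add per lane completes every cycle."""
    return clock_ghz * lanes * flops_per_op


if __name__ == "__main__":
    for ghz in (4.0, 5.6):
        print(f"{ghz:.1f} GHz -> {peak_gflops(ghz):.1f} GFLOPS")
```

Under these assumptions, 5.6GHz x 4 lanes x 2 operations gives 44.8 GFLOPS, which matches the quoted specification, and the same unit at 4GHz would peak at 32 GFLOPS.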

The FPU width was required to be aligned to the data flow stack of the SPE, which is 46 bits wide and consists of 32-bit data and a 15-bit clock bay area which occupies the center area of two adjacent slices. The 46-bit FPU data flow is split between a 9-bit exponent and a 37-bit fraction, with most fraction macros folded to reduce their width. The FPU had to be placed such that the main high frequency clock grid covers it. The LBCs of each macro are located to the side of the latch bit cells. The aligner was placed between the adder and the multiplier.

To optimize for the target media applications, i.e. single precision multiply-adds, the architects deviated from the IEEE floating point standard by supporting only truncation rounding and non-trapping exception handling, and by not supporting the representation of infinity or NaN. However, they did add software support for the missing IEEE single-precision features.

The designers also went into detail about the main building blocks of the FPU: formatter, multiplier, aligner, fraction adder, leading zero anticipator (LZA), normalizer and result multiplexer. The formatter pre-processes the operands of integer and convert instructions, which go through the FPU with an extra cycle penalty. The formatter also extends the floating-point mantissa with a zero sign bit, and integer operands with a 2-bit sign, to perform the multiplication.

The multiplier is a 25-bit circuit that uses radix-4 Booth encoding to cut down the number of partial products. It supports 2s complement multiplication and uses hot-1 encoding to deal with sign bit extension. The multiplier makes use of static adders, which consist of transmission gate XOR and AOI logic, to deal with partial products. The static implementation reduces the wiring associated with timing and allows more optimization. The multiplier is a two cycle design with a total block delay of 22 FO4. The staging latches are Type E latches, which allow time borrowing.
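
To illustrate why radix-4 Booth encoding cuts down the number of partial products, here is a small behavioral sketch. It is not the circuit from the paper: it models only the recoding arithmetic, assumes an even operand width (an odd width such as 25 bits would first be sign-extended by one bit), and the function names are hypothetical.

```python
def booth_radix4_digits(multiplier: int, bits: int) -> list[int]:
    """Recode a two's-complement multiplier into radix-4 Booth digits.

    Each digit is in {-2, -1, 0, 1, 2} and digit i has weight 4**i, so an
    n-bit multiplier yields n/2 partial products instead of n.
    """
    if bits % 2:
        raise ValueError("bits must be even; sign-extend odd widths first")
    m = multiplier & ((1 << bits) - 1)   # two's-complement bit pattern
    digits = []
    prev = 0                             # implicit 0 to the right of bit 0
    for i in range(0, bits, 2):
        b0 = (m >> i) & 1
        b1 = (m >> (i + 1)) & 1
        digits.append(b0 + prev - 2 * b1)
        prev = b1
    return digits


def booth_multiply(a: int, b: int, bits: int) -> int:
    """Multiply a by b by summing one shifted partial product per Booth digit."""
    return sum((d * a) << (2 * i)
               for i, d in enumerate(booth_radix4_digits(b, bits)))


if __name__ == "__main__":
    print(booth_radix4_digits(-3, 8))   # four digits for an 8-bit multiplier
    print(booth_multiply(7, -3, 8))
```

Each partial product is 0, ±a or ±2a, all of which are cheap to form in hardware (a shift and an optional negation), which is the usual motivation for this encoding.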

Due to the 37-bit width restriction, the multiplier tree had to be compressed and partial product locations had to be shifted.

The aligner shifts the addend based on exponent differences to align it with the product. The aligner has two critical paths, both of which start with the selection of the exponents. The first critical path computes the shift amount and decodes it into select signals which control the 4:1 MUX that performs the actual shifting. The 4:1 MUX consists of four-input transmission gate multiplexers. To reduce this delay, the aligner uses a sum-addressed scheme which performs adding and decoding in parallel. The second critical path occurs when the aligner performs shift amount saturation, checking the shift amount for overflow and underflow to control the last stage of the shift.

The fraction adder is a sign magnitude adder which receives the product from the multiplier in carry-save format and the aligned addend from the aligner. The fraction adder consists of an incrementer, an adder and sticky logic. The adder is a Kogge-Stone parallel prefix adder with a binary carry look-ahead structure.

The LZA is a pipelined structure that determines the number of leading zeros in the fraction adder result. The LZA behavior depends on whether it receives the result from the adder or from the incrementer of the fraction adder. In the first case, the LZA produces propagate, generate and kill signals for each bit position to detect an edge vector, which determines the number of leading zeros. The normalizer performs the normalization shift of the output from the LZA in four stages. The fraction/exponent rounder adjusts exponents and detects adjustments. It makes use of a dynamic MUX-latch and Type C latches.

To reduce active power in the SPE, designers make extensive use of clock gating. All registers have a clock enable at the LBC, and only pipeline stages with valid instructions are activated by the controller.
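
The effect of activating only the pipeline stages that hold valid instructions can be shown with a small behavioral model. This is a sketch under stated assumptions rather than the paper's controller: it assumes a six-stage pipeline (matching the six cycle single precision latency mentioned earlier), one valid bit per issued instruction, and hypothetical function names.

```python
def stage_clock_enables(valid_in: list[bool], depth: int = 6) -> list[list[bool]]:
    """Return, per cycle, which pipeline stage registers receive a clock.

    valid_in[t] says whether a valid instruction enters the pipeline in
    cycle t. Stage s is clocked in cycle t only if the instruction issued
    in cycle t - s was valid, so bubbles never toggle a stage register.
    """
    cycles = len(valid_in) + depth - 1
    enables = []
    for t in range(cycles):
        row = []
        for s in range(depth):
            issue = t - s
            row.append(0 <= issue < len(valid_in) and valid_in[issue])
        enables.append(row)
    return enables


def clocked_fraction(enables: list[list[bool]]) -> float:
    """Fraction of stage-cycles clocked, relative to a free-running clock."""
    total = sum(len(row) for row in enables)
    return sum(sum(row) for row in enables) / total


if __name__ == "__main__":
    enables = stage_clock_enables([True, False, True])
    print(clocked_fraction(enables))
```

For the issue pattern valid, bubble, valid, only 12 of the 48 stage-cycles in this model are clocked, which is the kind of saving that stage-level enables are meant to capture.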

Circuit blocks are clocked based on instruction type and operand values. For example, integer instructions leave the LZA and normalizer idle, while an add instruction bypasses the multiplier. The control logic that generates these signals is built from the standard cell library and is fully automated. The design also makes use of a 32-bit bypass bus in the FPU to minimize the delay of instructions that don’t require the multiplier or the aligner.

Critique

This paper presents a very detailed look into the architecture of the FPU. The designers presented all the constraints on the architecture of the FPU effectively to illuminate the tradeoffs in their choices. The tradeoffs were quite obvious: the designers were striving for maximum throughput at minimal area and power. One of the stand-out features of the FPU architecture is the narrow datapaths of the multiplier and aligner. Although there is a minor overhead in folding and compressing the operands, the design aligns to the SPE data flow width, which helps throughput.

The paper mainly focuses on the architectural choices of the FPU, with a minimal look at the underlying circuit topologies and even less information on silicon implementation. Some information on any SOI related issues with the FPU implementation would also have been interesting. The paper presents good information on the critical paths of each block in the FPU, but could have offered more information on how multi-Vt cells were used, if at all, or on other ways to speed up the critical paths. It would have been interesting to learn more about the architectural design choices for the register files and forwarding mechanisms as well, and how they interact with the execution units. The paper mentions deviations from IEEE standards, but offers no information on any performance hit from the software routines that implement the missing features. Would including these missing features in hardware have been as costly?
The design approach seems to meet all the specifications of the FPU; however, it isn’t clear if the solutions will be scalable to deeper sub-micron technologies or to more cores on a die. Overall, this is a good paper on the architecture of floating point execution units for media applications, but it could have used some more circuit and transistor level optimization information.

65nm Pentium 4 integer unit [5]

The Pentium 4 integer execution unit was designed as a very high speed segmented 64-bit ALU to enable 9GHz operation while lowering active and static power. The main challenges came from re-designing the original low voltage swing integer execution unit in 90nm technology for operation at 65nm with a 2x frequency fast clock (fclk). The designers go into detail on the domino and static circuit topologies as well as the use of tools and methodologies to lower design complexity and reduce development cost and time. The designers claim a 42% reduction in normalized static power.

Background

The 65nm Pentium 4 chip was the final revision of the Pentium 4 and was meant to be a technology shrink to 65nm with minimal additional features. The 64-bit 65nm Pentium 4 works in single core and dual core (two die in a single package) configurations with a 2MB L2. The Pentium 4 core was originally designed to scale to extremely high frequencies but hit a power ceiling due to technology scaling. This is most likely the reason for the 9GHz design specification on functional units like the integer execution unit. Intel went into production with the 65nm Pentium 4 under the 6xx series name [10]. The constraints on this chip were obviously its very poor thermal and power ratings compared to the K8 based Athlon branded x86 architectures from AMD. The 65nm part did not appear to offer any improvements over the Prescott based 90nm Pentium 4 core either [10].
The 65nm Pentium 4 was eventually superseded by the Pentium D and Intel Core branded processors, which favored more energy efficient multi-core architectures over raw clock speed. The Pentium 4 was designed to fit into the consumer and media system solutions market in addition to the traditional desktop and low end server markets that can exploit its virtualization capabilities.

Features and Technology

This iteration of the Pentium 4 was designed for a 65nm strained silicon CMOS technology which offers a 15% increase in drive current. It uses a NiSi layer on the gates and drain to lower capacitance, 1.2nm gate oxide, 35nm gate length transistors and eight layers of copper interconnect with low-k dielectric. With this technology scaling in mind, the integer execution unit of the Pentium 4 was redesigned to use a 2x frequency fast clock to enable single cycle latency on critical bypass loops, domino circuitry to replace the low voltage swing technology used in 90nm, and new architectures for the ALU. The integer register file (IRF) and the address generation unit (AGU), designed for 9GHz operation at 1.3V, also reduce power relative to the 90nm integer execution unit. The designers provide excellent details on the integer unit and the 2x clocking scheme. The AGU, ALU, IRF, bypass cache, write-back buses, flag logic and single cycle latency operations (adder, shifter, rotator and logic operator) of the integer execution unit operate on the 2x frequency boundary. Longer latency operations like multiply are implemented outside the integer execution unit on the main processor clock boundary. The integer unit also includes a fast store forwarding buffer (FSFB) to reduce store to load latency. The 64bit datapath is implemented as two discrete 32bit paths to speed up the 32bit ALU operations. Write-back buses provide source operand data for the ALU and AGU from the register file, data cache and FSFB.
The write-back buses, which are outputs of the ALU/AGU, also write results back to the register file. The IRF can sustain 12 reads and 6 writes per processor clock cycle, the AGU can perform one load and store address and 32bit ALU per processor cycle, and the 32bit ALU loop latency is also one processor clock cycle. The ALU and the AGU are implemented with full-swing two phase 2x frequency domino circuitry to maintain signal integrity. However, time borrowing, races, misaligned inputs and clock duty variation shrink the effective pulse width for domino evaluate and pre-charge and complicate domino circuit design. The failures caused by incomplete reset/pre-charge and pulse evaporation are called signal triangulation failures. The two phase 2x domino scheme has been implemented to minimize the risk of these failures in several ways. Set dominant latches (SDL) are used strategically to convert half clock period width domino signals to full clock period width. N-skewed CMOS gates are used to stretch the domino evaluate window at the cost of noise sensitivity, as opposed to a mechanism that uses a single PMOS for pre-charge and a complex NMOS tree for evaluate. The effective pulse width for each transition of a node is thoroughly characterized during verification simulation to ensure that triangulation failures do not limit the frequency of the integer unit. The designers, however, allowed some power race violations, since clock edge adjustments for the 2x clock would have caused triangulation failures. The 2x frequency clock is designed as a single phase clock generated by a local pulse generator to minimize clock uncertainty overhead. The pulse generator is dual edge triggered to produce two fclk pulses in one processor clock cycle. The high phase of the fclk is independent of the processor clock by virtue of a self-timed reset loop but can be made stretchable to facilitate speed path debug on the fclk.
In silicon validation, an appropriate setting is chosen to provide the best yield. Variations in the width, Vt and length of the pulse generator transistors were used to simulate the timing uncertainty. The write-back bus is timing critical and required re-design for the 65nm implementation, since interconnect delay does not scale as well as gate delay. In addition to improvements to the write-back buses, and to mitigate issues from duty cycle variation, all receiving low voltage swing (LVS) input multiplexers were replaced with static and domino based multiplexers; dual rail logic in the AGU and L1 data array was converted to single rail to free additional metal resources for the write-back buses. On the other hand, the ALU adder input multiplexer was implemented as a symmetric dual rail system to streamline the high frequency timing interface between the input multiplexer sequence and the dual rail domino adder. The ALU adder input multiplexer is a segmented 5:1 multiplexer which consists of two smaller multiplexer-latches merged through a domino gate. This achieved a denser layout and reduced area overhead and delay. The integer unit consists of two 64bit ALUs, each implemented as a 32bit data block with one fclk latency for communication between the upper and lower units. The ALUs execute add, subtract, logic and rotate operations. The adder is based on a sparse radix-2 carry merge tree that generates every fourth carry. This architecture speeds up the critical path by moving the carry merge logic to a non-critical side path. The adder also reduces propagate-generate fanout by up to 50%, wiring complexity by 80% and delay by 20% compared to a Kogge-Stone implementation. The ALUs require two fclk cycles to execute an ADD/SUB instruction. In the second fclk cycle a pair of 2:1 multiplexers selects the appropriate conditional sum or logic rotator result.
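As a rough illustration of the sparse carry-merge idea, the following Python sketch models an adder that computes a true carry only at every fourth bit position and then picks between two pre-computed conditional sums for each 4bit group. It is a behavioral model under stated assumptions, not the circuit from [5]: the function name `sparse_tree_add`, the group-level doubling loop used for the carry merge and the default 32bit width are all illustrative choices.

```python
def sparse_tree_add(a, b, width=32, group=4, cin=0):
    """Behavioral sketch of a sparse carry-merge adder (hypothetical helper).

    Returns (sum, carry_out) for unsigned operands of `width` bits.
    """
    assert width % group == 0
    mask = (1 << width) - 1
    gmask = (1 << group) - 1
    a &= mask
    b &= mask
    ngroups = width // group

    # Group-level generate/propagate signals, one pair per 4bit group.
    gp = []
    for k in range(ngroups):
        ak = (a >> (k * group)) & gmask
        bk = (b >> (k * group)) & gmask
        gen = ((ak + bk) >> group) & 1         # group creates a carry on its own
        prop = 1 if (ak ^ bk) == gmask else 0  # group passes an incoming carry
        gp.append((gen, prop))

    # Radix-2 carry merge over the groups: log2(ngroups) merge levels.
    prefix = list(gp)
    dist = 1
    while dist < ngroups:
        merged = list(prefix)
        for k in range(dist, ngroups):
            g_hi, p_hi = prefix[k]
            g_lo, p_lo = prefix[k - dist]
            merged[k] = (g_hi | (p_hi & g_lo), p_hi & p_lo)
        prefix = merged
        dist *= 2

    # Only one carry per group is ever computed (every fourth carry).
    carries = [cin] + [g | (p & cin) for g, p in prefix[:-1]]
    g_last, p_last = prefix[-1]
    carry_out = g_last | (p_last & cin)

    # Conditional sums: both results exist up front, the sparse carry selects one.
    total = 0
    for k in range(ngroups):
        ak = (a >> (k * group)) & gmask
        bk = (b >> (k * group)) & gmask
        sum0 = (ak + bk) & gmask
        sum1 = (ak + bk + 1) & gmask
        total |= (sum1 if carries[k] else sum0) << (k * group)
    return total, carry_out
```

The final loop plays the role of the 2:1 selection between conditional sums; in this sketch the short 4bit sums are simply computed with integer addition rather than modeled gate by gate.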
The adder also supports 8bit and 16bit modes of operation by incorporating carry-kill circuits in the carry-merge tree without impacting performance. The designers found that the domino and static gates used in the ALU tree scale better at lower voltages, with a 5% reduction in power but at the cost of 5% more area when compared to a simple shrink from 90nm. The AGU computes linear addresses for cache access from source operands. These operands are merged using a 4:2 compressor followed by a sparse tree completion adder to give the full linear address. The sparse tree is divided into two 16bit segments. The lower 16 bits are implemented in dual rail domino circuits in order to meet the tight latency constraints of the L1 data cache. The ALU shifter/rotator performs 8bit, 16bit and 32bit shift and rotate operations as well as byte swaps. Two 32bit circuits are cascaded to perform 64bit shift left operations. To support 2x frequency operation, the rotate/shift is broken into three stages with reduced fanout. This also lowers the wiring complexity but at the cost of a 20% area penalty. The IRF is a 144 entry array which supports six reads and three writes per fclk. The main goals were to meet speed, bandwidth and area targets. To reduce long wire delays, the designers broke up the global bitlines to reduce RC delay. To minimize pulse evaporation, local bitlines are terminated by SDLs. To meet the wire speed constraints, the write was pipelined; this alleviated the overhead of distributing write data across all bitcells at high frequency. A bypass network is used to detect read/write collisions, which required 18 CAMs for each cycle of overlap. The decoder was carefully balanced using special latches to minimize the area needed for address decode transistors and the wiring that distributes the final decoded address across the entire array. The 90nm decoder scaled well into 65nm technology; there was a 35% smaller area footprint and lower read/write latencies but at a higher power density.
The designers found the integer unit to have the highest power and thermal density on the CPU. This required heavy circuit level power optimization in the aforementioned components of the integer unit. These optimizations included replacing the LVS dual rail logic in the AGU with single rail logic, replacing the LVS carry select adder with a sparse tree adder, replacing the LVS logic execution unit with a single rail static logic execution unit, replacing the LVS AGU adders with a parallel sparse adder and replacing the LVS rotator with a single rail domino rotator design. The entire AGU, ALU, rotator, adder and logic execution unit was implemented with single cycle gating, and the use of high leakage devices was minimized. All these changes resulted in a 3.8W reduction in power which translates to a 200MHz upside for the 65nm processor. At 1.3V and 70 degrees the power consumption was found to be 10.3W.

Critique

With the hindsight of the Pentium 4’s demise in the face of thermal and power challenges, it is a little easier to critique the design choices in this paper. It appears that the integer unit required complex and specialized re-design to fit into a competitive 65nm thermal budget. Most blocks had to be re-designed, which resulted in either an area or a power hit. It was clear that the architecture of the Pentium 4 functional units was not scalable with technology. The main design approach seems to favor replacing LVS circuits with domino circuits to gain performance and combat process variations and noise. The incorporation of dual-rail domino logic suggests additional wiring and area hits to gain additional performance, along with complications in clocking and pre-charge. The paper claims huge power gains for the implementation, but these are measured against a straightforward shrink of the 90nm design into 65nm, which would have been unrealistic. A better metric of comparison would be some kind of relative power consumption of the integer unit compared to the rest of the system for a given technology.
The paper provides good information on all components of the integer execution unit including register files and the 2x clocking scheme. The obvious drawback of having to use a 2x clocking scheme is higher power dissipation. The paper claims that, in spite of a mixed domino/static circuit design style, the complexity of the design flow was reduced; this speaks to the refinement of the design flow, which is important as designs get more complex. The designers explain the architectural tradeoffs involved in designing various components of the unit including the AGU, ALU and shifter/rotator, as well as the challenges of actual circuit implementation. The goals were to lower latency at minimal area and power cost, and the 9GHz specification was reached. The paper could have used more information on the test and debug features that went into the flip-flops and latches used in the design.

HIGH SPEED MEMORY

In addition to the various levels of cached SRAM memory, there are other memory architectures that are part of the datapath and must serve single-cycle, high speed lookup applications. In standard random access memory, an address is supplied to retrieve a data word; certain applications instead require a memory that can search through the entire array and return the storage address for a given word. A common example is the lookup table function of an internet router. Such a content-addressable memory (CAM) architecture is also used in Translation Lookaside Buffers, cache controllers and data compression hardware in addition to network applications [11]. Clearly, the CAM also plays an important role in the datapath of various computer architectures. The design and implementation of the CAM requires careful consideration of the competing aspects of area, density, power and speed. This is the subject of the final paper.
CAM Tutorial [6]

This paper is an extensive tutorial and survey of current CAM architectures and of the challenges and tradeoffs in the design of each component of the CAM. The goal of the authors is to explain the working of the CAM with special emphasis on power saving approaches. The authors provide some good models and metrics for measuring power and delay and discuss the tradeoffs of various architectures. The authors approach the topic of CAM design at two levels: architectural and circuit. The paper also presents some data on CAM efficiency for various architectures in 180nm CMOS technology.

Background

This paper is a general survey of CAM architecture, which means it can apply to any microprocessor design. The most common applications of a CAM might be in network processors that run in internet routers. Networking involves lookup-table matching for packet forwarding, which requires a fast array lookup scheme to read out the output address ports associated with an IP packet. The CAM offers the best solution for lookup and search functions out of any hardware or software approach, so it appears that unless radically new hardware comes along, the CAM architecture will remain ubiquitous. The single-cycle throughput of a CAM makes it very attractive to architects. Another quality that makes a CAM suitable for network processors is its ability to handle priority matching and encoding in hardware itself. The constraints on the design are the area, power and latency concerns that come with scaling the size of the CAM. From a hardware perspective, there are also issues with transistor and wire density as well as technology challenges. Today, the largest single chip CAM implementations are in the 18Mb range.

Features and Technology

A CAM compares input search data against a table of stored data and returns the address of the matching data in a single cycle. The input search-word is broadcast on the search-lines (SL) to the table of stored CAM words.
A CAM word can range from 36 bits to 144 bits, and a typical CAM table can range from a few hundred to 32K entries. Each stored word has a match-line (ML) that indicates a hit or a miss; the MLs are fed into an encoder that generates the binary match location. In case of multiple matches a priority encoder can be used. The output of the encoder is used to read out the correct address from an SRAM. Thus the matching word of a CAM becomes a pointer into an SRAM. The authors develop a model of the basic CAM cell. A CAM bitcell has two basic functions: bit storage and bit comparison. CAM bitcells are arranged horizontally to make up a CAM word, with the ML of each word feeding an ML sense amplifier (MLSA). Each CAM bitcell in a column is attached to a differential SL pair. CAM operation takes place in the following stages, all happening in one clock cycle: first, the search-word is loaded into the data register; then the MLs are pre-charged high; then the search-word is broadcast on the SLs; then each CAM bitcell compares its stored bit with the bit on the SL; then CAM words with at least one mismatch discharge their ML while words with all bits matching keep the ML charged high; and finally the MLSA detects whether an ML is in the match or miss state, and the ML is mapped to an encoded binary address in an SRAM. The authors present two types of CAM bitcells, NOR and NAND, each using the cross coupled inverters of an SRAM cell for storage. They are named for the logical structure that they resemble when arranged in a CAM word. The bit comparison is logically equivalent to an XOR of the stored bit and the search bit. The NOR cell uses four minimum sized transistors to implement a pull down path from the ML in the event of a mismatch. The NAND cell uses three minimum sized transistors, one of which is a pass transistor implementing the XNOR function.
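The search sequence can be sketched in software. The following Python model is a minimal behavioral illustration, assuming a hypothetical `BinaryCAM` class; the method names and the plain list that stands in for the SRAM are assumptions for illustration and are not taken from [6]. Each match-line is treated as staying high only if the XOR of the stored word and the search word is zero, and a priority encoder then selects the lowest matching address.

```python
class BinaryCAM:
    """Toy behavioral model of a binary CAM paired with an SRAM (hypothetical)."""

    def __init__(self, word_bits):
        self.mask = (1 << word_bits) - 1
        self.words = []  # stored CAM words; list index = match address
        self.sram = []   # data word associated with each CAM entry

    def write(self, word, data):
        """Store a word and its associated data; return the entry address."""
        self.words.append(word & self.mask)
        self.sram.append(data)
        return len(self.words) - 1

    def match_lines(self, key):
        """Return one boolean per entry: True means the ML stayed high."""
        key &= self.mask
        # Any set bit in the XOR is a mismatching cell that discharges the ML.
        return [(stored ^ key) == 0 for stored in self.words]

    def search(self, key):
        """Return (address, data) for the highest-priority match, else None."""
        for address, hit in enumerate(self.match_lines(key)):
            if hit:  # priority encoder: lowest address wins
                return address, self.sram[address]
        return None


cam = BinaryCAM(word_bits=36)
cam.write(0xABCDE, "port 3")
cam.write(0x12345, "port 7")
cam.write(0xABCDE, "port 9")  # duplicate entry with lower priority
print(cam.search(0xABCDE))
```

A hardware CAM evaluates all match-lines in parallel within one cycle; the Python loop only reproduces the logical result, not the timing.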
The NOR cell provides full rail voltage swing while the NAND cell provides a reduced logic swing due to the NMOS pass transistor implementation. There are variants of both the NAND and the NOR cells that differ in the number of transistors, which pose tradeoffs in terms of transistor density and voltage swing. It is also possible to implement ternary cells, which can store a third “don’t care” value that represents both ‘0’ and ‘1’ and causes a match regardless of the input bit. Such cells are useful in network routing and priority encoding schemes. To make a ternary cell, a second SRAM cell can be added to the NOR cell, where each stored bit connects to its own independent pull-down path. The authors point out several modifications to the ternary cell using PMOS pull downs and complementary logic for the SL and the ML. PMOS transistors offer a more compact layout which lowers wiring capacitance; however, they are slower. A NAND cell can be made ternary by adding storage for a mask bit which can force a match regardless of bit value. The paper goes into detail about the ML structures and sensing schemes as well. The NOR ML is formed by connecting NOR cells in parallel. A NOR search cycle involves three steps: the SLs are pre-charged low to disconnect the ML from ground, the ML is pre-charged high by turning on an ML pre-charge transistor, and the SLs are driven with the search data to trigger ML evaluation. The slowest discharge path for the ML is through two series transistors to ground, which is still faster than a NAND cell. For this reason NOR based CAMs are more prevalent. A NAND ML is formed by cascading several NAND cells. A PMOS pre-charge transistor pre-charges the ML high and, during evaluation, an NMOS ML evaluation transistor turns on to create a series path (8 to 16 transistors) to ground for a CAM word with matching bits. In case of a mismatch a single NAND cell can break the chain to ground. The MLSA detects the difference between the low and high voltage.
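The ternary behavior, where a masked position matches either input value, can be illustrated with a value and mask pair per entry. The Python sketch below is a toy under stated assumptions: the `ternary_search` function, the 8bit keys and the routing-style table are hypothetical and only show how masked comparison combined with priority encoding behaves. Entries are assumed to be ordered so that the most specific pattern comes first.

```python
def ternary_search(table, key):
    """Return (index, payload) of the first entry matching `key`, else None.

    `table` is a list of (value, care_mask, payload) tuples. A 0 bit in
    care_mask marks a position that matches regardless of the key bit.
    """
    for index, (value, care_mask, payload) in enumerate(table):
        # Only positions with care_mask = 1 can cause a mismatch.
        if ((value ^ key) & care_mask) == 0:
            return index, payload
    return None


# Hypothetical 8bit routing table, most specific pattern first.
routes = [
    (0b10110000, 0b11110000, "port A"),   # matches 1011xxxx
    (0b10100000, 0b11100000, "port B"),   # matches 101xxxxx
    (0b00000000, 0b00000000, "default"),  # matches everything
]

for key in (0b10111111, 0b10101010, 0b01000000):
    print(format(key, "08b"), ternary_search(routes, key))
```

Ordering the table from most to least specific lets the priority selection act as a longest-match lookup, which is the kind of use the routing application suggests.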
An important feature of the NAND ML is that a miss stops signal propagation, so no further power is consumed. The drawbacks are the quadratic delay dependence on the number of NAND cells and the reduced noise margin of the NMOS pass transistors. The NAND ML also has potential for charge sharing across the NAND cells, which may cause an incorrect voltage on the ML; this can be avoided by pre-charging intermediate nodes in the cells, at the cost of increased power consumption. There are several ML sensing schemes with varying tradeoffs in power, delay, control and area that can be used in CAM architectures. The authors propose a simple model to determine the power consumption of various ML sensing schemes for a NOR cell. The ML can be modeled as a capacitor, which lumps the ML wiring capacitance, the diffusion capacitance of the NOR cells, the pre-charge transistors and the MLSA, together with a pull down resistor weighted by the number of mismatched bits. The model assumes that charge sharing is avoided by connecting the CAM storage bits to the top transistors of the pull down path according to figure x in the paper. To control pre-charge and evaluation clocking, a replica ML is generated for worst case timing and is used to generate timing signals. The dynamic power consumption for w miss MLs is given by the following equation, where f is the frequency of search operations:

$$P_{ML} = C_{ML} V_{DD}^2 f w$$ [6]

To reduce power and increase speed, a low swing voltage scheme may be used. This modifies the power equation to

$$P_{ML} = C_{ML} V_{DD} V_{MLswing} f w$$ [6]

The authors reference a paper [12] that attaches a tank capacitor to every ML and uses charge sharing to pre-charge the ML. In case of a mismatch the ML discharges to ground and in case of a hit it remains pre-charged. A current-race scheme removes the SL pre-charge phase, mitigates charge sharing and saves some delay and power. The ML is pre-charged low while the SLs are driven concurrently; evaluation happens by connecting the ML to a current source.
If there is a match, the ML is driven high, while an ML in the miss state stays at a low voltage. The power consumption for this scheme is given by

$$P_{ML} = C_{ML} V_{DD} V_t f w$$ [6]

The authors also point out a selective pre-charge scheme that allocates power to the MLs non-uniformly. The match operation is performed on the first few bits of the word before the remaining bits are activated. The authors claim that this can save 88% of dynamic power in a 144bit CAM word. The two drawbacks are that a non-uniform data distribution might eliminate the overall power savings and that, to maintain speed, the initial match might draw higher power per bit. The selective pre-charge scheme can be implemented using a mixed NAND/NOR ML structure [43]. A pipelined scheme is a selective pre-charge scheme that divides the ML into multiple segments in a pipelined fashion, where a match in one segment enables the search in the following segment. The drawbacks are increased latency, area and power overhead, although hierarchical SLs can save some power. Finally, the authors present a current saving scheme, a data dependent ML sensing scheme that improves on the current-race scheme. This scheme adds a current control block which allocates different amounts of current based on a match or a miss, but it requires the ML to be pre-charged low. Since most evaluations result in a miss, this saves 50% power compared to the current-race scheme. The authors summarize the power and energy numbers for the different ML schemes for a ternary 152 by 72 CAM block in 180nm CMOS technology in the paper. The next topic is SL driving schemes as they apply to three cases: ML pre-charge high, ML pre-charge low and a pipelined ML with hierarchical SLs. The conventional approach applies when the ML is pre-charged high. The SLs are driven by a cascade of inverters, first to their pre-charge level and then to their data value.
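To make the three match-line power expressions quoted from [6] concrete, the following Python sketch evaluates them side by side. All three share the form C_ML × V_DD × V_swing × f × w, where the swing is V_DD for the conventional scheme, V_MLswing for the low swing scheme and V_t for the current-race scheme. Every numeric value below is a hypothetical placeholder chosen for illustration and is not a figure from the paper.

```python
def ml_power(c_ml, v_dd, v_swing, freq, w):
    """Dynamic match-line power in watts: C_ML * V_DD * V_swing * f * w."""
    return c_ml * v_dd * v_swing * freq * w


# Hypothetical operating point (assumed values, not taken from [6]).
C_ML = 100e-15     # match-line capacitance in farads
V_DD = 1.8         # supply voltage in volts
V_ML_SWING = 0.3   # reduced match-line swing in volts
V_T = 0.45         # transistor threshold voltage in volts
F = 100e6          # search frequency in hertz
W = 1024           # number of match-lines that swing per search

full = ml_power(C_ML, V_DD, V_DD, F, W)        # conventional full swing
low = ml_power(C_ML, V_DD, V_ML_SWING, F, W)   # low swing scheme
race = ml_power(C_ML, V_DD, V_T, F, W)         # current-race scheme

for name, power in (("full swing", full), ("low swing", low), ("current race", race)):
    print(f"{name:>12}: {power * 1e3:7.3f} mW ({power / full:.0%} of full swing)")
```

Because the three expressions differ only in the swing term, the relative saving of each scheme in this model is simply the ratio of its swing voltage to V_DD.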
The power consumption due to the SLs is given by the following equation, where $C_{SL}$ denotes the capacitance of an SL and n is the total number of SL pairs:

$$P_{SL} = n C_{SL} V_{DD}^2 f$$ [6]

Power consumption of the drivers can add an additional 25%. If the SL pre-charge is eliminated, as in the current-race scheme, this can secure an additional 50% power reduction. The hierarchical SL scheme, built on top of the pipelined ML scheme, removes the need to drive every SL. Since only the global SLs are active, the local SLs might be turned off most of the time. However, this adds implementation complexity and lowers noise immunity. The power consumption for hierarchical SLs is given by the following equation, where $\alpha$ is the activity rate of the local SLs:

$$P_{SL} = (C_{GSL} V_{DD}^2 + \alpha C_{LSL} V_{DD}^2) f$$

For this to save power, the power dissipated by the global SLs must be sufficiently smaller than that of the local SLs, which is the case if the wiring capacitance is small compared to the transistor capacitance. A small-swing scheme on the global SLs can further reduce power but requires an amplifier to convert the signals to full swing for the local SLs. The authors summarize the power and energy numbers for the different SL schemes for a ternary 1024 by 144 CAM block in 180nm CMOS technology in the paper. Finally, the authors look at architectural techniques to lower power consumption. Three architectures have been proposed: bank selection, pre-computation and dense encoding. The bank selection technique uses an architecture where only a subset of the CAM is active on a given cycle. This saves area and power, as the CAM is partitioned by virtue of two or more extra data select bits. The comparison circuitry can be shared as well. Total power savings amount to 75%; however, the major drawbacks are the additional circuitry to invoke multiple banks, the problem of bank overflow and the complexity of the algorithms that partition the banks and balance the data. The pre-computation approach applies to binary CAM.
In this method, some extra information bits in a search word are used for an initial search, thereby saving the power of a full search each time. This also saves area by simplifying the comparison circuitry for the second search. The third approach involves encoding or mapping the stored data in a ternary CAM to reduce the size of the CAM required for an application. Reducing the number of entries reduces power consumption. The authors refer to some papers [13, 14] which propose mapping algorithms that make use of unused ternary states to store additional combinations of matched words. However, this requires changes to the ML architecture. The authors conclude their paper by noting that CAM power consumption is dominated by dynamic power and that there is opportunity for research here. Some of the guidelines to keep in mind when implementing CAMs are to minimize transistor and wire capacitance, reduce the voltage swing on the ML and reduce the supply voltage. Reducing peak power consumption is also an interesting challenge for future CAM design. New memory technologies like ZRAM and MRAM will also pose challenges when architecting CAMs.

Critique

This paper is probably one of the best available tutorials on memory architectures. It provides excellent information on various CAM architectures with special emphasis on the tradeoffs between them. The authors cover design styles and tradeoffs for every component of the CAM at both the circuit and the architectural level. It gives designers the right knowledge to pick the best implementation for their designs. However, the authors leave out some potentially interesting information on the associated SRAM that goes with a CAM in a lookup table scheme. There might be some research opportunities in implementing the CAM and SRAM bitcells together to save on area, wiring and peripheral circuitry.
The authors chose not to focus on the actual reading and writing of the CAM cells for the sake of simplicity, but they could have devoted a small section to the circuit design challenges, especially for writing into CAM cells. The authors could have also addressed future challenges with scaling the size of the CAM with respect to sub-micron technology issues. Among the most interesting architectural ideas were the partitioning and data encoding algorithms. There might be opportunity for research in algorithms that favor application specific dynamic partitioning. It would also be interesting to see how multi-voltage supplies and dynamic circuits can be incorporated in the CAM architectures to further improve performance. I believe there is some room for improvement in the working of the worst-case replica ML as well. The replica ML governs timing based on worst case match or miss conditions; this might be wasteful, as a sizable number of searches most likely do not require such worst case margins. Perhaps some form of adjustable ML replica scheme that is aware of the application and of the running average match and miss times on the ML can be used to better tune CAM timing. Overall, I think this paper does a great job of highlighting opportunities for future research in CAM architectures in addition to providing a very complete introduction to CAM.

CONCLUSION

In conclusion, the papers on circuits and datapath were probably the most detailed and technical of all the topics. The papers stress the importance of architecture in addition to circuit level design and optimization for the datapaths and the high speed memories. A good architecture must be scalable and must remain competitive in performance and power for several generations of technology. A poor architecture from a power, performance or area perspective cannot offer much optimism for improvement through technology process or circuit improvements alone.
In addition to architecture, careful design at the logical, circuit and layout level is also important. Being able to identify and learn more about the target applications can also help architects from the very beginning of a design cycle. As more dynamic and domino circuit topologies find their way into static CMOS designs, the issue of local clock generation to support these topologies becomes important as well. Special care must be taken to maintain electrical integrity, including timing, at both the local and global scale of a circuit for all circuit topologies. Sub-micron technology poses well known challenges with variation and higher leakage, which must be accounted for by both architects and circuit designers. Features for test, debug and yield, in addition to the design verification, synthesis and simulation tools, are equally important. New, innovative, extensible and specialized datapath architectures that address the current technology and performance efficiency challenges will surely be interesting research topics to watch.

REFERENCES

[1] C. Hamacher et al, Computer Organization, 5th ed. New York: McGraw-Hill, 2002.
[2] N. Weste et al, CMOS VLSI Design: a circuits and systems perspective, 3rd ed. New York: Addison-Wesley, 2005.
[3] J. Warnock et al, “Circuit Design Techniques for a First-Generation Cell Broadband Engine Processor,” in Solid-State Circuits, IEEE Journal of, Volume 41, Issue 8, Aug. 2006 Page(s):1692 – 1706.
[4] H. Oh et al, “A Fully Pipelined Single-Precision Floating-Point Unit in the Synergistic Processor Element of a CELL Processor,” in Solid-State Circuits, IEEE Journal of, Volume 41, Issue 4, Apr. 2006 Page(s):759 – 771.
[5] S. Wijeratne et al, “A 9-GHz 65-nm Intel Pentium 4 Processor Integer Execution Unit,” in Solid-State Circuits, IEEE Journal of, Volume 42, Issue 1, Jan. 2007 Page(s):26 – 37.
[6] K. Pagiamtzis et al, “Content-Addressable Memory (CAM) Circuits and Architectures: A Tutorial and Survey,” in Solid-State Circuits, IEEE Journal of, Volume 41, Issue 3, Mar. 2006 Page(s):712 – 727.
[7] “Cell Broadband Engine resource center” IBM developerWorks. Retrieved 1 Jul. 2007. <http://www.ibm.com/developerworks/power/cell/documents.html>.
[8] B. Flachs et al, “The microarchitecture of the synergistic processor for a cell processor,” in Solid-State Circuits, IEEE Journal of, Volume 41, Issue 1, Jan. 2006 Page(s):63 – 70.
[9] “Intel NetBurst Architecture” Intel Software Network. Retrieved 5 Aug. 2007. <http://www.intel.com/cd/ids/developer/asmo-na/eng/44004.htm>.
[10] V. Baranov, “Intel Pentium 4 641 (Cedar Mill) – 65nm process technology advancing” Digital-Daily.com. Retrieved 5 Aug. 2007. <http://www.digitaldaily.com/cpu/intel_pentium_4_641/>.
[11] K. Pagiamtzis, “Introduction to Content-Addressable Memory (CAM)” Kostas Pagiamtzis, University of Toronto. Retrieved 5 Aug. 2007. <http://www.pagiamtzis.com/cam/camintro.html>.
[12] G. Kasai et al, “200 MHz/200 MSPS 3.2W at 1.5V Vdd, 9.4 Mbits ternary CAM with new charge injection match detect circuits and bank selection scheme,” in Proc. IEEE Custom Integrated Circuits Conf. (CICC), 2003, Page(s):387 – 390.
[13] S. Hanzawa et al, “A dynamic CAM based on a one-hot-spot block code for million-entry lookup,” in Symp. VLSI Circuits Dig. Tech. Papers, 2004, Page(s):382 – 385.
[14] “A large-scale and low-power CAM architecture featuring a one-hot-spot block code for IP-address lookup in a network router,” in IEEE J. Solid-State Circuits, Volume 40, Issue 4, Apr. 2005, Page(s):853 – 861.