A Simple Regular Pulse Excited and Multi Pulse Excited LPC Encoder-Decoder

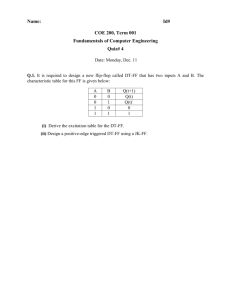

Introduction

In this project, regular pulse excited and multi pulse excited LPC encoder-decoder algorithms were implemented and applied to a typical sine wave and a real speech file. All the files and speech samples are enclosed. The project consists of four parts:

1) A simple multi pulse excitation algorithm for a sine wave
2) A simple regular pulse excitation algorithm for a sine wave
3) Application of step 1 to a speech wave
4) Application of step 2 to a speech wave

There are two kinds of multi pulse algorithms. The first one (step 1) recursively calculates the gain and pulse position, finds the error signal, and repeats the same operation on the error signal. The aim is to find the excitation signal that minimises the error. In the second algorithm (step 2), the pulse positions for every excitation frame are predetermined. The pulse positions are as follows.

Pulse locations for 10 excitation pulses (frame size is 160):

L10 = [1 17 33 49 66 82 98 114 130 146]

Pulse locations for 23 excitation pulses (frame size is 160):

L23 = [1 8 15 22 29 36 43 50 57 64 71 78 85 92 99 106 113 120 127 134 141 148 155]

Because the locations are known, the correlation matrix equations can be solved for the pulse gains.

Algorithm

For this project, LPC synthesis and analysis filters were needed. These are the 1/A(z) and A(z) filters, respectively.
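For reference, the two filters can be written out explicitly. The block below is a sketch that assumes a 10th-order predictor whose coefficient vector has the form [1, a_1, ..., a_10] (which the indexing `a(2:11)` in this report's filter code suggests) and zero signal history before the start of each frame. The symbols a_k, s(n), e(n) and \hat{s}(n) are introduced here for illustration only.

```latex
\begin{align}
A(z) &= 1 + \sum_{k=1}^{10} a_k z^{-k} \\
e(n) &= s(n) + \sum_{k=1}^{10} a_k\, s(n-k)
      && \text{analysis filter } A(z) \\
\hat{s}(n) &= e(n) - \sum_{k=1}^{10} a_k\, \hat{s}(n-k)
      && \text{synthesis filter } 1/A(z)
\end{align}
```

Under these assumptions, feeding the full e(n) from the analysis filter into the synthesis filter reproduces s(n) exactly, so any difference between the two should be at the level of rounding error.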
The MATLAB code for both filters is given below.

az160.m (1/A(z) filter):

    function sn=az160(a,e)
    % 1/A(z)
    % this is the synthesising block
    %
    % a=lpc parameters
    % e=excitation signal
    % signal output->sn
    %
    N=160;                 % frame length (fixed)
    a=-a(2:11);            % drop a(1) and negate the 10 predictor taps
    sn(1:N)=0;
    for n=1:N,
        temp=0;
        for p=1:10,
            if (n-p)>0     % samples before the frame start count as zero
                temp=temp+a(p)*sn(n-p);
            end
        end
        sn(n)=e(n)+temp;
    end

iaz160.m (A(z) filter):

    function e=iaz160(a,s)
    % A(z)
    % this is the analysing block
    %
    % a=lpc parameters
    % s=original signal
    % excitation output->e
    %
    N=160;                 % frame length (fixed)
    a=-a(2:11);            % drop a(1) and negate the 10 predictor taps
    e(1:N)=0;
    for n=1:N,
        temp=0;
        for p=1:10,
            if (n-p)>0     % samples before the frame start count as zero
                temp=temp+a(p)*s(n-p);
            end
        end
        e(n)=s(n)-temp;
    end

After az160 and iaz160 were implemented, they were tested. The excitation frame from the A(z) filter was fed into the 1/A(z) filter, and the synthesised waveform was subtracted from the original signal to find the difference. Then only the first 10 values of the excitation frame were used to synthesise the waveform, and the difference was plotted.

Top to bottom: 1) Original signal. 2) Excitation from the analysis filter. 3) Synthesised waveform for the excitation signal above. 4) Difference between 1 and 3. 5) First 10 values of the excitation. 6) Synthesised waveform from the excitation signal given in 5. 7) Difference between the original signal and 6.

The MATLAB code for this figure is code1.m.

After the LPC filters were implemented, the gain and positioning algorithms were chosen. The book Digital Speech (A. M. Kondoz) describes two algorithms (page 163). The first one calculates the gain and position recursively. The other one fixes suitable pulse positions and obtains the gain values from the correlation matrix.

Algorithm 1:

The input waveform is a sine wave with a period of 160 samples.

1) Find the pulse position that minimises the squared error. (The error is the difference between the original and the synthesised waveform.)
2) For this pulse position, find the corresponding gain value.
3) Given the pulse position and gain, calculate sn using az160.m and subtract it from the signal being matched to obtain the new error signal.
4) Repeat steps 1-3 20 times.

Top to bottom: 1) Original signal. 2) Excitation calculated using algorithm 1. 3) Original signal (green) and synthesised signal (blue). 4) Difference between the two signals.

The MATLAB code for this figure is code2.m.

Algorithm 2:

The main problem of algorithm 1 is its inaccuracy when the number of pulses per frame increases (Kondoz, page 163). As a result, the waveform shapes match but the gains do not fit. In the second algorithm, the pulse positions were fixed as below.

L10 = [1 17 33 49 66 82 98 114 130 146]

The corresponding gain values are calculated using the correlation matrix. The algorithm consists of 2 steps. Again the input waveform is a sine wave with a period of 160 samples.

1) Extract the values of the correlation matrix for the given pulse positions and use them to calculate the gain values.
2) Find the synthesised signal using the az160 code.

Top to bottom: 1) Original signal (green circles) and synthesised signal (blue). 2) Excitation calculated using algorithm 2. 3) Difference between the two signals.

As seen from the figures, the original and the synthesised waveforms match quite well. The MATLAB code for this figure is code3.m.

Application of Algorithms to the Speech

After the algorithms were applied to sine waves, speech samples were used to test the implementations. First single frames were tested, and afterwards the whole speech file was processed.

Algorithm 1

The input waveform is ‘miners.au’; the speech segment lies between the 950th and 1109th samples. 20 excitation pulses were calculated.

Top to bottom: 1) Original signal. 2) Excitation calculated using algorithm 1. 3) Original signal (green) and synthesised signal (blue circles). 4) Difference between the two signals.

The MATLAB code for this figure is code4.m.

The main problem of algorithm 1 appears in this figure: algorithm 1 exaggerates peaks and valleys.
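The recursive search of algorithm 1 (steps 1 to 4 described earlier) can be sketched as follows. This is a minimal illustration, not the report's code2.m or code4.m: the filter coefficients `a` are a hypothetical stable second-order example, the built-in `filter` stands in for az160.m, and the gain for each candidate position is taken from the closed-form least-squares expression, which is an assumption about how steps 1 and 2 could be carried out.

```matlab
% Sketch of a sequential (recursive) multi-pulse search on one frame.
N = 160;                          % frame size used in the report
K = 20;                           % pulses per frame, as in the report
a = [1 -1.6 0.8];                 % hypothetical stable A(z), NOT from the report
s = sin(2*pi*(0:N-1)/N);          % one period of a sine wave

% Column m of H is the response of 1/A(z) to a unit pulse at sample m.
h = filter(1, a, [1 zeros(1, N-1)]);
H = zeros(N, N);
for m = 1:N
    H(m:N, m) = h(1:N-m+1).';
end

r  = s(:);                        % current error, starts as the target
ex = zeros(N, 1);                 % excitation built so far
energy = sum(H.^2, 1).';          % energy of each shifted response
errTrace = zeros(K, 1);           % squared error after each pulse
for k = 1:K
    c = H.' * r;                  % correlation of the error with each response
    [~, m] = max(c.^2 ./ energy); % position giving the largest error reduction
    g = c(m) / energy(m);         % least-squares gain for that position
    ex(m) = ex(m) + g;            % place the pulse
    r = r - g * H(:, m);          % subtract its contribution from the error
    errTrace(k) = sum(r.^2);
end
sn = filter(1, a, ex);            % synthesised frame from the found excitation
```

Each pulse is chosen against the error left by the previous ones, so earlier gains are never revisited. That is one way to read the remark that the shapes match while the gains do not fit.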
Algorithm 2

The input waveform is ‘miners.au’; the speech segment lies between the 950th and 1109th samples. 10 excitation pulses were calculated.

Top to bottom: 1) Original signal (blue) and synthesised signal (black circles). 2) Excitation calculated using algorithm 2. 3) Difference between the two signals.

The MATLAB code for this figure is code5.m.

The synthesised waveforms from the two algorithms are different, and algorithm 2 clearly works better than algorithm 1. In the next part the algorithms are compared.

Comparisons of the Algorithms

Although the differences between the algorithms are obvious, another program was written to examine their similarities and differences. The first set of figures shows, in order, the original signal, the signal from algorithm 1, the signal from algorithm 2, and the difference between the synthesised signals. The performances of the algorithms are quite different. The first algorithm (recursive) is very slow. The second algorithm (correlation matrix) is at least 10 times faster than the first.

Top to bottom: 1) Original signal. 2) Original signal (green) and synthesised signal (blue circles) for algorithm 1 (recursive). 3) Original signal (green) and synthesised signal (blue circles) for algorithm 2 (correlation matrix). 4) Difference between the two synthesised signals. All the calculations are for 20 excitation pulses per frame.

The MATLAB code for this figure is code6.m.

In the next set of figures, the excitation signals are compared. These results are quite different as well.

Top to bottom: 1) Excitation signal derived from algorithm 1. 2) Excitation signal derived from algorithm 2.

The MATLAB code for this figure is code6.m.

From the figures, it is obvious that the excitation signals are different. In the first algorithm, the pulse positions are the locations where the squared error is minimised, whereas in the second algorithm the pulse positions are given and the gain values for these positions are calculated.
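The gain calculation for fixed pulse positions can be sketched as a joint least-squares problem. The example below is an illustration under stated assumptions, not the report's code3.m or code5.m: `a` is a hypothetical stable filter, `filter` replaces az160.m, and the "correlation matrix" is taken to be the matrix of inner products between the shifted impulse responses.

```matlab
% Sketch: joint gain solve for predetermined pulse positions.
N   = 160;                                  % frame size used in the report
pos = [1 17 33 49 66 82 98 114 130 146];    % L10 grid from the report
a   = [1 -1.6 0.8];                         % hypothetical stable A(z), NOT from the report
s   = sin(2*pi*(0:N-1)/N).';                % one period of a sine wave (column)

% Column i of Hp is the response of 1/A(z) to a unit pulse at pos(i).
h  = filter(1, a, [1 zeros(1, N-1)]).';
M  = numel(pos);
Hp = zeros(N, M);
for i = 1:M
    Hp(pos(i):N, i) = h(1:N-pos(i)+1);
end

R = Hp.' * Hp;            % correlation matrix of the pulse responses
c = Hp.' * s;             % cross-correlation with the target frame
g = R \ c;                % all gains solved together

ex = zeros(N, 1);
ex(pos) = g;              % excitation with the fixed pulse positions
sn  = filter(1, a, ex);   % synthesised frame
err = s - sn;             % remaining error
```

Because `R` contains the overlap between every pair of pulse responses, the gains are adjusted for each other in a single solve, rather than one at a time.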
The second algorithm is better because it considers the interaction between the pulses (Kondoz, page 163).

Summary and Conclusion

In this project two different algorithms for multi pulse excitation were examined. First the LPC filters were implemented, and then the algorithms were coded. Both algorithms were tested on sine wave and speech signals, and the results were compared. The main difference between the algorithms is whether the interaction between the pulses is considered. Algorithm 2 (correlation matrix) takes this interaction into account. The mathematical results show that algorithm 2 works better in terms of both performance and waveform matching.

Appendix

The Application of Algorithms on Speech Files

The algorithms described were used on a whole speech file. Because of the limitations of the MATLAB Student Version, segment sizes were kept below 15000. There are 4 extra m files:

code7.m: This file reads ‘miners.au’ and performs algorithm 2 on it. The process takes 2 minutes. The synthesised speech is intelligible. The waveforms this program plots are given below. The name of the wave file is “code7-algorithm2-N82-E23.wav”.

The synthesised speech (above) and the original speech (below).

code8.m: This file reads ‘miners.au’ and performs algorithm 1 on it. The process takes 8 minutes. The synthesised speech is intelligible, but the quality is very low. This code was run for 62 frames. The name of the wave file is “code8-algorithm1-N62-E23.wav”.

code9.m: This code reads ‘boys.au’ and performs algorithm 2 on it. At the end it writes the data to a file named “code9-N82-EXCITATION23.bin”. The first 2 bytes of the file indicate the number of frames. The second 2 bytes are the number of excitation pulses per frame. The rest are the LPC parameters and excitation gains. (The pulse locations are not stored because they are constant.)

code10.m: This code reads “code9-N82-EXCITATION23.bin”, extracts the necessary parameters, constructs the waveform and plays it.
The waveform generated from the binary file is “code9-boys-algorithm2-N83-E23.wav”.
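A file with the layout described for code9.m and code10.m could be written and read back roughly as follows. The report only states that the file starts with two 2-byte counts followed by LPC parameters and gains; everything else here is an assumption made for illustration: `uint16` for the header, `float32` for the payload, 10 LPC coefficients per frame, and a per-frame ordering of coefficients followed by gains. The data values are placeholders.

```matlab
% Sketch of a 2-byte + 2-byte header followed by per-frame parameters.
nFrames = 3;                      % placeholder frame count
nPulses = 23;                     % pulses per frame, as in the report
nLpc    = 10;                     % ASSUMED number of stored LPC coefficients

lpcPar = 0.01 * reshape(1:nLpc*nFrames, nLpc, nFrames);        % placeholder data
gains  = 0.5  * reshape(1:nPulses*nFrames, nPulses, nFrames);  % placeholder data

fname = [tempname '.bin'];        % temporary file, hypothetical name
fid = fopen(fname, 'w');
fwrite(fid, nFrames, 'uint16');   % first 2 bytes: number of frames
fwrite(fid, nPulses, 'uint16');   % next 2 bytes: pulses per frame
fwrite(fid, [lpcPar; gains], 'float32');   % ASSUMED payload type and order
fclose(fid);

% Decoder side: read the header, then one column per frame.
fid  = fopen(fname, 'r');
hdr  = fread(fid, 2, 'uint16');
body = fread(fid, [nLpc + hdr(2), hdr(1)], 'float32');
fclose(fid);
delete(fname);

lpcRead  = body(1:nLpc, :);       % LPC parameters per frame
gainRead = body(nLpc+1:end, :);   % excitation gains per frame
```

Storing only the gains works because the pulse positions are constant, so the decoder can rebuild each excitation frame by placing the gains on the known grid.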