
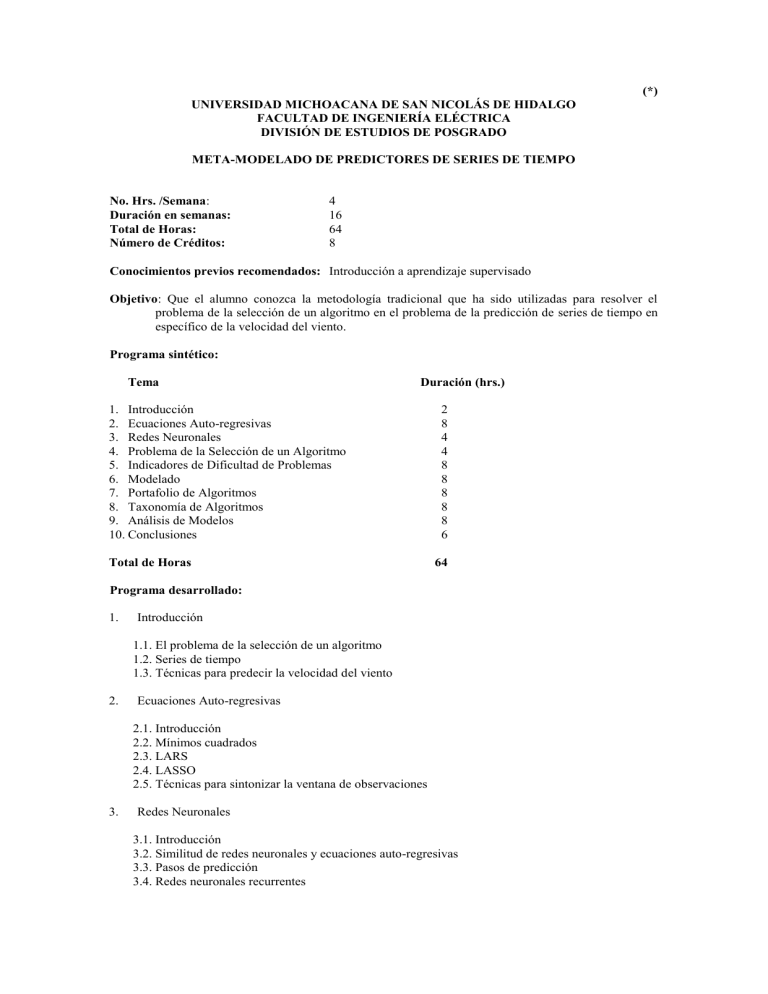

UNIVERSIDAD MICHOACANA DE SAN NICOLÁS DE HIDALGO

FACULTY OF ELECTRICAL ENGINEERING

GRADUATE STUDIES DIVISION

META-MODELING OF TIME SERIES PREDICTORS

| Hours per week | Duration in weeks | Total hours | Number of credits |
| --- | --- | --- | --- |
| 4 | 16 | 64 | 8 |

Recommended prior knowledge: Introduction to supervised learning

Objective: For the student to learn the traditional methodology that has been used to solve the algorithm selection problem in time series forecasting, specifically for wind speed.

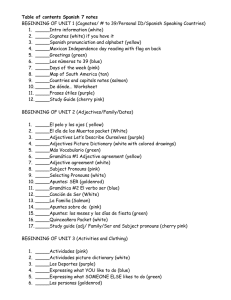

Summary program:

| No. | Topic | Duration (hrs.) |
| --- | --- | --- |
| 1. | Introduction | 2 |
| 2. | Autoregressive Equations | 8 |
| 3. | Neural Networks | 4 |
| 4. | The Algorithm Selection Problem | 4 |
| 5. | Problem Difficulty Indicators | 8 |
| 6. | Modeling | 8 |
| 7. | Algorithm Portfolio | 8 |
| 8. | Taxonomy of Algorithms | 8 |
| 9. | Model Analysis | 8 |
| 10. | Conclusions | 6 |
| | Total hours | 64 |

Detailed program:

1. Introduction

1.1. The algorithm selection problem

1.2. Time series

1.3. Techniques for predicting wind speed

2. Autoregressive Equations

2.1. Introduction

2.2. Least squares

2.3. LARS

2.4. LASSO

2.5. Techniques for tuning the observation window

3. Neural Networks

3.1. Introduction

3.2. Similarity between neural networks and autoregressive equations

3.3. Prediction steps

3.4. Recurrent neural networks

4. The Algorithm Selection Problem

4.1. Introduction

4.2. Methodology

4.3. Survey of the state of the art

5. Problem Difficulty Indicators

5.1. Introduction

5.2. Franco's indicator

5.3. Modifications to Franco's indicator

5.4. Indicators used in related work

5.5. Indicators used in other domains

6. Modeling

6.1. Introduction

6.2. Models used in related work

6.3. Models used in evolutionary algorithms

6.4. Models in other domains

7. Algorithm Portfolio

7.1. Introduction

7.2. Creating a portfolio

7.3. Portfolio analysis

8. Taxonomy of Algorithms

8.1. Introduction

8.2. Taxonomy of genetic programming

8.3. Methodology used in its construction

8.4. Creating a taxonomy

9. Model Analysis

9.1. Introduction

9.2. Analysis performed on genetic programming models

9.3. Analyzing the models created

10. Conclusions

Bibliography:

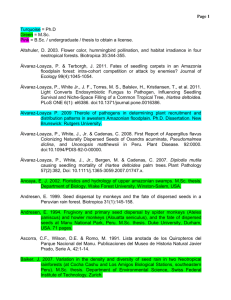

[1] Christian Bessiere, editor. Principles and Practice of Constraint Programming - CP 2007, 13th International Conference, CP 2007, Providence, RI, USA, September 23-27, 2007, Proceedings, volume 4741 of Lecture Notes in Computer Science. Springer, 2007.

[2] Justin A. Boyan and Andrew W. Moore. Learning evaluation functions for global optimization and boolean satisfiability. In Proceedings of the Fifteenth National Conference on Artificial Intelligence and Tenth Innovative Applications of Artificial Intelligence Conference (AAAI-1998, IAAI-1998), pages 3–10, Madison, Wisconsin, United States, 1998. AAAI Press.

[3] Eric A. Brewer. High-level optimization via automated statistical modeling. In Proceedings of the Fifth ACM SIGPLAN Symposium on Principles and Practice of Parallel Programming (PPOPP-1995), pages 80–91, Santa Barbara, California, USA, 1995. ACM Press.

[4] Juan J. Flores, Mario Graff, and Hector Rodriguez. Evolutive design of ARMA and ANN models for time series forecasting. Renewable Energy, (0).

[5] Aoife M. Foley, Paul G. Leahy, Antonino Marvuglia, and Eamon J. McKeogh. Current methods and advances in forecasting of wind power generation. Renewable Energy, 37(1):1–8, January 2012.

[6] Cormac Gebruers and Alessio Guerri. Machine learning for portfolio selection using structure at the instance level. In Wallace [30], page 794.

[7] Cormac Gebruers, Alessio Guerri, Brahim Hnich, and Michela Milano. Making choices using structure at the instance level within a case based reasoning framework. In Jean-Charles Régin and Michel Rueher, editors, CPAIOR, volume 3011 of Lecture Notes in Computer Science, pages 380–386. Springer, 2004.

[8] Cormac Gebruers, Brahim Hnich, Derek G. Bridge, and Eugene C. Freuder. Using CBR to select solution strategies in constraint programming. In Héctor Muñoz-Avila and Francesco Ricci, editors, ICCBR, volume 3620 of Lecture Notes in Computer Science, pages 222–236. Springer, 2005.

[9] Mario Graff and Riccardo Poli. Practical performance models of algorithms in evolutionary program induction and other domains. Artificial Intelligence, 174(15):1254–1276, October 2010.

[10] Mario Graff Guerrero. Models of the Performance of Evolutionary Program Induction Algorithms. PhD thesis, Department of Computing Science and Electronic Engineering, University of Essex, October 2010.

[11] Eric Horvitz, Yongshao Ruan, Carla P. Gomes, Henry A. Kautz, Bart Selman, and David Maxwell Chickering. A Bayesian approach to tackling hard computational problems. In Jack S. Breese and Daphne Koller, editors, UAI, pages 235–244. Morgan Kaufmann, 2001.

[12] Frank Hutter, Youssef Hamadi, Holger H. Hoos, and Kevin Leyton-Brown. Performance prediction and automated tuning of randomized and parametric algorithms. In Frédéric Benhamou, editor, CP, volume 4204 of Lecture Notes in Computer Science, pages 213–228. Springer, 2006.

[13] Michail G. Lagoudakis and Michael L. Littman. Algorithm selection using reinforcement learning. In Langley [15], pages 511–518.

[14] Michail G. Lagoudakis and Michael L. Littman. Learning to select branching rules in the DPLL procedure for satisfiability. Electronic Notes in Discrete Mathematics, 9:344–359, 2001.

[15] Pat Langley, editor. Proceedings of the Seventeenth International Conference on Machine Learning (ICML 2000), Stanford University, Stanford, CA, USA, June 29 - July 2, 2000. Morgan Kaufmann, 2000.

[16] Kevin Leyton-Brown, Eugene Nudelman, Galen Andrew, Jim McFadden, and Yoav Shoham. Boosting as a metaphor for algorithm design. In Francesca Rossi, editor, CP, volume 2833 of Lecture Notes in Computer Science, pages 899–903. Springer, 2003.

[17] Kevin Leyton-Brown, Eugene Nudelman, Galen Andrew, Jim McFadden, and Yoav Shoham. A portfolio approach to algorithm selection. In Georg Gottlob and Toby Walsh, editors, IJCAI, pages 1542–1542. Morgan Kaufmann, 2003.

[18] Kevin Leyton-Brown, Eugene Nudelman, and Yoav Shoham. Learning the empirical hardness of optimization problems: The case of combinatorial auctions. In Pascal Van Hentenryck, editor, CP, volume 2470 of Lecture Notes in Computer Science, pages 556–572. Springer, 2002.

[19] Kevin Leyton-Brown, Eugene Nudelman, and Yoav Shoham. Empirical hardness models for combinatorial auctions. In Peter Cramton, Yoav Shoham, and Richard Steinberg, editors, Combinatorial Auctions, chapter 19, pages 479–504. MIT Press, 2006.

[20] Kevin Leyton-Brown, Eugene Nudelman, and Yoav Shoham. Empirical hardness models: Methodology and a case study on combinatorial auctions. J. ACM, 56(4):1–52, 2009.

[21] Spyros Makridakis, Steven C. Wheelwright, and Rob J. Hyndman. Forecasting Methods and Applications. John Wiley & Sons, Inc., 3rd edition, 1992.

[22] NOAA. Global surface summary of day. http://www.ncdc.noaa.gov/cgi-bin/res40.pl, January 2012.

[23] Eugene Nudelman, Kevin Leyton-Brown, Holger H. Hoos, Alex Devkar, and Yoav Shoham. Understanding random SAT: Beyond the clauses-to-variables ratio. In Wallace [30], pages 438–452.

[24] John R. Rice. The algorithm selection problem. Advances in Computers, 15:65–118, 1976.

[25] Bryan Singer and Manuela M. Veloso. Learning to predict performance from formula modeling and training data. In Langley [15], pages 887–894.

[26] Kate A. Smith-Miles. Cross-disciplinary perspectives on meta-learning for algorithm selection. ACM Comput. Surv., 41(1):1–25, 2008.

[27] Orestis Telelis and Panagiotis Stamatopoulos. Combinatorial optimization through statistical instance-based learning. In Proceedings of the 13th IEEE International Conference on Tools with Artificial Intelligence (ICTAI 2001), page 203, Dallas, Texas, USA, 2001. IEEE Computer Society.

[28] Nathan Thomas, Gabriel Tanase, Olga Tkachyshyn, Jack Perdue, Nancy M. Amato, and Lawrence Rauchwerger. A framework for adaptive algorithm selection in STAPL. In Keshav Pingali, Katherine A. Yelick, and Andrew S. Grimshaw, editors, PPOPP, pages 277–288. ACM, 2005.

[29] Rich Vuduc, James Demmel, and Jeff Bilmes. Statistical models for automatic performance tuning. In Vassil N. Alexandrov, Jack Dongarra, Benjoe A. Juliano, René S. Renner, and Chih Jeng Kenneth Tan, editors, International Conference on Computational Science (1), volume 2073 of Lecture Notes in Computer Science, pages 117–126. Springer, 2001.

[30] Mark Wallace, editor. Principles and Practice of Constraint Programming - CP 2004, 10th International Conference, CP 2004, Toronto, Canada, September 27 - October 1, 2004, Proceedings, volume 3258 of Lecture Notes in Computer Science. Springer, 2004.

[31] H. Wang, G. Li, and C. L. Tsai. Regression coefficient and autoregressive order shrinkage and selection via the lasso. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 69(1):63–78, 2007.

[32] Xiaozhe Wang, Kate Smith-Miles, and Rob Hyndman. Rule induction for forecasting method selection: Meta-learning the characteristics of univariate time series. Neurocomputing, 72(10-12):2581–2594, June 2009.

[33] Lin Xu, Holger H. Hoos, and Kevin Leyton-Brown. Hierarchical hardness models for SAT. In Bessiere [1], pages 696–711.

[34] Lin Xu, Frank Hutter, Holger H. Hoos, and Kevin Leyton-Brown. SATzilla-07: The design and analysis of an algorithm portfolio for SAT. In Bessiere [1], pages 712–727.

[35] Lin Xu, Frank Hutter, Holger H. Hoos, and Kevin Leyton-Brown. SATzilla: portfolio-based algorithm selection for SAT. Journal of Artificial Intelligence Research, 32:565–606, June 2008.

Teaching and learning methodology:

| Activity | Used |
| --- | --- |
| Review of concepts, analysis, and problem solving in class | X |
| Reading material outside class | X |
| Exercises outside class (homework) | X |
| Documentary research | X |
| Preparation of technical reports or projects | X |

Evaluation methodology:

Attendance

Homework

Preparation of technical reports or projects

Exams

Program proposed by: Mario Graff Guerrero

Approval date: March 23, 2012

X

X