Lecture 3

Sept. 30, 2004 LEC #3

ECON 240A

Probability

L. Phillips

I. Introduction

Probability has its origins in curiosity about the laws governing gambles. In the mid-seventeenth century, the Chevalier De Mere posed the following puzzle: which is more likely, (1) rolling at least one six in four throws of a single die, or (2) rolling at least one double six in 24 throws of a pair of dice? De Mere asked his friend Blaise Pascal this question. In turn, Pascal enlisted the interest of Pierre De Fermat, and in their correspondence the two worked out the foundations of the theory of probability.

While gambling and probability are interesting topics in their own right,

probability is also a step towards making statistical inferences. The key is using a random

sample combined with probability models to estimate, for example, the fraction of voters

who will vote for a candidate. The pathway of understanding is a sequence of Bernoulli

trials leading to the binomial distribution, which for large numbers of observations can be

approximated by the normal distribution. These distributions can be used to estimate the

fraction that will vote yes and to calculate intervals within which the true fraction will lie,

say, 95 percent of the time.

II. Random Experiments

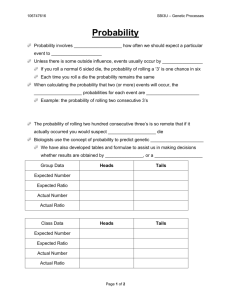

A key to understanding probability is to model certain activities such as flipping a

coin or throwing a die. The set of elementary outcomes, e.g. {heads, tails} or

symbolically {H, T}, is the sample space. Branching or tree diagrams illustrate these

random experiments and their elementary outcomes.

Figure 1: Flipping a Coin (branches: H, T)

Figure 2: Rolling a Die (branches: 1, 2, 3, 4, 5, 6)

Flipping a coin is an example of a Bernoulli trial, a random experiment with two

elementary outcomes, such as yes/no or heads/tails.

In a fair game, the probability of heads equals that of tails, for example, but the

laws of probability hold whether the game is fair or not. If the game is fair, the

probabilities of elementary outcomes for simple random experiments are intuitively

obvious, i.e. equally likely. In the case of flipping a coin, the probability of heads, P(H),

equals the probability of tails, P(T), and both equal one half. In the example of rolling a die, the

probability of rolling a one, P(1), equals the probability of rolling a six, P(6), and both equal one

sixth.

Probabilities of elementary outcomes are non-negative and the probabilities of all

the elementary outcomes sum to one.

III. Events

Elementary outcomes can be grouped into sets that define an event. For example,

in the random experiment of throwing a die, the die can come up even, with the set of

elementary outcomes {2,4,6}, or the die can come up odd with the set of elementary

outcomes {1, 3, 5}. The probability of the event even is the sum of the probabilities of

the elementary outcomes in its set, so if the die is fair, P(even) = P(2) + P(4) + P(6) =

3/6.
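The bookkeeping above can be sketched in a few lines of Python. This is only an illustration under the assumption of a fair die; the names `die` and `prob` are ours and do not come from the lecture.

```python
from fractions import Fraction

# Sample space for one roll of a fair die: each elementary outcome has probability 1/6.
die = {face: Fraction(1, 6) for face in range(1, 7)}

# Probabilities of elementary outcomes are non-negative and sum to one.
assert all(p >= 0 for p in die.values())
assert sum(die.values()) == 1


def prob(event, space):
    """Probability of an event: the sum over the elementary outcomes in its set."""
    return sum((space[outcome] for outcome in event), Fraction(0))


even = {2, 4, 6}
odd = {1, 3, 5}

print(prob(even, die))  # 1/2, i.e. 3/6
print(prob(odd, die))   # 1/2
```

Exact fractions are used instead of floating point so that the sums match the hand calculation exactly.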

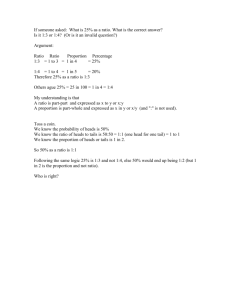

Consider the random experiment of flipping a nickel and a quarter

simultaneously. There are four elementary outcomes: (1) nickel heads and quarter heads,

{NH, QH}, (2) nickel heads and quarter tails, {NH, QT}, (3) nickel tails and quarter

heads, {NT, QH}, and (4) nickel tails and quarter tails, {NT, QT}.

This is illustrated in Figure 3.

| | Quarter heads (QH) | Quarter tails (QT) |
|---|---|---|
| Nickel heads (NH) | NH, QH | NH, QT |
| Nickel tails (NT) | NT, QH | NT, QT |

Figure 3: Flipping a Nickel and a Quarter Simultaneously, Elementary Outcomes

Once again, these elementary events can be combined into events, for example, the event nickel heads: event {Nickel Heads} = {{NH, QH}, {NH, QT}}.

Assuming the coins are fair, the probability of the event nickel heads, P(NH), is the sum of the probabilities of the elementary events that compose it: P(NH) = P(NH, QH) + P(NH, QT) = 2/4.

Similarly, the event quarter tails involves two elementary outcomes:

event {QT} = {{NH, QT}, {NT, QT}}, with probability

P(QT) = P(NH, QT) + P(NT, QT) = 2/4.
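The two-coin experiment can be enumerated the same way. The sketch below assumes fair coins and represents each elementary outcome as a (nickel, quarter) pair; the variable names are illustrative only.

```python
from fractions import Fraction
from itertools import product

# Elementary outcomes are (nickel, quarter) pairs; fair coins make all four equally likely.
space = {outcome: Fraction(1, 4) for outcome in product("HT", repeat=2)}


def prob(event):
    """Sum the probabilities of the elementary outcomes that make up the event."""
    return sum((space[outcome] for outcome in event), Fraction(0))


nickel_heads = {(n, q) for (n, q) in space if n == "H"}   # {NH, QH}, {NH, QT}
quarter_tails = {(n, q) for (n, q) in space if q == "T"}  # {NH, QT}, {NT, QT}

print(len(space))           # 4 elementary outcomes
print(prob(nickel_heads))   # 1/2, i.e. 2/4
print(prob(quarter_tails))  # 1/2, i.e. 2/4
```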

These events, nickel heads and quarter tails, can also be combined into events: (1) nickel heads or quarter tails, $\{NH \cup QT\}$, i.e. at least one of the two events takes place; (2) nickel heads and quarter tails, $\{NH \cap QT\}$, i.e. both events take place; (3) the event not nickel heads, $\{\overline{NH}\}$, called the event complementary to nickel heads, which is equivalent to the event nickel tails, {NT}.

IV. The Addition Rule

The event {nickel heads or quarter tails}, $\{NH \cup QT\}$, includes three elementary events:

$\{NH \cup QT\} = \{\{NH, QH\}, \{NH, QT\}, \{NT, QT\}\}$.

The probability of nickel heads or quarter tails is the probability of the first row of Figure 3, plus the probability of the second column of Figure 3, minus the overlap or intersection, i.e. the double counting of the upper right-hand corner. This leads to the addition rule:

$P(NH \cup QT) = P(NH) + P(QT) - P(NH \cap QT) = 2/4 + 2/4 - 1/4 = 3/4$.

If two events, e.g. event A and event B, are mutually exclusive, then $P(A \cap B) = 0$, so $P(A \cup B) = P(A) + P(B)$. Since elementary events are mutually exclusive by definition, an event formed by combining them, for example nickel heads (above), has a probability which is just the sum of the probabilities of the elementary outcomes composing it, e.g. recall that P(NH) = P(NH, QH) + P(NH, QT) = 2/4.

The probability of an event and the probability of its complement must sum to one, for example, $P(\overline{NH}) + P(NH) = 1$.
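The three rules in this section can be checked numerically. The following is a minimal sketch, assuming fair coins and using Python sets for events, so that union is `|`, intersection is `&`, and the complement is a set difference from the sample space. All names are illustrative.

```python
from fractions import Fraction
from itertools import product

# (nickel, quarter) outcomes for two fair coins.
space = {outcome: Fraction(1, 4) for outcome in product("HT", repeat=2)}


def prob(event):
    """Sum the probabilities of the elementary outcomes in the event."""
    return sum((space[outcome] for outcome in event), Fraction(0))


nh = {o for o in space if o[0] == "H"}  # nickel heads
qt = {o for o in space if o[1] == "T"}  # quarter tails

# Addition rule: P(NH or QT) = P(NH) + P(QT) - P(NH and QT).
assert prob(nh | qt) == prob(nh) + prob(qt) - prob(nh & qt)
print(prob(nh | qt))  # 3/4

# Mutually exclusive events: nickel heads and its complement, nickel tails.
nt = set(space) - nh
assert nh & nt == set()                      # no overlap, so P(NH and NT) = 0
assert prob(nh | nt) == prob(nh) + prob(nt)  # addition rule without the overlap term

# Complement rule: P(not NH) + P(NH) = 1.
assert prob(nt) + prob(nh) == 1
```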

V. Interpretations or Meanings of Probability

There are various perspectives on the meaning of probability. One is the classical perspective, based on gambling. Its basic assumption is that the gamble or random experiment is fair and that the elementary outcomes are equally likely.

But not all uncertainty in life is associated with gambles. Much of life itself is

uncertain. For example, will the Dow Jones Industrials end the fourth quarter of the year

2004 higher than it was at the end of the third quarter? One approach to such questions is

empirical. In the last ten years, what fraction of the quarters witnessed a rise in the Dow?

Another perspective on uncertainty in events is subjective or personal. For example,

the likelihood of success in your academic career and professional career could be

assessed empirically, based on the experiences of others who faced similar

circumstances. Alternatively, you could make a personal or subjective estimate of your

chances based on your own attributes and other information you consider relevant.

VI. Conditional Probability

Suppose that when flipping a nickel and a quarter simultaneously, the quarter

lands tails while the nickel is still spinning. What is the probability of the nickel landing

heads given the quarter landed tails, i.e. P(NH/QT)?

The answer is that this conditional probability of nickel heads given quarter tails

is equal to the probability of nickel heads and quarter tails divided by the probability of

quarter tails:

$P(NH/QT) = P(NH \cap QT)/P(QT) = \frac{1/4}{1/2} = 1/2$.

This can be rearranged and stated as the multiplication rule:

$P(NH \cap QT) = P(NH/QT)\,P(QT)$.
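As a rough sketch of the definition and the multiplication rule, again assuming fair coins, a conditional probability can be computed as a ratio of two event probabilities. The helper name `cond_prob` is our own.

```python
from fractions import Fraction
from itertools import product

# (nickel, quarter) outcomes for two fair coins.
space = {outcome: Fraction(1, 4) for outcome in product("HT", repeat=2)}


def prob(event):
    """Sum the probabilities of the elementary outcomes in the event."""
    return sum((space[outcome] for outcome in event), Fraction(0))


def cond_prob(a, b):
    """P(A/B) = P(A and B) / P(B); only defined when P(B) > 0."""
    if prob(b) == 0:
        raise ValueError("conditioning event has probability zero")
    return prob(a & b) / prob(b)


nh = {o for o in space if o[0] == "H"}  # nickel heads
qt = {o for o in space if o[1] == "T"}  # quarter tails

print(cond_prob(nh, qt))  # 1/2

# Multiplication rule: P(NH and QT) = P(NH/QT) * P(QT).
assert prob(nh & qt) == cond_prob(nh, qt) * prob(qt)
```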

Another example is rolling a die. Suppose you are told that the number appearing

is even. What is the probability that the number is four? The conditional sample space has

been reduced since the odd elementary events are ruled out. So P(4/even) = 1/3, or using

the definition of conditional probability (the multiplication rule rearranged):

$P(4/\text{even}) = P(4 \cap \text{even})/P(\text{even}) = \frac{1/6}{1/2} = 1/3$.

Note that P(4/even) is not equal to P(4) = 1/6, where {4} is one of six elementary

outcomes.
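The idea of a reduced sample space can be illustrated directly. In this sketch, which assumes a fair die, conditioning on "even" drops the odd faces and rescales the remaining probabilities so that they again sum to one. The names are illustrative.

```python
from fractions import Fraction

# Fair die: six equally likely elementary outcomes.
die = {face: Fraction(1, 6) for face in range(1, 7)}
even = {2, 4, 6}

# P(even) is the sum over the faces in the event.
p_even = sum((die[face] for face in even), Fraction(0))

# Conditional sample space: keep only the even faces and divide by P(even).
reduced = {face: die[face] / p_even for face in even}

assert sum(reduced.values()) == 1  # still a valid set of probabilities

print(reduced[4])  # 1/3, the conditional probability P(4/even)
print(die[4])      # 1/6, the unconditional probability P(4)
```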

VII. Independence

In the example above, whether the nickel lands heads or tails is not conditional on

the outcome for the quarter. Indeed, the outcome for the nickel is independent of the

outcome for the quarter. In this case, the conditional probability of the nickel landing

heads is just the probability of getting heads in the flip of a nickel, i.e.

P(NH/QT) = P(NH),

and so the multiplication rule can be stated, given independence, as:

$P(NH \cap QT) = P(NH)\,P(QT)$.
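The product form gives a simple test for independence. The sketch below, with fair coins and a fair die assumed, checks it for the nickel and quarter and contrasts it with the die example, where "four" and "even" are not independent. The function name `independent` is our own.

```python
from fractions import Fraction
from itertools import product


def prob(event, space):
    """Sum the probabilities of the elementary outcomes in the event."""
    return sum((space[outcome] for outcome in event), Fraction(0))


def independent(a, b, space):
    """Events A and B are independent when P(A and B) = P(A) * P(B)."""
    return prob(a & b, space) == prob(a, space) * prob(b, space)


# Two fair coins: (nickel, quarter) outcomes.
coins = {outcome: Fraction(1, 4) for outcome in product("HT", repeat=2)}
nh = {o for o in coins if o[0] == "H"}
qt = {o for o in coins if o[1] == "T"}
print(independent(nh, qt, coins))  # True: 1/4 equals 1/2 * 1/2

# One fair die: knowing the roll is even changes the chance of a four.
die = {face: Fraction(1, 6) for face in range(1, 7)}
print(independent({4}, {2, 4, 6}, die))  # False: 1/6 is not 1/6 * 1/2
```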

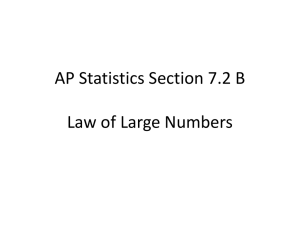

VIII. De Mere Again

With all of this probability apparatus at our disposal, we might turn to De Mere’s

question again. What is the probability of rolling at least one six in four throws of a single

die?

We could use a probability tree, as partially illustrated in Figure 4.

Figure 4: Partial Probability Tree for Four Throws of the Die (each throw branches to 1, 2, 3, 4, 5, 6)

If we had patience and a large enough piece of paper, we could enumerate all of the

elementary outcomes, such as {1,1,1,1}. But there will be 6*6*6*6 = 1296 elementary

outcomes. There must be an easier way.
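What is tedious on paper is quick on a computer, so the full enumeration makes a useful check on any shortcut. The sketch below assumes a fair die, so that all outcomes are equally likely and counting is enough.

```python
from itertools import product

# Every elementary outcome of four throws, e.g. (1, 1, 1, 1).
outcomes = list(product(range(1, 7), repeat=4))

total = len(outcomes)                           # 6*6*6*6 = 1296
with_six = sum(1 for o in outcomes if 6 in o)   # outcomes containing at least one six

print(total)                        # 1296
print(with_six)                     # 671
print(round(with_six / total, 3))   # 0.518
```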

We can use this conceptual framework of the tree or branching diagram. First, for

simplicity, consider two rolls of the die. Rolling at least one six could be one six on either

roll, or could be a six on each roll. The complement to this event is rolling zero sixes, no

six on the first roll and no six on the second roll. We can figure out the probability of this

event:

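$P(\overline{6} \cap \overline{6}) = P(\overline{6}) \cdot P(\overline{6}) = (5/6)^2$.

Because each roll of the die is a random experiment, the outcome on each roll is independent of previous rolls of the die, which is why we can use the special multiplication rule for independent events. It is a simple extrapolation to four rolls of the die:

$P(\overline{6} \cap \overline{6} \cap \overline{6} \cap \overline{6}) = P(\overline{6}) \cdot P(\overline{6}) \cdot P(\overline{6}) \cdot P(\overline{6}) = (5/6)^4$.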

So the probability of rolling one or more sixes in four throws is:

$1 - (5/6)^4 = 1 - 625/1296 \approx 1 - 0.482 = 0.518$.

In solving De Mere’s problem, we used the conceptual framework of the

probability tree, assumed a fair die, invoked random experiments to obtain independence,

and solved for the event complementary to one or more sixes. The same approach can be

used to solve for the probability of rolling at least one double six in 24 throws of a pair of

dice.
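The complement argument can be written as one small function and applied to both halves of De Mere's puzzle. This is a sketch that assumes fair dice and independent throws; the function name `prob_at_least_one` is ours.

```python
from fractions import Fraction


def prob_at_least_one(p_success, n_trials):
    """1 - (1 - p)^n: the chance of one or more successes in n independent trials."""
    return 1 - (1 - p_success) ** n_trials


# (1) At least one six in four throws of a single die: success probability 1/6.
one_six = prob_at_least_one(Fraction(1, 6), 4)

# (2) At least one double six in 24 throws of a pair of dice: success probability 1/36.
double_six = prob_at_least_one(Fraction(1, 36), 24)

print(one_six)                      # 671/1296
print(round(float(one_six), 3))     # 0.518
print(round(float(double_six), 3))  # 0.491
```

Under these assumptions the first gamble is slightly better than even and the second slightly worse.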

Independence is one key to the solution. We were able to invoke it because we conducted

random experiments. This is the motivation behind using random samples to make

inferences.

We will next pursue a sequence of Bernoulli events, for example, flipping a coin

two or more times, using the branching diagram and independence to derive the discrete

binomial distribution. We will see that for a large number of trials or flips, the binomial

can be approximated by the continuous normal or Gaussian distribution.
