Chapters 8 and 9 Review


Chapters 8 and 9 Statistical Inference

Types of Statistical Inference:

Point Estimation (Chapters 7, 8, 9)

Interval Estimation (Chapters 7, 8, 9)

Hypothesis Testing (Chapters 8, 9)

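Point Estimation:

| Population Parameter | Unbiased Estimator (Statistic) |
|---|---|
| $\mu$ = Mean | $\bar{X} = \frac{1}{n}\sum_{i=1}^{n} X_i$ = Sample Mean |
| $p$ = Proportion | $\hat{p} = \frac{X}{n}$ = Sample Proportion |
| $\sigma$ = Std. Dev. | $S$ = Sample Std. Dev. (Small Bias) |
| $\mu_1 - \mu_2$ | $\bar{X}_1 - \bar{X}_2$ |
| $\mu_d = \mu_1 - \mu_2$ | $\bar{X}_d = \frac{1}{n}\sum_{i=1}^{n} d_i = \frac{1}{n}\sum_{i=1}^{n} (X_{1i} - X_{2i})$ |
| $p_1 - p_2$ | $\hat{p}_1 - \hat{p}_2$, where $\hat{p}_1 = \frac{X_1}{n_1}$, $\hat{p}_2 = \frac{X_2}{n_2}$ |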
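The estimators in the table above can be computed directly from data. The following is a minimal sketch using only the Python standard library; the data values and the helper names `sample_proportion` and `paired_mean_difference` are hypothetical and chosen for illustration.

```python
from math import sqrt
from statistics import mean, stdev


def sample_proportion(successes, n):
    """p-hat = X / n, the sample proportion."""
    return successes / n


def paired_mean_difference(x1, x2):
    """X-bar_d = (1/n) * sum(x1_i - x2_i) for matched (dependent) samples."""
    if len(x1) != len(x2):
        raise ValueError("paired samples must have the same length")
    return mean(a - b for a, b in zip(x1, x2))


# Hypothetical measurements on the same four subjects (illustration only).
before = [12.0, 15.0, 11.0, 14.0]
after = [10.0, 14.0, 11.0, 11.0]

x_bar = mean(before)                           # point estimate of mu
s = stdev(before)                              # point estimate of sigma (n - 1 divisor)
d_bar = paired_mean_difference(before, after)  # point estimate of mu_d
p_hat = sample_proportion(18, 50)              # point estimate of p
```

Note that `statistics.stdev` uses the n - 1 divisor, which matches the sample standard deviation S in the table.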

Chapters 8 and 9 Summary Fall 2007


Interval Estimation

Confidence Interval for a parameter:

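General Format: Estimator ± ME

ME = (t* or z*) × SE(Estimator)

| Parameter | Estimator | Estimate of the SE(Estimator) |
|---|---|---|
| $\mu$ | $\bar{X}$ | $\dfrac{S}{\sqrt{n}}$ |
| $\mu_d = \mu_1 - \mu_2$ (Dependent Samples) | $\bar{X}_d = \frac{1}{n}\sum_{i=1}^{n} d_i$ | $\dfrac{S_d}{\sqrt{n}}$ |
| $\mu_1 - \mu_2$ (Independent Samples) | $\bar{X}_1 - \bar{X}_2$ | $\sqrt{\dfrac{S_1^2}{n_1} + \dfrac{S_2^2}{n_2}}$ |
| $p$ | $\hat{p}$ | $\sqrt{\dfrac{\hat{p}(1 - \hat{p})}{n}}$ |
| $p_1 - p_2$ (Independent Samples) | $\hat{p}_1 - \hat{p}_2$ | $\sqrt{\dfrac{\hat{p}_1(1 - \hat{p}_1)}{n_1} + \dfrac{\hat{p}_2(1 - \hat{p}_2)}{n_2}}$ |
| $p_1 - p_2$ (Dependent Samples) | $\hat{p}_1 - \hat{p}_2$ | Not covered |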
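As a rough illustration of the general format Estimator ± ME, the sketch below builds intervals from the standard errors in the table above. It uses only the Python standard library, which provides normal quantiles but no t quantiles, so the t* value is assumed to be looked up in a t table and passed in by the caller. All function names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def confidence_interval(estimate, se, critical_value):
    """Estimator ± ME, with ME = (t* or z*) × SE(Estimator)."""
    me = critical_value * se
    return estimate - me, estimate + me


def z_star(confidence):
    """Two-sided critical value z* for a confidence level such as 0.95."""
    return NormalDist().inv_cdf(1 - (1 - confidence) / 2)


def proportion_ci(successes, n, confidence=0.95):
    """CI for p using SE = sqrt(p-hat(1 - p-hat) / n)."""
    p_hat = successes / n
    se = sqrt(p_hat * (1 - p_hat) / n)
    return confidence_interval(p_hat, se, z_star(confidence))


def two_proportion_ci(x1, n1, x2, n2, confidence=0.95):
    """CI for p1 - p2 from independent samples (unpooled SE)."""
    p1, p2 = x1 / n1, x2 / n2
    se = sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    return confidence_interval(p1 - p2, se, z_star(confidence))


def mean_ci(x_bar, s, n, t_star):
    """CI for mu; t_star must come from a t table with n - 1 df."""
    return confidence_interval(x_bar, s / sqrt(n), t_star)
```

For example, `mean_ci(10.0, 2.0, 16, 2.131)` uses the tabled 95% critical value for 15 degrees of freedom and gives an interval of roughly 8.93 to 11.07.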


Hypothesis Testing

Steps in Hypothesis Testing:

1. Problem identification

(Define the population(s), parameter(s),

technique to use, etc.)

2. Check for assumptions

Types of random variables

Random Sampling

Samples large enough? [Depends on 1]

3. Specify the hypotheses

One sample test on population proportion:

i. Ho: p = p0 vs. Ha: p < p0 or

ii. Ho: p = p0 vs. Ha: p > p0 or

iii. Ho: p = p0 vs. Ha: p ≠ p0

One sample test on population mean:

i. Ho: μ = μ0 vs. Ha: μ > μ0 or

ii. Ho: μ = μ0 vs. Ha: μ < μ0 or

iii. Ho: μ = μ0 vs. Ha: μ ≠ μ0

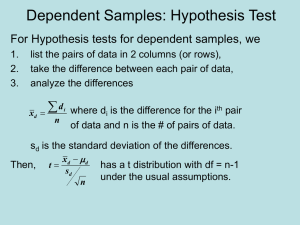

Test for difference of population means (i.e.,

μd = μ1 – μ2) using two dependent samples

i. Ho: μd = 0 vs. Ha: μd > 0 or

ii. Ho: μd = 0 vs. Ha: μd < 0 or

iii. Ho: μd = 0 vs. Ha: μd ≠ 0


Test on difference of two population

proportions (using either dependent or

independent samples)

i. Ho: p1 – p2 = 0 vs. Ha: p1 – p2 > 0 or

ii. Ho: p1 – p2 = 0 vs. Ha: p1 – p2 < 0 or

iii. Ho: p1 – p2 = 0 vs. Ha: p1 – p2 ≠ 0

Test for difference of population means (i.e.,

μ1 – μ2) using two independent samples

i. Ho: μ1 – μ2 = 0 vs. Ha: μ1 – μ2 > 0 or

ii. Ho: μ1 – μ2 = 0 vs. Ha: μ1 – μ2 < 0 or

iii. Ho: μ1 – μ2 = 0 vs. Ha: μ1 – μ2 ≠ 0

4. Specify the Test Statistic to use

(Depends on the hypotheses)

General Form:

$$TS = Z = \frac{\text{Estimator} - \text{Number in Ho}}{SE(\text{Estimator})} \sim N(0,1)$$

$$TS = T = \frac{\text{Estimator} - \text{Number in Ho}}{\text{Est. } SE(\text{Estimator})} \sim t(df)$$

See the following table for the test statistics (and

their distributions) for testing hypotheses on

different parameters.
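The general form (Estimator minus the number in Ho, divided by the standard error) can be sketched as one small function that the specific tests reuse. This is a minimal illustration with hypothetical function names, applied here to a one-sample mean and to matched pairs.

```python
from math import sqrt
from statistics import mean, stdev


def standardized_statistic(estimate, null_value, se):
    """(Estimator - Number in Ho) / SE(Estimator)."""
    return (estimate - null_value) / se


def one_sample_t(data, mu0):
    """Return (Tcal, df) for Ho: mu = mu0, with SE estimated by S / sqrt(n)."""
    n = len(data)
    se = stdev(data) / sqrt(n)
    return standardized_statistic(mean(data), mu0, se), n - 1


def paired_t(x1, x2):
    """Return (Tcal, df) for Ho: mu_d = 0 using the differences d_i = x1_i - x2_i."""
    diffs = [a - b for a, b in zip(x1, x2)]
    return one_sample_t(diffs, 0.0)
```

The paired test is just the one-sample test applied to the differences, which is why both share the t(n - 1) distribution.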


Parameter

d = 1 – 2

(Dependent Samples)

1 – 2

(Independent Samples)

Test Statistic and its Distribution

X 0

~ t( n1 )

S/ n

X 0

T d

~ t( n1 )

Sd / n

( X 1 X 2 ) 0

T

~ t( df )

2

2

S1 S2

n1 n2

T

df = smaller of {(n1 – 1), (n2 – 1)}

or df = (n1 – 1) + (n2 – 1)

p

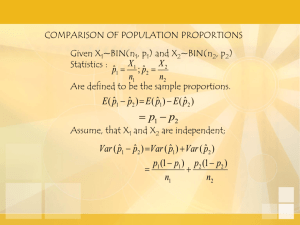

p1 – p2

(Independent samples)

p1 – p2

(Dependent Samples)

p̂ p0

~ N( 0,1 )

p0 ( 1 p0 )

n

( ˆp1 ˆp2 ) 0

Z

~ N( 0,1 )

1 1

ˆp( 1 ˆp )

n1 n2

YN NY

Z

~ N( 0,1 )

YN NY

Z

DO NOT FORGET TO VERIFY

THAT THE ASSUMPTION(S) NEEDED

FOR THE ABOVE DISTRIBUTIONS

IS/ARE SATISFIED
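The test statistics listed above can be sketched in a few lines of Python. Two points here are assumptions not spelled out in this summary: the pooled proportion is taken to be (X1 + X2) / (n1 + n2), the usual choice under Ho: p1 – p2 = 0, and YN and NY are taken to be the counts of discordant pairs (yes/no and no/yes). The function names are hypothetical.

```python
from math import sqrt


def one_proportion_z(x, n, p0):
    """Z for Ho: p = p0; the SE uses p0, not p-hat."""
    p_hat = x / n
    return (p_hat - p0) / sqrt(p0 * (1 - p0) / n)


def two_proportion_z(x1, n1, x2, n2):
    """Z for Ho: p1 - p2 = 0 with independent samples."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)  # assumption: pooled estimate under Ho
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    return (p1 - p2) / se


def two_sample_t(mean1, s1, n1, mean2, s2, n2):
    """Return (Tcal, df) for Ho: mu1 - mu2 = 0, using the conservative df."""
    se = sqrt(s1 ** 2 / n1 + s2 ** 2 / n2)
    return (mean1 - mean2) / se, min(n1 - 1, n2 - 1)


def dependent_proportions_z(yn, ny):
    """Z = (YN - NY) / sqrt(YN + NY) for dependent samples."""
    return (yn - ny) / sqrt(yn + ny)
```

None of these functions checks the sample-size or randomness assumptions; that verification still has to happen before the result is interpreted.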


5. The p-value (Depends on the alternative)

If Ha: p < p0 then p-value = P(Z ≤ Zcal)

If Ha: p > p0 then p-value = P(Z ≥ Zcal)

If Ha: p ≠ p0 then p-value = 2×P(Z ≥ |Zcal|)

If Ha: p1 – p2 < 0 then p-value = P(Z ≤ Zcal)

If Ha: p1 – p2 > 0 then p-value = P(Z ≥ Zcal)

If Ha: p1 – p2 ≠ 0 then p-value = 2×P(Z ≥ |Zcal|)

If Ha: μ < μ0 then p-value = P(T ≤ Tcal)

If Ha: μ > μ0 then p-value = P(T ≥ Tcal)

If Ha: μ ≠ μ0 then p-value = 2×P(T ≥ |Tcal|)

If Ha: μd < 0 then p-value = P(T ≤ Tcal)

If Ha: μd > 0 then p-value = P(T ≥ Tcal)

If Ha: μd ≠ 0 then p-value = 2×P(T ≥ |Tcal|)

If Ha: μ1 – μ2 < 0 then p-value = P(T ≤ Tcal)

If Ha: μ1 – μ2 > 0 then p-value = P(T ≥ Tcal)

If Ha: μ1 – μ2 ≠ 0 then p-value = 2×P(T ≥ |Tcal|)
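The three p-value rules for Z statistics can be sketched as a single function. This is an illustrative helper with hypothetical names (`z_p_value` and the labels "less", "greater", "two-sided"); it covers only the normal case because the Python standard library has no t distribution. T-based p-values follow the same pattern with the t(df) distribution from a table or a statistics package.

```python
from statistics import NormalDist


def z_p_value(z_cal, alternative):
    """p-value for a Z test statistic.

    alternative: "less" (Ha uses <), "greater" (Ha uses >),
    or "two-sided" (Ha uses ≠).
    """
    cdf = NormalDist().cdf  # standard normal cumulative distribution
    if alternative == "less":
        return cdf(z_cal)                   # P(Z <= Zcal)
    if alternative == "greater":
        return 1 - cdf(z_cal)               # P(Z >= Zcal)
    if alternative == "two-sided":
        return 2 * (1 - cdf(abs(z_cal)))    # 2 × P(Z >= |Zcal|)
    raise ValueError("alternative must be 'less', 'greater', or 'two-sided'")
```

For instance, a calculated Z of 1.96 with a two-sided alternative gives a p-value of about 0.05.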


6. Decision

Only 2 possible decisions:

i. Reject Ho

ii. Do not reject Ho (or Fail to reject Ho)

Decision rule is always the same:

Reject Ho if p-value ≤ α

That is,

i. Small p-values give evidence that

supports Ha, so we reject Ho.

ii. Large p-values do not give any

evidence to support Ha, so we do not

reject Ho.
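The decision rule above is mechanical enough to write as a one-line comparison. The sketch below uses a hypothetical function name `decide` and a default α of 0.05, which is a common convention rather than something fixed by this summary.

```python
def decide(p_value, alpha=0.05):
    """Apply the rule: reject Ho if and only if p-value <= alpha."""
    if not 0 <= p_value <= 1:
        raise ValueError("a p-value must lie between 0 and 1")
    return "Reject Ho" if p_value <= alpha else "Do not reject Ho"
```

The function returns only one of the two possible decisions; the plain-language conclusion in the next step still has to be written by hand.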

7. Conclusion

Explanation of the decision for the layman

Should not include any technical jargon.


Some concepts related to statistical Inference

Hypothesis: A statement about one or more

population parameters.

Decisions

Reject Ho (does not necessarily mean it is

false.)

Do Not reject Ho (does not necessarily mean

it is true.)

Erroneous Decisions:

o Type I Error: Rejecting Ho when in fact it

is true.

o Type II Error: NOT Rejecting Ho when

in fact it is NOT true.

Probabilities of Erroneous Decisions

o α = P(Type I Error) = Level of significance of a test

o (1 – α)×100% = Confidence Level

o β = P(Type II Error)

o 1 – β = Power of a test

o Cannot have both α and β small: for a fixed sample size, lowering one raises the other.

o Balance needed between α and β.
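The trade-off between α and β can be seen numerically. The sketch below approximates the power (1 – β) of the one-sided test Ho: p = p0 vs. Ha: p > p0 using the normal approximation; the function name, the choice of this particular test, and the example values are assumptions made for illustration.

```python
from math import sqrt
from statistics import NormalDist


def power_one_sided_proportion(p0, p_true, n, alpha):
    """Approximate power of the test Ho: p = p0 vs. Ha: p > p0.

    p_true is the (hypothetical) actual value of p under the alternative.
    """
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha)
    # Ho is rejected when p-hat is at least this cutoff.
    cutoff = p0 + z_alpha * sqrt(p0 * (1 - p0) / n)
    # Sampling variability of p-hat when the true proportion is p_true.
    se_true = sqrt(p_true * (1 - p_true) / n)
    return 1 - std_normal.cdf((cutoff - p_true) / se_true)


# Same n and same true p, two significance levels (hypothetical values).
power_at_05 = power_one_sided_proportion(0.5, 0.6, 100, alpha=0.05)
power_at_01 = power_one_sided_proportion(0.5, 0.6, 100, alpha=0.01)
```

With the sample size held fixed, the smaller α yields the lower power, that is, a larger β. When `p_true` equals `p0` the function returns α itself, which is the probability of a Type I error.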


Limitations (of confidence intervals and significance tests)

Must have data from random samples (Data from

non-random samples are useless and may be

misleading, GIGO).

Outliers may cause problems. Special care is

needed.

“Do not reject Ho” ≠ “Accept Ho.”

“Reject Ho” ≠ “Accept Ha”

Statistical Significance ≠ Practical Significance

P-value ≠ P(Ho is true)

ME takes into account ONLY sampling

variability, but NOT non-sampling errors

(measurement errors, non-coverage, nonresponse, etc.)

CI gives more information than a significance

test: “A confidence interval is the set of all

acceptable hypotheses”

Interpretation of CI

o Verbal explanation

o Meaning when

(–, –) Both ends of CI negative

(–, +) One end negative, one positive

(+,+) both ends positive

Association does not mean causation
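Two of the points about confidence intervals above can be checked with a short sketch: a null value μ0 lies inside the CI exactly when the matching two-sided t test does not reject it, and the signs of the ends of a CI for a difference indicate its direction. The summary statistics, the function names, and the wording of the sign readings are hypothetical; t* = 2.131 is the tabled value for 95% confidence and 15 degrees of freedom.

```python
from math import sqrt


def mean_ci(x_bar, s, n, t_star):
    """Estimator ± t* × S / sqrt(n); t* is supplied from a t table (n - 1 df)."""
    me = t_star * s / sqrt(n)
    return x_bar - me, x_bar + me


def two_sided_t_rejects(x_bar, s, n, mu0, t_star):
    """True when |Tcal| exceeds t*, so the two-sided test rejects Ho: mu = mu0."""
    t_cal = (x_bar - mu0) / (s / sqrt(n))
    return abs(t_cal) > t_star


def sign_pattern(low, high):
    """Read a CI for a difference (such as mu1 - mu2) from the signs of its ends."""
    if high < 0:
        return "(-, -): difference is negative, first parameter is smaller"
    if low > 0:
        return "(+, +): difference is positive, first parameter is larger"
    return "(-, +): zero is plausible, no significant difference"


# Hypothetical summary statistics (illustration only).
x_bar, s, n, t_star = 10.0, 2.0, 16, 2.131
low, high = mean_ci(x_bar, s, n, t_star)
agreement = {
    mu0: (low <= mu0 <= high) == (not two_sided_t_rejects(x_bar, s, n, mu0, t_star))
    for mu0 in (8.5, 9.0, 10.5, 11.5)
}
```

The agreement is exact here because the interval and the test use the same standard error. For proportions the test uses p0 in the standard error while the interval uses the sample proportion, so the match is only approximate.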


Response variable is the variable of interest that

is measured

Explanatory Variable is the variable that

explains (some of the) changes in the response

variable.

Matched (Dependent) Samples control the effect

of lurking variables. Should be preferred when

there is a positive correlation between response

and the controlled variables.

Experimental control (same environment) vs.

statistical control (same values) of lurking

variables.

Problem identification is a necessary first step in

statistical inference.

Simpson’s paradox: Be careful in interpreting

contingency tables. There may be a third factor

that influences both of the variables. Controlling

for the effect of this third variable may change the

conclusions.
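A small numerical sketch can make Simpson's paradox concrete. The counts below are entirely hypothetical and were chosen so that treatment A has the higher success rate within each stratum of a third variable, yet the lower rate once the strata are combined.

```python
# Hypothetical counts: (successes, trials) per treatment within each stratum.
table = {
    "stratum 1": {"A": (90, 100), "B": (800, 1000)},
    "stratum 2": {"A": (300, 1000), "B": (20, 100)},
}


def rate(successes, trials):
    """Success proportion for one cell of the table."""
    return successes / trials


def pooled_rate(treatment):
    """Success proportion after collapsing the table over the strata."""
    successes = sum(table[g][treatment][0] for g in table)
    trials = sum(table[g][treatment][1] for g in table)
    return successes / trials


# Within each stratum: is A's rate higher than B's?
a_better_within = {
    g: rate(*cells["A"]) > rate(*cells["B"]) for g, cells in table.items()
}
# Ignoring the stratum: is A's rate higher than B's?
a_better_overall = pooled_rate("A") > pooled_rate("B")
```

The reversal arises because most of A's trials fall in the stratum with low success rates while most of B's fall in the stratum with high success rates, so the stratum variable influences both treatment assignment and outcome.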


Types of hypotheses: So far we have seen the

following hypothesis testing procedures:

I. Inference on μ, one population mean

II. Inference on μd = μ1 – μ2 using dependent

samples

III. Inference on μ1 – μ2 using independent samples

IV. Inference on one population proportion, p

V. Inference on p1 – p2 with dependent samples

VI. Inference on p1 – p2 using independent samples

In the test you will be given the formula table shown at the end of this summary. You have to remember everything else. For example, you must learn the following two formulae (the confidence interval and the test statistic). They will not be given in the test.

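$$CI = \text{Estimator} \pm (t^* \text{ or } z^*) \times SE(\text{Estimator})$$

$$TS = Z = \frac{\text{Estimator} - \text{Number in Ho}}{SE(\text{Estimator})} \sim N(0,1)$$

$$TS = T = \frac{\text{Estimator} - \text{Number in Ho}}{\text{Est. } SE(\text{Estimator})} \sim t(df)$$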

What are the conditions under which the formulas in the table below are true?


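| Parameter | Estimator | Estimate of the SE(Estimator) | Test Statistic and its Distribution |
|---|---|---|---|
| $\mu$ | $\bar{X}$ | $S/\sqrt{n}$ | $T = \dfrac{\bar{X} - \mu_0}{S/\sqrt{n}} \sim t(n-1)$ |
| $\mu_d = \mu_1 - \mu_2$ (Dependent Samples) | $\bar{X}_d$ | $S_d/\sqrt{n}$ | $T = \dfrac{\bar{X}_d - 0}{S_d/\sqrt{n}} \sim t(n-1)$ |
| $\mu_1 - \mu_2$ (Independent Samples) | $\bar{X}_1 - \bar{X}_2$ | $\sqrt{\dfrac{S_1^2}{n_1} + \dfrac{S_2^2}{n_2}}$ | $T = \dfrac{(\bar{X}_1 - \bar{X}_2) - 0}{\sqrt{\dfrac{S_1^2}{n_1} + \dfrac{S_2^2}{n_2}}} \sim t(df)$, with df = smaller of {(n1 – 1), (n2 – 1)} or df = (n1 – 1) + (n2 – 1) |
| $p$ | $\hat{p}$ | For CI: $\sqrt{\dfrac{\hat{p}(1 - \hat{p})}{n}}$; For Tests: $\sqrt{\dfrac{p_0(1 - p_0)}{n}}$ | $Z = \dfrac{\hat{p} - p_0}{\sqrt{\dfrac{p_0(1 - p_0)}{n}}} \sim N(0,1)$ |
| $p_1 - p_2$ (Independent Samples) | $\hat{p}_1 - \hat{p}_2$ | For CI: $\sqrt{\dfrac{\hat{p}_1(1 - \hat{p}_1)}{n_1} + \dfrac{\hat{p}_2(1 - \hat{p}_2)}{n_2}}$; For Tests: $\sqrt{\hat{p}(1 - \hat{p})\left(\dfrac{1}{n_1} + \dfrac{1}{n_2}\right)}$ | $Z = \dfrac{(\hat{p}_1 - \hat{p}_2) - 0}{\sqrt{\hat{p}(1 - \hat{p})\left(\dfrac{1}{n_1} + \dfrac{1}{n_2}\right)}} \sim N(0,1)$ |
| $p_1 - p_2$ (Dependent Samples) | $\hat{p}_1 - \hat{p}_2$ | Not covered | $Z = \dfrac{YN - NY}{\sqrt{YN + NY}} \sim N(0,1)$ |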

You will get these formulas in the test.

Make sure you understand them and start using them NOW so that you know what is in the table, how to use the formulas, and when to use them.

Make sure conditions are satisfied before you start using them.
