Portrait of the USA - Guangzhou, China

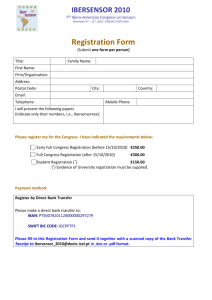

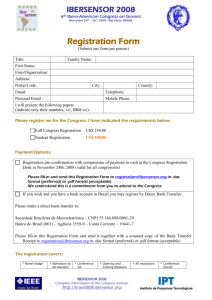
