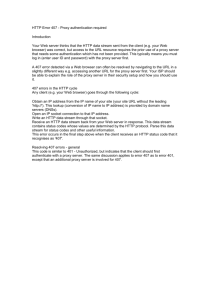

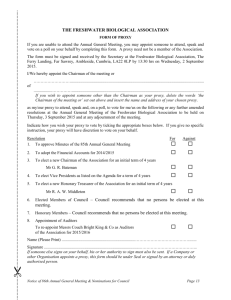

Ib Thomsen and Ole Villund: Using administrative registers to evaluate the effects of proxy interviews in the Norwegian Labour Force Survey

Ib Thomsen
Senior research fellow
Division for Statistical Methods, Statistics Norway
P.O. Box 8131, 0033 Oslo, Norway
ith@ssb.no
+47 21 09 42 75

Ole Villund
Senior statistical adviser
Division for Labour Market Statistics, Statistics Norway
P.O. Box 8131, 0033 Oslo, Norway
vil@ssb.no
+47 21 09 47 79

ABSTRACT

We combine data from the Norwegian Labour Force Survey with administrative register data in order to evaluate the impact of proxy interviews on the survey-based employment rate estimates. The method compares estimates under different models for proxy response and non-response, over a relatively long time series from 1997 to 2007. Using register-based employment as an auxiliary variable, we try to discern between the effect of the measurement instrument and the fact that proxy-interviewed people are not selected at random. We label these effects "proxy effect" and "selection effect" respectively, and suggest methods for estimating them. Our conclusion, after also including the impact of non-response, is that proxy interviews probably result in a better employment rate estimate, even though they introduce some measurement errors. The reason is that proxy interviews include some hard-to-reach people with a labour market situation more similar to that of people not reached at all. We find that including the proxy responses has approximately the same effect as post-stratifying the direct responses, using register-employment status as the auxiliary variable.

KEYWORDS

Non-sampling error, selection bias, measurement error

1 Introduction

The purpose of this study is to evaluate the impact of proxy interviews on the employment rate in the Norwegian Labour Force Survey (LFS). It is based on studies by Thomsen et al. (2007) and Kleven et al. (2008).
Proxy response, also called "indirect interviews", is the case where a person (the proxy) answers questions on behalf of another person (the sampled interview subject). Self-response, also called "direct interviews", is defined as answers provided by the subject that the information is about. Currently proxy interviews constitute about 15 per cent of the response sample. The unit non-response rate is about 15 per cent of the total sample.

The variable of interest in this study is the employment rate: the number of employed relative to the population aged 15 to 74. The LFS current main employment status measures whether a person is employed, according to the ILO definition, in the reference week. The employment rate in Norway was 71.6 per cent in the 4th quarter of 2007.

The motivation for using proxy interviews in the LFS is primarily to save working time, and thus keep costs down. To maintain the current response level in the LFS using only direct interviews, the data collection would take longer and be more expensive. Simply abandoning proxy interviewing could double the non-response rate. It can be argued that proxy interviewing maintains the precision within the available resources, by extending the response sample size. The assumption is that a considerable number of those interviewed by proxy are less reachable or downright impossible to contact with reasonable means.

Another argument for allowing proxy interviews is that it may reduce some of the non-response bias. Both non-respondents and proxy-respondents have lower register-based employment than self-respondents. Proxy interviews therefore include answers on behalf of non-employed people who might otherwise not have responded at all.

The goal of this study is then to assess the advantages and disadvantages of proxy interviews for the employment rate estimates. In section 2 we review some previous results on proxy interviews.
In section 3 we present a method that uses administrative register data to evaluate the effects of including proxy interviews in the response sample. In section 4 we also include the effect of non-response in our study. Section 5 offers some discussion and conclusions.

2 Previous studies

In survey methodology, proxy response is recommended as a cost-saving alternative, especially when conducting face-to-face interviews. Several studies conclude that the result would be substantially increased cost if proxy interviews were replaced by self-response (Moore 1988). But proxy response is often thought to differ systematically from self-response. In general, proxy responses are less accurate and rely more on generic than episodic information in recalling facts (Groves et al. 2004). On the other hand, proxy interviews are in some cases better than self-response, for instance when interviewing young children, or when the topic involves self-presentation (Moore 1988). We think these cases are less relevant for our study, and assume that a direct response is the best for labour market surveys. Some labour market related studies find a high level of agreement between proxy- and self-response, except for questions on income (Martin and Butcher 1982, Hill 1987).

Lemaitre (1988) reports on response errors in the Canadian LFS by examining a re-interviewed subsample. Both the initial interview and the re-interviews allowed proxy- as well as self-response. That means the combined data contains some individual units with both direct and proxy response about the same reference week. We focus here on the employment topics "Had a job, did not work" and "Worked during reference week", which are important in order to classify a person as employed. There were more inconsistent answers when the interview and re-interview were of two different types. If both were self-response or both proxy, the inconsistency was about half of that observed when the two interviews were of different types.
Given that self-response is correct, the additional inconsistency in different-type cases can be attributed to proxy error. There is also higher inconsistency in proxy-proxy combinations than when both are direct interviews. However, this does not tell us about the validity of proxy interviews, but indicates a lower reliability in proxy than in direct interviews. The inconsistency when both interviews are direct is the lowest, and indicates higher reliability. The Canadian study gives valuable information on the proxy effect that is not possible to obtain with our data. Although proxy interviews introduce some measurement errors, the study reveals that two direct interviews about the same employment have up to 5 per cent inconsistent answers.

Dawe and Knight (1997) report on a proxy response study based on questions in the British LFS. First a sample of households with at least two adults was contacted and only proxy interviews were conducted. After some time, each person for whom proxy information had been given was interviewed directly. The data for both interviews referred to the same period, and the time between interview and re-interview was kept short. The design assumes a high internal reliability of the self-response, and does not measure this explicitly. The errors and consistency were measured by analyzing the proxy responses and the direct answers to the same questions. The study mainly compares the relative quality differences by type of question and type of proxy. Economic activity status (employment etc.) was one of the questions with highest quality in terms of high consistency, few missing data, and low gross error rate (misclassifications). Questions that require more detailed or numerical answers decrease the proxy response quality considerably. The study made a comparison of the proxy's relationship with the subject, and found that spouses gave more consistent responses about each other than parents did about their children.
This was not the case for all types of questions, but for economic activity status a spouse proxy was clearly better than a parent answering on behalf of an offspring. This is a very interesting result for us because a large proportion of proxies in the Norwegian LFS are parents. The proxy interview rate is highest among the youngest age groups and decreases with age up to about 30 years.

3 Using administrative data to evaluate the effects of proxy interviewing

3.1 Preparation

We use survey sample data from the LFS linked with register-based employment data at the individual level. Statistics Norway has available a relatively long time series of consistent data, including quarterly files from 1997 to 2007. For a closer description of the data sources, we refer to the LFS documentation and technical reports (Villund 2007 and 2008a).

Our data preparation, linking survey with register at the unit level, creates two separate variables for the employment situation of each respondent. We regard the LFS status as a survey measurement and the employment status from the register as an auxiliary variable.

3.2 Defining proxy effects

For each quarter we define:

(3-1) $Y_i = \begin{cases} 1 & \text{LFS employed} \\ 0 & \text{not LFS employed} \end{cases}$ LFS employment status for unit $i$.

(3-2) $X_i = \begin{cases} 1 & \text{register employed} \\ 0 & \text{not register employed} \end{cases}$ Register employment status for unit $i$.

(3-3) $Z_i = \begin{cases} 1 & \text{direct response} \\ 0 & \text{proxy response} \end{cases}$ Interview type status for unit $i$.

(3-4) $n_{xz}$ Size of subsample $s_{xz}$ defined by $x$, $z$.

(3-5) $n = \sum_{x,z} n_{xz}$ Response sample size.

(3-6) $p_{xz} = \frac{1}{n_{xz}} \sum_{i \in s_{xz}} Y_i$ LFS employment rate in the subsample defined by $x$, $z$.

Following Thomsen et al. (2007), we consider two models for proxy response that are analogous to non-response models. The two proxy models result in two ways of calculating the proxy effect:

PCAR: proxy interviews are distributed completely at random. This means that the subsample where Z=0 is a random selection of the total responses.
In this case the proxy effect on employment is simply:

(3-7) $e = P(Y = 1 \mid Z = 0) - P(Y = 1 \mid Z = 1)$

with an empirical estimate:

(3-8) $\hat{e} = p_{\cdot 0} - p_{\cdot 1}$

where $p_{\cdot z}$ denotes the LFS employment rate among all respondents with $Z = z$.

PAR: proxy interviews are randomly distributed given the auxiliary variable. That means for a given value of X, the subsample where Z=0 is a random selection of the total responses. In this case we define two proxy effects, one for X=1 (register-employed) and one for X=0 (not register-employed):

(3-9) $e_0 = P(Y = 1 \mid X = 0, Z = 0) - P(Y = 1 \mid X = 0, Z = 1)$

(3-10) $e_1 = P(Y = 1 \mid X = 1, Z = 0) - P(Y = 1 \mid X = 1, Z = 1)$

with the empirical estimates:

(3-11) $\hat{e}_0 = p_{00} - p_{01}$

(3-12) $\hat{e}_1 = p_{10} - p_{11}$

Diagram 3-13 shows the proxy effects estimated under the PCAR and PAR models, for each of the quarters in our data series.

Diagram 3-13: Proxy-effect under different proxy models. Quarterly LFS 1997-2007.

[Diagram 3-13 plots the proxy effect (vertical axis labelled "Proxy effect, percentage points", ticks from -0.25 to -0.05) against LFS quarter (1997Q1 to 2007Q1) for three series: e, e0 and e1.]

Under both models there seems to be some underreporting among the proxy respondents. We think it is clear that the PCAR model can be discarded, since the data suggest a strong association between X and Z. But even under the PAR model there is some underreporting. This is consistent with the findings of Kleven et al. (2008), and with Solheim et al. (2001), who found significant underreporting even after controlling for several auxiliary variables.

Averaging over all the quarters, the proxy effect is about -6 percentage points under the PAR model, and roughly the same size among register-employed and not employed. Under the PCAR model the proxy effect is about -14 percentage points. The relationship between the two models is rather stable over a long time series, which supports treating the difference as systematic rather than as sampling noise. In comparison, one standard error of the sample employment rate estimate is approximately 1 per cent.
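The empirical proxy effects in (3-8), (3-11) and (3-12) can be sketched in Python as follows. This is only an illustration under stated assumptions: the function names `cell_rates` and `proxy_effects` are hypothetical, the cell counts are invented and are not LFS figures, and every (x, z) cell is assumed to be non-empty.

```python
from itertools import product


def cell_rates(records):
    """Count units and LFS employment rates per (x, z) cell.

    records: iterable of (y, x, z) with y, x, z in {0, 1}, where
    y = LFS employed, x = register employed, z = 1 direct / 0 proxy.
    Assumes every (x, z) cell contains at least one unit.
    """
    n = {cell: 0 for cell in product((0, 1), repeat=2)}
    employed = {cell: 0 for cell in n}
    for y, x, z in records:
        n[(x, z)] += 1
        employed[(x, z)] += y
    p = {cell: employed[cell] / n[cell] for cell in n}
    return n, p


def proxy_effects(n, p):
    """Return (e, e0, e1): the PCAR effect and the two PAR effects."""
    def pooled_rate(z):
        # LFS employment rate among all respondents with interview type z
        employed = p[(0, z)] * n[(0, z)] + p[(1, z)] * n[(1, z)]
        return employed / (n[(0, z)] + n[(1, z)])

    e = pooled_rate(0) - pooled_rate(1)   # (3-8), proxy minus direct
    e0 = p[(0, 0)] - p[(0, 1)]            # (3-11), not register employed
    e1 = p[(1, 0)] - p[(1, 1)]            # (3-12), register employed
    return e, e0, e1


# Invented toy counts: (x, z) -> (number of units, number LFS employed)
toy = {(0, 0): (100, 10), (0, 1): (400, 60),
       (1, 0): (200, 170), (1, 1): (1300, 1196)}
records = [(1 if k < emp else 0, x, z)
           for (x, z), (size, emp) in toy.items()
           for k in range(size)]

n, p = cell_rates(records)
e, e0, e1 = proxy_effects(n, p)
print(f"PCAR: e = {e:.3f}; PAR: e0 = {e0:.3f}, e1 = {e1:.3f}")
```

In the toy data the proxy respondents are disproportionately not register-employed, so the pooled PCAR difference mixes the measurement effect with the different composition of the two groups, while the PAR effects compare like with like within each register status.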
3.3 Comparing three different estimates under the PAR model

The figures for the proxy effect shown in the previous section are relatively large differences in percentage points, reflecting the isolated effect. In order to evaluate the overall effect of the proxy interviews, we consider three different estimates of the employment rate. This will help in deciding whether proxy interviews should be included or not in the estimation of the employment rate. An estimate using only the direct interviews and another one using the combined sample (self-response and proxy interviews) are compared to a "benchmark estimate". Writing $n_{\cdot 1} = n_{01} + n_{11}$ for the number of direct responses and $n_{x \cdot} = n_{x0} + n_{x1}$ for the number of responses with register status $x$:

(3-14) $\hat{y}_{\text{direct}} = p_{01} \frac{n_{01}}{n_{\cdot 1}} + p_{11} \frac{n_{11}}{n_{\cdot 1}}$

(3-15) $\hat{y}_{\text{combined}} = p_{00} \frac{n_{00}}{n} + p_{01} \frac{n_{01}}{n} + p_{10} \frac{n_{10}}{n} + p_{11} \frac{n_{11}}{n}$

(3-16) $\hat{y}_{\text{direct,PST}} = p_{01} \frac{n_{0 \cdot}}{n} + p_{11} \frac{n_{1 \cdot}}{n}$

The benchmark estimate (3-16) uses only the direct responses, but is adjusted by post-stratification estimation using register-based employment to create two post-strata. We call this a "benchmark" because under the PAR model, and given the response sample distribution of X, this is an unbiased estimate of the directly measured LFS employment rate.

Using the difference between the direct responses and the benchmark, we can define a selection effect:

(3-17) $\hat{y}_{\text{direct}} - \hat{y}_{\text{direct,PST}} = p_{01} \left( \frac{n_{01}}{n_{\cdot 1}} - \frac{n_{0 \cdot}}{n} \right) + p_{11} \left( \frac{n_{11}}{n_{\cdot 1}} - \frac{n_{1 \cdot}}{n} \right)$

This selection effect is an indicator of the "representativity" of the self-responses, measured by the auxiliary variable X. We also compare the combined sample estimate to the benchmark, and this difference can be formulated as a linear combination of the two proxy effects under the PAR model (3-11 and 3-12):

(3-18) $\hat{y}_{\text{combined}} - \hat{y}_{\text{direct,PST}} = \hat{e}_0 \frac{n_{00}}{n} + \hat{e}_1 \frac{n_{10}}{n}$

Diagram 3-19 shows the three estimates for each quarter in our data series.

Diagram 3-19: Three different employment rate estimates. Quarterly LFS 1997-2007.
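As a numerical check of (3-14) to (3-18), the sketch below computes the three estimates from a 2x2 table of cell counts and rates and verifies that the combined-minus-benchmark difference equals the weighted sum of the two PAR proxy effects. The counts and rates are invented for illustration and are not LFS results; the function name `three_estimates` is hypothetical.

```python
# Invented toy cell counts n[(x, z)] and LFS employment rates p[(x, z)],
# with x = register employed and z = 1 for direct, 0 for proxy.
n = {(0, 0): 100, (0, 1): 400, (1, 0): 200, (1, 1): 1300}
p = {(0, 0): 0.10, (0, 1): 0.15, (1, 0): 0.85, (1, 1): 0.92}


def three_estimates(n, p):
    """Return (y_direct, y_combined, y_pst) as in (3-14) to (3-16)."""
    n_total = sum(n.values())
    n_direct = n[(0, 1)] + n[(1, 1)]
    n_x = {x: n[(x, 0)] + n[(x, 1)] for x in (0, 1)}  # respondents by X

    y_direct = (p[(0, 1)] * n[(0, 1)] + p[(1, 1)] * n[(1, 1)]) / n_direct
    y_combined = sum(p[cell] * n[cell] for cell in n) / n_total
    y_pst = (p[(0, 1)] * n_x[0] + p[(1, 1)] * n_x[1]) / n_total
    return y_direct, y_combined, y_pst


y_direct, y_combined, y_pst = three_estimates(n, p)

selection_effect = y_direct - y_pst          # (3-17)
combined_minus_pst = y_combined - y_pst      # left-hand side of (3-18)

# Right-hand side of (3-18): weighted sum of the two PAR proxy effects
e0 = p[(0, 0)] - p[(0, 1)]
e1 = p[(1, 0)] - p[(1, 1)]
n_total = sum(n.values())
rhs = e0 * n[(0, 0)] / n_total + e1 * n[(1, 0)] / n_total

print(f"direct={y_direct:.4f} combined={y_combined:.4f} PST={y_pst:.4f}")
print(f"selection effect={selection_effect:+.4f}")
print(f"combined - PST={combined_minus_pst:+.4f} (identity rhs={rhs:+.4f})")
```

With these invented numbers the direct-only estimate lies above the post-stratified benchmark and the combined estimate lies below it; the magnitudes carry no empirical meaning.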
[Diagram 3-19 plots the employment rate estimate (vertical axis, 0.66 to 0.76) against LFS quarter (1997Q1 to 2007Q1) for three series: COMBINED, DIRECT and PST.]

We observe that using only the direct interviews results in an overestimation of the employment rate, due to the selection effect. Using both interview types results in an underestimation of the employment rate, due to the proxy effect. But comparing the magnitude of the two effects, we observe that the combined estimate is closer to the unbiased benchmark. An average over the time series gives a difference of about -0.8 percentage points for the combined sample, whereas the direct-only estimate gives about +1.3. A preliminary conclusion is then: proxy interviewing should continue. But before we draw a final conclusion, we wish to assess the effects of non-response. In the next section we study data that includes the non-response units in a register-linked survey sample.

4 Including the effects of non-response

The effect of non-response on the Norwegian LFS employment estimates has been studied in several papers, for instance Thomsen and Zhang (2001). We regard it as an established fact that employed people are over-represented in the combined response sample. And we have just shown that employed people are over-represented in the direct response sample. In our study of the effects of proxy interviews on the employment rate, we wish to incorporate both of these features. To do so we use the fact that the auxiliary variable X, register-based employment status, is available for the whole sample including non-response. We define:

(4-1) $N_x$ Total selected sample size by register-employment, $x$.

(4-2) $N = N_0 + N_1$ Total selected sample size.

Following Thomsen et al. (2007) we consider two non-response models:

MCAR: Missing completely at random, response probability is independent of X and Y.

MAR: Missing at random, response probability depends only on X.
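To illustrate how the full-sample counts in (4-1) and (4-2) can be used under the MAR model, the sketch below weights the direct-response rates $p_{01}$ and $p_{11}$ once by the respondent shares and once by the shares in the whole selected sample. All numbers are invented for illustration, and the function name `post_stratified_rate` is hypothetical.

```python
def post_stratified_rate(p_direct, stratum_sizes):
    """Weight the direct-response rates p_direct[x] by stratum shares."""
    total = sum(stratum_sizes.values())
    return sum(p_direct[x] * stratum_sizes[x] for x in (0, 1)) / total


# Invented toy numbers, for illustration only.
p_direct = {0: 0.15, 1: 0.92}        # p_01 and p_11 from direct responses
n_respondents = {0: 500, 1: 1500}    # respondents by register status x
N_selected = {0: 650, 1: 1700}       # whole selected sample by x, as in (4-1)

y_pst = post_stratified_rate(p_direct, n_respondents)   # respondent shares
y_pst_adj = post_stratified_rate(p_direct, N_selected)  # full-sample shares

print(f"PST with respondent shares:  {y_pst:.4f}")
print(f"PST with full-sample shares: {y_pst_adj:.4f}")
```

In this toy setup non-response is more common among those not register-employed, so moving to full-sample shares puts more weight on the stratum with the lower employment rate and lowers the estimate.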
Each of these non-response models can now be considered in combination with the PAR model for proxy response proposed in section 3. Under the PAR model for proxy response and the MCAR model for non-response, the benchmark employment estimate (3-16) is unbiased and the conclusions from the previous section hold. It is clear from earlier studies and our own data that the MCAR model for non-response is not plausible. Consequently the previous benchmark estimate is not an unbiased estimator of the employment rate measured directly. We therefore employ a modified benchmark that is an unbiased estimate under the MAR model:

(4-3) $\hat{y}^{*}_{\text{direct,PST}} = p_{01} \frac{N_0}{N} + p_{11} \frac{N_1}{N}$

In diagram 4-4 we compare the modified benchmark estimates for each quarter with the series for the direct and combined samples. The benchmark estimate adjusted for non-response is slightly, but consistently, lower than the estimates shown earlier.

Diagram 4-4: Comparing benchmark employment rate estimates. Quarterly LFS 1997-2007.

[Diagram 4-4 plots the employment rate estimate (vertical axis, 0.66 to 0.76) against LFS quarter (1997Q1 to 2007Q1) for four series: COMBINED, DIRECT, PST and PST_ADJ.]

We observe that when we include the effect of non-response, the direct-only estimate has an even larger bias. It seems that including the proxy interviews has about the same effect as post-stratification of the direct responses using X as the post-stratification variable. From this the conclusion is that proxy interviews should continue. The inclusion of proxy interviews seems to adjust for the effect of non-response, and their correcting effect outweighs the measurement errors they introduce.

5 Conclusion

From the methods and data at hand, we conclude that the employment rate estimates probably are better when the combined sample is used.
Extending the response sample size by including proxy interviews introduces some additional measurement errors, but gives a more representative response sample. These extra people are both harder to reach and have a different labour market situation than the general population. The resulting effect is that proxy interviews adjust for some of the non-response bias in the employment figures.

6 Further studies

We have tried to evaluate the employment rate estimates by combining different proxy response models with different non-response models. Specifically, we have suggested methods to discern between the measurement instrument effect and the impact of the selection of proxy interviews. We think this is a useful example of linking administrative register data to survey sample data, and the methods could in principle be adapted to other variables of interest. The most relevant cases are when you do not have two simultaneous measurements for the same unit, and there is no randomized selection process.

In order to evaluate the measurement error in LFS employment status, we would ideally like to know a true value for the employment status. Administrative register data with employment status is available and thus a candidate for an independent variable. However, Kleven et al. (2008) and others argue that the observed divergence between LFS employment status and register-based employment status is not equal to errors in the LFS. Different definitions and time lags, and errors in the register as well as in the survey, can cause the divergence between the two sources. Foss (2004) used employment data from the LFS linked to register data in order to evaluate the register, not the survey measurement. In recent studies on the quality of unemployment data (Hagesæther et al. 2006 and Næsheim et al. 2007) the focus is as much on register errors as on survey errors. As a consequence we do not hold the register data as the true value, and do not evaluate the measurement error in self-response.

Moore (1988) has reviewed several studies of proxy vs self-response, and states three criteria for such studies. We can relate our method to these criteria:

Survey subject matter: the measurement of interest should be an individual attribute, and not for instance a household characteristic or a relationship interaction. The LFS employment status is indeed an individual characteristic, and thus suitable for a study of proxy vs self-response.

Treatment control: some kind of assessment of selection. Moore notes that many studies do not have an explicit design for controlling the selection. We do not have a randomized treatment control, and must rely on an ex-post-facto method like many other studies.

Response quality assessment: this would imply an estimate of the overall measurement errors, and should refer to a true value with which to compare the measured value. We do not evaluate the total measurement error, since we do not assess the measurement error in the direct response. However, the results from other studies suggest that measurement errors have a greater effect on more complicated questions, such as wage figures and income components, and less on employment status.

We have concentrated on the employment rate for the total population, but there are other avenues to explore. Since Statistics Norway has register-based data on unemployment, a similar study of the LFS unemployment measurement could be attempted. Because of the nature of unemployment registration and measurement, such a study poses greater challenges both in terms of data linking and comparing variables. Another interesting approach is to look at young age groups, because they have a high proxy interview rate, a lower employment rate and possibly greater divergence between the LFS status and the register-based status.
Solheim et al. (2001) studied the effect of proxy interviews on employment for the age group 16 to 29 years. Logistic regression was used to control for age and register-employment, with separate models for students and other young people. The overall result was a difference in employment rate of about -1.5 per cent, for data from the 1st and 4th quarters of 2000. The effect was larger for young age groups and students not living at home. It would be interesting to apply the method outlined in our study to analyze specific groups such as students or young people in general.

7 References

Bø, Tor Petter and Håland, Inger: "Dokumentasjon av arbeidskraftundersøkelsen (AKU)" Statistics Norway "Notater" 2002/24 and 2008

Dawe, Fiona and I. Knight: "A study of proxy response in the Labour Force Survey" Labour Force Survey User Guide Vol.1, Ch.11 (2007) The Office for National Statistics. Originally published in the Survey Methodology Bulletin No.40, January 1997

Foss, Aslaug Hurlen: "Kvaliteten i arbeidsmarkedsdelen i Folke- og boligtellingen 2001" Statistics Norway "Notater" 2004/4

Groves, Robert M., F. J. Fowler Jr., M. P. Couper, J. M. Lepkowski, E. Singer and R. Tourangeau: "Survey methodology" Wiley, Hoboken New Jersey, 2004

Hagesæther, Nina and Li-Chun Zhang: "Om arbeidsledighet i AKU og Arena" Statistics Norway "Notater" 2006/34

Heldal, Johan: "Kalibrering av AKU Dokumentasjon av metode og program" Statistics Norway "Notater" 2000/7

Kleven, Øyvin, B. O. Lagerstrøm, and I. Thomsen: "A simple model for studying the effects of proxy interviewing" Paper presented at the 17th annual International Workshop on Household Survey Nonresponse, Omaha, Nebraska, US, August 2006. Published in Statistics Norway Documents 2008/2

Lemaitre, Georges: "A look at response errors in the Labour Force Survey" The Canadian Journal of Statistics 1988, Vol.16, 127-141

Lillegård, Magnar:

Moore, Jeffrey C.: "Self/proxy Response Status and Survey Response Quality" Journal of Official Statistics, 1988, Vol.4 No.2, 155-172

Næsheim, H. and Pedersen, T.: "The relations between the Norwegian Labour and Welfare Organisation’s and Statistics Norway’s figures of unemployment" Paper presented at Seminar on Registers in Statistics - methodology and quality, Helsinki, 21-23 May, 2007

Rubin, Donald B.: "Inference And Missing Data" Biometrika 1976 63(3):581-592

Solheim, L., Håland I. and Lagerstrøm, B. O.: "Proxy interview and measurement error in the Norwegian Labour Force Survey" Paper presented at the 12th International Workshop of Household Survey Nonresponse, Oslo, 12-14 September 2001

Thomsen, Ib, Ø. Kleven and Li-Chun Zhang: "Dealing with non-sampling errors using administrative data" Paper presented at the IAOS Conference, Lisboa 2007

Thomsen, Ib, Ø. Kleven, J. H. Wang and Li-Chun Zhang: "Coping with decreasing response rates in Statistics Norway" Statistics Norway report 2006/29

Thomsen, Ib and Zhang, L-C: "The Effects of Using Administrative Registers in Economic Short Term Statistics: The Norwegian Labour Force Survey as a Case Study" Journal of Official Statistics, Vol.17, No.2, 2001. pp. 285-294

Villund, Ole: "Labour Force Survey non-response in relation to immigrant origin." Statistics Norway Documents 2007/15

Villund, Ole: "Immigrant participation in the Norwegian Labour Force Survey 2006-2007" Statistics Norway Documents 2008/7

Zhang, Li-Chun: "A method of weighting adjustment for survey data subject to nonignorable nonresponse" Discussion Papers No. 311, October 2001, Statistics Norway.

Zhang, Li-Chun: "A Note on Post-Stratification When Analyzing Binary Survey Data Subject to Nonresponse" Journal of Official Statistics, Vol. 15, No. 2, 1999, pp. 329-334