course description - Daniel J. Epstein Department of Industrial and

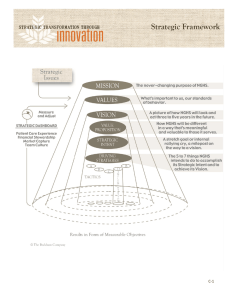

ISE 564: Spring 2010

ISE 564: PERFORMANCE ANALYSIS
Tuesday and Thursday, 2:00pm – 3:20pm, OHE 120 & DEN

Instructor: Dr. Shinyi Wu, Assistant Professor, Epstein Department of Industrial & Systems Engineering
Contact: shinyiwu@usc.edu. For routine messages or questions, use either email (preferred) or leave a phone message (213-740-5073). For emergencies only, call Prof. Wu at 310-739-6873.
Office Hours: 3:30pm – 4:30pm every Tuesday in GER 240-C; 213-740-5073
Teaching Assistant: Sarah Kianfar (kianfar@usc.edu)
TA Office Hours: TBA

Instructor’s special announcement: Because of an injury over the winter break, I am unable to come to school until Jan. 28, 2010. I sincerely apologize for not being able to deliver the first five lectures in person. Instead, we will show you the lectures given by Dr. Lynne Cooper in 2009 until my return. Assignments will be sent via email. When I return to class on Jan. 28, I will review these materials and ensure seamless continuation of the course. In the meantime, should you have any questions or concerns, please feel free to email me or ask the TA. Thank you for your understanding.

COURSE DESCRIPTION
This course defines performance measurement and analysis within an organizational context. That context spans multiple levels: the organization as a whole, production/business units, processes, teams, and individual workers. Industrial and systems engineers need to develop a keen awareness of how planning and measurement of performance affect the short-term and long-term productivity of an operational unit. This course focuses on creating a knowledge base for measuring the performance of production or service organizations. Students learn to do this through class discussions, class exercises, homework, team projects, and in-class presentations.

Upon successful completion of this course, each student should be able to:
• Have a working knowledge of the taxonomy and definition of system performance and its components
• Define performance at different levels of an organization
• Identify core processes of a manufacturing or service operation
• Identify key measurable elements of a work system
• Engineer a systematic performance measurement system
• Identify studies relevant to the topics of performance and productivity using a variety of resources, analyze and critique emerging research, and interpret research findings

Texts and Material:
• Rummler, G.A., and Brache, A.P. (1995). Improving Performance: How to Manage the White Space on the Organization Chart (2nd Edition). San Francisco, California: Jossey-Bass Publishers.
• Additional materials are listed under “Readings” and will be posted on the DEN Blackboard.

Attendance and Participation
While formal attendance is not graded, students should understand that regularly attending class and participating in discussions will maximize their learning. If you do miss a class, it is your sole responsibility to find out what materials were covered, what assignments were made, and what handouts you missed.

Grading
Your course grade is a weighted score composed of the following:

Weekly Question:    10%
Homework:           15%
Research Project:   15%
Midterm #1:         10%
Midterm #2:         10%
Class Project:      20%
Final Exam:         20%

Weekly Question
Learning Objective: The ability to ask a well-formed, insightful question is an important professional (and life) skill. Most courses are about giving answers. But the questions are generally much more interesting.
Overview: Each week (between Jan. 18 and Apr. 23), formulate a question related to performance analysis, based on course material; your experiences with the homework assignments, readings, or projects; or your experiences in other classes or at work.
• One sentence (two if you really, really must), delivered by 8 pm Friday of that week.
• Include your name on the question sheet.
• Title the file as “WQ-[#]-[your name]”.
• Submit via email DEN assignment page
• Post to Discussion Board (optional)

Project/Homework Assignment Descriptions
There will be two projects in this class. They are designed to help you develop needed skills and integrate the materials learned in the class. They will also add to your professional resume as skills and a completed project.
(1) Research Article Summary: Team of 2 students (recommended) or Individual
(2) Dashboard Prototype: Team of 3 to 5 students (recommended) or Individual (requires concurrence from professor)
You can find instructions for the projects at the end of the syllabus.

Homework assignments are designed to help you complete the projects effectively and efficiently. Unless specifically directed otherwise, homework assignments are to be completed individually. You are expected to incorporate feedback from the homework into your research summary and final projects, as appropriate. Submit your homework via email DEN assignment page. Please do the following when submitting your homework:
• Title the file with your homework as “HW-[#]-[your name]-[optional, anything you want to include]”
• Include your name on the first sheet of the homework (having your name in the header on all pages is even better)
• All homework assignments are due by noon (12 pm) Thursday of the specified week

Group Work is Encouraged for Class Projects
You are encouraged to work in a team of two or four students for the class projects. The class projects can be completed individually with instructor approval. There are many benefits to working in a team: you can debate ideas and recommendations; you can share the burden of research; and you can learn from each other’s strengths. Teams for the Research Article Summary Project must be finalized by 2/4/10. Teams for the Dashboard Prototype Project must be finalized by 3/4/10.
Please remember to identify all group members on the assignments.

Exam Dates
Two Midterm exams and a Final exam are planned for this course. All exams will be take-home, open-book, no collaboration. The first Midterm is due by 8 pm Thursday, February 25, 2010, and the second Midterm is due by 8 pm Tuesday, March 30, 2010. There are no classes on the days the Midterm Exams are due (i.e., 2/25/10 and 3/30/10). The Final is due by 6:30 pm Thursday, May 6, 2010, per the University Schedule. Students will only be allowed to reschedule these exams in case of emergencies. Also, be aware that there are very strict conditions under which you can reschedule a Final Exam, including receiving a Dean’s approval. Be aware: personal events (e.g., weddings, birthdays, vacations, etc.) are not regarded as valid reasons for rescheduling exams and will not be seriously considered.

Academic Integrity
The Viterbi School of Engineering adheres to the University’s policies and procedures governing academic integrity as described in SCampus. Students are expected to be aware of and to observe the academic integrity standards described in SCampus, and to expect those standards to be enforced in this course. DEN students can find SCampus through the USC website.

Students with Disabilities
Any student requesting academic accommodations based on a disability is required to register with Disability Services and Programs (DSP) each semester. A letter of verification for approved accommodations can be obtained from DSP. Please be sure the letter is delivered to your instructor as early in the semester as possible. DSP is located in STU 301 and is open 8:30am to 5:00pm, Monday through Friday. The phone number for DSP is (213) 740-0776.

Expected Performance Standards for Students
Each student is expected to:
• Participate in class discussions and contribute individual experiences when relevant to the topic so that others can benefit and learn.
• Take individual responsibility for completing assignments. Late deliverables will not be accepted.
• Complete assignments/papers/presentations on time and in a professional manner, without spelling or grammatical errors, and using the format specified in the assignment instructions.
• Manage and perform individual studies independently and perform team studies interdependently.
• Follow the USC Guidelines for student integrity and honesty.

You are required to:
• Have access to e-mail and check your e-mail and Blackboard regularly
• Use MS Word and PowerPoint for your documents
• Have access to USC libraries or online databases for your Research Article Summary project
• Use MS Excel for your Dashboard Prototype project

COURSE SCHEDULE (tentative, subject to change)

Week and Main Subject

Weeks 1–4 (1/12, 1/14; 1/19, 1/21; 1/26, 1/28; 2/2, 2/4), topics in the order listed:
• Class Introduction: Organization, Requirements, Expectations; description of projects, homework, weekly question; use of DEN BB
• Introduction to Performance Analysis subject area
• Input-Output Model and Performance Measurement Objective Hierarchy
• Prep for Research Article Assignment
• Systems Design: Three Levels of Performance
   o Organization Level
   o Process Level
   o Job Level
• Value added vs. supporting processes
• Process mapping
• Process redesign
• Swim lane diagrams
Week 5 (2/9, 2/11): Strategy, goals and potential for improving performance (PIP); Prep for Research Project
Week 6 (2/16, 2/18): RESEARCH ARTICLE ORAL REPORT
Week 7 (2/23, 2/25): 2/23 Review for Mid-Term #1; 2/25 MID-TERM EXAM #1 DUE, No class (Take Home, Open Book/Notes, To be completed individually)
Week 8 (3/2, 3/4): Key Performance Indicators; Dashboards
Week 9 (3/9, 3/11): Dashboards; Guest lecture by Ted Mayeshiba (tentatively); Prep for Class Project
Week 10 (3/16, 3/18): SPRING BREAK
Week 11 (3/23, 3/25): Measurements; Review material from pre-Spring Break
Week 12 (3/30, 4/1): 3/30 MID-TERM EXAM #2 DUE, No class; 4/1 Dashboard Design
Week 13 (4/6, 4/8): Presenting Information
Weeks 14–17 (4/13, 4/15; 4/20, 4/22; 4/27, 4/29; 5/6), topics in the order listed:
• Team Performance
• Special topics, e.g., Ethics, gaming the system
• Class Project Forum (your questions)
• CLASS PROJECT PRESENTATIONS: all files submitted electronically to DEN site by 10:00 am on 4/27 (late submissions will be penalized)
• FINAL EXAM: due electronically by 6:30pm, 5/6/2010

Readings to prepare for class (in the order listed)
• Class Syllabus
• Rummler & Brache, Chapter 2
• Rummler & Brache, Ch. 3, Ch. 4, Ch. 5, Ch. 6
• Hammer & Champy (1993), Ch. 8
• Hammer & Stanton (1995), Ch. 2
• Booth, Colomb, & Williams (2008), Ch. 4 & 16
• Gilbert, Ch. 2
• Rummler & Brache (skim): Ch. 8, Ch. 9, Ch. 10, Ch. 11
• Reh (2008) – note, this is a web link
• None
• Eckerson (2006)
• Benbya (2008)
• Class Project Requirements
• Niven (2006), Chapter 5
• Hubbard (2007), Chapters 2 and 3 (excerpts)
• Rummler & Brache, Chapter 12
• Dresner (2008)
• Class Project Instructions
• Cohen & Bailey (1997)
• Sundstrom, De Meuse, & Futrell (1990)
• Barker (1993)
• Kizilos (2002)
• Mason (1986) – note, this is a web link
• Review Class Project Instructions

Deliverables Due (in the order listed)
• Post profile on DEN BB
• Weekly Question-1; HW-1: Measurement Comparison
• Weekly Question-2; HW-2: Research Article List and comparison
• Weekly Question-3; HW-3: Process Mapping
• Weekly Question-4; HW-4: Draft Presentation for Research Project
• Weekly Question-5; Research Article written report; Presentation package; 5-7 minute team/individual class presentation
• Midterm #1: due electronically by 8pm, 2/25/2010
• Weekly Question-6; Finalize Project Team: submit brief description of candidate organization and process (for info only, not graded)
• Weekly Question-7
• Weekly Question-8
• Midterm #2: due electronically by 8pm, 3/30/2010
• Weekly Question-9; HW-5 Project status (optional)
• Weekly Question-10; HW-6 Project status (optional)
• Weekly Question-11; HW-7 Project status (optional)
• Weekly Question-12; Project status (optional)
• Dashboard Proposal; Dashboard Prototype; Dashboard Presentation; Attendance Mandatory (in person or via DEN)
• Final Exam, including Dashboard Evaluation (Take Home, Open Book/Notes, To be completed individually)

The readings are to be completed before the class sessions indicated. Additional items may be assigned as needed. Deliverables are due at the start of the class session indicated. The instructor reserves the right to assign a zero to any late item.
Note: Any scheduled item in this class may be revised to accommodate the content and the pace of the class learning process. All revisions will be announced in class.

Required Readings:
Barker, James R. (1993). Tightening the iron cage: Concertive control in self-managing teams. Administrative Science Quarterly 38(3), 408-437.
Benbya, Hind (2008). Knowledge Management Systems Implementation: Lessons from the Silicon Valley. Chandos Publishing: Oxford.
Benner, Mary J. & Tushman, Michael. (Dec 2002). Process Management and Technological Innovation: A Longitudinal Study of the Photography and Paint Industries. Administrative Science Quarterly, 47(4), pp. 676-706.
Booth, W.C., Colomb, G.G., & Williams, J. M. (2008). The Craft of Research, 3rd Edition. The University of Chicago Press: Chicago.
Cohen, Susan G. & Bailey, Diane E. (1997).
What makes teams work: Group effectiveness research from the shop floor to the executive suite. Journal of Management 23(3), 239-290.
Dresner, Howard (2008). The Performance Management Revolution: Business Results through Insight and Action. John Wiley & Sons: Hoboken, NJ.
Eckerson, Wayne W. (2006). Performance Dashboards: Measuring, Monitoring, and Managing Your Business. John Wiley & Sons: Hoboken, NJ.
Gilbert, Thomas F. (2007). Human Competence: Engineering Worthy Performance, Tribute Edition. John Wiley & Sons/Pfeiffer: San Francisco. Originally published 1978.
Hammer, M. & Champy, J. (1993). Reengineering the Corporation. Harper Collins. Updated 2006.
Hammer, M. & Stanton, S. A. (1995). The Reengineering Revolution. Harper Collins.
Hubbard, Douglas W. (2007). How to Measure Anything: Finding the Value of Intangibles in Business. John Wiley & Sons: Hoboken, NJ.
Kizilos, Tolly (2002). A Reality Coordinator’s Lament. In T. Kizilos, Once Upon a Corporation: Leadership Insights from Short Stories, Writers Club Press: Lincoln, NE, pp. 3-10.
Loch, Christoph H. & Tapper, U. A. Staffan. (2002). Implementing a strategy-driven performance measurement system for an applied research group. Journal of Product Innovation Management 19, pp. 185-198.
Mason, Richard O. (1986). Four Ethical Issues of the Information Age. MISQ, 10(1), March 1986. Available via web at: http://www.misq.org/archivist/vol/no10/issue1/vol10no1mason.html
Niven, Paul R. (2006). Balanced Scorecard Step by Step: Maximizing Performance and Maintaining Results. John Wiley & Sons: Hoboken, NJ.
Poole, Dennis L., Nelson, Joan, Carnahan, Sharon, Chepenik, Nancy G. & Tubiak, Christine (2000). Evaluating performance measurement systems in nonprofit agencies: The Program Accountability Quality Scale (PAQS). American Journal of Evaluation, 21(1), pp. 15-26.
Reh, F. John. (2008) Key Performance Indicators (KPI). September 15, 2008.
http://management.about.com/cs/generalmanagement/a/keyperfindic.htm
Sundstrom, Eric, De Meuse, Kenneth P. & Futrell, David (1990). Work teams: Applications and effectiveness. American Psychologist 45(2), 120-133.

Recommended Sources of Reading Materials
This is a list of sources of material relevant to performance analysis. This is NOT a required reading list, nor is it a list of books you must buy. But if you are interested in the topics discussed in class and would like to dig deeper, these books represent a good starting point. Note: This list will probably grow throughout the semester.

IIE Definition of Industrial Engineering: “Industrial Engineering is concerned with the design, improvement, and installation of integrated systems of people, materials, information, equipment, and energy. It draws upon specialized knowledge and skill in the mathematical, physical, and social sciences together with the principles and methods of engineering analysis and design to specify, predict, and evaluate the results to be obtained from such systems.”

Books (Partial List)
Adams, Scott. (1996). The Dilbert Principle. HarperCollins: New York.
Aziza, Bruno and Joey Fitts (2008). Drive Business Performance: Enabling a Culture of Intelligent Execution. John Wiley & Sons, Inc.: Hoboken, NJ.
Beyerlein, Michael M., Douglas A. Johnson, and Susan T. Beyerlein (2000). Product Development Teams. Volume 5, Advances in Interdisciplinary Studies of Work Teams. JAI Press: Stamford, CT.
Beyerlein, Michael M., Douglas A. Johnson, and Susan T. Beyerlein (2000). Team Performance Management. Volume 6, Advances in Interdisciplinary Studies of Work Teams. JAI Press: Stamford, CT.
Benbya, Hind (2008). Knowledge Management Systems Implementation: Lessons from the Silicon Valley. Chandos Publishing: Oxford.
Blanchard, Kenneth and Spencer Johnson (1982). The One Minute Manager. William Morrow and Co, Inc.: New York.
Blanchard, Kenneth, William Oncken, Jr. and Hal Burrows (1989).
The One Minute Manager Meets the Monkey. William Morrow and Co, Inc.: New York.
Brooks, Frederick P. (1982). The Mythical Man-Month: Essays on Software Engineering. Addison Wesley: Menlo Park, CA.
Dresner, Howard (2008). The Performance Management Revolution: Business Results through Insight and Action. John Wiley & Sons, Inc.: Hoboken, NJ.
Eckerson, Wayne W. (2006). Performance Dashboards: Measuring, Monitoring, and Managing Your Business. John Wiley & Sons, Inc.: Hoboken, NJ.
El Sawy, Omar (2001). Redesigning Enterprise Processes for e-Business. McGraw-Hill Irwin: San Francisco.
Gilbert, Thomas F. (2007). Human Competence: Engineering Worthy Performance, Tribute Edition. John Wiley & Sons/Pfeiffer: San Francisco.
Hammer, Michael and James Champy (1993). Reengineering the Corporation: A Manifesto for Business Revolution. HarperCollins: New York.
Hubbard, Douglas W. (2007). How to Measure Anything: Finding the Value of Intangibles in Business. John Wiley & Sons, Inc.: Hoboken, NJ.
Huff, Darrell (1993). How to Lie with Statistics. W. W. Norton & Co., Inc.: New York.
Katz, Ralph (1997). The Human Side of Managing Technological Innovation: A Collection of Readings. Oxford University Press: New York.
Kerzner, Harold (1996). Project Management: A Systems Approach to Planning, Scheduling, and Controlling, Sixth Edition. Van Nostrand Reinhold: New York.
Kizilos, Tolly (2002). Once Upon a Corporation: Leadership Insights from Short Stories. Writers Club Press: New York.
Koomey, Jonathan G. (2008). Turning Numbers into Knowledge: Mastering the Art of Problem Solving, Second Edition. Analytics Press: Oakland, CA.
Latzko, William J. and David M. Saunders (1995). Four Days with Dr. Deming: A Strategy for Modern Methods of Management. Prentice Hall: Upper Saddle River, NJ.
Mason, Richard O. (1986). Four Ethical Issues of the Information Age. MISQ, 10(1), March 1986. Available via web at: http://www.misq.org/archivist/vol/no10/issue1/vol10no1mason.html
Niven, Paul R.
(2006). Balanced Scorecard Step-by-Step: Maximizing Performance and Maintaining Results, Second Edition. John Wiley & Sons, Inc.: Hoboken, NJ.
Project Management Institute (1996). A Guide to the Project Management Body of Knowledge. Project Management Institute: Upper Darby, PA.
Reh, F. John (n.d.). Key Performance Indicators (KPI): How an organization defines and measures progress towards its goals. Available on web at: http://management.about.com/cs/generalmanagement/a/keyperfindic.htm
Rummler, Geary A. and Alan P. Brache (1995). Improving Performance: How to Manage the White Space on the Organization Chart, Second Edition. Jossey-Bass: San Francisco. [Note: this is the textbook for the class. I don’t know if there are any significant differences between the first edition and the second, but I’m using the second.]
Tufte, Edward R. (1983). The Visual Display of Quantitative Information. Graphics Press: Cheshire, CT. [Note: there’s a more recent 2nd edition, 2001]
Turner, Wayne C., Joe H. Mize, Kenneth E. Case, and John W. Nazemetz. (1993). Introduction to Industrial and Systems Engineering, Third Edition. Prentice Hall International: Englewood Cliffs, NJ.
von Oech, Roger (1990). A Whack on the Side of the Head: How You Can Be More Creative, Revised Edition. Warner Books: New York.
Williams, Frederick and Peter Monge (2001). Reasoning with Statistics: How to Read Quantitative Research, Fifth Edition. Harcourt: Orlando, FL.
Zmud, Robert W. (2000). Framing the Domains of IT Management: Projecting the Future Through the Past. Pinnaflex Educational Resources, Inc.: Cincinnati, OH.

Journals (Partial List)
IEEE Transactions on Engineering Management
Journal of Quality Management
Organizational Dynamics
Sloan Management Review
Systems Engineering Journal
Technological Forecasting & Social Change
Organizational Behavior and Human Performance
Journal of Manufacturing Systems
Organizational Learning
Intelligent Manufacturing Systems
Organization Science
Management Science
Strategic Management Journal
Administrative Science Quarterly
Information Systems Research
MIS Quarterly
Academy of Management Journal
Academy of Management Review
Academy of Management Executive
Academy of Management Perspectives
Performance Improvement Journal
Journal of Product Innovation Management
Journal of Engineering and Technology Management
R&D Management
Quality Progress
Industrial Engineering Solutions
Industrial Management
Harvard Business Review
National Productivity Review
Engineering Management Journal
Journal of Quality and Participation

Research Article Summary
Learning Objective: Performance analysis is a hot topic, with new books, articles, and methods emerging on a regular basis. As a professional (engineer or otherwise), you will be bombarded with information on this topic and need the skills to evaluate what your managers, co-workers, consultants, and researchers will be telling – and selling – to you. As a professional, you also will benefit from developing the skills to learn from original source (vs. popularized) material.

Overview: Identify a set of 3 articles from the published, peer-reviewed literature related to performance analysis. From that set of articles, select one (using the criteria/method that will be discussed in class) and develop a written summary and a seven-to-nine minute oral presentation (using the format and guidelines discussed in class).

Overview of Activities: (Note: additional detail will be provided in class. Some of the specifics may change as the assignment gets refined.)
1. Homework 2: Develop a list of 3 research articles using USC (or other) library search tools. Present the list in a table, identifying key features. Rank order the articles based on your preference for the Research Project.
2. Self-directed: Read the paper and analyze it using guidelines provided in class
3. Self-directed: Prepare a 7-9 minute oral report summarizing the paper per class guidelines
4. Self-directed: Prepare a 3 page written summary of the paper and analysis questions per class guidelines. [An example is provided below.]
5. Week 6: Present your oral report and submit written summary.

Example:

ISE 564 Research Report Project
[Date]
[Your Name]

Loch, C. H. and Tapper, U. A. S. (2002). Implementing a strategy-driven performance measurement system for an applied research group. Journal of Product Innovation Management, 19, pp. 185-198. [example]

Loch and Tapper (2002) describe a process for implementing a performance measurement system for an applied research group. Their research addresses the difficulties inherent in measuring R&D performance: (a) effort levels are not directly observable; (b) consequences of actions are not directly observable; and (c) there are high levels of uncertainty and events may be uncontrollable and unpredictable. The authors view their study as “developing a specialized scorecard for applied research with strategy-specific dimensions” (p. 186).

The Loch & Tapper (2002) paper incorporates multiple concepts we have discussed in class, most importantly: (1) connecting performance measurement to strategy; (2) developing measures based on outputs and processes; and (3) evaluating performance at multiple levels. These concepts are integrated via a case study of an applied research group that develops equipment and process technologies to support diamond mining.
The strategy-specific dimensions of performance were developed by individual researchers, facilitated by the researchers, and guided by the company’s business strategy. This strategy was stated in a form derived from previous research (Markides, 1999): “What do we sell, to whom [do we sell it], how (with what core competences and processes), why (what is the value proposition to the customers and its competitive advantage), and what are the major threats in the environment” and what are the “tradeoffs and priorities among conflicting goals” (pp. 186-187). The case study describes how the business strategy (related to selling gemstones) “cascaded” into the development strategy (finding raw stones), which in turn cascaded into the research strategy. As recommended by our Rummler & Brache text, the organization used its strategy to guide the development of the performance measures.

The performance measures themselves were tied to processes within the applied research group: developing equipment, providing technical services, and building knowledge and reputation. These processes contributed to the organization’s technology strategy via three outputs: technology demonstrations, customer service, and serving as a knowledge repository. For each output, the research group developed output measures at the group level by customer, output measures at the project level, and process measures at the project level. The authors present a set of 28 specific measures organized based on the three outputs and the three levels of performance (e.g., number of significant innovations delivered, level of prototype maturity, customer satisfaction index, percent of support requests filled, completeness of literature surveys, and clarity and quality of conclusions in technology assessments).

In addition to designing the performance measurement system, the case study describes how key measures supported business processes and influenced organizational performance.
The organization published the measurement system and presented information using a “radar screen” which tracked 16 measures in the areas of research progress, new technologies, knowledge repository, and technical support and compared measured values to targets. By monitoring performance, the organization was able to improve actual expenditures relative to year-end budget forecasts, identify and take corrective action relative to innovation performance, and improve quality management system records. Company managers attribute part of the improvement to improved transparency about projects and accomplishments, better focus on important aspects of performance, and improved understanding of priorities.

The research described in this paper is potentially applicable to a wide variety of Industrial and Systems Engineering areas, but is most directly applicable to measuring performance in R&D groups. The authors report a large number of performance metrics that are either directly applicable to most R&D domains, or which may serve as a basis for analogy to generate company-specific measures (see Table 3, p. 194). These metrics provide proof that, although challenging, R&D performance can be measured. The authors do, however, caution that this specific R&D group developed process technology, and that the measures may need to be adjusted for groups addressing product technology.

More generally, however, this research describes a process for generating performance measures that is tied to business strategy, engages those most directly responsible for producing performance, addresses fairness and responsibility issues, and can lead to significant organizational improvement. The authors highlight how the process for developing the performance measures enabled R&D employees to “give themselves a process for diagnosis and improvement rather than having a system imposed” (p. 194).
The authors claim that this is similar to the concept of an “enabling bureaucracy” (Adler et al., 1999), where structure is introduced in an atmosphere of trust and with substantial input from the employees.

In their paper, Loch & Tapper (2002) advance our understanding of performance measurement by applying it to a domain that has been challenging to measure. Their case study presents a process for developing performance measures, examples of multiple specific measures, and evidence that such a system can lead to organizational improvements.

Dashboard Project Instructions
Learning Objective: “In theory there’s no difference between theory and practice, but in practice there is.” The Dashboard Prototype Project enables you to integrate what you have learned throughout the class.

Overview: Select an organizational process (with professor concurrence), map and analyze the process, identify organizational goals and strategies associated with it, and design, develop and demonstrate a Dashboard Prototype to monitor and assist planning of that process. You are responsible for coming up with the process, but I will work with you to make sure that it is scoped appropriately for this class (i.e., keep it simple, but with enough content to address the learning objective). Most of the course homework assignments are designed to help you develop this prototype, and the course content is timed to support incremental development of your prototype. Your team will deliver your prototype (in Excel) and give a 15-18 minute oral presentation (using the format and guidelines discussed in class) during the last two regular class sessions.

Overview of Activities: (Note: some of the specifics may change as the assignment gets refined, and additional detail will be provided in class.)
1. Self-directed: Team up – or get concurrence from me to work individually
2. Homework 3: Identify candidate process(es), and provide rationale for selecting this process
3. Homework 4: Identify the strategies and organizational/process goals associated with the process you have chosen
4. Homework 5: Develop a process map describing your project
5. Homework 6: Develop a list of Key Performance Indicators for your process (you will select a subset of these for your prototype)
6. Homework 7: Define your dashboard: What KPIs will you include, how will you specify the information, where will you get the data, what format does the input data need to be in, and how do the dashboard elements map to KPIs?
7. Homework 8: Design your dashboard: e.g., address how you will visually and numerically represent the elements of your dashboard, and how you will implement this using capabilities in Excel.
8. Self-directed: Implement your dashboard
9. Week 15: Deliver your dashboard prototype, populated with test data to demonstrate capabilities. In-class presentation (for at least a subset of the class)
10. Week 16: In-class presentation (if too many from last week). Perform a peer review of another team’s Dashboard Prototype (you will be assigned a specific team).

Overview of Grading: The Dashboard Project has three graded components and a peer evaluation:
1. Develop a “Proposal” for a Performance Dashboard for a company or organization.
2. Develop a Prototype Dashboard to demonstrate a subset of the capabilities of your Performance Dashboard Design
3. Present your proposal in class, providing context information about the company or organization, but focusing on the Prototype Dashboard.
4. Peer Evaluation: Individual evaluation of another team’s Dashboard Proposal & Prototype, which will be graded as part of the Final exam.

Grading point distribution: The Dashboard Project will receive a team grade – all members of the team will receive the same grade. Team members are responsible for managing their own and their teammates’ participation.
The point distribution for the Dashboard Project is given in the following table:

| Sections | Points |
|---|---|
| Formatting & Compliance | 10 |
| I. Organization Overview | 40 |
| II. Performance Measures | 60 |
| III. Dashboard Design and Prototype Description | 60 |
| IV. Expected Benefits | 20 |
| V. Dashboard Prototype | 40 |
| VI. Presentation | 70 |
| VII. Peer Evaluation | n/a |
| Total | 300 |

Instructions: Formatting & Compliance of Proposal (10 points)

- Maximum of 10 pages for Sections I – IV. Pages for required elements and references DO NOT count in the page total. Do not include a title page. You are encouraged to use the minimum number of pages to convey your information. Partially used pages count as a full page.
- 12 pt Times or Times New Roman font
- 1” margins top, bottom, and left; 2” margin right
- Header: 8 pt font, containing the last names of all team members and, on a separate line, the file name of each document
- Page number, 8 pt font, in footer
- This document complies with the formatting for the “Proposal”
- Organize based on the sections. Provide headers for all sections and titles for all Tables and Figures.
- Cite all references to other work in the text, with references at the end. (References do not count toward the page count.)
- If you provide information in a Table or Figure, you do not need to duplicate that material in the text. BUT, all Tables and Figures need to be explicitly referenced in the text, preferably with some information to place them in context.
- Minimum content requirements are listed for each section. You must address each of these items – points will be deducted for missing information. You may, within the page limits, include additional information.
- Note that the point distributions (see Table 1) represent the relative importance of each of the sections. Use your time and document real estate accordingly.
- Required elements should be on separate pages appended to the end of the document and referenced as Tables or Figures, as appropriate.
- Other Tables and Figures should be inserted in the text and count toward the page count.

Section I. Organization Overview (40 points)

(a) Describe the organization at the strategic level
  - What is the business?
  - Who are the customers?
  - What is the business model?
  - How is the company organized?
  - What are the major processes and functions? (You do not need to include general functions such as HR, accounting, etc.)
  - What is the general environment (e.g., economic, political, regulatory, industry conditions) in which the organization is operating?
  - What is important to the company?
  - Required figure: Organization Chart

(b) Describe one part of the organization at the tactical level (e.g., plant, operating division, functional area)
  - What is it?
  - What does it do?
  - How does this part of the organization fit in with the rest of the organization?
  - Who are its customers?
  - What are its core processes?
  - What is important to this part of the organization?

(c) Describe one operational component of the organization (e.g., a service center, operating unit, manufacturing line)
  - What is it?
  - What does it do?
  - What is its product?
  - Who are its customers?
  - What is important to this operational component (e.g., what constitutes “success”)?

Section II. Performance Measures (60 points)

(a) For each level (strategic, tactical, and operational):
  - Name three (3) decisions that are made at that level and who makes them
  - What information is needed to support these decisions?
  - Identify specific performance measures
    o Strategic: 2-5 measures
    o Tactical: 3-10 measures
    o Operational: 5-12 measures

(b) Required Table: Summary of All Measures, with the following columns:

| Level | Name | Description (what it is, units) | How it is measured (data source) | How it is used and interpreted |
|---|---|---|---|---|

Note: you do not need to create a comprehensive list of all Performance Measures for the company – just a representative set that corresponds to the parts of the organization you described in Section I.

Section III. Dashboard Design and Prototype Description (60 points)

(a) Describe the functional components of your overall dashboard
  - What are the elements?
  - What does each element do?
  - How does it do it?
  - Required Table or Figure identifying major components, including inputs and outputs

(b) Create a use case for a user at each of the three levels
  - Who is this person?
  - What is their job?
  - What decision(s) does he/she need to make?
  - How would he/she use the Performance Dashboard to help make that decision?
  - Required Figures: One flow chart for each use case (3 in total)

(c) Describe your Performance Dashboard Prototype
  - What subset of performance measures and functionality are you prototyping?
  - What is your use case for demonstrating how the prototype functions for nominal and off-nominal situations for a specified user?
  - What was your rationale for selecting this subset and use case?
  - Required Figure(s): Flow chart for use case(s)

Section IV. Expected Benefits (20 points)

(a) Describe the benefits that your Dashboard (if fully implemented) would provide to users.

Section V. Performance Dashboard Prototype (40 points)

Note: this is not part of the Proposal document and will be a separate file.

(a) Provide an Excel or PowerPoint file that contains your Dashboard Prototype

(b) Prototype must contain
  - Graphical representation of the user interface for the subset of measures and functionality you identified
  - Representative data depicting a nominal and an off-nominal (problem) situation
  - Navigation mechanism to step through the scenario (as appropriate)

(c) Please make sure that your Prototype is viewable on a DEN computer screen

Section VI. Presentation (70 points)

(a) Give a 15-minute presentation (reserving 5 minutes for questions) during the assigned period in class.
  - Off-campus students will follow the same protocol as for the research project presentation.
  - Each team decides which member(s) will present (each team member does NOT have to present)
  - All team members need to be available to answer questions

(b) Presentation package:
  - Minimum font for bullet text: 24 pt. Please make sure other text (e.g., in Figures) is readable
  - Title chart with the name of your Dashboard, listing all team members in alphabetical order
  - Page numbers
  - At least one chart providing an overview of the company
  - At least one chart providing an overview of the Dashboard Design

(c) Walk through your Use Case(s) using your prototype

Note: the peer reviewers for each team are expected to ask at least one question following the presentation.

Section VII. Peer Evaluation (Graded as part of Final Exam)

(a) What is the most compelling reason given by the team for the organization to implement this Performance Dashboard? Why?

(b) What are the major strengths/weaknesses of the design and performance measures? (You need to identify at least one of each.)

(c) Based on the design, prototype implementation, and proposed benefits/value, would you recommend that management proceed with the implementation? Why?