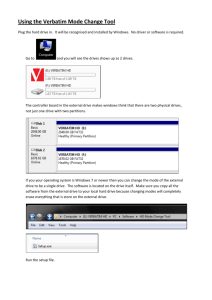

Computer Technician Training Course
