A Framework for the Simulation of UAE University Campus Computer Network

Shakil Akhtar*, Khaled Shuaib*, Hashir Kidwai*, Farag Sallabi*, and Mohammed Javeed**

*College of Information Technology, U.A.E. University

**Information Technology Center, U.A.E. University

{s.akhtar, k.shuaib, hkidwai, f.sallabi, javeed}@uaeu.ac.ae

Abstract

This paper presents the initial framework for the performance study of UAE University

campus network via simulation. The campus network topology is presented with a detailed

discussion of two major underlying technologies, namely ATM and Free Space Optics

(FSO). Benefits and limitations of these technologies have been discussed with reference to

the campus network setup. Further, the performance metrics are defined and explained as a

foundation for the forthcoming simulation studies.

1. INTRODUCTION

In order to characterize the traffic in a network, it is necessary to develop both analytical models, as well as

experimental testbeds. Experimental testbeds not only allow the validation of analytical models but also provide

a development and testing environment for new topologies and technologies. On the other hand, analytical

models are helpful in studying the scenario of new implementation before actually deploying any additional

network equipment or enabling new services. These models may allow a study of system performance metrics.

Many researchers have modeled network and telecommunication systems under different application

scenarios. For instance, a recent study used mobility modeling to predict the

trajectory and location of users in wireless ATM networks [1]. Similarly, some other simulation

studies deal with traffic characterization and models of PCS networks [2-5]. Some additional studies deal with

traffic analysis through measurements on network implementations involving very high speed Backbone

Network Services (vBNS) [6] and TCP/IP with ATM [7, 8].

High-speed networks of the future are expected to carry traffic from a variety of applications. One objective

in such hybrid networks is to achieve low latencies, and more importantly, to obtain guarantees on the delay

bounds for certain running applications. It is important for the UAE, as one of the fastest developing

countries, to embark on such research areas. The UAEU campus network is one of the largest in the country and there

is great potential to develop this network using state-of-the-art technologies and devices. Many vendors take

interest in testing their devices in an experimental setup such as the UAEU network.

This paper presents a framework for investigating the performance of the UAEU campus network in terms of

several application measures at the device and network levels. For instance, application related measures such

as synchronization, throughput, delay, delay jitter, convergence time, and redundancy may be obtained. The

paper reports the initial findings and presents the network setup that will be used to develop the simulation

models to identify the growth potential and future developments.

On an abstract level, a communication network can be considered as a set of resources, for which an

extensive set of users is competing. With simulation models this competition can be described and analyzed in

terms of performance measures. Another aspect of study concerns the system economic model. The key

problem is the search for mechanisms that allocate the available resources (bandwidth, buffer space) to the

population of heterogeneous users in an economically sound way. More specific problems are: (1) charging

network users based on their contribution to congestion, by packet marking, (2) allocation of bandwidth

through auctions, (3) models that allocate cost among network users, in conjunction with network

measurements.

Research in the area of network modeling and simulation is applied by its nature. The main reason to perform

simulation studies is to evaluate the needs carefully before deploying expensive network equipment. Vendors

often propose solutions that are not optimized to the customer's needs, and very often the decision mistakes

are realized only after the implementation. The process of network modeling provides recommendations to avoid these

decision mistakes through a set of performance metrics. The metrics help us understand the potential bottlenecks in

a growing network. In addition, the effect on a bottleneck is studied by simulating different vendor equipment.

Most organizations undergoing growth and expansion in their networks go through a detailed study of

different alternatives to avoid future performance problems. These studies often include simulation and modeling.

To our knowledge, this is a new area of research in the UAE. We are applying the study to the UAE University computer network,

and this paper presents the initial framework for the study.

The next section presents the ATM architecture, which is used as one of the main technologies in the university

network. Section 3 deals with Free Space Optics (FSO) technology, which has recently been deployed by the

university as an alternative to the configured leased lines via Etisalat. Section 4 presents the network topology,

followed by Section 5 on the performance metrics expected as a result of the simulation studies.

2. ATM Architecture

The interconnection between different sites within UAE University network is provided via configured ATM

links. Users at different sites access the Internet via a single 20 Mbps link available from the Islamic Institute

campus. To provide a better understanding of the campus network, this section discusses the general ATM

architecture and the types of configurations offered.

ATM is a connection oriented transport mechanism. All switches on the path are consulted before a new

connection is set up. The switches ensure that they have sufficient resources before accepting a connection. The

connections in ATM networks are called Virtual Circuits (VCs). ATM networks use VC Identifiers (VCIs) to

identify cells of various connections. VCIs are assigned locally by the switch. A connection has a different VCI at

each switch. The same VCI can be used for different connections at different switches. Thus, the size of VCI field

does not limit the number of VCs that can be set up. This makes ATM networks scalable in terms of number of

connections.

The use of a fixed length cell in ATM allows switching decisions to be implemented in faster hardware, rather

than making routing decisions in relatively slow software. It is also based upon the premise of a short cell length in

order to prevent excessive serialization delay and to prevent one user from blocking others. By combining

hardware based switching with the short, fixed length cell, ATM offers the potential for extremely low

delay and high throughput.

The international standards bodies have chosen 53 byte cells as the length for broadband services. This represents

a compromise between those who wanted longer payloads to reduce addressing overhead and processing load; and

those who wanted shorter payloads to improve the quality of low speed services, congestion avoidance, and delay.

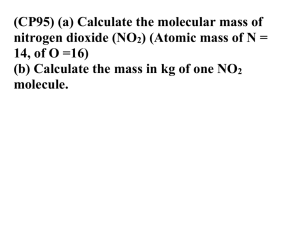

ATM is explained by using the layered protocol architecture as shown in Figure 1. The physical layer defines the

interface with the transmission media, much like the OSI reference model. It is concerned with the physical

interface, transmission rates, and how the ATM cells are converted to the line signal. However, unlike many LAN

technologies, such as Ethernet, which specify a certain transmission media, ATM is independent of the physical

transport. ATM cells can be carried on SONET, Synchronous Digital Hierarchy (SDH), T3/E3, T1/E1, or even

9600 bps modems.

The next higher layer (ATM Layer) deals with ATM cells. The format of the ATM cell is quite simple. It consists

of a 5 byte header and a 48 byte payload. The header contains the ATM cell address and other important

information. The payload contains the user data being transported over the network. Cells are transmitted serially

and propagate in strict numeric sequence throughout the network. The payload length was chosen as a compromise

between a long cell length (64 byte), which is more efficient for transmitting long frames of data, and a short cell

length (32 byte), which minimizes the end-to-end processing delay, and is good for voice, video, and delay

sensitive protocols. Although not specifically designed as such, the cell payload length conveniently accommodates

two 24 byte IPX FastPackets.
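
As a rough illustration of the cell-size trade-off described above, the following sketch computes the header overhead of a 53 byte cell and the time needed to serialize one cell at a few of the link rates quoted in this paper. The function names are ours, the rates are nominal, and physical-layer framing overhead is ignored.

```python
CELL_BYTES = 53                              # total ATM cell length
HEADER_BYTES = 5                             # cell header
PAYLOAD_BYTES = CELL_BYTES - HEADER_BYTES    # 48 byte payload


def header_overhead() -> float:
    """Fraction of every cell that is header rather than user data."""
    return HEADER_BYTES / CELL_BYTES


def serialization_delay_us(link_mbps: float) -> float:
    """Time to clock one cell onto a link, in microseconds.

    bits / (Mbit/s) yields microseconds directly.
    """
    return CELL_BYTES * 8 / link_mbps


if __name__ == "__main__":
    print(f"header overhead: {header_overhead():.1%}")
    # Nominal rates taken from the campus description (E1, 34 Mbps PVC, OC3).
    for rate in (2.048, 34.0, 155.0):
        print(f"{rate:8.3f} Mbps -> {serialization_delay_us(rate):8.2f} us per cell")
```

The short cell keeps the per-cell delay small even on the slowest campus link, at the price of a fixed header overhead on every 48 bytes of payload.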

Two types of ATM cell headers are defined: the User-to-Network Interface (UNI) and the Network-to-Network

Interface (NNI). The UNI is a native-mode ATM service interface to the WAN. Specifically, the ATM UNI defines

an interface between cell-based Customer Premises Equipment (CPE), such as ATM hubs and routers, and the

ATM WAN. The NNI defines an interface between the nodes in the network (the switches), or between networks.

The NNI can be used as an interface between a user's private ATM network and a service provider's public ATM

network.


[Figure 1 diagram. Layers, from top to bottom: Higher Layer Protocols (Control, Management, and Applications); ATM Adaptation Layer (AAL), consisting of the AAL Convergence Sublayer and the AAL Segmentation and Reassembly Sublayer; ATM Layer; Physical Layer.]

Figure 1: The ATM four-layer model. Different types of traffic can be mixed on the same switched

network. The AAL convergence sublayer makes sure that different types of traffic (data, voice and

video) receive the right level of service.

The primary function of both types of cell headers, the UNI and the NNI, is to identify Virtual Path Identifiers

(VPIs) and Virtual Circuit Identifiers (VCIs) as routing and switching identifiers for ATM cells. The VPI

identifies the path or route to be taken by the ATM cell, while the VCI identifies the circuit or connection

number on that path. The VPI and VCI are translated at each ATM switch, and are unique only for a single

physical link.

The five byte ATM header uses the UNI and NNI cell formats. Most of the fields in the two formats are similar,

except that the UNI cell format includes a four-bit Generic Flow Control (GFC) field to provide flow control at

the UNI level. The NNI format has no GFC field; its four bits are used instead for a longer VPI (12 bits as opposed to 8 bits for

UNI), thus allowing more virtual paths. Among other fields in the header are VCI (16 bits), Payload Type (PT -- 3 bits), Cell Loss Priority (CLP -- 1 bit) and Header Error Control (HEC -- 8 bits). The defined PT for

both types of cells is user data (with congestion and signaling bits) and management data (encoding different

types of management control). The one bit CLP indicates the priority level of a cell, with zero indicating a higher

priority. In case of congestion, cells with CLP set to 1 may be dropped first to meet certain Quality of Service (QoS)

requirements. Finally, the HEC is the CRC field computed over the first four bytes of the header.
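
The UNI header layout can be made concrete with a minimal sketch that packs the five fields into four bytes and appends the check byte. The field widths follow the text. The CRC generator x^8 + x^2 + x + 1 and the 0x55 pattern added to the remainder are taken from the ITU-T I.432 definition of the header check rather than from this paper, and the function names are ours.

```python
HEC_POLY = 0x07    # x^8 + x^2 + x + 1 (x^8 term implicit)
HEC_COSET = 0x55   # pattern XORed onto the CRC remainder


def hec(first_four: bytes) -> int:
    """CRC-8 over the first four header bytes, then XOR with the coset."""
    crc = 0
    for byte in first_four:
        crc ^= byte
        for _ in range(8):
            if crc & 0x80:
                crc = ((crc << 1) ^ HEC_POLY) & 0xFF
            else:
                crc = (crc << 1) & 0xFF
    return crc ^ HEC_COSET


def pack_uni_header(gfc: int, vpi: int, vci: int, pt: int, clp: int) -> bytes:
    """Build a 5 byte UNI header: GFC(4) VPI(8) VCI(16) PT(3) CLP(1) HEC(8)."""
    if not (0 <= gfc < 16 and 0 <= vpi < 256 and 0 <= vci < 65536
            and 0 <= pt < 8 and clp in (0, 1)):
        raise ValueError("field out of range")
    word = (gfc << 28) | (vpi << 20) | (vci << 4) | (pt << 1) | clp
    first_four = word.to_bytes(4, "big")
    return first_four + bytes([hec(first_four)])


def unpack_uni_header(header: bytes) -> dict:
    """Check the HEC and split a 5 byte UNI header back into its fields."""
    if len(header) != 5 or hec(header[:4]) != header[4]:
        raise ValueError("bad length or header check failed")
    word = int.from_bytes(header[:4], "big")
    return {
        "gfc": word >> 28,
        "vpi": (word >> 20) & 0xFF,
        "vci": (word >> 4) & 0xFFFF,
        "pt": (word >> 1) & 0x7,
        "clp": word & 0x1,
    }


if __name__ == "__main__":
    hdr = pack_uni_header(gfc=0, vpi=5, vci=77, pt=0, clp=0)
    print(hdr.hex(), unpack_uni_header(hdr))
```

An NNI header would differ only in that the top four bits extend the VPI to 12 bits instead of carrying a GFC value.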

The purpose of the next layer, the ATM Adaptation Layer (AAL) is to accommodate data from various

sources with differing characteristics. Specifically, its job is to adapt the services provided by the ATM layer to

those services that are required by the higher user layers (such as circuit emulation, video, audio, frame relay,

etc.). The AAL receives the data from the various sources or applications and converts it to 48 byte segments

that will fit into the payload of an ATM cell. The adaptation layer defines the basic principles of sublayering. It

describes the service attributes of each layer in terms of constant or variable bit rate, timing transfer

requirement, and whether the service is connection-oriented or connectionless.

AAL consists of two sublayers, the Convergence Sublayer (CS) and the Segmentation and Reassembly

sublayer (SAR). The Convergence sublayer receives the data from various applications and makes variable

length data packets called Convergence Sublayer Protocol Data Units (CS-PDUs). The Segmentation and

Reassembly sublayer receives the CS-PDUs and segments them into one or more 48 byte packets that map

directly into the 48 byte payload of the ATM cell transmitted at the physical layer. The types of AAL service

are defined as follows:

AAL1 - for a constant bit rate, connection-oriented service that requires timing transfer (synchronous), such

as voice and video. The CS in AAL1 divides the incoming data from the higher layer into 47 byte segments (CS-PDUs) that are passed to the SAR sublayer. The SAR sublayer attaches one byte of header to each CS-PDU and

passes them as 48 byte payloads to the ATM layer. The one byte header consists of Convergence Sublayer

Identifier (CSI -- 1 bit), Sequence Count (SC -- 3 bits), CRC (3 bits) and Parity (P -- 1 bit). The SC field is a

modulo 8 sequence number used for cell ordering and end-to-end error and flow control. The three bit CRC is

used as a checksum of the preceding four bits in the header. Finally, the P bit is simply the parity bit for the

preceding seven bits in the header.
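
The one byte AAL1 SAR header can be sketched as follows. The bit layout follows the description above. The CRC generator x^3 + x + 1 and the use of even parity are assumptions taken from the usual AAL1 definition, not from this paper. The zero fill of a short final segment is purely illustrative, and the function names are ours.

```python
def crc3(nibble: int) -> int:
    """Remainder of (nibble * x^3) divided by x^3 + x + 1, over GF(2)."""
    reg = nibble << 3
    for shift in range(3, -1, -1):
        if reg & (1 << (shift + 3)):
            reg ^= 0b1011 << shift
    return reg & 0b111


def aal1_header(csi: int, sc: int) -> int:
    """Build the header byte: CSI(1) SC(3) CRC(3) P(1)."""
    if csi not in (0, 1) or not 0 <= sc < 8:
        raise ValueError("field out of range")
    nibble = (csi << 3) | sc
    seven_bits = (nibble << 3) | crc3(nibble)
    parity = bin(seven_bits).count("1") & 1   # even parity over seven bits
    return (seven_bits << 1) | parity


def aal1_segment(data: bytes):
    """Yield 48 byte payloads: 1 header byte plus 47 data bytes each."""
    for index, start in enumerate(range(0, len(data), 47)):
        chunk = data[start:start + 47].ljust(47, b"\x00")  # illustrative fill
        yield bytes([aal1_header(0, index % 8)]) + chunk


if __name__ == "__main__":
    payloads = list(aal1_segment(bytes(100)))
    print(len(payloads), [hex(p[0]) for p in payloads])
```

Because the sequence count is modulo 8, the header values repeat every eight cells, which is what lets a receiver detect a lost or misinserted cell.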

AAL2 - for a variable bit rate, connection-oriented service that requires timing transfer (synchronous), such as

compressed voice and video. In this case, the CS divides the incoming data into 45 byte segments, allowing a one

byte header and a two byte trailer. The one byte header consists of a one bit CSI for signaling and a three bit SC as in

AAL1. In addition, a four bit Information Type (IT) field identifies the data segment in the cell

as the beginning, middle or end of the message. The two byte trailer consists of a six bit Length Indicator (LI) and a

ten bit CRC over the entire data unit. The LI field is designed to be used with the last 45 byte segment. It

indicates the start of padding bytes in the final segment.

AAL3/4 - for a variable bit rate, connectionless service that doesn't require timing transfer (asynchronous),

such as SMDS and LANs. Overhead is added at both the CS and the SAR in this case. The CS accepts a maximum

of 64 kilobytes (65,535 bytes) of data from the upper layer. Four bytes of header and four bytes of trailer are

added to the data before it is broken into 44 byte segments that are passed to the SAR. Padding of up to 43 bytes

may be included before the trailer to ensure 44 byte data segments. The CS header

consists of Type (T -- 1 byte) set to all zeros, Begin Tag (BT -- 1 byte) used as a begin flag, and Buffer Allocation

(BA -- 2 bytes) to tell the receiver what size buffer is needed for the incoming data.

The number of padding bytes is between 0 and 43, depending upon the number of data bytes in the

final (or final two) segment(s). When the number of data bytes is exactly 40 in the final segment, no padding is

required since the four byte trailer completes the segment. When the number of data bytes in the final segment

is between 0 and 39, between 40 and 1 padding bytes are added respectively to bring the total to 40

bytes, and the four byte trailer completes the 44 byte segment. However, when the number of data bytes is between 41 and 43, the number of padding bytes must

be between 43 and 41 respectively to bring the total to 84 bytes. These 84 bytes are divided into 44 bytes in the

first segment and 40 bytes plus the trailer in the next segment to complete the last two segments. The trailer

consists of a one byte Alignment (AL) field, a one byte End Tag (ET) used as an ending flag, and a two byte Length (L) field

indicating the length of the data unit.
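
The padding rule described above reduces to a single modular expression. The sketch below expresses it in two equivalent ways: from the number of bytes already occupying the final 44 byte segment, and from the total user data length. The function names are ours, and the four byte header and trailer sizes are those given in the text.

```python
SEGMENT = 44   # bytes handed to the SAR per segment
HEADER = 4     # CS header bytes
TRAILER = 4    # CS trailer bytes


def padding_for_final_segment(bytes_in_final: int) -> int:
    """Padding needed when the final segment already holds this many bytes.

    The trailer must end exactly on a 44 byte boundary, so the data plus
    padding must end 4 bytes before it.
    """
    if not 0 <= bytes_in_final < SEGMENT:
        raise ValueError("expected 0..43 bytes in the final segment")
    return (SEGMENT - TRAILER - bytes_in_final) % SEGMENT


def cs_padding(data_len: int) -> int:
    """Padding for a whole CS-PDU carrying data_len bytes of user data."""
    return -(HEADER + data_len + TRAILER) % SEGMENT


def segment_count(data_len: int) -> int:
    """Number of 44 byte segments passed to the SAR."""
    total = HEADER + data_len + cs_padding(data_len) + TRAILER
    return total // SEGMENT


if __name__ == "__main__":
    for n in (36, 37, 100, 1500):
        print(n, cs_padding(n), segment_count(n))
```

The 41 to 43 byte case in the text is simply the point where the modulo wraps around and the trailer spills into an extra segment.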

The SAR header and trailer in AAL3/4 are each two bytes long. The header consists of Segment Type (ST -- 2

bits) to indicate a single-segment message or the beginning, middle or end segment of a message; a one bit CSI; a three bit SC;

and a ten bit Multiplexing Identification (MID) that identifies cells coming from different sources but

multiplexed using the same virtual connection. The trailer consists of a six bit LI and a ten bit CRC over the

entire data unit.

AAL5 - for a variable bit rate, connection-oriented service that doesn't require timing transfer (asynchronous),

such as X.25 and Frame Relay. AAL5 simplifies the complex overhead mechanism of AAL3/4 by removing the

sequencing and error control mechanism that is not required for many applications. The maximum data in this

case remains 64 kilobytes as in AAL3/4, but the only overhead added is an 8 byte trailer at the CS layer; the resulting data unit

is divided into 48 byte segments at the SAR. Padding of up to 47 bytes is used at the CS to ensure 48 byte

boundaries. The trailer consists of a one byte User-to-User identifier left at the discretion of the user, a

reserved one byte Type (T) field, a two byte Length (L) field indicating the start of padding and a four byte

CRC over the entire data unit.
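
The AAL5 overhead for a given packet size follows directly from the 8 byte trailer and the 48 byte boundary. The sketch below computes the padding, the number of cells, and the resulting efficiency on the wire. The function names and the example packet sizes are ours and are not measurements from the campus network.

```python
TRAILER = 8        # AAL5 CS trailer bytes
CELL_PAYLOAD = 48  # bytes carried per ATM cell
CELL_SIZE = 53     # bytes on the wire per ATM cell
MAX_DATA = 65535   # largest data unit accepted by the CS


def aal5_padding(data_len: int) -> int:
    """Padding (0..47 bytes) so data + padding + trailer fills whole cells."""
    if not 0 <= data_len <= MAX_DATA:
        raise ValueError("data length out of range")
    return -(data_len + TRAILER) % CELL_PAYLOAD


def aal5_cells(data_len: int) -> int:
    """Number of ATM cells needed to carry data_len bytes."""
    return (data_len + aal5_padding(data_len) + TRAILER) // CELL_PAYLOAD


def wire_efficiency(data_len: int) -> float:
    """User bytes divided by bytes actually transmitted in cells."""
    return data_len / (aal5_cells(data_len) * CELL_SIZE)


if __name__ == "__main__":
    for n in (40, 41, 576, 1500):
        print(n, aal5_padding(n), aal5_cells(n), f"{wire_efficiency(n):.1%}")
```

A packet of 41 bytes illustrates the worst case: one byte too many for a single cell forces 47 bytes of padding and a second cell.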

ATM switches provide the switching and multiplexing of cells in the system. Switches are designed to

provide virtual path and virtual channel switching using VPIs and VCIs. Switches break the incoming data into

fixed-length cells with a header and a payload field. They address each cell by prefixing it with a

logical destination called the Virtual Path (VP) address, and they assign different Virtual Channels (VCs) within

the VP, depending upon the type of data. Switches also multiplex the cells from various sources together onto

the outgoing transmission link and map the incoming VPI/VCI to the associated outgoing VPI/VCI address.

The demultiplexing task of switches consists of unpacking the cells from various sources, translating them back

into their native format, and delivering them to the appropriate device or port.
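
The mapping of an incoming VPI/VCI to an outgoing VPI/VCI can be pictured as a per-switch lookup table keyed by input port and identifiers. The sketch below is a minimal model of that idea only. The class and method names are hypothetical, and it does not represent any vendor's switch or the configuration of the campus equipment.

```python
from typing import Dict, Optional, Tuple

Key = Tuple[int, int, int]   # (port, VPI, VCI)


class CellSwitch:
    """Toy ATM switch: forwards cells by table lookup and label rewrite."""

    def __init__(self) -> None:
        self._table: Dict[Key, Key] = {}

    def add_connection(self, in_port: int, in_vpi: int, in_vci: int,
                       out_port: int, out_vpi: int, out_vci: int) -> None:
        """Install one direction of a virtual circuit on this switch."""
        self._table[(in_port, in_vpi, in_vci)] = (out_port, out_vpi, out_vci)

    def forward(self, in_port: int, vpi: int, vci: int,
                payload: bytes) -> Optional[Tuple[int, int, int, bytes]]:
        """Return (out_port, new VPI, new VCI, payload), or None if unknown."""
        if len(payload) != 48:
            raise ValueError("ATM payload must be 48 bytes")
        entry = self._table.get((in_port, vpi, vci))
        if entry is None:
            return None          # no circuit set up: the cell is discarded
        out_port, out_vpi, out_vci = entry
        return out_port, out_vpi, out_vci, payload


if __name__ == "__main__":
    first, second = CellSwitch(), CellSwitch()
    # The identifiers have only link-local meaning, so they change per hop.
    first.add_connection(1, 0, 32, 2, 5, 77)
    second.add_connection(1, 5, 77, 3, 0, 32)
    hop1 = first.forward(1, 0, 32, bytes(48))
    hop2 = second.forward(1, hop1[1], hop1[2], hop1[3])
    print(hop1[:3], hop2[:3])
```

Because each table is local to one switch, the same VCI value can be reused on different links, which is why the identifier field size does not bound the number of circuits in the network.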

3. Free Space Optic Technology

The access technologies in use today include copper wire, wireless Internet access, broadband

RF/microwave, coaxial cable and direct optical fiber connections (fiber to the building; fiber to the

home). Many telephone networks are still trapped in the old Time Division Multiplex (TDM) based

network infrastructure that rations bandwidth to the customer in increments of 1.5 Mbps (T-1) or 2.048

Mbps (E-1). DSL penetration rates have generally been slow too due to pricing strategies of the PTTs.

Cable modem access has had more success in residential markets, but suffers from security and capacity

problems, and is generally conditional on the user subscribing to a package of cable TV channels.

Wireless Internet access is still slow and web browsing is unappealing due to tiny screens.

The UAE University has decided to deploy the Free Space Optics (FSO) technology to connect campuses. It

offers a flexible networking solution that delivers on the promise of broadband. It provides the essential

combination of qualities required to bring the traffic to the optical fiber backbone: low cost, ease and

speed of deployment. In addition, freedom from licensing and regulation translates into ease, speed and

low cost of deployment. Since FSO optical wireless transceivers can transmit and receive through

windows, it is possible to mount systems inside buildings, reducing the need to compete for roof space,

simplifying wiring and cabling, and permitting the equipment to operate in a very favorable

environment. The only essential requirement for FSO is line of sight between the two ends of the link. It

is a line-of-sight technology that uses lasers to provide optical bandwidth connections. Typically, FSOs are

capable of up to 2.5 Gbps of data, voice and video communications through the air, allowing optical

connectivity without requiring fiber-optic cable or securing spectrum licenses. It requires light, which can be

focused by using either light emitting diodes (LEDs) or lasers. The use of lasers is a simple concept similar to

optical transmissions using fiber-optic cables; the only difference is the medium. Light travels through air faster

than it does through glass, so it is fair to classify Free Space Optics as optical communications at the speed of

light.

The FSO technology is relatively simple. It's based on connectivity between FSO units (Figure 2), each

consisting of an optical transceiver with a laser transmitter and a receiver to provide full duplex (bi-directional)

capability. Each FSO unit uses a high-power optical source (i.e. laser), plus a lens that transmits light through

the atmosphere to another lens receiving the information. The receiving lens connects to a high-sensitivity

receiver via optical fiber. FSO technology requires no spectrum licensing.

As mentioned above, unlike radio and microwave systems, FSO is an optical technology, so no spectrum

licensing or frequency coordination with other users is required, interference from or to other systems or

equipment is not a concern, and the point-to-point laser signal is extremely difficult to intercept and is therefore

secure. For UAE University, it has proven to be a viable option, since the need to lease inter-campus lines via

Etisalat has been reduced to some extent by using the FSO technology. On the plus side, data rates comparable

to optical fiber transmission can be carried by FSO systems with very low error rates, while the extremely

narrow laser beam widths ensure that there is almost no practical limit to the number of separate links that can

be installed in a given location.

The advantages of free space optical wireless do not come without some cost. When light is transmitted

through optical fiber, transmission integrity is quite predictable, barring unforeseen events such as backhoes or

animal interference. When FSO light is transmitted through the air, it must deal with the atmosphere, which may

affect the performance drastically.

Figure 2: FSO Unit

4. Campus Network Topology

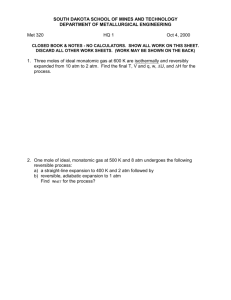

The backbone connection between the various campuses of the UAE University network is provided by Etisalat.

These connections are configured PVC lines using VCs at constant bit rates. Although the university provided

links are available at higher speeds, the connection speed to the Islamic Institute campus is limited by two

factors: first, by the configured lines provided by Etisalat, and second, by the 155 Mbps link connection from

the Etisalat cloud to the Islamic Institute campus.

Connection to the Internet is further limited by the single 20 Mbps link from the Islamic Institute. All Internet

connections are expected to come via the Islamic Institute. This topological setup provides many interesting study

cases for our research project. For instance, throughput and delay studies for the bottleneck outgoing Internet

link may be done using the available traffic pattern. Further, we could study the network reliability, keeping in

view the redundancies available in the network. The next section presents the details of some ongoing studies with

results expected in the near future.

[Figure 3 diagram. Nodes: Zyed Central Library, Islamic Institute Campus, Maqam Campus, Mirfa Campus, FMHS Campus, Jimmi-1 Campus, Jimmi-2 Campus, Muwaiji Campus, the public ATM cloud from Etisalat, and the Internet. Link labels: FSO 1 Gbps, FSO 100 Mbps, 20 Mbps, 2.04 Mbps, 50 Mbps, 34 Mbps STM1, 34 Mbps, and 34 Mbps (inactive).]

Figure 3: Core Network Diagram of UAE University Campus

A bird's eye view of the campus network, as shown in Figure 3, is presented below:

o Etisalat works as a switching network for the UAE University campus network.

o The main campus, the Islamic Institute Campus, is connected to Etisalat via a 155 Mbps pipe.

o All campuses are connected either to the public ATM Etisalat cloud or directly to the Islamic Institute Campus network for Internet connectivity.

o For example:

    o Mirfa Campus, which is 300 km away from Al Ain, is connected to the public ATM cloud via E1 access (2.048 Mbps).

    o Maqam Campus is connected to the public ATM cloud via an STM1 link (50 Mbps).

    o FMHS Campus is connected to the public ATM cloud via STM1 access at 34 Mbps.

    o Muwaiji Campus is connected to the public ATM cloud via an STM1 link at 34 Mbps.

    o Jimmi-2 Campus is connected to the Etisalat ATM cloud via STM1 (34 Mbps). This link is not currently active.

    o Jimmi-1 and Jimmi-2 Campuses are connected with each other via a 1 Gbps Free Space Optics link.

    o Jimmi-1 Campus is connected via 34 Mbps to Etisalat's public ATM cloud. In the case of video conferencing, data from the Internet is routed through the Islamic Institute to Jimmi-1 Campus, and video packets are routed through the public ATM cloud to Jimmi-1 Campus.

    o Jimmi-1 Campus is connected to the Islamic Institute Campus via a Free Space Optics link of 100 Mbps.

    o Islamic Institute Campus is connected to the public ATM cloud of Etisalat via an OC3 pipe (155 Mbps).

    o Zyed Central Library is connected to the public ATM cloud of Etisalat and to the Islamic Institute Campus via STM1 (34 Mbps) and Free Space Optics (1 Gbps) links respectively.

Note that there is only one 20 Mbps link connecting all the UAE University campuses to the Internet.

Logically, all campuses have to forward their data to the Islamic Institute Campus network. Thus they are all

logically connected to the Islamic Institute campus. The Islamic Institute campus is connected to the public ATM

cloud with an OC3 (155 Mbps) link. All other campuses share this bandwidth. Thus the total

bandwidth used by all campuses should not exceed 155 Mbps in order to avoid a bottleneck. There are two

traffic shaping parameters, defined by UAE University's engineers with the consent of Etisalat's engineers,

i.e. Peak Information Rate (PIR) and Sustained Information Rate (SIR). The PIR and SIR of the UAE University

campuses are as follows:

| Campus | PIR | SIR | Notes |
|---|---|---|---|
| Jimmi-1 Campus | 30 Mbps | 7.5 Mbps | Both VBR; maximum bandwidth that can be allocated through the PVC: 34 Mbps |
| Muwaiji Campus | 30 Mbps | 7.5 Mbps | Both VBR; maximum bandwidth that can be allocated through the PVC: 34 Mbps |
| FMHS Campus | 30 Mbps | 7.5 Mbps | Both VBR; maximum bandwidth that can be allocated through the PVC: 34 Mbps |
| Maqam Campus | 50 Mbps | 12.5 Mbps | Both VBR |
| Mirfa Campus | 2 Mbps | 2 Mbps | Both VBR |

The accumulated PIR is 142 Mbps, which is less than the maximum total speed of 155 Mbps. So as far

as bandwidth utilization is concerned, the UAE University network is working under normal conditions. In order to

avoid bottlenecks, some links are not active and are used as redundant links only.
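
The bandwidth check above is simple arithmetic over the PIR and SIR values listed for the five campuses. The sketch below reproduces it so that the same check can be repeated if a shaping parameter changes. The dictionary layout and function name are ours.

```python
OC3_MBPS = 155.0   # shared link from the Islamic Institute to the ATM cloud

# (PIR, SIR) in Mbps, as listed for each campus PVC.
SHAPING = {
    "Jimmi-1": (30.0, 7.5),
    "Muwaiji": (30.0, 7.5),
    "FMHS": (30.0, 7.5),
    "Maqam": (50.0, 12.5),
    "Mirfa": (2.0, 2.0),
}


def totals(shaping: dict) -> tuple:
    """Return (sum of PIRs, sum of SIRs) in Mbps."""
    total_pir = sum(pir for pir, _ in shaping.values())
    total_sir = sum(sir for _, sir in shaping.values())
    return total_pir, total_sir


if __name__ == "__main__":
    pir, sir = totals(SHAPING)
    print(f"sum of PIR = {pir} Mbps, sum of SIR = {sir} Mbps")
    print(f"headroom on the OC3 link at peak = {OC3_MBPS - pir} Mbps")
    print("within capacity" if pir <= OC3_MBPS else "oversubscribed")
```

Even if every campus transmits at its peak rate simultaneously, the aggregate stays below the capacity of the shared OC3 link.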

5. Performance Metrics

There are several metrics we consider important when evaluating the performance of a network or

considering growing the network. Here we only consider some of them, based on what we think are most

important to the UAEU network. These metrics are explained below in detail.

5.1 WAN Bottleneck Link

The campus network is connected to the Internet via a single 20 Mbps link. Our research is focused on determining

two things. First, we study the effect of the link connection between the client and server on response time.

Second, we study the effect of using different traffic types or protocol types. We are conducting simulations

to evaluate the response time of a web server for a mix of request times from a web client across different

bottleneck links. In our simulation, we focus on finding the response times using different topologies and types

of application traffic.
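
Before any simulation is run, a first-order estimate already shows why the bottleneck link dominates response time: the slowest link on a path bounds the achievable rate. The sketch below is a back-of-the-envelope calculation only, not the simulation model used in this study. It ignores protocol overhead, queueing, propagation delay and competing traffic. The paths are assembled from link rates quoted in this paper, and the 1 MB object size is an arbitrary example.

```python
from typing import Sequence


def bottleneck_mbps(path: Sequence[float]) -> float:
    """The slowest link on a path bounds the end-to-end rate."""
    if not path:
        raise ValueError("path must contain at least one link")
    return min(path)


def ideal_transfer_seconds(size_bytes: int, path: Sequence[float]) -> float:
    """Lower bound on transfer time for an object over an otherwise idle path."""
    return size_bytes * 8 / (bottleneck_mbps(path) * 1e6)


if __name__ == "__main__":
    # Link rates in Mbps along two illustrative paths to the Internet.
    paths = {
        "Islamic Institute -> Internet": [20.0],
        "Mirfa -> ATM cloud -> Islamic Institute -> Internet": [2.048, 155.0, 20.0],
    }
    for name, links in paths.items():
        seconds = ideal_transfer_seconds(1_000_000, links)
        print(f"{name}: bottleneck {bottleneck_mbps(links)} Mbps, "
              f"1 MB in at least {seconds:.2f} s")
```

A simulation refines this bound by adding the traffic mix and protocol behavior that the estimate leaves out.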


5.2 Network Size and Hierarchy

The performance metric here is the balance between the size, load and hierarchy of a network. There are

several network design considerations we consider when building a network or expanding on an existing one.

The proper design of a network is the key element in preventing network failures and in controlling and limiting the

consequences of any failures once they happen. Once a failure happens, network convergence and recovery time

become crucial, especially when the network failure might have a great impact on users. There is always a

compromise between the network operational and maintenance (OAM) cost and the complexity of the design of

a network. The more complex a network, the greater its OAM cost.

The effect of a network failure might materialize in many ways. Some examples are a

noticeable increase in the CPU load of the device experiencing the failure and of other devices in the network,

partial service disruption, and a total network meltdown. A set of what-if conditions must therefore be considered and

tested on a similar emulated network prior to deployment. This set of what-if conditions must be tailored toward

the applications, technology and software features implemented in the network. The set of what-if conditions

might include, but is not limited to, the following: a fiber cut, a line card or central processor failure, a sudden

bandwidth change over multiple links that might cause generation of control packets, a node failure,

simultaneous multiple node failures, the addition of a new link or trunk, and the creation of a new hierarchical

level, such as the creation of a new OSPF area.

5.3 Network Redundancy

This network performance metric is a measure of how redundant the network is. Network redundancy can be

accomplished in many ways; we will only consider some of them.

o Alternate path redundancy, that is, re-routing around link or card failures. A measure of the network performance in this case would be the time it takes for traffic to re-route around failed paths.

o Central processor redundancy. In this case, the use of one or more redundant central processors on a particular switch or router is essential to maintain stability in the network. A central control processor switchover test should be run to determine if there is any service or routing disruption.

o Link redundancy in a hierarchical topology. When the network topology is hierarchical, proper attention needs to be paid to the link redundancy scheme, which is necessary to avoid the creation of any blackout areas when faults occur.

o Negative redundancy. In certain cases, redundancy can be overdone to a point where it might actually work against the stability of the network. We refer to this as negative redundancy. For example, if a network is running a link state protocol such as OSPF or PNNI, care must be taken to limit the number of adjacencies a node might have. Therefore, a network designer must not overdo link redundancy, and a balance between redundancy, network stability, and traffic distribution must be struck.

5.4 Number of adjacencies and Link State Size

To investigate the effect of an increased number of adjacencies and of the link state size on network

stability and performance, several tests may be conducted as follows:

o A test to measure the time it takes to reboot a node when varying the number of PNNI or OSPF adjacencies from 1 to n (where n is the maximum number of configurable PNNI/OSPF adjacencies) on a given switch, while using an emulated topology to obtain a large link state database.

o A test that fixes the number of adjacencies on a node to the maximum desired number and increases the emulated network size by gradually increasing the link state database size, then measures the reboot time of the node under test.

A possible study scenario for the UAE University campus network consists of a test suite to see the relation between the node

central processor CPU load, the number of adjacencies on the node and the emulated link state database size.

5.5 Network convergence time

The network convergence time is one of the most critical performance parameters of a network. Upon a

network failure event, it is desired that the network return to a steady operational state. The network

convergence time depends on many factors, and a network designer must take all of these factors into

consideration when building or expanding a network. These factors include the number of nodes in a network,

the hierarchy and topology of the network, the maximum number of adjacencies on a node in the network,

the processing power of the CPUs on the cards, the total number of trunks in a network, the maximum number of

hops between any nodes in the network, applications running in the network, the maximum number of cards and

their types configured on a particular node in the network, the routing protocol or protocols used in the network,

and several other factors as well. The shorter the convergence time, the more stable the network. A set of

what-if conditions may be created and the convergence time may be measured for each one of them for a

network operator to have a sense of how stable a network is. We intend to conduct these measurements on an

emulated network, making it as similar as possible to the real-life network.

CONCLUSION

A framework for the simulation study of UAE University campus network is presented. The underlying

networking technologies are discussed in light of the current network implementation. The focus for the

ongoing simulation studies has been identified as a set of performance metrics that include response times on

bottleneck links, failure recovery analysis, network reliability/availability, and network convergence time.

ACKNOWLEDGEMENT

This work was supported in part by the Research Affairs at the UAE University under contract no. 04-03-911/03. We also acknowledge Dr. Junaid Khawaja (CBE) for several useful discussions. Special thanks go to

Mr. Minhal (ITC) for providing the network diagram and topology information.

REFERENCES

[1] T. Liu, P. Bahl and I. Chlamtac, “Mobility Modeling, Location Tracking, and Trajectory Prediction in Wireless ATM Networks,” IEEE Journal on Selected Areas in Communications, Special Issue on Wireless Access Broadband Networks, Vol. 16, No. 6, August 1998, pp. 922-936.

[2] Y. Fang and I. Chlamtac, “A new mobility model and its application in channel holding time characterization in PCS networks,” Proceedings of the IEEE International Conference on Computer Communications (INFOCOM'99), New York, March 1999.

[3] Y. Fang and I. Chlamtac, “Teletraffic analysis and mobility modeling for PCS networks,” IEEE Transactions on Communications, Vol. 47, No. 7, pp. 1062-1072, 1999.

[4] Y. Fang, I. Chlamtac and Y. B. Lin, “Channel Occupancy Times and Handoff Rate for Mobile Computing and PCS Networks,” IEEE Transactions on Computers, Vol. 47, No. 6, pp. 679-692, 1998.

[5] Y. Fang and I. Chlamtac, “Handoff probability in PCS networks,” Proceedings of the 1998 IEEE International Conference on the Universal Personal Communications (ICUPC'97), Florence, Italy, October 1998.

[6] S. Banerjee, D. Tipper, M. Weiss and A. Khalil, “Traffic Experiments on the vBNS Wide Area ATM Network,” IEEE Communications Magazine, August 1997.

[7] V. Pendyala and S. Banerjee, “An Experimental Study of Throughput Translation Between TCP and ATM,” Proceedings of the 6th International Conference on Telecommunication Systems Modeling and Analysis, Nashville, TN, March 1998.

[8] G. Y. Lazarou, V. S. Frost, J. B. Evans and D. Niehaus, “Simulation & Measurement of TCP/IP over ATM Wide Area Networks,” IEICE Transactions on Communications, Special Issue on ATM Switching Systems for Future B-ISDN, Vol. E81B, No. 2, February 1998, pp. 307-314.
