

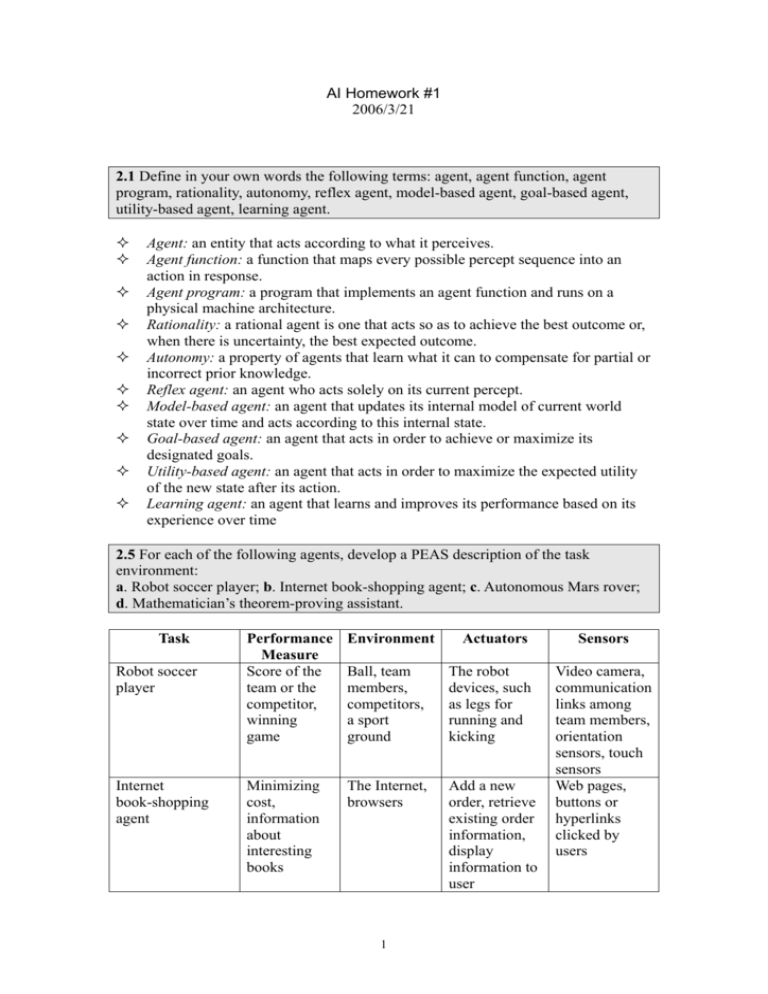

AI Homework #1 2006/3/21

2.1 Define in your own words the following terms: agent, agent function, agent program, rationality, autonomy, reflex agent, model-based agent, goal-based agent, utility-based agent, learning agent.

Agent: an entity that acts according to what it perceives.
Agent function: a function that maps every possible percept sequence to an action.
Agent program: a program that implements an agent function and runs on a physical machine architecture.
Rationality: a rational agent is one that acts so as to achieve the best outcome or, when there is uncertainty, the best expected outcome.
Autonomy: the property of an agent that learns what it can to compensate for partial or incorrect prior knowledge.
Reflex agent: an agent that acts solely on its current percept.
Model-based agent: an agent that updates its internal model of the current world state over time and acts according to this internal state.
Goal-based agent: an agent that acts in order to achieve its designated goals.
Utility-based agent: an agent that acts in order to maximize the expected utility of the new state after its action.
Learning agent: an agent that learns and improves its performance based on its experience over time.

2.5 For each of the following agents, develop a PEAS description of the task environment: a. Robot soccer player; b. Internet book-shopping agent; c. Autonomous Mars rover; d. Mathematician’s theorem-proving assistant.
| Task | Performance Measure | Environment | Actuators | Sensors |
| --- | --- | --- | --- | --- |
| Robot soccer player | Score of the team or the competitor, winning game | Ball, team members, competitors, a sport ground | The robot devices, such as legs for running and kicking | Video camera, communication links among team members, orientation sensors, touch sensors |
| Internet book-shopping agent | Minimizing cost, information about interesting books | The Internet, browsers | Add a new order, retrieve existing order information, display information to user | Web pages, buttons or hyperlinks clicked by users |
| Autonomous Mars rover | Collect, analyze and explore samples on Mars | Mars, vehicle | Collection, analysis, and motion devices, radio transmitter | Video camera, audio receivers, communication links |
| Theorem-proving assistant | Time requirement, degree of correctness | The theorem to prove, existing axioms | Accept the right theorem, reject the wrong theorem, infer based on axioms and facts | Input device that reads the theorem to prove |

2.10 Consider a modified version of the vacuum environment in Exercise 2.7, in which the geography of the environment (its extent, boundaries, and obstacles) is unknown, as is the initial dirt configuration. (The agent can go Up and Down as well as Left and Right.)
a. Can a simple reflex agent be perfectly rational for this environment? Explain.
b. Can a simple reflex agent with a randomized agent function outperform a simple reflex agent? Design such an agent and measure its performance on several environments.
c. Can you design an environment in which your randomized agent will perform very poorly? Show your results.
d. Can a reflex agent with state outperform a simple reflex agent? Design such an agent and measure its performance on several environments. Can you design a rational agent of this type?

a. No, because a simple reflex agent does not maintain a model of the geography and perceives only its location and the local dirt. The same percept always triggers the same action, so when the agent tries to move to a location that is blocked by a wall, it will get stuck forever.

b. One possible design is as follows:

    if (Dirty) Suck;
    else Randomly choose a direction to move;

This simple agent works well in normal, compact environments, but it needs a long time to cover all squares if the environment contains long connecting passages, such as the one described in c.

c. The randomized agent above performs poorly in environments that contain a long, narrow connecting passage. It needs a lot of time to get through the passage because of the random walk.

d. Yes. A reflex agent with state can first explore the environment thoroughly and build a map of it. This agent can do much better than the simple reflex agent because it maintains the map of the environment and can choose its action based not only on the current percept, but also on its current location inside the map.

2.12 The vacuum environments in the preceding exercises have all been deterministic. Discuss possible agent programs for each of the following stochastic versions:
a. Murphy’s law: twenty-five percent of the time, the Suck action fails to clean the floor if it is dirty and deposits dirt onto the floor if the floor is clean. How is your agent program affected if the dirt sensor gives the wrong answer 10% of the time?
b. Small children: At each time step, each clean square has a 10% chance of becoming dirty. Can you come up with a rational agent design for this case?

a. The failure of the Suck action causes no problem as long as we replace the reflex agent’s ‘Suck’ action with ‘Suck until clean’. If the dirt sensor gives wrong answers from time to time, the agent can either leave a square that may still be dirty and clean it when its tour returns to that square, or stay on the square for several steps to get a more reliable measurement before leaving. Both strategies have advantages and disadvantages: the first might leave a dirty square and never return, while the second might wait too long at each square.

b. In this case, the agent must keep touring and cleaning the environment forever, because a cleaned square might become dirty again in the near future.
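
The randomized reflex agent from 2.10 b can be tried out with a small simulation. The following is only a minimal sketch under stated assumptions, not the homework's own implementation: the grid encoding (`#` wall, `.` clean, `*` dirty), the names `run_random_reflex_agent` and `average_steps`, the two sample grids, and the rule that a blocked move leaves the agent in place are all hypothetical choices made for illustration.

```python
import random

# Assumed encoding: '#' is a wall, '.' is a clean square, '*' is a dirty square.
MOVES = {"Up": (-1, 0), "Down": (1, 0), "Left": (0, -1), "Right": (0, 1)}

# Hypothetical test environments: a compact room and a long connecting passage.
COMPACT = ["****", "****", "****", "****"]
CORRIDOR = [
    "**##########**",
    "**..........**",
    "**##########**",
]


def run_random_reflex_agent(grid, start, max_steps=100_000, seed=0):
    """Return the number of actions needed to clean every square,
    or None if the step budget runs out first."""
    rng = random.Random(seed)
    cells = [(r, c, ch) for r, row in enumerate(grid) for c, ch in enumerate(row)]
    free = {(r, c) for r, c, ch in cells if ch != "#"}
    dirty = {(r, c) for r, c, ch in cells if ch == "*"}
    pos = start
    steps = 0
    while dirty:
        if steps == max_steps:
            return None
        if pos in dirty:
            # Percept "Dirty": Suck.
            dirty.discard(pos)
        else:
            # Percept "Clean": pick a direction uniformly at random.
            dr, dc = MOVES[rng.choice(list(MOVES))]
            nxt = (pos[0] + dr, pos[1] + dc)
            if nxt in free:
                # Assumption: a move into a wall or off the grid is a no-op.
                pos = nxt
        steps += 1
    return steps


def average_steps(grid, start, trials=20):
    """Average the step count over several seeds, ignoring unfinished runs."""
    runs = [run_random_reflex_agent(grid, start, seed=s) for s in range(trials)]
    done = [n for n in runs if n is not None]
    return sum(done) / len(done) if done else None
```

Calling `average_steps(COMPACT, (0, 0))` and `average_steps(CORRIDOR, (0, 0))` gives one rough way to measure the agent on a compact environment and on one with a long passage, as parts b and c ask. The exact numbers depend on the seeds and on the grids chosen.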