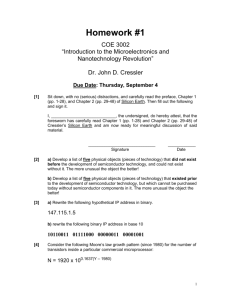

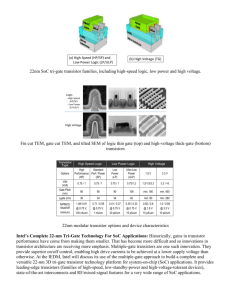

Physical limits of silicon transistors and circuits

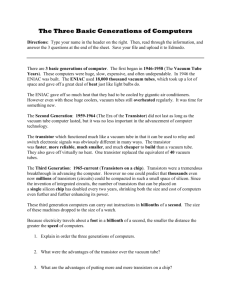
