Psychometric Properties of an Emotional Adjustment Measure

advertisement

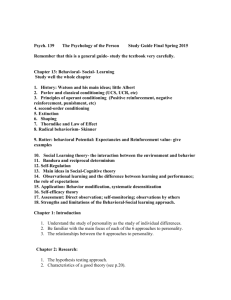

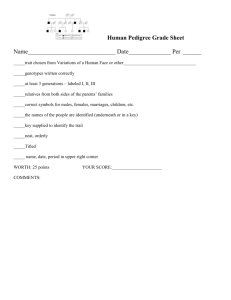

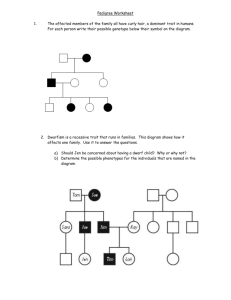

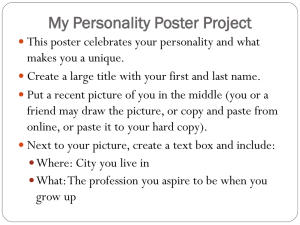

V.J. Rubio et al.: European Psychometric Journalof Prop Psychological erties of an©Assessment Emo 2007tional Hogrefe 2007; Adjustment & Vol. Huber 23(1):39–46 Publishers Measure Psychometric Properties of an Emotional Adjustment Measure An Application of the Graded Response Model Víctor J. Rubio1, David Aguado2, Pedro M. Hontangas3, and José M. Hernández1 1 Department of Biological and Health Psychology, Autonoma University of Madrid 2 Institute of Knowledge Engineering, Autonoma University of Madrid 3 Department of Methodology of Behavioral Sciences, University of Valencia, all Spain Abstract. Item response theory (IRT) provides valuable methods for the analysis of the psychometric properties of a psychological measure. However, IRT has been mainly used for assessing achievements and ability rather than personality factors. This paper presents an application of the IRT to a personality measure. Thus, the psychometric properties of a new emotional adjustment measure that consists of a 28-six graded response items is shown. Classical test theory (CTT) analyses as well as IRT analyses are carried out. Samejima’s (1969) graded-response model has been used for estimating item parameters. Results show that the bank of items fulfills model assumptions and fits the data reasonably well, demonstrating the suitability of the IRT models for the description and use of data originating from personality measures. In this sense, the model fulfills the expectations that IRT has undoubted advantages: (1) The invariance of the estimated parameters, (2) the treatment given to the standard error of measurement, and (3) the possibilities offered for the construction of computerized adaptive tests (CAT). The bank of items shows good reliability. It also shows convergent validity compared to the Eysenck Personality Inventory (EPQ-A; Eysenck & Eysenck, 1975) and the Big Five Questionnaire (BFQ; Caprara, Barbaranelli, & Borgogni, 1993). Keywords: emotional adjustment, item response theory, Samejima’s graded response model, personnel recruitment Emotional Adjustment (EA, also called Neuroticism, emotional equilibrium, Emotional Stability) is one of the constructs that systematically appears to determine “personality structure.” It constitutes a dimension in most of personality theories (Cattell & Scheier, 1961; Eysenck, 1947; Guilford, 1959). Recently, the five-factor model (FFM) of personality includes Emotional Stability as one of the “Big Five.” Actually, EA is less conceptually controversial dimension (Costa & McCrae, 1992; Digman, 1990; Wiggins & Trapnell, 1997). EA relates to whether or not someone has the tendency to feel negative emotions and have irrational thoughts as well as to control the impulses when facing a stressful situation. Characteristics of this dimension are moody, touchy, irritable, anxious, unstable, pessimistic, and complaining versus controlled, secure, calm, self-satisfied, and cool. The dimension EA is also included in most of the commonly used personality questionnaires, such as the 16PF (Cattell, 1972), EPQ (Eysenck & Eysenck, 1975), and NEO-PI-R (Costa & McCrae, 1992). The Use of IRT in Personality Assessment IRT provides valuable methods for the analysis of the psychometric properties of a psychological measure. IRT has © 2007 Hogrefe & Huber Publishers several advantages compared to classical test theory (CTT). First, the psychometric information is not sample dependent (Lord, 1980). Second, the effectiveness of a scale can be assessed at every level of the trait being measured. Consequently, IRT provides guidance in the construction of tests and allows the designer to tailor the efficiency of the instrument for a specific level of the trait. Third, each item is not equally weighted in the estimation of the trait level. Thus, the predicted trait level will be equivalent regardless of the items on which it is based. Finally, psychometric development in IRT in conjunction with advances and use-generalization in computer technology have made computerized adaptive testing (CAT) feasible. Despite those advantages, a general overview of the psychometric literature shows that IRT has been mainly used for assessing achievements and ability rather than personality factors (Ferrando, 1994; Cooke & Michie, 1997; Rouse, Finger, & Butcher, 1999). Two reasons have been pointed out for this (Waller & Reise, 1989). The first one states that IRT is without any doubt a more complex and higher mathematically demanding psychometric theory. The second reason implies the fact that, at this moment, the majority of IRT models entail the assumption of unidimensionality, that is to say a single trait or factor explains subjects’ performance level in a particular test. Some personEuropean Journal of Psychological Assessment 2007; Vol. 23(1):39–46 DOI 10.1027/1015-5759.23.1.39 40 V.J. Rubio et al.: Psychometric Properties of an Emotional Adjustment Measure ality inventories are unidimensional but many others are multidimensional scales like those that have been designed by means of empirical strategies. This is why IRT as applied to personality tests has attempted to fit unidimensional scales or subscales rather than multidimensional ones. We can also add another reason that might have contributed to the scarce application of IRT models to personality assessment: The response format of the personality questionnaires. Thus, while in aptitude assessment the common type of response is dichotomous, many personality assessment instruments have a rating-scale response format. Nonetheless, in recent years several studies have successfully used IRT in personality assessment instruments (Chernyshenko, Stark, Chan, Drasgow, & Williams, 2001; Cooke & Michie, 1997; Ferrando, 1994, 2001; Flannery, Reise, & Widaman, 1995; Gray-Little, Williams, & Hancock, 1997; Reise & Henson, 2000; Reise & Waller, 1990; Rouse et al., 1999; Zickar & Ury, 2002; Zumbo, Pope, Watson, & Hubley, 1997). Those studies have used different IRT models. According to the response format, some of them have used dichotomous models, either a one- (1-PLM), or two-parameter logistic model (2-PLM). Researchers have pointed out the 2-PLM generally fits well the data (Ferrando, 1994, 2001; Reise & Waller, 1990), and the 1-PLM is not appropriate (Ferrando, 1994). Some authors have even suggested the use of a three-parameter logistic model (Zumbo et al, 1997; Rouse et al., 1999) in as far as the c parameter, which is usually considered as a pseudo-chance factor in ability testing, has been shown to be influenced by social desirability (Rouse et al., 1999). For polytomous responses, two main alternatives have been used: Masters’s partial credit model (PCM) and variants or Samejima’s graded response model (GRM). GRM has been the most used model. The GRM has fitted the data reasonably well in most studies (Flannery et al., 1995; Cooke & Michie, 1997; Gray-Little et al., 1997) and even better than the PCM (Baker, Rounds, & Zevon, 2000; King, King, Fairbank, Schlengar, & Surface, 1993). There is just one study, the Chernyshenko et al.’s (2001), which found that the GRM did not fit well. This paper presents the construction, validation, and calibration of a measure for assessing EA in order to build a bank of polytomous items that could be used in the future as a CAT. Method Participants Participants were 858 psychology students from the University “Autonoma” of Madrid, Spain; 80.6% females and 19.4% males, aged between 18 to 55 (age mode = 18). Subjects completed the questionnaires in groups, and the instructions were provided by two trained assessors. The European Journal of Psychological Assessment 2007; Vol. 23(1):39–46 sample size is large enough for using the GRM and obtaining stable parameter estimation (Reise & Yu, 1990). Instruments The EA Bank. The EAB (Aguado, Rubio, Hontangas, & Hernández, 2005) consists of 28 items. All of them have a graded response option from 1 (totally agree) to 6 (totally disagree). The EAB was designed combining several strategies. First, more than 1,000 items from the most commonly-used personality questionnaires in Spain were reviewed. Then, following Costa and McCrae’s EA conceptualization, a rational fitting to the theoretical components of this dimension was made to select a subset of items. Subsequently, a group of experts, through interjudge agreement, proposed the final wording to try to tap the different aspects included in the original items. Last, the definitive composition of the EAB was established using factorial techniques. Other Instruments The N scale of the Eysenck Personality Inventory (EPQ; Eysenck & Eysenck, 1975) and the EA scale of the Big Five Questionnaire (BFQ; Caprara, Barbaranelli, & Borgogni, 1993) were used to establish the convergent validity of the EAB. IRT Model According to the type of scale used and its response format (polytomous and graded), Samejima’s graded response IRT model was selected. The reasons for this choice instead of others such as PCM (van der Linden & Hambleton, 1997) were: (1) GRM was one of the first models for graded polytomous items; (2) it is appropriate for items with different a parameters; (3) as Jansen and Roskam (1986) pointed out, only the GRM is a natural model for rating scales, and (4) more published studies exist on parameterization over the GRM than any other polytomous models so the conditions for obtaining good estimations are well known. Under the GRM an item is comprised of k ordered response options. Parameters are estimated for k-1 boundary response functions. Each boundary response function represents the cumulative probability of selecting any response options greater than the option of interest. The functions for an item i is characterized by two types of parameters. The discrimination parameter ai is a measure of the discriminating power of the item. It indicates the magnitude of change of probability of responding to the item in a particular direction as a function of trait level. It can be interpreted qualitatively with Baker’s (1985) classification, using the following terms (under a normal model): ai < 0.20, very low discrimination; 0.21 < ai < 0.40, low discrimination; 0.41 < © 2007 Hogrefe & Huber Publishers V.J. Rubio et al.: Psychometric Properties of an Emotional Adjustment Measure ai < 0.80, moderate discrimination; 0.81 < ai < 1, high discrimination; ai 1, very high discrimination. The difficulty or location parameter bi provides a measure of item difficulty or, in personality assessment, the extremity or frequency of a behavior or an attitude. So, the GRM is a logistic two-parameters model that can be expressed in the following way: P∗ik(θj) = eDai(θj−bik) 1 + eDai(θj−bik) Pik(θj) = P∗ik(θj) − P∗ik+1(θj) [1] [2] Where: k: ordered response option or score; Pik(®j): Probability of responding to alternative k of item i with a trait level ®j; P*ik(®j): Probability of responding to alternative k or above in item i with a trait level ®j; ®j: Trait level of the subject; bik: Location parameter of the alternative k of item i; ai: Discrimination parameter of item i; D: Constant (1.7). The model fits a two-parameter logistic model to each of the events obtaining a score of k or higher, P*ik(®j) (see Figure 1, boundary characteristics curves). Thus, the probability of obtaining a score of k, Pik(®j), is the difference between the probability of obtaining a score of k or higher and that of obtaining a score of k + 1 or higher (see Figure 1, response characteristics curves). There are several constraints: P*i,0(®j) = 1 and P*i,m + 1(®j) = 0, where m is the 41 number of options minus 1. That is, the probability of any score k ≥ 0 must be 1, and the probability of obtaining a score higher than k must be 0. Parameter bik refers to segmented category location over the trait level. It is interpreted as the degree to which the subject has the trait being measured. In this way, GMR uses the cumulative segmentation method to estimate the parameters. In other words, the k response categories become k-1 dichotomized options. For each option, one bik parameter is estimated. Parameter bi1 refers to the trait level to which a subject has a 0.5 probability of scoring 1 in dichotomy 1 (to chose Category 2 or 3 or 4 or 5 or 6). Parameter bi2 refers to the location of trait level in which a subject has a probability of 0.5 of scoring 1 in dichotomy 2 (to respond with 3 or 4 or 5 or 6 category), and so on. As mentioned, parameter ai is the item discrimination, and concerns the degree to which the item is capable of differentiating between subjects with different trait levels. Data Analysis CTT Analyses Descriptive statistics (M, SD) as well as item-total score correlation indexes and internal consistency (Cronbach’s α) were computed. Unidimensionality A basic prerequisite for the application of unidimensional models of IRT is the establishment of the unidimensionality of the scale. This assumption refers to the fact that the probability of subjects responding in a particular direction is exclusively a function of one trait. That means subjects’ responses are based on a single underlying dimension responsible for these responses. In practice, the theoretical requirement of absolute unidimensionality of the scales is rarely fulfilled. A more realistic approach consists of demonstrating that there is a dominant dimension (Hambleton & Swaminathan, 1985). In this sense, the percentage of variance explained by the first factor in a factor analysis and this one compared to the second has been computed. Moreover, according to the strategy Horn (1965) suggested, a second factor analysis has been carried out using a similar (28 items × 858 subjects) matrix randomly generated by filling up each row and column with random responses (from 1 to 6). The comparison between both factor analyses shows whether the EAB structure is a random effect or not. Finally, second-order factor analysis has been calculated. Parameterization Figure 1. Example of boundary characteristic curves and response characteristic curves of the graded response model (a = 1.25, b1 = –2, b2 = –1, b3 = 1, b4 = 2). © 2007 Hogrefe & Huber Publishers A marginal maximum likelihood method was used to estimate GRM item parameters. European Journal of Psychological Assessment 2007; Vol. 23(1):39–46 42 V.J. Rubio et al.: Psychometric Properties of an Emotional Adjustment Measure European Journal of Psychological Assessment 2007; Vol. 23(1):39–46 © 2007 Hogrefe & Huber Publishers V.J. Rubio et al.: Psychometric Properties of an Emotional Adjustment Measure Model Fit In comparison to CTT, IRT requires that the model fits the data. However, there is no generally accepted goodness-offit test for the GRM (Gray-Little et al., 1997). Nevertheless, some evidence can be observed in order to determine the fitness of the model: Easy convergence (number of iterations) for estimating the model parameters, reasonable parameter estimations, standard error of parameters, and the factual invariance of the parameters. In turn, two different strategies were followed in order to check the invariance of the parameters. First, estimations about global scores with even items and with odd items were computed. Second, the sample was randomly divided into two groups. The scores were computed and parameter estimation correlation between these two groups were calculated. Precision of the Measures The Item information function, I(®j), indicates the precision to which each item measures through the different trait levels and may be defined as the inverse of the variance of the estimations of that item. Therefore, the square root of this inverse is the standard error of measurement (SEM) that particular item is measuring. This standard error of measurement allows the researcher to establish to what extent accurate estimations about the subjects’ trait level are made with each item. 43 (Item 13). Nonetheless, most of the items of the bank are .5 above or below the theoretically medium point of the scale. Items’ standard deviations are quite similar, ranging from SD = 1.2 (Items 16 and 25) to 1.7 (Item 2). Unidimensionality The scree test showed that the first factor explains the greater percentage of variance (near 32%). Thus, it fulfills the 20% some authors have established as a unidimensionality criteria (Reckase, 1979) but it is below the 40% some other researchers have pointed out (Carmines & Zeller, 1979). Moreover, the eigenvalue of the first factor (8.86) is 5.43 times the second (1.63), higher than the criterion of more than five times, which has been proposed as evidence of the unidimensionality of the measure (see Hambleton et al., 1991). That is to say, there is only one dominant factor responsible for subjects’ answers to the items. Furthermore, the comparison between that factor analysis and the one that can be computed according Horn’s (1965) suggestions using a similar randomly generated matrix shows the bank structure is not a random effect: Only the empirical first-factor eigenvalue is greater than the random one. The analysis of the factorial loadings showed that nearly all items have greater loadings on the first factor. Moreover, a second-order factor analysis over the EAB five factors structure showed there is only one second-order factor, which explains covariances of single factors. In conclusion, the results supported the idea of a dominant dimension made using the IRT feasible. Validity of the EAB Two different strategies were used. First, a factorial strategy was used in order to analyze the latent structure of the items of the bank. Second, a convergent validity study was carried out using the N scale of the EPQ-A and the EA scale of the BFQ. CTT analyses as well as unidimensionality and validity studies were computed using the SPSS v10.0. IRT parameterization of the scale was made using the program PARSCALE 3.0 (Muraki & Bock, 1996). Results CTT Analyses EAB scores ranged from 46 to 159 (M = 101.77, SD = 22.44). Correlation between items and total score ranged from .31 (Item 6) to .62 (Item 8). Cronbach’s α for the 28-item bank was α = 0.92, and SEM for this reliability estimation was SEM = 0.28 (SEM = √ ⎯⎯⎯⎯ 1−α ) (see Table 1). As can be seen, the pool of items has a mean (3.5) very close to the theoretically medium point of the scale. The highest mean is 4.4 (Items 6 and 25) and the lowest is 2.4 © 2007 Hogrefe & Huber Publishers Parameterization Table 1 also shows the parameterization of the 28 items of the bank according to the GRM. Regarding the item discrimination, there was no item with a very low level and just one (Item 6) with a low discrimination. It should be noticed that item discrimination in the GRM depends jointly on the ai parameter and the distances among bik parameters, so item discrimination can be considered as generally adequate. Moreover, the correlation between ai parameters and corrected item-total score correlation (a measure of “item discrimination” from CTT) was r = 0.89. The bik parameters, especially extremes bi1 and bi5, represent the trait level range in which the item is positioned. For example, in Item 1, b1,1 = –3.24 and b1,5 = 1.42 (see Table 1). A very low trait level (–3.24) is required to have a probability of 0.5 in order to respond 1 (which is the lowest trait level) whereas a moderated trait level (1.42) is needed to have a probability of 0.5 in order to respond on category 6 (the highest trait level). As a consequence, subjects with a moderate trait level tend to show the maximum EA level in this item. Item 13 shows that a high trait level (b13,5 = 3.25) is required to have a probability of 0.5 in order European Journal of Psychological Assessment 2007; Vol. 23(1):39–46 44 V.J. Rubio et al.: Psychometric Properties of an Emotional Adjustment Measure to chose Category 6 while a medium trait level (b13,1 = –0.79) is needed to have a probability of 0.5 to respond on Category 1 (see Table 1). The correlation between bi3 (the medium location parameter of each item) and the items’s mean was r = –0.97, which illustrates the idea that the higher the mean an item has, the lower the trait level is needed to respond to the upper categories of the scale. Model Fit The calibration of the EAB was made using a discrimination parameter, and five (k–1) location parameters between categories for each of the 28 items. Parameterization was reached with less than 15 iterations, with reasonable estimations and good standard errors. For the ai parameter S.E. ranged from SEItem6 = 0.01 to SEItem18 = 0.04. For the bik parameter S.E. ranged from SEItem18,k = 5 = 0.08 to SEItem6,k = 4 = 0.11. In order to check the invariance of the parameters, two different strategies were followed. First, the sample was randomly divided into two groups and the parameters for each were computed. The ai parameter correlation between these two subsamples was r = 0.81 (p < .01). The bik parameter correlations were: bi1 = 0.87, bi2 = 0.84, bi3 = 0.82, bi4 = 0.77, bi5 = 0.75; all of them were significant (p < .01). Second, estimations about global scores with even and with odd items were computed. Correlation between both estimations was r = 0.70 (p < .01), lower than expected but probably the result of an unbalanced distribution of the items regarding the facets they represent. Nevertheless, we think that both strategies reasonably support the invariance of parameters. These results support the appropriateness of GRM to the data. For all practical purposes, this fitness means that the EAB has the features required to be used as a CAT under the GRM. ,6 ,5 SE ,4 ,3 CTT ,2 IRT -4 -3 -2 -1 0 1 2 3 4 Trait Figure 2. Standard error (classical test theory and item response theory). European Journal of Psychological Assessment 2007; Vol. 23(1):39–46 Precision of the Measures IRT allows establishing the EA level to which an item is more informative. The Item Information Function indicates the precision to which each item measures through the different trait levels. Compared to CTT, the precision is not global. Rather, it is associated to the different levels of ®. Moreover, the Information Function of the measure is equal to the sum of the Information Functions of each item. Figure 2 shows the standard error for each trait level. As can be seen, EAB appears especially appropriate for ® levels between –2 and 2. It measures less precisely the subjects with extreme values, whether they are quite high or quite low on the trait levels. Moreover, the CTT SE (horizontal line in Figure 2) does not represent the SE differences IRT is able to show. For CTT, the measure has the same SE for the whole continuum of the trait level. Thus, accuracy of true score estimation is independent of the level to which this estimation is made. Contrary to that, IRT states that, using the same items, precision level depends on the trait level that is estimated. As can be seen in Figure 2, for extreme values of the trait, the SE ≈ 0.5, whereas for the intermediate values the SE ≈ 0.2. Furthermore, IRT does not assume equal distances between categories. Actually, the analysis of the distances between bik parameters shows there are differences between |bi1 – bi2|, |bi2 – bi3|, |bi3 – bi4|, and |bi4 – bi5|, either in a specific item or compared to the others. Distance average between |bi1 – bi2| was 1.33, meanwhile |bi2 – bi3| was 1.07, |bi3 – bi4| was 0.94, and |bi4 – bi5| was 1.38. As can be seen, the distances between central categories are smaller than the distances between extreme ones. Moreover, distances are item idiosyncratic. The biggest item average distances can be found in Items 6, 15, and 28 (1.7) and the smallest in Item 7 (0.8). Validity of the EAB Two different strategies were carried out to determine the validity of the EAB. First, factorial analysis (principal axis factorization, Varimax rotation) was executed with the 28 items of the EAB. Factorialization showed that, when a five-factor solution was forced, below the dominant dimension, several facets would appear. Concretely, anxiety (Items 1, 2, 3, 4, 5, and 26), hostility (7, 8, 9, 10, and 11), depression (6, 12, 13, 14, 15, 16, and 24), low self-esteem (23, 25, 27, 28), and emotionability (17, 18, 19, 20, 21, 22) (see Table 1). Moreover, the ai parameter shows hostility and emotionability are the less ambiguous facets (ai average = .83, .86, respectively) and the bi3 parameter (which refers to the location of trait level in the middle of the continuum and would be interpreted as the item difficulty) shows depression needs a higher trait level for responding in the side of EA (bi3 average = .19). On the contrary, self-esteem needs a lower trait level (bi3 average = .80). © 2007 Hogrefe & Huber Publishers V.J. Rubio et al.: Psychometric Properties of an Emotional Adjustment Measure These facets agree on most of those that were found in Eysenck’s (1959), and Costa & McCrae’s (1992) work. Actually, for these authors EA is a second-order factor dimension. Second, a convergent validity study was carried out. The correlations between the EAB scores and the EPQ-A N scale and the BFQ EA scale were r = 0.86 and r = 0.77, respectively. The correlation between both criteria were r = 0.81. Discussion According to the results, EAB is a good measure of EA. First, the factors that EAB showed agree with most of Eysenck’s and Costa & McCrae’s facets. Second, EAB showed a high convergent validity with the N scale of Eysenck’s EPQ. Third, it provides a highly reliable measure of EA. Furthermore, even though the percentage of variance explained by the first factor is not as much higher as expected, the results using different strategies (first-factor eigenvalue/second-factor eigenvalue, comparison of empirical-random matrix analysis, scree test, and second-order factor analysis) have shown that a dominant dimension accounts for the responses to the bank of items. These results together with simulation studies about the effect of multidimensionality over the unidimensional estimations show that in those conditions parameterization reproduces the dominant dimension, which enables the use of IRT models. Results have also shown IRT psychometric properties of the measure. For instance, the ai parameter has shown a reasonably good discrimination power. In a personality data context, the ai parameter can be conceptualized as the numerical expression of the “psychological ambiguity” of the item (Roskam, 1985). Consequently, high values of ai mean clear and well-defined questions (Ferrando, Lorenzo, & Molina, 2001). Otherwise, low values mean items that can be differentially understood and would be ambiguous. So, the 28 items have shown a reasonably good level of clearness and definition. Moreover, the bik parameters, which serve to locate the scale value of the item response on the underlying z-score scale of the trait, have shown the differences among items regarding that. Contrary to CTT, IRT allows the distinction between people with high and low trait levels in each item. Results obtained have demonstrated the appropriateness of GRM for the EAB. Therefore, the applicability of the IRT as a psychometric strategy for the determination of scientific guarantees of an assessment instrument proves to be extensible to personality dimensions. Hence, the possibility of extending the IRT to instruments for assessing personality dimensions provides important advantages compared to CTT. The first and most basic advantage is the independence of the indicators that characterize the scale to the sam© 2007 Hogrefe & Huber Publishers 45 ple which the scale has been calibrated. Thus, it is not necessary to compare the direct scores to norms. Furthermore, appropriateness of the items according to the GRM allows applying it as a CAT. Obviously, 28 items is a very small number for a CAT. However, the invariance of the parameters allows the comparison of scores that have been obtained using different items. In this format, only those items which provide the maximum information about a given subject’s trait level may be used. This has several advantages. First, it optimizes the testing time to get the best parameter estimation. Second, accuracy of estimations is improved. Third, voluntary response distortion is reduced. This effect will be obtained, partly, because of the difficulty in maintaining a consistent response profile (in order to cope with an expected one) when subjects do not know which items will be administered, and partly because of the difficulty of being trained on faking. Then, this measure could be a good instrument to be improved as an assessment tool in contexts such as personnel recruitment. Another important advantage is the information it provides concerning the functioning of the different items making up the scale. This information may lead to increasing reliability as the calibration of the items that show less discrimination may be improved without having to alter the measure as a whole. Further work with he EAB should improve the discrimination power of such items as well as provide a greater number of elements in order to develop a bank of items for the assessment of EA as an adaptive test. Particularly, the authors are working on automatic item generation as well as on anchoring designs for item calibration. References Aguado, D., Rubio, V.J., Hontangas, P., & Hernández, J.M. (2005). Propiedades psicométricas de un test adaptativo informatizado para la medición del ajuste emocional [Psychometric properties of an emotional adjustment computerized adaptive test]. Psicothema, 17, 484–491. Baker, F.B. (1985). The basics of item response theory. Portsmouth, NH: Heineman. Baker, J.G., Rounds, J.B., & Zevon, M.A. (2000). A comparison of graded and Rasch partial credit models with subjective wellbeing. Journal of Educational and Behavioral Statistics, 25, 253–270. Caprara, G.V., Barbaranelli, C., & Borgogni, L. (1993). BFQ: Big Five Questionnaire. Manual (Spanish version: TEA, 1995). Firenze: Italy: Organizzazioni Speciali. Carmines, E.G., & Zeller, R.A. (1979). Reliability and validity assessment. Beverly Hills, CA: Sage. Cattell, R.B. (1972). Sixteen Personality Factor. 16 PF. Chicago, IL: ITPA. Cattell, R.B., & Scheier, I.H. (1961). The meaning and measurement of neuroticism and anxiety. New York: Ronald Press. Chernyshenko, O.S., Stark, S., Chan, K.Y., Drasgow, F., & Williams, B. (2001). Fitting IRT models to two personality invenEuropean Journal of Psychological Assessment 2007; Vol. 23(1):39–46 46 V.J. Rubio et al.: Psychometric Properties of an Emotional Adjustment Measure tories: Issues and insights. Multivariate Behavioral Research, 36, 523–562. Cooke, D.J., & Michie, C. (1997). An item response theory analysis of the Hare Psychopath Checklist-revised. Psychological Assessment, 9, 3–14. Costa, P.T., & McCrae, R.R. (1992). Revised NEO Personality Inventory (NEO-PI-R) and NEO Five-Factor Inventory (NEOFFI) professional manual. Odessa, FL: Psychological Assessment Resources. Digman, J.M. (1990). Personality structure: Emergence of the five-factor model. Annual Review of Psychology, 41, 417–440. Eysenck, H.J. (1947). Dimensions of personality. London: Routledge and Kegan Paul. Eysenck, H.J. (1959). Eysenck Personality Inventory. London: University of London Press. Eysenck, H.J., & Eysenck, S.B.G. (1975). Manual for the Eysenck Personality Inventory (Spanish version: TEA, 1975). London: Hodder & Stoughton. Ferrando, P.J. (1994). Fitting item response models to the EPI-A Impulsivity scale. Educational and Psychological Measurement, 54, 118–127. Ferrando, P.J. (2001). The measurement of neuroticism using MMQ, MPI, EPI, and EPQ items: A psychometric analysis based on item response theory. Personality and Individual Differences, 30, 641–656. Ferrando, P.J., Lorenzo, U., & Molina, G. (2001). An item response theory analysis of response stability in personality measurement. Applied Psychological Measurement, 25, 3–17 Flannery, W.P., Reise, S.P., & Widaman, K.F. (1995). An item response theory of the general and academic scales of the SelfDescription Questionnaire II. Journal of Research in Personality, 29, 168–188. Gray-Little, B., Williams, V.S.L., & Hancock, T.D. (1997). A item response theory analysis of the Rosenberg Self-Esteem Scale. Personality and Social Psychology Bulletin, 23, 443–451. Guilford, J.P. (1959). Personality. New York: McGraw-Hill. Hambleton, R.K., & Swaminathan, J. (1985). IRT: Principles and applications. Boston, MA: Kluwer. Hambleton, R.K., Swaminathan, J., & Rogers, H.J. (1991). Fundamentals of IRT. Newbury Park, CA: Sage. Horn, J.L. (1965). A rationale and technique for estimating the number of factors in factor analysis. Psychometrika, 30, 179– 185. Jansen, P.G.W., & Roskam, E.E. (1986). Latent trait models and dichotomization of graded responses. Psychometrika, 51, 69– 91. King, D.W., King, L.A., Fairbank, J.A., Schlengar, E., & Surface C.R. (1993). Enhancing the precision of the Mississippi scale for combat-related posttraumatic stress disorder: An application of item response theory. Psychological Assessment, 5, 457–471. Lord, F.M. (1980). Applications of item response theory to practical testing problems. Hillsdale, NJ: Erlbaum. European Journal of Psychological Assessment 2007; Vol. 23(1):39–46 Muraki, E., & Bock, R.D. (1996). Parscale 3.0. Chicago: Scientific Software International. Reckase, M.D. (1979). Unifactor latent trait models applied to multifactor tests: Results and implications. Journal of Educational Statistics, 4, 207–230. Reise, S.P., & Henson, J.M. (2000). Computerization and adaptive administration of the NEO PI-R. Assessment, 7, 347–364. Reise, S.P., & Waller, N.G. (1990). Fitting the two-parameter model to personality data. Applied Psychological Measurement, 14, 45–58. Reise, S.P., & Yu, J. (1990) Parameter recovery in the Graded Response Model using MULTILOG. Journal of Educational Measurement, 27, 133–144. Roskam, E.E. (1985). Current issues in item response theory: Beyond psychometrics. In E.E. Roskam (Ed.), Measurement and personality assessment (pp. 3–19). Amsterdam: North-Holland. Rouse, S.V., Finger, M.S., & Butcher, J.N. (1999). Advances in clinical personality measurement: An item response theory analysis of the MMPI-2 PSY-5 scales. Journal of Personality Assessment, 72, 282–307. Samejima, F. (1969). Estimation of latent ability using a response pattern of graded scores. Psychometrika Monograph, Suppl., 34, 100. van der Linden, W.J., & Hambleton, R.K. (1997). Handbook of modern IRT. New York: Springer. Waller, N.G., & Reise, S.P. (1989). Computerized adaptive personality assessment: An illustration with the absorption scale. Journal of Personality and Social Psychology 57, 1051–58. Wiggins, J.S., & Trapnell, P.D. (1997). Personality structure: The return of the Big Five. In R. Hogan, J. Johnson, & S. Briggs (Eds.), Handbook of personality psychology (pp. 737–765). San Diego, CA.: Academic Press. Zickar, M.J., & Ury, K.L. (2002). Developing an interpretation of item parameters for personality items: Content correlates of parameter estimates. Educational and Psychological Measurement, 62, 19–31. Zumbo, B., Pope, G.A., Watson, J.E., & Hubley, A.M. (1997). An empirical test of Roskam’s conjecture about the interpretation of an ICC parameter in personality inventories. Educational and Psychological Measurement, 57, 963–969. Víctor J. Rubio Department of Psicología Biológica y de la Salud Universidad Autónoma de Madrid E-28049 Madrid Spain Tel. +34 913 975179 Fax +34 913 975215 E-mail victor.rubio@uam.es © 2007 Hogrefe & Huber Publishers