Electronic Collection Assessment and Benchmarking

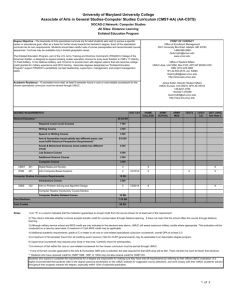

Electronic Collection Assessment and Benchmarking to Demonstrate the Value of Electronic Collections

Stephen Miller
Associate Provost, Information & Library Services
Library Assessment Conference 2012
October 31, 2012

About UMUC
• Part of University System of Maryland
• 90k+ worldwide students and faculty
• Non-traditional student focus
• 100 bachelors and masters programs
• Doctor of Management Programs
• 77% of classes online

The UMUC Library
• Resources/Services almost 100% online
• Core collection of 125 research databases
• 28 staff – 19 librarians, 9 staff
• Mission:
  – Library and information literacy education
  – Partnering with UMUC’s schools and faculty
  – Worldwide library resources and services

Annual Collection Review
• Criteria:
  – Usage via login statistics
  – Percentage of full-text contained
  – Relevance to curriculum
  – Overlap with other databases
  – Cross-linking activity
  – Librarian instructional usage
  – Input from faculty

Curricular Mapping Project
• Databases supporting academic programs
• Academic programs potentially utilizing databases
• Found wide applicability – most databases have content usable by many programs

Database Assessment Projects
1. Library program review with external reviewer evaluation (2010)
2. Benchmarking research database holdings (2011)
   a. Peer group database holdings analysis
   b. Peer group metrics analysis

Project Goals
• Prove reliability of annual assessment process
• Ensure collection competitive with peers
• ID additional or alternative resources
• Ensure compliance with best practices for database coverage
• Show value of collections & justify continuing financial support
• Objective evidence of library quality for accreditation

Project 1: Library Program Review
• Based on academic program review process
• Five topic areas:
  1. Management and Business
  2. Community College Administration
  3. Education
  4. Cybersecurity
  5. Social sciences/humanities

Findings
• Collections sufficient and in many areas exceed expected minimum requirements
• Confirmed reliability of annual review process
• Need for additional resources for IT/Cybersecurity (obtained funding for additional databases)

Project 2: Benchmarking
• Peer group chosen using Carnegie Classifications
• Selected only public, non-profit universities because of availability of database A-Z lists and IPEDS survey data
• Included one University System of Maryland institution (Towson University)

A-Z List Harvesting
• Different approaches: Inclusive vs. exclusive
• Different naming conventions
• Combined vs. split apart vendor packages
Finding: A-Z lists rely on non-standard local practice and local needs, errors/inconsistencies b/c of development over time.

Major Questions/Findings
• If most in peer group subscribe, but not UMUC, why not?
  – Database did not fit curriculum or adequate coverage in other databases
• If UMUC subscribes, but not most in peer group, why?
  – Database supporting particular part of UMUC curriculum not covered by other resources

Metrics Analysis
• IPEDS Data Center tools
• Utilized total collection expenditures vs. electronic collections only
• Ratios of expenditures per FTE
• Staffing, salaries, total library expenditures, holdings and activity data

Findings
• Ratio of faculty librarians to staff higher than other institutions
• UMUC generally low in total staff/FTE, total collection expenditures/FTE

Overall Results
• External validation of holdings quality and fit to curricular requirements
• Validation of effectiveness of annual review process
• New databases for IT/Cybersecurity
• Case for sustained and additional funding and support of the library

Questions?
Stephen Miller
Associate Provost, Information & Library Services
University of Maryland University College
stephen.miller@umuc.edu
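
The slides do not say how the peer-group holdings comparison in Project 2 was actually carried out. As a rough illustration only, the two "Major Questions" could be sketched in Python as below. The function names, the crude name normalization, the "more than half of peers" reading of "most", and the placeholder database names are all assumptions, not details from the presentation.

```python
from collections import Counter


def normalize(name: str) -> str:
    """Hypothetical normalization: lowercase, '&' -> 'and', collapse spaces.

    Real A-Z lists use inconsistent local naming, so a production
    comparison would likely need manual review on top of this.
    """
    return " ".join(name.lower().replace("&", "and").split())


def compare_holdings(local, peers):
    """Return (held by most peers but not locally, held locally but not by most peers).

    'Most' is assumed to mean strictly more than half of the peer group.
    """
    local_set = {normalize(n) for n in local}
    peer_sets = [{normalize(n) for n in names} for names in peers.values()]

    # Count how many peer institutions list each database.
    counts = Counter(name for holdings in peer_sets for name in holdings)
    majority = len(peer_sets) / 2

    common_elsewhere = sorted(
        n for n, c in counts.items() if c > majority and n not in local_set
    )
    distinctive = sorted(n for n in local_set if counts[n] <= majority)
    return common_elsewhere, distinctive


# Placeholder data; these are not real holdings of any institution.
local = ["Database A", "Database B", "Database D"]
peers = {
    "Peer 1": ["database a", "Database C"],
    "Peer 2": ["Database A", "Database C"],
    "Peer 3": ["Database  C", "Database B"],
}
missing_here, unique_here = compare_holdings(local, peers)
print("Held by most peers, not locally:", missing_here)
print("Held locally, not by most peers:", unique_here)
```

Each name in the two output lists would then prompt the "why not?" or "why?" review described in the slides, such as checking curriculum fit or coverage in other databases.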